A full-scale error-corrected quantum computer will be able to solve some problems that are impossible for classical computers, but building such a device is a huge endeavor. We’re proud of the milestones we have achieved toward a fully error-corrected quantum computer, but that large-scale computer is still some years away. Meanwhile, we are using our current noisy quantum processors as flexible platforms for quantum experiments.

In contrast to an error-corrected quantum computer, experiments on noisy quantum processors are currently limited to a few thousand quantum operations, or gates, before noise degrades the quantum state. In 2019 we implemented a specific computational task called random circuit sampling on our quantum processor and showed for the first time that it outperformed state-of-the-art classical supercomputing.

Although these experiments have not yet reached beyond-classical capabilities, we have also used our processors to observe novel physical phenomena, such as time crystals and Majorana edge modes, and have made new experimental discoveries, such as robust bound states of interacting photons and the noise resilience of Majorana edge modes of Floquet evolutions.

We expect that even in this intermediate, noisy regime, we will find applications for quantum processors in which useful quantum experiments can be performed much faster than they can be calculated on classical supercomputers. We call these “computational applications” of quantum processors. No one has yet demonstrated such a beyond-classical computational application. So as we aim to achieve this milestone, the question is: what is the best way to compare a quantum experiment run on such a processor to the computational cost of a classical application?

We already know how to compare an error-corrected quantum algorithm to a classical algorithm. In that case, the field of computational complexity tells us that we can compare their respective computational costs, that is, the number of operations required to accomplish the task. But with our current experimental quantum processors, the situation is not so well defined.

In “Effective quantum volume, fidelity and computational cost of noisy quantum processing experiments”, we provide a framework for measuring the computational cost of a quantum experiment by introducing the experiment’s “effective quantum volume”: the number of quantum operations, or gates, that contribute to a measurement outcome. We apply this framework to evaluate the computational cost of three recent experiments: our random circuit sampling experiment, our experiment measuring quantities known as “out-of-time-order correlators” (OTOCs), and a recent experiment on a Floquet evolution related to the Ising model. We are particularly excited about OTOCs because they offer a direct way to experimentally measure the effective quantum volume of a circuit (a sequence of quantum gates or operations), which is itself a computationally difficult quantity for a classical computer to estimate precisely. OTOCs are also important in nuclear magnetic resonance and electron spin resonance spectroscopy. We therefore believe that OTOC experiments are a promising candidate for the first-ever computational application of quantum processors.

Plot of computational cost and impact of some recent quantum experiments. While some (e.g., QC-QMC 2022) have had high impact and others (e.g., RCS 2023) have had high computational cost, none have yet been both useful and hard enough to be considered a “computational application.” We hypothesize that our future OTOC experiment could be the first to pass this threshold. Other experiments plotted are referenced in the text.

Random circuit sampling: Evaluating the computational cost of a noisy circuit

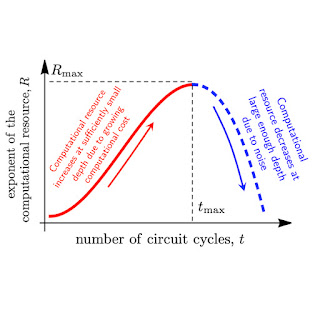

When it comes to running a quantum circuit on a noisy quantum processor, there are two competing considerations. On one hand, we aim to do something that is difficult to achieve classically. The computational cost (the number of operations required to accomplish the task on a classical computer) depends on the quantum circuit’s effective quantum volume: the larger the volume, the higher the computational cost, and the more a quantum processor can outperform a classical one.

On the other hand, on a noisy processor each quantum gate can introduce an error into the calculation. The more operations, the higher the error, and the lower the fidelity of the quantum circuit in measuring a quantity of interest. Under this consideration, we might prefer simpler circuits with a smaller effective volume, but these are easily simulated by classical computers. The balance of these competing considerations, which we want to maximize, is called the “computational resource” (see figure).
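A minimal sketch of this trade-off, assuming the simplest error model: every gate fails independently with the same probability ε, so the fidelity of a circuit with effective volume V decays as F ≈ (1 - ε)^V. The function name and the 1% error value are illustrative assumptions, not code or parameters from the paper.

```python
def circuit_fidelity(num_gates: int, gate_error: float) -> float:
    """Fidelity under a simplified error model (assumption): each of
    `num_gates` gates fails independently with probability `gate_error`."""
    if not 0.0 <= gate_error < 1.0:
        raise ValueError("gate_error must be in [0, 1)")
    return (1.0 - gate_error) ** num_gates


if __name__ == "__main__":
    # Assumed, illustrative 1% error per gate: fidelity falls off
    # exponentially as the effective volume (gate count) grows.
    for volume in (10, 100, 250, 700):
        print(f"V = {volume:4d} gates -> F ~ {circuit_fidelity(volume, 0.01):.2e}")
```

Under this model each additional gate multiplies the fidelity by (1 - ε), so larger volumes raise the classical cost while steadily eroding the measurable signal.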

We can see how these competing considerations play out in a simple “hello world” program for quantum processors, known as random circuit sampling (RCS), which was the first demonstration of a quantum processor outperforming a classical computer. Any error in any gate is likely to make this experiment fail. Inevitably, this makes it a hard experiment to perform with significant fidelity, and so it also serves as a benchmark of system fidelity. But it also corresponds to the highest known computational cost achievable by a quantum processor. We recently reported the most powerful RCS experiment performed to date, with a low measured experimental fidelity of 1.7×10⁻³ and a high theoretical computational cost of ~10²³. These quantum circuits had 700 two-qubit gates. We estimate that this experiment would take ~47 years to simulate on the world’s largest supercomputer. While this checks one of the two boxes needed for a computational application (it outperforms a classical supercomputer), it is not a particularly useful application per se.
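RCS fidelity is commonly estimated with linear cross-entropy benchmarking (XEB), which scores measured bitstrings x against the ideal output probabilities p(x): F_XEB = 2ⁿ⟨p(x)⟩ - 1. The toy below rests on stated assumptions: a Haar-random state stands in for the output of a deep random circuit, a global depolarizing mixture stands in for the noisy processor, and all helper names are hypothetical. Whether the experiment quoted above used exactly this estimator is not claimed here.

```python
import numpy as np


def porter_thomas_probs(num_qubits: int, rng: np.random.Generator) -> np.ndarray:
    """Output distribution of a Haar-random state, used as a stand-in for a
    deep random circuit (assumption: no actual circuit is simulated)."""
    dim = 2 ** num_qubits
    amps = rng.normal(size=dim) + 1j * rng.normal(size=dim)
    probs = np.abs(amps) ** 2
    return probs / probs.sum()


def noisy_samples(probs: np.ndarray, fidelity: float, shots: int,
                  rng: np.random.Generator) -> np.ndarray:
    """Sample bitstring indices from fidelity*ideal + (1 - fidelity)*uniform,
    a simple global-depolarizing model of a noisy processor (assumption)."""
    dim = probs.size
    mixture = fidelity * probs + (1.0 - fidelity) / dim
    return rng.choice(dim, size=shots, p=mixture)


def linear_xeb(samples: np.ndarray, ideal_probs: np.ndarray) -> float:
    """Linear XEB estimate: 2**n times the mean ideal probability of the
    measured bitstrings, minus 1."""
    dim = ideal_probs.size  # 2**n for n qubits
    return float(dim * ideal_probs[samples].mean() - 1.0)


if __name__ == "__main__":
    rng = np.random.default_rng(seed=7)
    probs = porter_thomas_probs(14, rng)
    for f in (1.0, 0.5, 0.0):
        xs = noisy_samples(probs, f, 50_000, rng)
        print(f"true fidelity {f:.1f} -> F_XEB ~ {linear_xeb(xs, probs):.3f}")
```

An ideal sampler scores close to 1, a uniformly random sampler close to 0, and a mixture with fidelity f close to f, which is how a single RCS run can double as a fidelity benchmark. Note that evaluating p(x) exactly is itself the classically expensive step as circuits grow.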

OTOCs and Floquet evolution: The effective quantum volume of a local observable

There are many open questions in quantum many-body physics that are classically intractable, so running some of these experiments on our quantum processor has great potential. We typically think about these experiments a bit differently than we do about the RCS experiment. Rather than measuring the quantum state of all qubits at the end of the experiment, we are usually concerned with more specific, local physical observables. Because not every operation in the circuit necessarily affects the observable, a local observable’s effective quantum volume can be smaller than that of the full circuit needed to run the experiment.

We can understand this by applying the concept of a light cone from relativity, which determines which events in space-time can be causally connected: some events cannot possibly influence one another because information takes time to propagate between them. We say that two such events are outside each other’s light cones. In a quantum experiment, we replace the light cone with something called a “butterfly cone,” where the growth of the cone is determined by the butterfly speed, the speed with which information spreads throughout the system. (This speed is characterized by measuring OTOCs, discussed later.) The effective quantum volume of a local observable is essentially the volume of the butterfly cone, including only the quantum operations that are causally connected to the observable. So, the faster information spreads in a system, the larger the effective volume and therefore the harder it is to simulate classically.
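The cone picture can be made concrete by counting gates. The toy model below assumes a 1D brickwork circuit (the actual processor is a 2D grid) and a cone whose half-width grows by `velocity` qubits per layer going backward in time from the measured qubit. With `velocity = 1` it roughly matches the strict light cone of the brickwork; a smaller value mimics a slower butterfly cone. All names are hypothetical.

```python
def brickwork_gates(num_qubits: int, layer: int) -> list[int]:
    """Left qubit index a of each two-qubit gate (a, a+1) in a 1D brickwork
    layer: even layers pair (0,1),(2,3),...; odd layers pair (1,2),(3,4),..."""
    return list(range(layer % 2, num_qubits - 1, 2))


def total_gates(num_qubits: int, depth: int) -> int:
    """Number of two-qubit gates in the whole brickwork circuit."""
    return sum(len(brickwork_gates(num_qubits, layer)) for layer in range(depth))


def cone_gate_count(num_qubits: int, depth: int, observed_qubit: int,
                    velocity: float) -> int:
    """Count gates inside a cone ending on `observed_qubit` after the last layer.
    Toy assumption: the cone's half-width is velocity * (layers before the end)."""
    count = 0
    for layer in range(depth):
        steps_back = depth - 1 - layer
        radius = velocity * steps_back
        lo, hi = observed_qubit - radius, observed_qubit + radius
        for a in brickwork_gates(num_qubits, layer):
            # Gate (a, a+1) is counted if it touches the interval [lo, hi].
            if a + 1 >= lo and a <= hi:
                count += 1
    return count


if __name__ == "__main__":
    n, depth, k = 10, 8, 5
    print("all gates:          ", total_gates(n, depth))
    print("light cone (v=1):   ", cone_gate_count(n, depth, k, 1.0))
    print("slower cone (v=0.5):", cone_gate_count(n, depth, k, 0.5))
```

For 10 qubits and 8 layers this prints 36 gates in total, 28 inside the light cone and 20 inside the slower cone. Only gates inside the cone can influence the measured qubit, which is why the effective volume of a local observable can be much smaller than the circuit that produces it.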

We apply this framework to a recent experiment implementing a so-called Floquet Ising model, a physical model related to the time crystal and Majorana experiments. From the data of this experiment, one can directly estimate an effective fidelity of 0.37 for the largest circuits. With the measured gate error rate of ~1%, this gives an estimated effective volume of ~100. This is much smaller than the light cone, which included two thousand gates on 127 qubits. So, the butterfly velocity of this experiment is quite small. Indeed, using numerical simulations that achieve higher precision than the experiment, we argue that the effective volume covers only ~28 qubits, not 127. This small effective volume has also been corroborated with the OTOC technique. Although this was a deep circuit, its estimated computational cost is 5×10¹¹, almost one trillion times less than that of the recent RCS experiment. Correspondingly, this experiment can be simulated in less than a second per data point on a single A100 GPU. So, while this is certainly a useful application, it does not fulfill the second requirement of a computational application: substantially outperforming a classical simulation.
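The step from an effective fidelity of 0.37 and a ~1% gate error to an effective volume of ~100 can be reproduced by inverting the simple error model F ≈ (1 - ε)^V, giving V ≈ ln F / ln(1 - ε) ≈ -ln F / ε. This back-of-the-envelope sketch assumes independent, uniform gate errors; the paper’s own estimate may include further corrections, and the function name is hypothetical.

```python
import math


def effective_volume(effective_fidelity: float, gate_error: float) -> float:
    """Invert F ~ (1 - eps)**V for V, assuming independent per-gate errors."""
    if not 0.0 < effective_fidelity <= 1.0:
        raise ValueError("effective_fidelity must be in (0, 1]")
    if not 0.0 < gate_error < 1.0:
        raise ValueError("gate_error must be in (0, 1)")
    # log1p(-eps) computes ln(1 - eps) accurately for small eps.
    return math.log(effective_fidelity) / math.log1p(-gate_error)


if __name__ == "__main__":
    # Floquet Ising numbers quoted above: F_eff ~ 0.37, gate error ~ 1%.
    print(f"V_eff ~ {effective_volume(0.37, 0.01):.0f} gates")
```

With these inputs the function returns about 99 gates, consistent with the ~100 quoted above.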

Information scrambling experiments with OTOCs are a promising avenue for a computational application. OTOCs can tell us important physical information about a system, such as the butterfly velocity, which is critical for precisely measuring the effective quantum volume of a circuit. OTOC experiments with fast entangling gates offer a potential path to a first beyond-classical demonstration of a computational application with a quantum processor. Indeed, in our experiment from 2021 we achieved an effective fidelity of F_eff ~ 0.06 with an experimental signal-to-noise ratio of ~1, corresponding to an effective volume of ~250 gates and a computational cost of 2×10¹².
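To see how an OTOC exposes information spreading, the toy simulation below computes the infinite-temperature OTOC F = Tr[W(t) V W(t) V] / 2ⁿ, with W(t) = U†WU, for a small random brickwork circuit. Assumptions: 6 qubits, Haar-random two-qubit gates, W = Z on the first qubit and V = X on the last; none of this is the 2021 experiment’s circuit or protocol, and the names are hypothetical. Because W(t) can spread by at most one qubit per layer, F stays at 1 until the cone of W(t) reaches the qubit where V acts (at least 5 layers here), after which it decays toward 0; how soon that happens reflects the butterfly velocity.

```python
import numpy as np

PAULI_X = np.array([[0, 1], [1, 0]], dtype=complex)
PAULI_Z = np.array([[1, 0], [0, -1]], dtype=complex)


def haar_unitary(dim: int, rng: np.random.Generator) -> np.ndarray:
    """Haar-random unitary from the QR decomposition of a complex Gaussian matrix."""
    z = (rng.normal(size=(dim, dim)) + 1j * rng.normal(size=(dim, dim))) / np.sqrt(2)
    q, r = np.linalg.qr(z)
    phases = np.diag(r) / np.abs(np.diag(r))
    return q * phases  # rescale each column by a phase so the result is Haar


def embed(op: np.ndarray, first_qubit: int, num_qubits: int) -> np.ndarray:
    """Embed an operator acting on consecutive qubits starting at first_qubit
    (qubit 0 is the most significant tensor factor)."""
    k = int(round(np.log2(op.shape[0])))
    left = np.eye(2 ** first_qubit)
    right = np.eye(2 ** (num_qubits - first_qubit - k))
    return np.kron(np.kron(left, op), right)


def random_brickwork_unitary(num_qubits: int, depth: int,
                             rng: np.random.Generator) -> np.ndarray:
    """Full unitary of a 1D brickwork circuit of Haar-random two-qubit gates."""
    u = np.eye(2 ** num_qubits, dtype=complex)
    for layer in range(depth):
        for a in range(layer % 2, num_qubits - 1, 2):
            u = embed(haar_unitary(4, rng), a, num_qubits) @ u  # later gates act last
    return u


def otoc(num_qubits: int, depth: int, rng: np.random.Generator) -> float:
    """Infinite-temperature OTOC with W = Z on qubit 0 and V = X on the last qubit."""
    u = random_brickwork_unitary(num_qubits, depth, rng)
    w = embed(PAULI_Z, 0, num_qubits)
    v = embed(PAULI_X, num_qubits - 1, num_qubits)
    w_t = u.conj().T @ w @ u  # Heisenberg-picture operator W(t)
    return float(np.trace(w_t @ v @ w_t @ v).real / 2 ** num_qubits)


if __name__ == "__main__":
    rng = np.random.default_rng(seed=1)
    for depth in (2, 4, 5, 6, 8, 12, 20):
        print(f"depth {depth:2d}: OTOC ~ {otoc(6, depth, rng):+.3f}")
```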

While these early OTOC experiments are not sufficiently complex to outperform classical simulations, there is a deep physical reason why OTOC experiments are good candidates for the first demonstration of a computational application. Most of the interesting quantum phenomena that are accessible to near-term quantum processors and hard to simulate classically correspond to a quantum circuit exploring many, many quantum energy levels. Such evolutions are typically chaotic, and in this regime standard time-ordered correlators (TOCs) decay very quickly to a purely random average, leaving no experimental signal. This does not happen for OTOC measurements, which allows us to grow complexity at will, limited only by the error per gate. We anticipate that halving the error rate would double the computational cost, pushing this experiment into the beyond-classical regime.

Conclusion

Using the effective quantum volume framework we have developed, we have determined the computational cost of our RCS and OTOC experiments, as well as of a recent Floquet evolution experiment. While none of these yet meets the requirements for a computational application, we expect that with improved error rates, an OTOC experiment will be the first beyond-classical, useful application of a quantum processor.