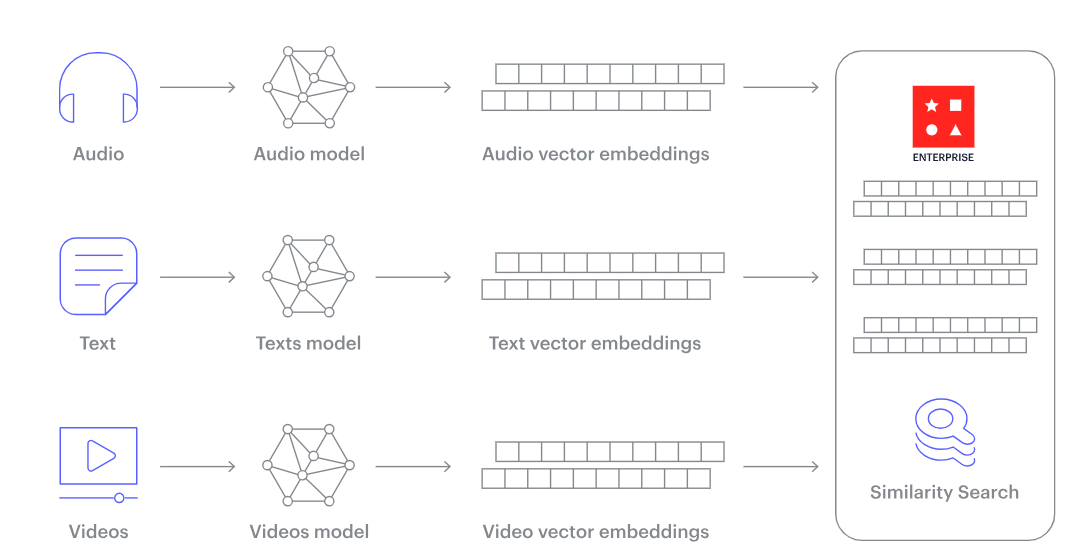

For large-scale generative AI applications to work well, they need a good system for handling large amounts of data. One such essential system is the vector database. What sets this database apart is its ability to deal with many types of data, such as text, sound, pictures, and videos, in numeric (vector) form.

What are Vector Databases?

A vector database is a specialized storage system designed to handle high-dimensional vectors efficiently. These vectors, which can be thought of as points in a multi-dimensional space, often represent embeddings or compressed representations of more complex data like images, text, or sound.

Vector databases allow for rapid similarity searches among these vectors, enabling quick retrieval of the most similar items from a vast dataset.

Traditional Databases vs. Vector Databases

Vector Databases:

- Handles High-Dimensional Data: Vector databases are designed to manage and store data in high-dimensional spaces. This is particularly useful for applications like machine learning, where data points (such as images or text) can be represented as vectors in multi-dimensional spaces.

- Optimized for Similarity Search: One standout feature of vector databases is their ability to perform similarity searches. Instead of querying data based on exact matches, these databases allow users to retrieve data that is "similar" to a given query, making them invaluable for tasks like image or text retrieval.

- Scalable for Large Datasets: As AI and machine learning applications continue to grow, so does the amount of data they process. Vector databases are built to scale, so they can handle vast amounts of data without compromising on performance.

Traditional Databases:

- Structured Data Storage: Traditional databases, like relational databases, are designed to store structured data. This means data is organized into predefined tables, rows, and columns, ensuring data integrity and consistency.

- Optimized for CRUD Operations: Traditional databases are primarily optimized for CRUD operations. This means they are designed to efficiently create, read, update, and delete data entries, making them suitable for a wide range of applications, from web services to enterprise software.

- Fixed Schema: One of the defining characteristics of many traditional databases is their fixed schema. Once the database structure is defined, making changes can be complex and time-consuming. This rigidity ensures data consistency but can be less flexible than the schema-less or dynamic-schema nature of some modern databases.

Traditional databases often struggle with the complexity of embeddings, a challenge readily addressed by vector databases.

Vector Representations

Central to the functioning of vector databases is the fundamental concept of representing diverse forms of data using numeric vectors. Let's take an image as an example. When you see a picture of a cat, it might just be an adorable feline image to us, but for a machine it can be transformed into a unique 512-dimensional vector such as:

[0.23, 0.54, 0.32, …, 0.12, 0.45, 0.90]

With vector databases, a generative AI application can do more. It can find information based on meaning and retain it over the long term. This method isn't restricted to images alone: textual data, rich in contextual and semantic meaning, can also be put into vector form.

Generative AI and the Need for Vector Databases

Generative AI often involves embeddings. Take, for instance, word embeddings in natural language processing (NLP). Words or sentences are transformed into vectors that capture semantic meaning. When generating human-like text, models need to rapidly compare and retrieve relevant embeddings, ensuring that the generated text maintains contextual meaning.

Similarly, in image or sound generation, embeddings play a crucial role in encoding patterns and features. For these models to function optimally, they require a database that allows near-instantaneous retrieval of similar vectors, making vector databases an integral part of the generative AI puzzle.

Creating embeddings for natural language usually involves using pre-trained models such as:

- GPT-3 and GPT-4: OpenAI's GPT-3 (Generative Pre-trained Transformer 3) has been a monumental model in the NLP community, with 175 billion parameters. Its successor, GPT-4, reportedly larger still, continues to push the boundaries in producing high-quality embeddings. These models are trained on diverse datasets, enabling them to create embeddings that capture a wide array of linguistic nuances.

- BERT and its Variants: BERT (Bidirectional Encoder Representations from Transformers), by Google, is another significant model that has seen various updates and iterations such as RoBERTa and DistilBERT. BERT's bidirectional training, which reads text in both directions, is particularly adept at understanding the context surrounding a word.

- ELECTRA: A more recent model that is efficient and performs on par with much larger models like GPT-3 and BERT while requiring fewer computing resources. ELECTRA learns to discriminate between real and fake (replaced) tokens during pre-training, which helps in producing more refined embeddings.

Understanding the process:

Initially, an embedding model is employed to transform the desired content into vector embeddings. Once generated, these embeddings are stored in a vector database. For easy traceability and relevance, the stored embeddings keep a link or reference to the original content they were derived from.

Later, when a user or system poses a question to the application, the same embedding model jumps into action. It transforms the query into a corresponding embedding. This new embedding is then used to search the vector database for similar vector representations. The embeddings identified as matches are directly associated with their original content, so the user's query is met with relevant and accurate results.
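The embed, store, and query steps described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `toy_embed`, `VOCAB`, and `ToyVectorStore` are hypothetical names, and the "embedding model" is a simple word count over a tiny fixed vocabulary rather than a real pre-trained model.

```python
import math

# Hypothetical fixed vocabulary; a real embedding model learns its own features.
VOCAB = ["cat", "feline", "pet", "stock", "market", "price"]


def toy_embed(text):
    """Stand-in for an embedding model: count vocabulary words, then L2-normalise."""
    words = text.lower().split()
    counts = [float(words.count(term)) for term in VOCAB]
    norm = math.sqrt(sum(c * c for c in counts)) or 1.0  # avoid dividing by zero
    return [c / norm for c in counts]


class ToyVectorStore:
    """In-memory store that keeps each embedding next to its original content."""

    def __init__(self):
        self.records = []  # list of (embedding, original_text) pairs

    def add(self, text):
        self.records.append((toy_embed(text), text))

    def search(self, query, k=1):
        q = toy_embed(query)  # the same model embeds the query
        scored = [
            (sum(a * b for a, b in zip(q, emb)), text)  # cosine similarity of unit vectors
            for emb, text in self.records
        ]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [text for _, text in scored[:k]]


store = ToyVectorStore()
store.add("the cat is a small feline pet")
store.add("the stock market price rose")
results = store.search("which pet is a feline", k=1)
print(results)
```

The store scans every record, which is fine for a handful of items; the indexing techniques discussed later exist precisely to avoid this full scan on large collections.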

Rising Funding for Vector Database Newcomers

With AI's growing popularity, many companies are putting more money into vector databases to make their algorithms better and faster. This can be seen in the recent investments in vector database startups like Pinecone, Chroma DB, and Weaviate.

Large corporations like Microsoft have their own tools too. For example, Azure Cognitive Search lets businesses create AI tools using vector databases.

Oracle also recently announced new features for its Database 23c, introducing an Integrated Vector Database. Named "AI Vector Search," it will have a new data type, indexes, and search tools to store and search through data like documents and images using vectors. It supports Retrieval Augmented Generation (RAG), which combines large language models with business data for better answers to language questions without sharing private data.

Main Considerations of Vector Databases

Distance Metrics

The effectiveness of a similarity search depends on the chosen distance metric. Common metrics include Euclidean distance and cosine similarity, each suited to different kinds of vector distributions.
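To make the difference between the two metrics concrete, here is a small Python sketch. The function names and the sample vectors are illustrative assumptions, chosen so that the two metrics disagree about which vector is the better match.

```python
import math


def euclidean_distance(a, b):
    """Straight-line distance between two points; smaller means more similar."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; larger means more similar."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


query = [1.0, 1.0]
same_direction = [10.0, 10.0]  # far from the query, but points the same way
nearby = [1.0, 0.0]            # close to the query, but points a different way

for name, vec in [("same_direction", same_direction), ("nearby", nearby)]:
    print(name, euclidean_distance(query, vec), cosine_similarity(query, vec))
```

Euclidean distance prefers `nearby`, because magnitude matters to it, while cosine similarity prefers `same_direction`, because it looks only at the angle between vectors. Which behavior is appropriate depends on whether vector length carries meaning in the embedding being used.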

Indexing

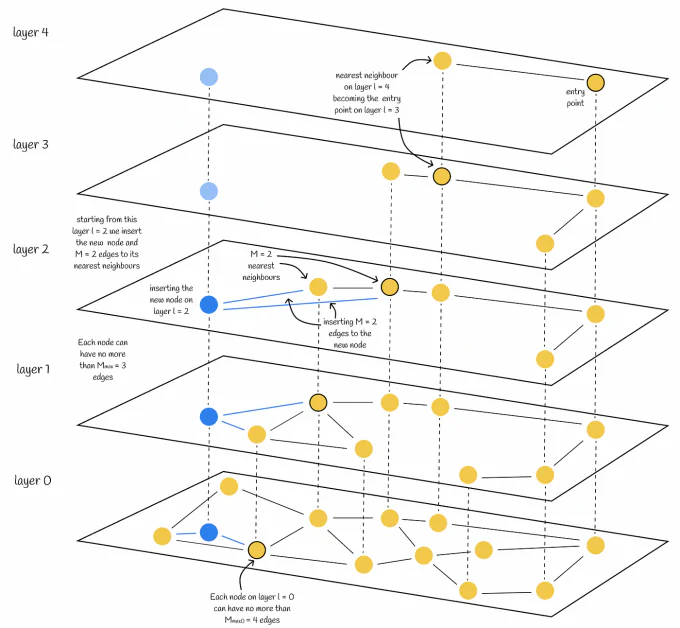

Given the high dimensionality of vectors, traditional indexing methods don't cut it. Vector databases use techniques like Hierarchical Navigable Small World (HNSW) graphs or Annoy trees, allowing for efficient partitioning of the vector space and fast nearest-neighbor searches.

Annoy tree (Source)

Annoy is a method that uses binary search trees. It splits the data space many times and looks at only a part of it to find close neighbors.
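The idea of repeatedly splitting the space and searching only one part of it can be sketched as follows. This is a simplified, single-tree illustration and not the Annoy library's actual implementation: the helper names (`build_tree`, `query_tree`) are hypothetical, each split is a hyperplane halfway between two randomly chosen points, and the result is approximate because only one leaf is examined.

```python
import random


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def build_tree(points, indices, leaf_size, rng):
    """Recursively split `indices` with random hyperplanes until leaves are small."""
    if len(indices) <= leaf_size:
        return {"leaf": indices}
    i, j = rng.sample(indices, 2)
    p, q = points[i], points[j]
    normal = [a - b for a, b in zip(p, q)]          # direction separating p from q
    midpoint = [(a + b) / 2 for a, b in zip(p, q)]  # the hyperplane passes through here
    offset = dot(normal, midpoint)
    left = [k for k in indices if dot(normal, points[k]) < offset]
    right = [k for k in indices if dot(normal, points[k]) >= offset]
    if not left or not right:  # degenerate split, e.g. duplicate points
        return {"leaf": indices}
    return {
        "normal": normal,
        "offset": offset,
        "left": build_tree(points, left, leaf_size, rng),
        "right": build_tree(points, right, leaf_size, rng),
    }


def query_tree(tree, points, target):
    """Descend to one leaf, then compare the target only against that leaf's points."""
    node = tree
    while "leaf" not in node:
        side = "left" if dot(node["normal"], target) < node["offset"] else "right"
        node = node[side]
    return min(node["leaf"], key=lambda k: sq_dist(points[k], target))


rng = random.Random(0)
points = [[rng.random(), rng.random()] for _ in range(200)]
tree = build_tree(points, list(range(len(points))), leaf_size=10, rng=rng)
candidate = query_tree(tree, points, points[42])
print(candidate, sq_dist(points[candidate], points[42]))
```

A single tree can miss the true nearest neighbor when it lies just across a split. Building several trees with different random splits and merging their candidates is the usual way to reduce that risk, at the cost of more memory.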

Hierarchical Navigable Small World (HNSW) graphs (Source)

HNSW graphs, on the other hand, are like networks. They connect data points in a special way to make searching faster. These graphs help in quickly finding close points in the data.
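The graph-walking idea can be illustrated with a deliberately reduced sketch: a single-layer nearest-neighbor graph searched greedily. Real HNSW adds a hierarchy of layers, incremental construction, and a candidate list during search, none of which appear here; the function names and the brute-force graph construction are assumptions made for illustration only.

```python
import random


def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def build_knn_graph(points, k):
    """Brute-force construction: link every point to its k nearest neighbours."""
    graph = {}
    for i, p in enumerate(points):
        others = sorted(
            (j for j in range(len(points)) if j != i),
            key=lambda j: sq_dist(p, points[j]),
        )
        graph[i] = others[:k]
    return graph


def greedy_search(graph, points, target, entry):
    """Walk the graph, always moving to the neighbour closest to the target."""
    current = entry
    current_dist = sq_dist(points[current], target)
    while True:
        best = min(graph[current], key=lambda j: sq_dist(points[j], target))
        best_dist = sq_dist(points[best], target)
        if best_dist >= current_dist:
            return current  # local minimum: no neighbour is closer to the target
        current, current_dist = best, best_dist


rng = random.Random(1)
points = [[rng.random(), rng.random()] for _ in range(100)]
graph = build_knn_graph(points, k=5)
target = [0.5, 0.5]
found = greedy_search(graph, points, target, entry=0)
print(found, sq_dist(points[found], target))
```

The walk visits only a small number of nodes instead of all 100, but it can stop at a local minimum that is not the true nearest neighbor. The upper layers of an HNSW graph act as long-range shortcuts that help the search start close to the right region.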

Scalability

As datasets grow, so does the challenge of maintaining fast retrieval times. Distributed systems, GPU acceleration, and optimized memory management are some of the ways vector databases tackle scalability.

Role of Vector Databases: Implications and Opportunities

1. Training Data for Cutting-Edge Generative AI Models: Generative AI models, such as DALL-E and GPT-3, are trained using vast amounts of data. This data often comprises vectors extracted from a myriad of sources, including images, text, code, and other domains. Vector databases store and manage these datasets, allowing AI models to assimilate and analyze the world's knowledge by identifying patterns and relationships within these vectors.

2. Advancing Few-Shot Learning: Few-shot learning is an AI training technique where models are trained with limited data. Vector databases amplify this approach by maintaining a robust vector index. When a model is exposed to just a handful of vectors (say, a few images of birds), it can swiftly extrapolate the broader concept of birds by recognizing similarities and relationships between these vectors.

3. Enhancing Recommender Systems: Recommender systems use vector databases to suggest content closely aligned with a user's preferences. The system analyzes a user's behavior, profile, and queries to extract vectors indicative of their interests. It then scans the vector database to find content vectors that closely resemble these interest vectors, yielding more precise recommendations.

4. Semantic Information Retrieval: Traditional search methods rely on exact keyword matches. Vector databases, however, empower systems to understand and retrieve content based on semantic similarity. This means that searches become more intuitive, focusing on the underlying meaning of the query rather than just matching words. For instance, when users enter a query, the corresponding vector is compared with vectors in the database to find content that matches the query's intent, not just its phrasing.

5. Multimodal Search: Multimodal search is an emerging technique that integrates data from multiple sources, like text, images, audio, and video. Vector databases serve as the backbone of this approach by allowing for the combined analysis of vectors from different modalities. This results in a holistic search experience, where users can retrieve information from a variety of sources based on a single query, leading to richer insights and more comprehensive results.

Conclusion

The AI world is changing fast. It is touching many industries, bringing both benefits and new problems. The rapid advancements in generative AI underscore the vital role of vector databases in managing and analyzing multi-dimensional data.