Since launching our generative AI platform offering just a few short months ago, we’ve seen, heard, and experienced intense and accelerated AI innovation, with remarkable breakthroughs. As a long-time machine learning advocate and industry leader, I’ve witnessed many such breakthroughs, perfectly represented by the steady excitement around ChatGPT, launched almost a year ago.

And just as ecosystems thrive with biological diversity, the AI ecosystem benefits from multiple providers. Interoperability and system flexibility have always been key to mitigating risk, so that organizations can adapt and continue to deliver value. But the unprecedented speed of evolution in generative AI has made optionality a critical capability.

The market is changing so rapidly that there are no sure bets, today or in the near future. This is a statement we’ve heard echoed by our customers, and it is one of the core philosophies that underpinned many of the innovative new generative AI capabilities announced in our recent Fall Launch.

Relying too heavily on any one AI provider could pose a risk as rates of innovation are disrupted. Already, there are more than 180 different open source LLMs. The pace of change is moving much faster than teams can apply it.

DataRobot’s philosophy has been that organizations need to build flexibility into their generative AI strategy based on performance, robustness, cost, and suitability for the specific LLM task being deployed.

As with all technologies, many LLMs come with trade-offs or are better suited to specific tasks. Some LLMs may excel at particular natural language operations like text summarization, others may provide more diverse text generation, and some may simply be cheaper to operate. As a result, many LLMs can be best-in-class in different but useful ways. A tech stack that provides the flexibility to select or combine these options helps organizations maximize AI value in a cost-efficient manner.

DataRobot operates as an open, unified intelligence layer that lets organizations compare and select the generative AI components that are right for them. This interoperability leads to better generative AI outputs, improves operational continuity, and reduces single-provider dependencies.

With such a strategy, operational processes remain unaffected if, say, a provider is experiencing internal disruption. Plus, costs can be managed more efficiently by enabling organizations to make cost-performance trade-offs across their LLMs.
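To make the continuity argument concrete, here is a minimal, generic sketch of one common pattern: calling code depends on a small provider interface, and a router tries providers in priority order, falling back when one fails. This is not DataRobot’s implementation or API. `LLMProvider`, `StubProvider`, and `FailoverRouter` are hypothetical names, and the stub providers make no network calls; a real adapter would wrap a provider SDK and handle its specific error types.

```python
from dataclasses import dataclass, field
from typing import List, Protocol


class LLMProvider(Protocol):
    """Hypothetical minimal interface every provider adapter implements."""
    name: str

    def complete(self, prompt: str) -> str: ...


@dataclass
class StubProvider:
    """Hypothetical stand-in for a real provider adapter (no network calls)."""
    name: str
    healthy: bool = True

    def complete(self, prompt: str) -> str:
        # Simulate an outage so the fallback path can be exercised.
        if not self.healthy:
            raise ConnectionError(f"{self.name} is unavailable")
        return f"[{self.name}] {prompt}"


@dataclass
class FailoverRouter:
    """Tries providers in priority order and falls back on connection errors."""
    providers: List[LLMProvider] = field(default_factory=list)

    def complete(self, prompt: str) -> str:
        errors = []
        for provider in self.providers:
            try:
                return provider.complete(prompt)
            except ConnectionError as exc:
                errors.append(str(exc))  # remember why this provider failed
        raise RuntimeError("all providers failed: " + "; ".join(errors))

    def swap(self, index: int, provider: LLMProvider) -> None:
        """Replace one provider in place; callers of complete() are unchanged."""
        self.providers[index] = provider


# Usage: the primary provider is down, so the request falls through to the backup.
primary = StubProvider("provider-a", healthy=False)
backup = StubProvider("provider-b")
router = FailoverRouter([primary, backup])
print(router.complete("Summarize Q3 results"))  # [provider-b] Summarize Q3 results
```

Because the application only ever calls `router.complete(...)`, replacing or reordering providers (for example, to favor a cheaper model) is a configuration change rather than a code change.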

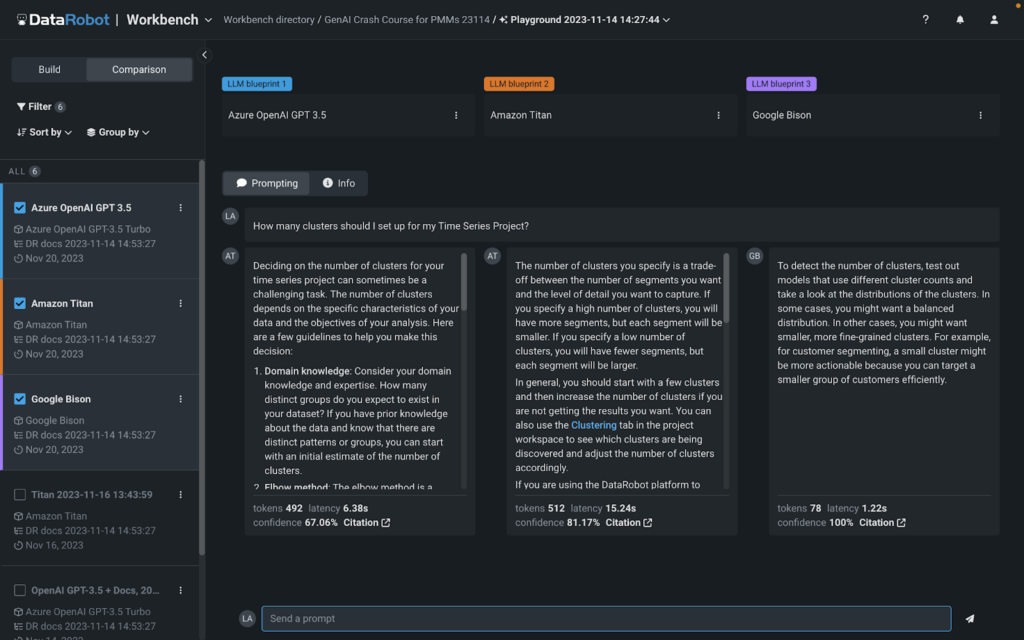

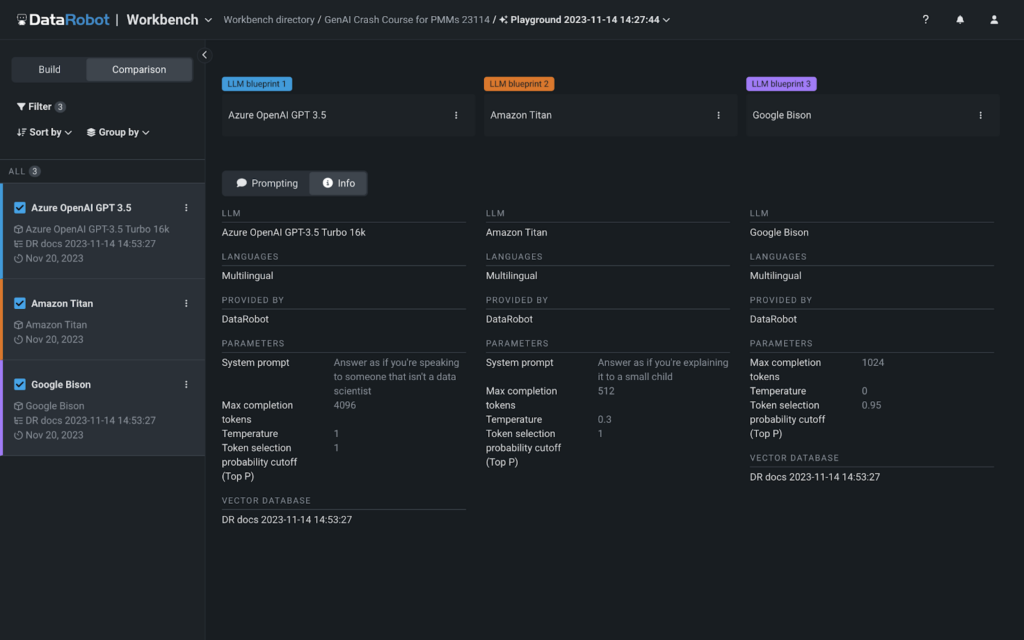

During our Fall Launch, we announced our new multi-provider LLM Playground. This first-of-its-kind visual interface gives you built-in access to Google Cloud Vertex AI, Azure OpenAI, and Amazon Bedrock models so you can easily compare and experiment with different generative AI ‘recipes.’ You can use any of the built-in LLMs in our playground or bring your own. Access to these LLMs is available out of the box during experimentation, so no additional steps are needed to start building GenAI solutions in DataRobot.

With our new LLM Playground, we’ve made it easy to try, test, and compare different GenAI ‘recipes’ in terms of style/tone, cost, and relevance. You can evaluate any combination of foundational model, vector database, chunking strategy, and prompting strategy, whether you prefer to build with the platform UI or in a notebook. The LLM Playground also makes it easy to flip back and forth between code and visualizing your experiments side by side.
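The idea of evaluating “any combination” of components amounts to a grid over four choices. The generic sketch below (not the Playground’s API) enumerates that grid and ranks it with a scoring function you supply. For illustration it assumes each chunking strategy is reduced to a chunk size and each prompting strategy to a template label; `Recipe`, `build_recipes`, `rank`, and all component names are hypothetical placeholders, and the toy score stands in for real metrics such as relevance, tone, and cost.

```python
from dataclasses import dataclass
from itertools import product
from typing import Callable, List, Sequence


@dataclass(frozen=True)
class Recipe:
    """One hypothetical GenAI 'recipe': a choice for each component."""
    llm: str
    vector_db: str
    chunk_size: int       # assumption: chunking strategy reduced to a size
    prompt_template: str  # assumption: prompting strategy reduced to a label


def build_recipes(llms: Sequence[str], vector_dbs: Sequence[str],
                  chunk_sizes: Sequence[int], prompts: Sequence[str]) -> List[Recipe]:
    """Return every combination of the four components (a full grid)."""
    return [Recipe(*combo) for combo in product(llms, vector_dbs, chunk_sizes, prompts)]


def rank(recipes: List[Recipe], score: Callable[[Recipe], float]) -> List[Recipe]:
    """Sort recipes best-first by a user-supplied score (ties keep grid order)."""
    return sorted(recipes, key=score, reverse=True)


# Usage with placeholder component names: 2 x 1 x 2 x 2 = 8 recipes.
recipes = build_recipes(["llm-a", "llm-b"], ["vector-db-x"], [256, 512], ["concise", "detailed"])
# Toy score for illustration only: prefer smaller chunks.
ranked = rank(recipes, score=lambda r: -r.chunk_size)
print(len(recipes), ranked[0])
```

A full grid grows multiplicatively with each component you add, which is one reason side-by-side comparison tooling becomes useful quickly.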

With DataRobot, you can also hot-swap underlying components (like LLMs) without breaking production if your organization’s needs change or the market evolves. This not only lets you calibrate your generative AI solutions to your exact requirements, but also ensures you maintain technical autonomy, with all of the best-of-breed components right at your fingertips.

You can see below exactly how easy it is to compare different generative AI ‘recipes’ with our LLM Playground.

Once you’ve chosen the right ‘recipe’ for you, you can quickly and easily move it, along with your vector database and prompting strategies, into production. Once in production, you get full end-to-end generative AI lineage, monitoring, and reporting.

With DataRobot’s generative AI offering, organizations can easily choose the right tools for the job and safely extend their internal data to LLMs, while also measuring outputs for toxicity, truthfulness, and cost, among other KPIs. We like to say, “we’re not building LLMs, we’re solving the confidence problem for generative AI.”

The generative AI ecosystem is complex and changing every day. At DataRobot, we make sure that you have a flexible and resilient approach. Think of it as an insurance policy and a safeguard against stagnation in an ever-evolving technological landscape, ensuring both data scientists’ agility and CIOs’ peace of mind. The reality is that an organization’s strategy shouldn’t be constrained by a single provider’s world view, rate of innovation, or internal turmoil. It’s about building the resilience and speed to evolve your organization’s generative AI strategy so that you can adapt as the market evolves, which it can do quickly!

You can learn more about how else we’re solving the ‘confidence problem’ by watching our Fall Launch event on demand.

About the author

Ted Kwartler is the Field CTO at DataRobot. Ted sets product strategy for explainable and ethical uses of data technology. Ted brings unique insights and experience in using data, business acumen, and ethics to his current and previous positions at Liberty Mutual Insurance and Amazon. In addition to having four DataCamp courses, he teaches graduate courses at the Harvard Extension School and is the author of “Text Mining in Practice with R.” Ted is an advisor to the US Government Bureau of Economic Affairs, sitting on a Congressionally mandated committee called the “Advisory Committee for Data for Evidence Building,” advocating for data-driven policies.