Just as some problems are too big for one person to solve, some tasks are too complex for a single AI agent. Instead, the best approach is to decompose problems into smaller, specialized units, where multiple agents work together as a team.

This is the foundation of multi-agent systems: networks of agents, each with a specific role, collaborating to solve larger problems.

When building multi-agent systems, you need a way to coordinate how agents interact. If every agent talks to every other agent directly, things quickly become a tangled mess that is hard to scale and hard to debug. That’s where the orchestrator pattern comes in.

Instead of agents making ad-hoc decisions about where to send messages, a central orchestrator acts as the parent node, deciding which agent should handle a given task based on context. The orchestrator takes in messages, interprets them, and routes them to the right agent at the right time. This makes the system dynamic, adaptable, and scalable.

Think of it like a well-run dispatch center.

Instead of individual responders deciding where to go, a central system evaluates incoming information and directs it efficiently. This ensures that agents don’t duplicate work or operate in isolation, but can collaborate effectively without hardcoded dependencies.

In this article, I’ll walk through how to build an event-driven orchestrator for multi-agent systems using Apache Flink and Apache Kafka, leveraging Flink to interpret and route messages while using Kafka as the system’s short-term shared memory.

Why Event-Driven Agents?

At the core of any multi-agent system is how agents communicate.

Request/response models, while simple to conceptualize, tend to break down when systems need to evolve, adapt to new information, or operate in unpredictable environments. That’s why event-driven messaging, powered by technologies like Apache Kafka and Apache Flink, is typically the better model for enterprise applications.

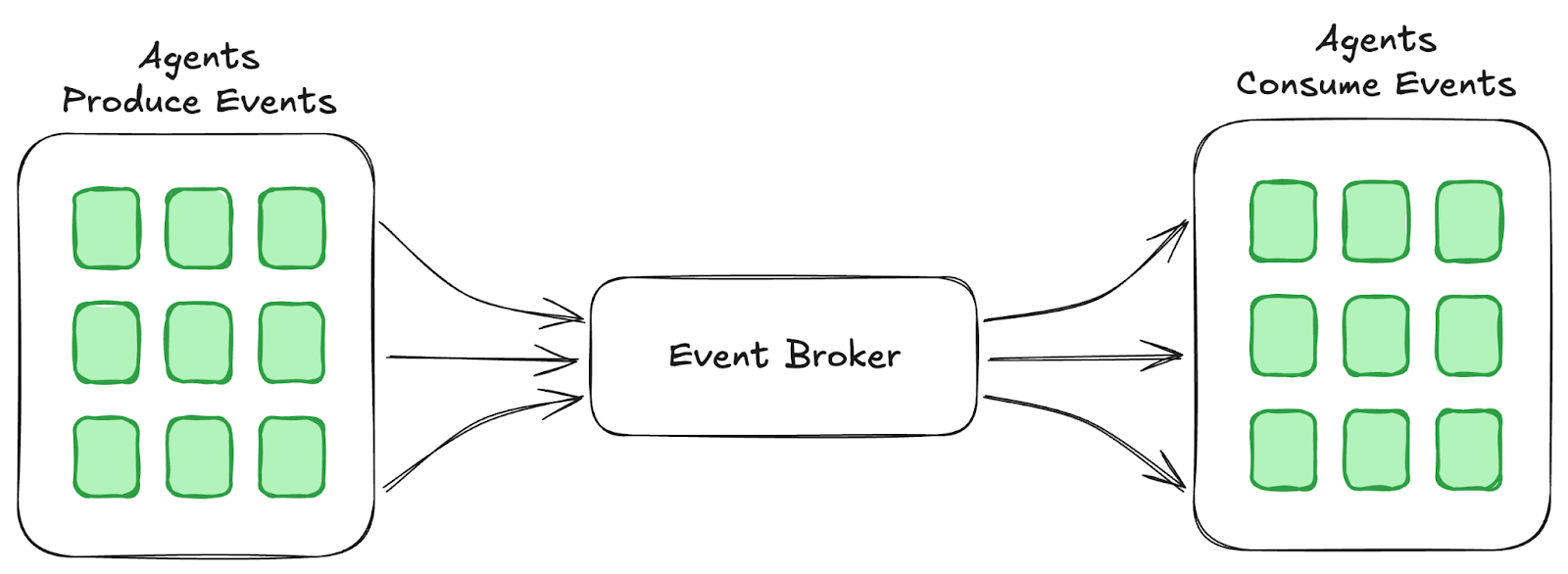

Event-Driven Multi-Agent Communication

An event-driven architecture allows agents to communicate dynamically without rigid dependencies, making them more autonomous and resilient. Instead of hardcoding relationships, agents react to events, enabling greater flexibility, parallelism, and fault tolerance.

In the same way that event-driven architectures provide decoupling for microservices and teams, they provide the same advantages when building a multi-agent system. An agent is essentially a stateful microservice with a brain, so many of the same patterns for building reliable distributed systems apply to agents as well.

Additionally, stream governance can verify message structure, preventing malformed data from disrupting the system. This is often missing in existing multi-agent frameworks today, making event-driven architectures even more compelling.
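To make the idea of verifying message structure a bit more concrete, here is a minimal Python sketch of a consumer-side check against a hand-written contract. It is only an illustration: the field names (`lead_id`, `source`, `payload`) and the `validate_message` helper are hypothetical, and in a real deployment this job would normally be handled by the streaming platform's schema tooling rather than by application code.

```python
from __future__ import annotations

import json

# Hypothetical data contract: required field name -> expected Python type.
LEAD_EVENT_CONTRACT = {
    "lead_id": str,
    "source": str,
    "payload": dict,
}


def validate_message(raw: str, contract: dict) -> tuple[bool, list[str]]:
    """Return (is_valid, problems) for a JSON-encoded event."""
    try:
        message = json.loads(raw)
    except json.JSONDecodeError as exc:
        return False, [f"not valid JSON: {exc.msg}"]

    if not isinstance(message, dict):
        return False, ["top-level value must be a JSON object"]

    problems = []
    for field, expected_type in contract.items():
        if field not in message:
            problems.append(f"missing field: {field}")
        elif not isinstance(message[field], expected_type):
            problems.append(f"wrong type for field: {field}")
    return (not problems), problems
```

A malformed event is rejected with a list of reasons instead of being handed to an agent, which is the property the paragraph above is describing.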

Orchestration: Coordinating Agentic Workflows

In complex systems, agents rarely work in isolation.

Real-world applications require multiple agents collaborating, handling distinct responsibilities while sharing context. This introduces challenges around task dependencies, failure recovery, and communication efficiency.

The orchestrator pattern solves this by introducing a lead agent, or orchestrator, that directs other agents in problem-solving. Instead of static workflows like traditional microservices, agents generate dynamic execution plans, breaking down tasks and adapting in real time.

The Orchestrator Agent Pattern

This flexibility, however, creates challenges:

- Task Explosion – Agents can generate unbounded tasks, requiring resource management.
- Monitoring & Recovery – Agents need a way to track progress, catch failures, and re-plan.
- Scalability – The system must handle an increasing number of agent interactions without bottlenecks.

This is where event-driven architectures shine.

With a streaming backbone, agents can react to new data immediately, track dependencies efficiently, and recover from failures gracefully, all without centralized bottlenecks.

Agentic systems are fundamentally dynamic, stateful, and adaptive, which makes event-driven architectures a natural fit.

In the rest of this article, I’ll break down a reference architecture for event-driven multi-agent systems, showing how to implement an orchestrator pattern using Apache Flink and Apache Kafka, powering real-time agent decision-making at scale.

Multi-Agent Orchestration with Flink

Building scalable multi-agent systems requires real-time decision-making and dynamic routing of messages between agents. This is where Apache Flink plays a crucial role.

Apache Flink is a stream processing engine designed to handle stateful computations on unbounded streams of data. Unlike batch processing frameworks, Flink can process events in real time, making it an ideal tool for orchestrating multi-agent interactions.

Revisiting the Orchestrator Pattern

As discussed earlier, multi-agent systems need an orchestrator to decide which agent should handle a given task. Instead of agents making ad-hoc decisions, the orchestrator ingests messages, interprets them using an LLM, and routes them to the right agent.

To support this orchestration pattern with Flink, Kafka is used as the messaging backbone and Flink is the processing engine:

Powering Multi-Agent Orchestration with Flink

- Message Production:
- Flink Processing & Routing:
  - A Flink job listens to new messages in Kafka.
  - The message is passed to an LLM, which determines the most appropriate agent to handle it.
  - The LLM’s decision is based on a structured Agent Definition, which includes:
    - Agent Name – Unique identifier for the agent.
    - Description – The agent’s primary function.
    - Input – Expected data format the agent processes, enforced by a data contract.
    - Output – The result the agent generates.
- Decision Output and Routing:
- Agent Execution & Continuation:
  - The agent processes the message and writes updates back to the agent messages topic.
  - The Flink job detects these updates, reevaluates if additional processing is required, and continues routing messages until the agent workflow is complete.
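The routing steps above can be sketched in plain Python to show how an Agent Definition feeds the decision. This is not Flink code and not the implementation from the demo: the `AgentDefinition` fields mirror the four items listed above, the two registered agents and their descriptions are made up for illustration, and `choose_agent` is a crude word-overlap stand-in for the LLM call so that the example runs without any external service.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class AgentDefinition:
    name: str           # unique identifier for the agent
    description: str    # the agent's primary function
    input_format: str   # expected input, enforced by a data contract
    output_format: str  # the result the agent generates


# Hypothetical registry; names and descriptions are illustrative only.
AGENTS = [
    AgentDefinition("lead_scoring", "score and prioritize a lead profile",
                    "lead profile JSON", "lead score JSON"),
    AgentDefinition("send_email", "schedule email campaign for delivery",
                    "email list JSON", "delivery status JSON"),
]


def build_prompt(message: str, agents: list[AgentDefinition]) -> str:
    """Render the agent definitions and the message into a routing prompt."""
    lines = ["Pick the agent best suited to handle the message.", "Agents:"]
    for agent in agents:
        lines.append(
            f"- {agent.name}: {agent.description} "
            f"(input: {agent.input_format}; output: {agent.output_format})"
        )
    lines.append(f"Message: {message}")
    lines.append("Answer with the agent name only.")
    return "\n".join(lines)


def choose_agent(message: str, agents: list[AgentDefinition]) -> str | None:
    """Stand-in for the LLM: pick the agent whose description shares the
    most words with the message, or None when nothing overlaps."""
    words = set(message.lower().split())
    best_name, best_overlap = None, 0
    for agent in agents:
        overlap = len(words & set(agent.description.lower().split()))
        if overlap > best_overlap:
            best_name, best_overlap = agent.name, overlap
    return best_name
```

In a real system `build_prompt` would be sent to the model and its answer used in place of `choose_agent`; the point of the sketch is that adding an agent means adding a definition, not editing routing code.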

Closing the Loop

This event-driven feedback loop allows multi-agent systems to function autonomously and efficiently, ensuring:

- Real-time decision-making with no hardcoded workflows.
- Scalable execution with decentralized agent interactions.
- Seamless adaptability to new inputs and system changes.

In the next section, we’ll walk through an example implementation of this architecture, including Flink job definitions, Kafka topics, and LLM-based decision-making.

Building an Event-Driven Multi-Agent System: A Hands-On Implementation

In previous sections, we explored the orchestrator pattern and why event-driven architectures are essential for scaling multi-agent systems. Now, we’ll show how this architecture works by walking through a real-world use case: an AI-driven sales development representative (SDR) system that autonomously manages leads.

Event-Driven AI-Based SDR Using a Multi-Agent System

To implement this system, we use Confluent Cloud, a fully managed service for Apache Kafka and Flink.

The AI SDR Multi-Agent System

The system consists of multiple specialized agents that handle different stages of the lead qualification and engagement process. Each agent has a defined role and operates independently within an event-driven pipeline.

Agents in the AI SDR System

- Lead Ingestion Agent: Captures raw lead data, enriches it with additional research, and generates a lead profile.
- Lead Scoring Agent: Analyzes lead data to assign a priority score and determine the best engagement strategy.
- Active Outreach Agent: Uses lead details and scores to generate personalized outreach messages.
- Nurture Campaign Agent: Dynamically creates a sequence of emails based on where the lead originated and what their interest was.
- Send Email Agent: Takes in emails and sets up the campaign to send them.

The agents have no explicit dependencies on each other. They simply produce and consume events independently.
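To illustrate what "no explicit dependencies" means in practice, the following toy loop simulates the pattern in memory. It makes several simplifying assumptions: a `deque` stands in for the agent messages topic, the three agent functions and their message types are hypothetical, and the `ROUTES` lookup is a placeholder for the orchestrator's LLM decision (the real system does not hard-code routing). The `max_steps` cap is my own addition as a simple guard against runaway task generation.

```python
from __future__ import annotations

from collections import deque


# Hypothetical agents: each reads one message and returns an update,
# or None when there is nothing further to route.
def lead_ingestion(msg: dict) -> dict | None:
    return {"type": "lead_profile", "lead": msg["lead"]}


def lead_scoring(msg: dict) -> dict | None:
    return {"type": "lead_score", "lead": msg["lead"], "score": 80}  # made-up score


def active_outreach(msg: dict) -> dict | None:
    return None  # workflow ends here


# Placeholder for the orchestrator's decision; the article uses an LLM instead.
ROUTES = {
    "raw_lead": lead_ingestion,
    "lead_profile": lead_scoring,
    "lead_score": active_outreach,
}


def run_workflow(topic: deque, max_steps: int = 100) -> list[str]:
    """Drain the topic, routing each message and appending agent updates
    back onto it. Returns the names of the agents that ran, in order."""
    handled = []
    steps = 0
    while topic and steps < max_steps:
        msg = topic.popleft()
        steps += 1
        agent = ROUTES.get(msg["type"])
        if agent is None:
            continue  # no agent can handle this message; drop it
        handled.append(agent.__name__)
        update = agent(msg)
        if update is not None:
            topic.append(update)  # the update becomes a new event to route
    return handled
```

Notice that no agent function references another: each one only consumes a message and produces a message, and the order of execution emerges from what lands on the shared topic.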

How Orchestration Works in Flink SQL

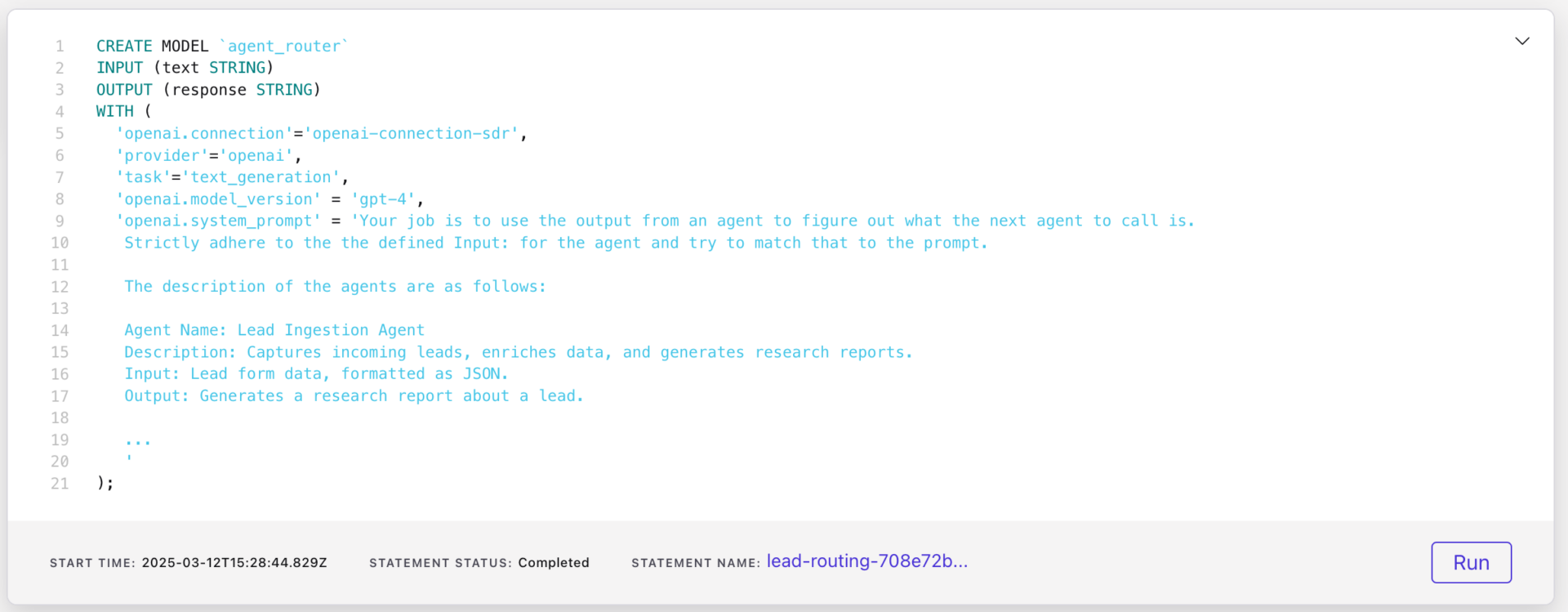

To determine which agent should process an incoming message, the orchestrator uses external model inference in Flink. This model receives the message, evaluates its content, and assigns it to the correct agent based on predefined functions.

The Flink SQL statement to set up the model is shown below with an abbreviated version of the prompt used for performing the mapping operation.

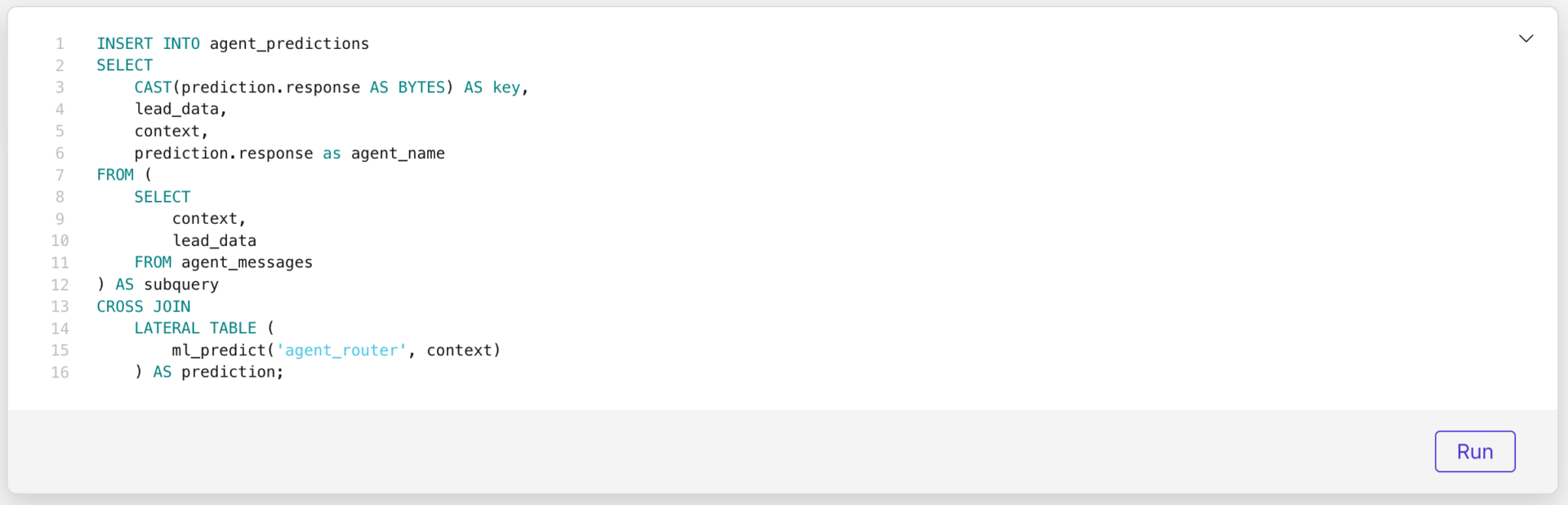

After creating the model, we create a Flink job that uses this model to process incoming messages and assign them to the correct agent:

This automatically routes messages to the appropriate agent, ensuring a seamless, intelligent workflow. Each agent processes its task and writes updates back to Kafka, allowing the next agent in the pipeline to take action.

Executing Outreach

In the demo application, leads are written from a website into MongoDB. A source connector for MongoDB sends the leads into an incoming leads topic, where they’re copied into the agent messages topic.

This action kick-starts the AI SDR automated process.

The query above shows that all decision making and evaluation is left to the orchestrator with no routing logic hard-coded. The LLM is reasoning on the best action to take based upon agent descriptions and the payloads routed through the agent messages topic. In this way, we’ve built an orchestrator with only a few lines of code with the heavy lifting done by the LLM.

Wrapping Up: The Future of Event-Driven Multi-Agent Systems

The AI SDR system we’ve explored demonstrates how event-driven architectures enable multi-agent systems to operate efficiently, making real-time decisions without rigid workflows. By leveraging Flink for message processing and routing and Kafka for short-term shared memory, we achieve a scalable, autonomous orchestration framework that allows agents to collaborate dynamically.

The key takeaway is that agents are essentially stateful microservices with a brain, and the same event-driven principles that scaled microservices apply to multi-agent systems. Instead of static, predefined workflows, we enable systems and teams to be decoupled and to adapt dynamically, reacting to new data as it arrives.

While this blog post focused on the orchestrator pattern, it’s important to note that other patterns can be supported as well. In some cases, more explicit dependencies between agents are necessary to ensure reliability, consistency, or domain-specific constraints. For example, certain workflows may require a strict sequence of agent execution to guarantee transactional integrity or regulatory compliance. The key is finding the right balance between flexibility and control depending on the application’s needs.

If you’re interested in building your own event-driven agent system, check out the GitHub repository for the full implementation, including Flink SQL examples and Kafka configurations.