For a lot of essential software features, together with streaming and e-commerce, monolithic structure is not enough. With present calls for for real-time occasion information and cloud service utilization, many fashionable functions, akin to Netflix and Lyft, have shifted to an event-driven microservices method. Separated microservices can function independently of each other and improve a code base’s adaptability and scalability.

However what’s an event-driven microservices structure, and why must you use it? We’ll study the foundational facets and create a whole blueprint for an event-driven microservices mission utilizing Python and Apache Kafka.

Utilizing Occasion-driven Microservices

Occasion-driven microservices mix two fashionable structure patterns: microservices architectures and event-driven architectures. Although microservices can pair with request-driven REST architectures, event-driven architectures have gotten more and more related with the rise of massive information and cloud platform environments.

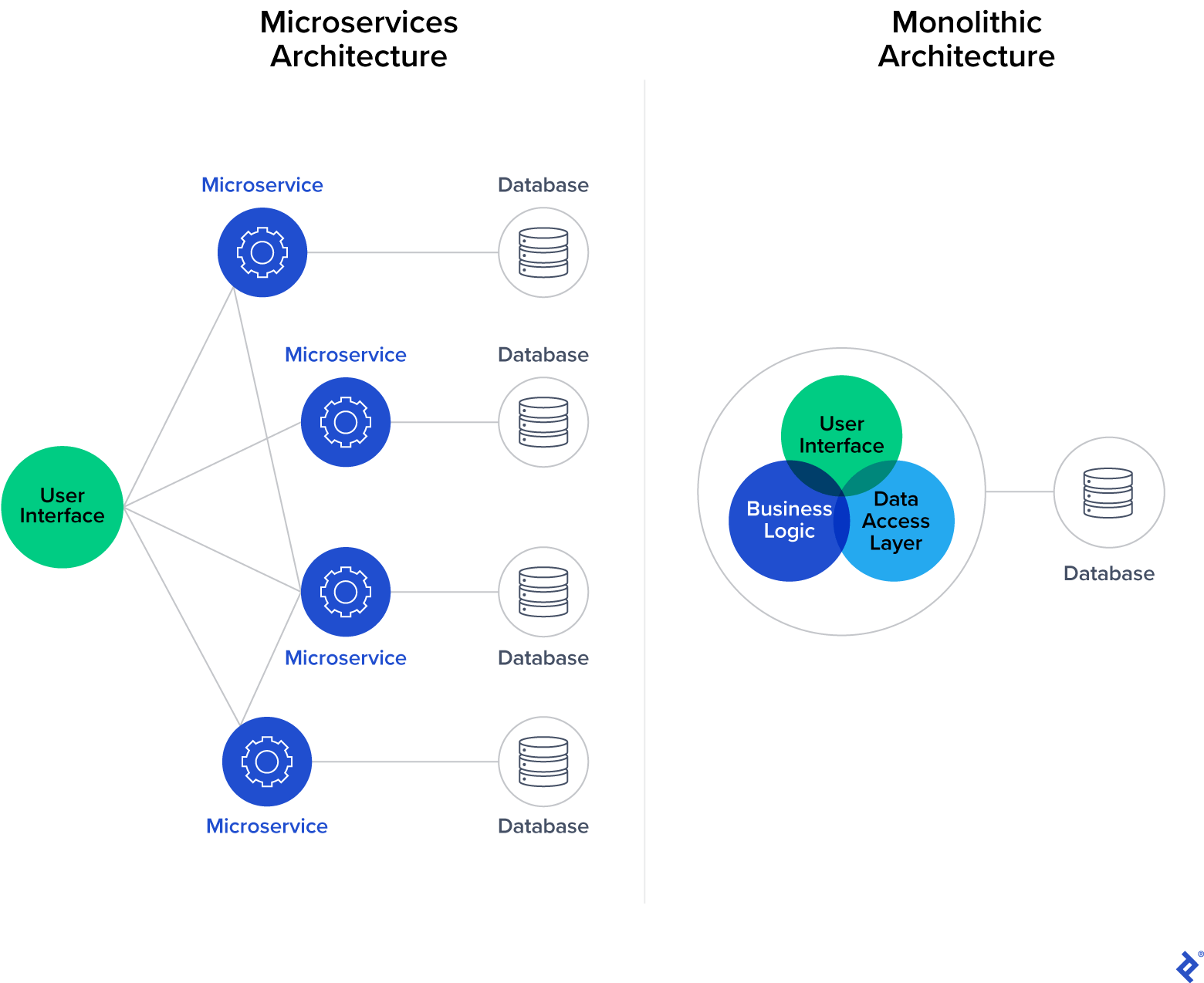

What Is a Microservices Structure?

A microservices structure is a software program improvement approach that organizes an software’s processes as loosely coupled companies. It’s a sort of service-oriented structure (SOA).

In a conventional monolithic construction, all software processes are inherently interconnected; if one half fails, the system goes down. Microservices architectures as an alternative group software processes into separate companies interacting with light-weight protocols, offering improved modularity and higher app maintainability and resiliency.

Although monolithic functions could also be easier to develop, debug, take a look at, and deploy, most enterprise-level functions flip to microservices as their normal, which permits builders to personal parts independently. Profitable microservices must be saved so simple as attainable and talk utilizing messages (occasions) which might be produced and despatched to an occasion stream or consumed from an occasion stream. JSON, Apache Avro, and Google Protocol Buffers are widespread selections for information serialization.

What Is an Occasion-driven Structure?

An event-driven structure is a design sample that buildings software program in order that occasions drive the habits of an software. Occasions are significant information generated by actors (i.e., human customers, exterior functions, or different companies).

Our instance mission options this structure; at its core is an event-streaming platform that manages communication in two methods:

- Receiving messages from actors that write them (normally known as publishers or producers)

- Sending messages to different actors that learn them (normally known as subscribers or customers)

In additional technical phrases, our event-streaming platform is software program that acts because the communication layer between companies and permits them to alternate messages. It could implement a wide range of messaging patterns, akin to publish/subscribe or point-to-point messaging, in addition to message queues.

Utilizing an event-driven structure with an event-streaming platform and microservices presents a wealth of advantages:

- Asynchronous communications: The power to independently multitask permits companies to react to occasions each time they’re prepared as an alternative of ready on a earlier job to complete earlier than beginning the subsequent one. Asynchronous communications facilitate real-time information processing and make functions extra reactive and maintainable.

- Full decoupling and suppleness: The separation of producer and client parts signifies that companies solely have to work together with the event-streaming platform and the info format they will produce or devour. Companies can comply with the single duty precept and scale independently. They’ll even be applied by separate improvement groups utilizing distinctive know-how stacks.

- Reliability and scalability: The asynchronous, decoupled nature of event-driven architectures additional amplifies app reliability and scalability (that are already benefits of microservices structure design).

With event-driven architectures, it’s straightforward to create companies that react to any system occasion. You can even create semi-automatic pipelines that embody some guide actions. (For instance, a pipeline for automated consumer payouts would possibly embody a guide safety verify triggered by unusually massive payout values earlier than transferring funds.)

Selecting the Venture Tech Stack

We are going to create our mission utilizing Python and Apache Kafka paired with Confluent Cloud. Python is a strong, dependable normal for a lot of varieties of software program initiatives; it boasts a big neighborhood and plentiful libraries. It’s a sensible choice for creating microservices as a result of its frameworks are suited to REST and event-driven functions (e.g., Flask and Django). Microservices written in Python are additionally generally used with Apache Kafka.

Apache Kafka is a well known event-streaming platform that makes use of a publish/subscribe messaging sample. It’s a widespread selection for event-driven architectures as a consequence of its intensive ecosystem, scalability (the results of its fault-tolerance skills), storage system, and stream processing skills.

Lastly, we’ll use Confluent as our cloud platform to effectively handle Kafka and supply out-of-the-box infrastructure. AWS MSK is one other glorious choice in case you’re utilizing AWS infrastructure, however Confluent is simpler to arrange as Kafka is the core a part of its system and it presents a free tier.

Implementing the Venture Blueprint

We’ll arrange our Kafka microservices instance in Confluent Cloud, create a easy message producer, then arrange and enhance it to optimize scalability. By the tip of this tutorial, we could have a functioning message producer that efficiently sends information to our cloud cluster.

Kafka Setup

We’ll first create a Kafka cluster. Kafka clusters host Kafka servers that facilitate communication. Producers and customers interface with the servers utilizing Kafka subjects (classes storing information).

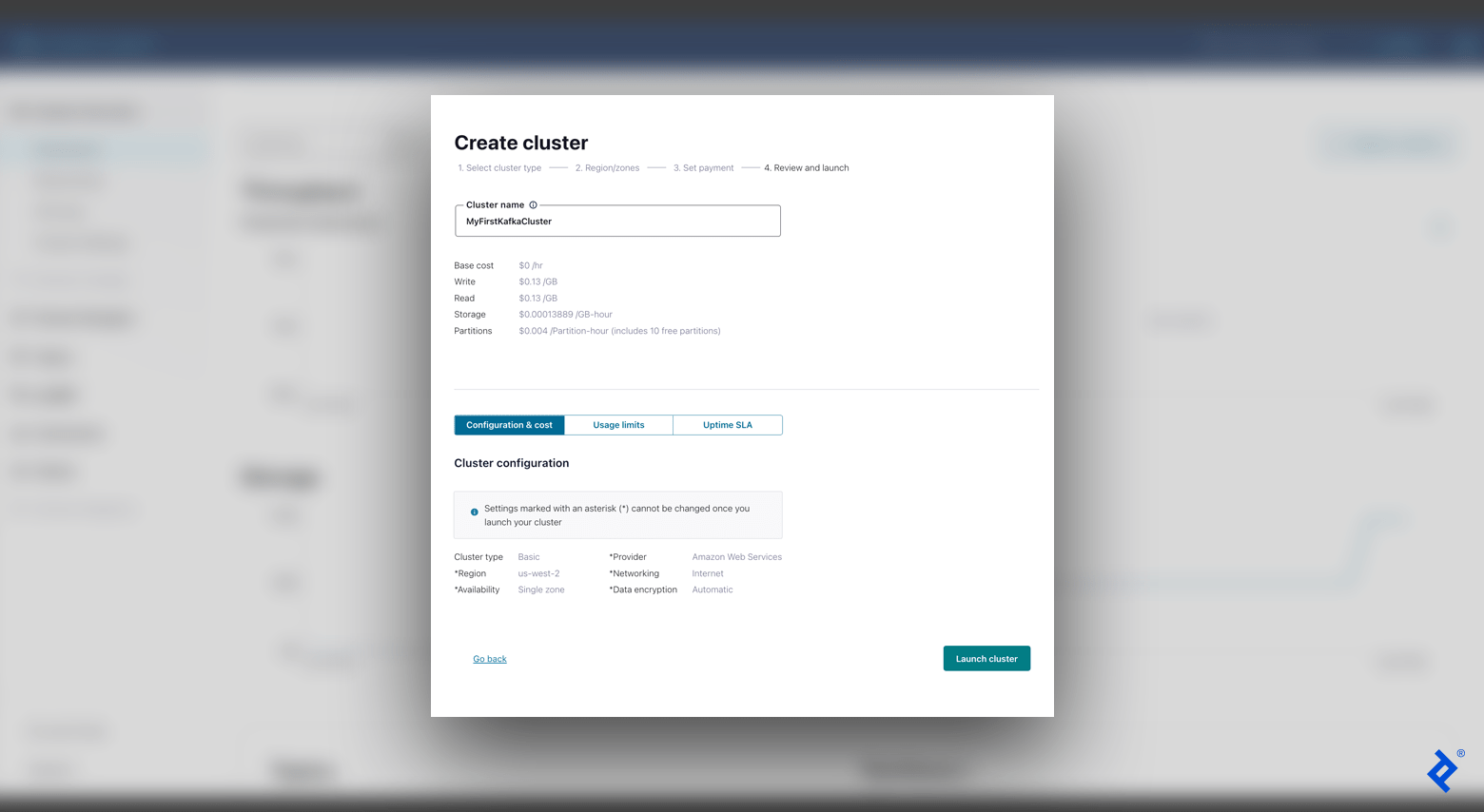

- Join Confluent Cloud. When you create an account, the welcome web page seems with choices for creating a brand new Kafka cluster. Choose the Fundamental configuration.

- Select a cloud supplier and area. It is best to optimize your selections for the most effective cloud ping outcomes out of your location. One choice is to decide on AWS and carry out a cloud ping take a look at (click on HTTP Ping) to establish the most effective area. (For the scope of our tutorial, we’ll depart the “Single zone” choice chosen within the “Availability” discipline.)

- The following display screen asks for a cost setup, which we will skip since we’re on a free tier. After that, we’ll enter our cluster title (e.g., “MyFirstKafkaCluster”), affirm our settings, and choose Launch cluster.

With a working cluster, we’re able to create our first subject. Within the left-hand menu bar, navigate to Matters and click on Create subject. Add a subject title (e.g., “MyFirstKafkaTopic”) and proceed with the default configurations (together with setting six partitions).

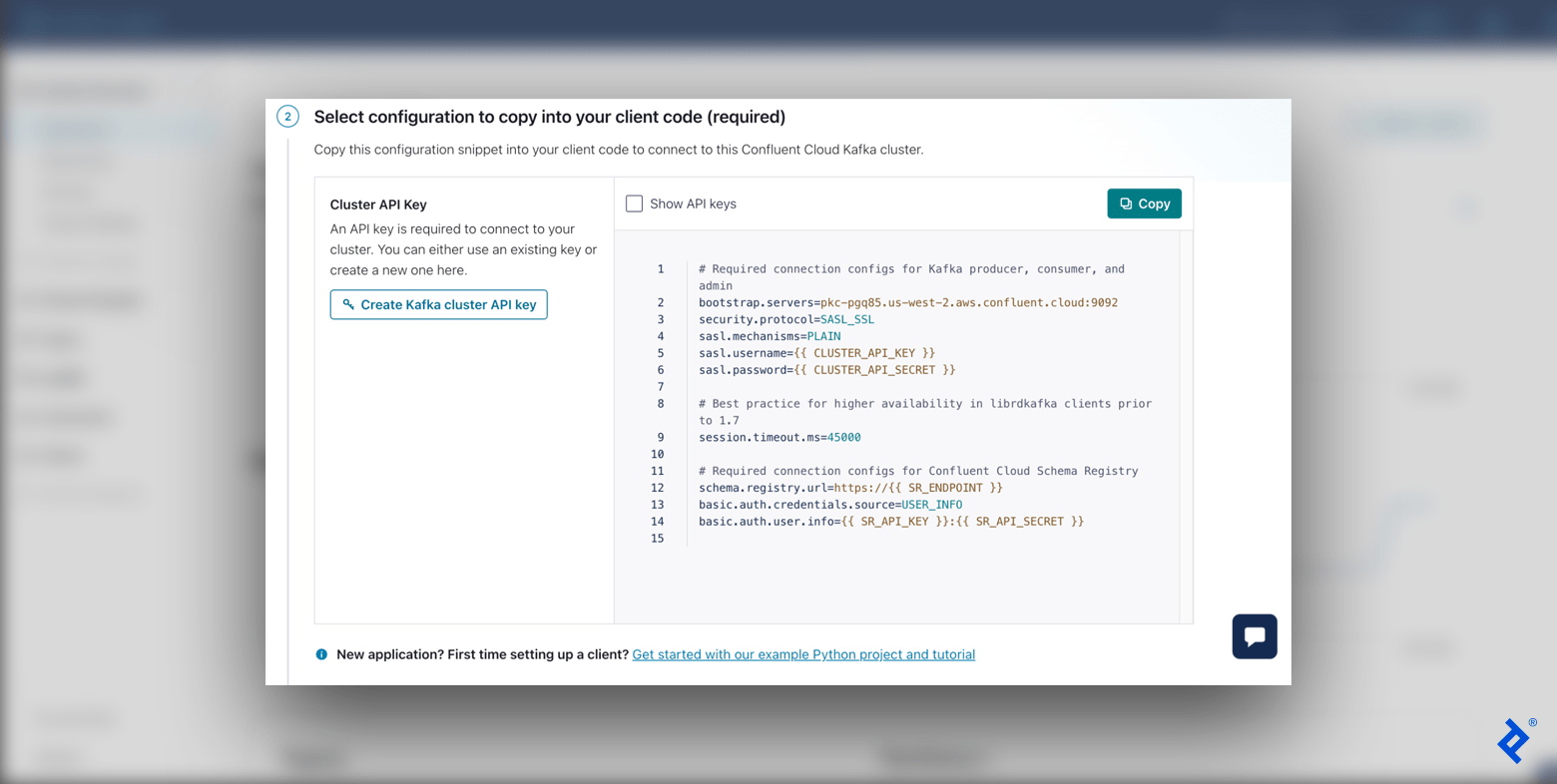

Earlier than creating our first message, we should arrange our consumer. We will simply Configure a consumer from our newly created subject overview (alternatively, within the left-hand menu bar, navigate to Shoppers). We’ll use Python as our language after which click on Create Kafka cluster API key.

At this level, our event-streaming platform is lastly able to obtain messages from our producer.

Easy Message Producer

Our producer generates occasions and sends them to Kafka. Let’s write some code to create a easy message producer. I like to recommend organising a digital atmosphere for our mission since we can be putting in a number of packages in our surroundings.

First, we’ll add our surroundings variables from the API configuration from Confluent Cloud. To do that in our digital atmosphere, we’ll add export SETTING=worth for every setting beneath to the tip of our activate file (alternatively, you may add SETTING=worth to your .env file):

export KAFKA_BOOTSTRAP_SERVERS=<bootstrap.servers>

export KAFKA_SECURITY_PROTOCOL=<safety.protocol>

export KAFKA_SASL_MECHANISMS=<sasl.mechanisms>

export KAFKA_SASL_USERNAME=<sasl.username>

export KAFKA_SASL_PASSWORD=<sasl.password>

Be sure to interchange every entry along with your Confluent Cloud values (for instance, <sasl.mechanisms> must be PLAIN), along with your API key and secret because the username and password. Run supply env/bin/activate, then printenv. Our new settings ought to seem, confirming that our variables have been appropriately up to date.

We can be utilizing two Python packages:

We’ll run the command pip set up confluent-kafka python-dotenv to put in these. There are a lot of different packages for Kafka in Python which may be helpful as you develop your mission.

Lastly, we’ll create our fundamental producer utilizing our Kafka settings. Add a simple_producer.py file:

# simple_producer.py

import os

from confluent_kafka import KafkaException, Producer

from dotenv import load_dotenv

def predominant():

settings = {

'bootstrap.servers': os.getenv('KAFKA_BOOTSTRAP_SERVERS'),

'safety.protocol': os.getenv('KAFKA_SECURITY_PROTOCOL'),

'sasl.mechanisms': os.getenv('KAFKA_SASL_MECHANISMS'),

'sasl.username': os.getenv('KAFKA_SASL_USERNAME'),

'sasl.password': os.getenv('KAFKA_SASL_PASSWORD'),

}

producer = Producer(settings)

producer.produce(

subject='MyFirstKafkaTopic',

key=None,

worth='MyFirstValue-111',

)

producer.flush() # Watch for the affirmation that the message was obtained

if __name__ == '__main__':

load_dotenv()

predominant()

With this easy code we create our producer and ship it a easy take a look at message. To check the consequence, run python3 simple_producer.py:

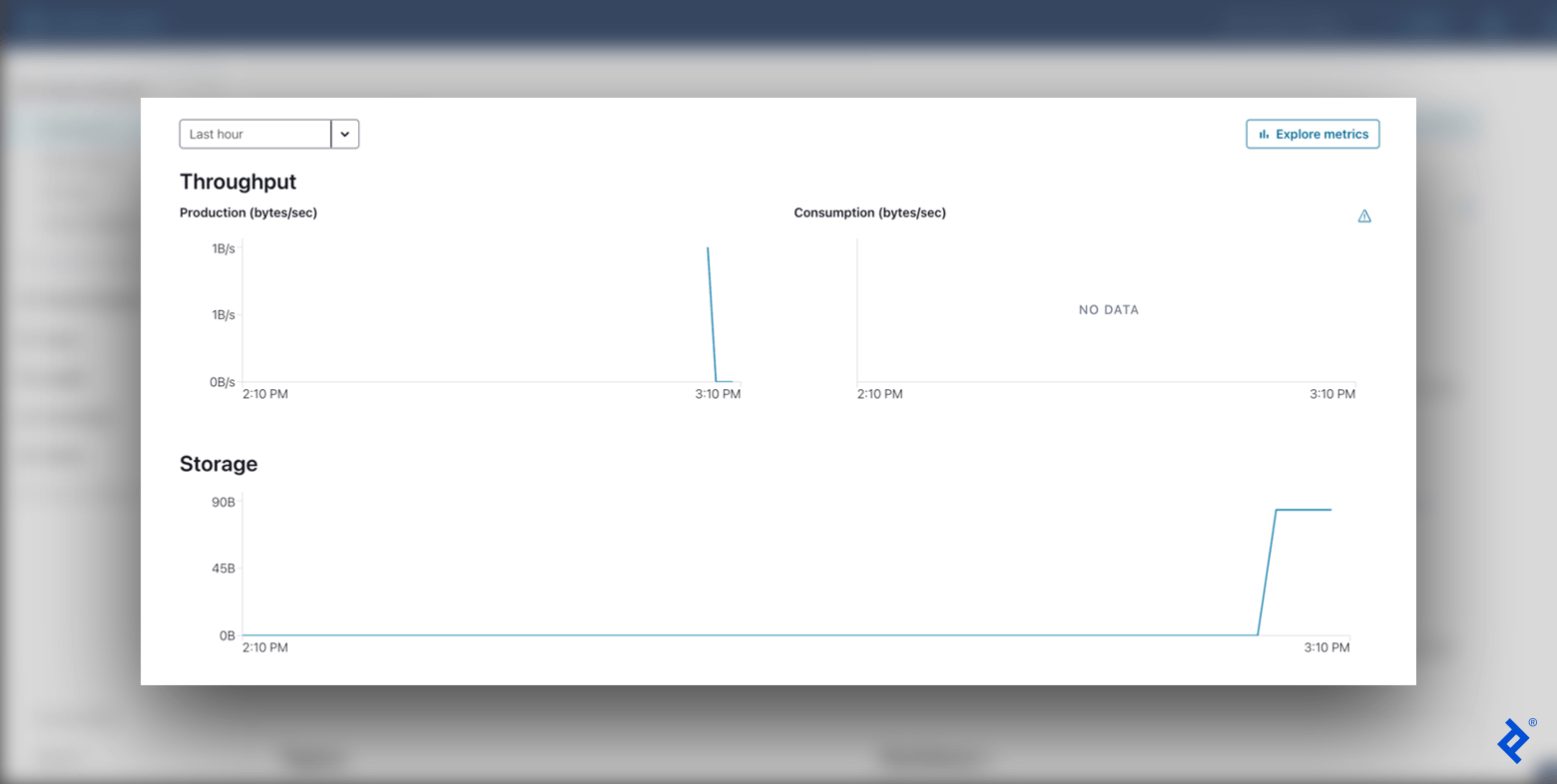

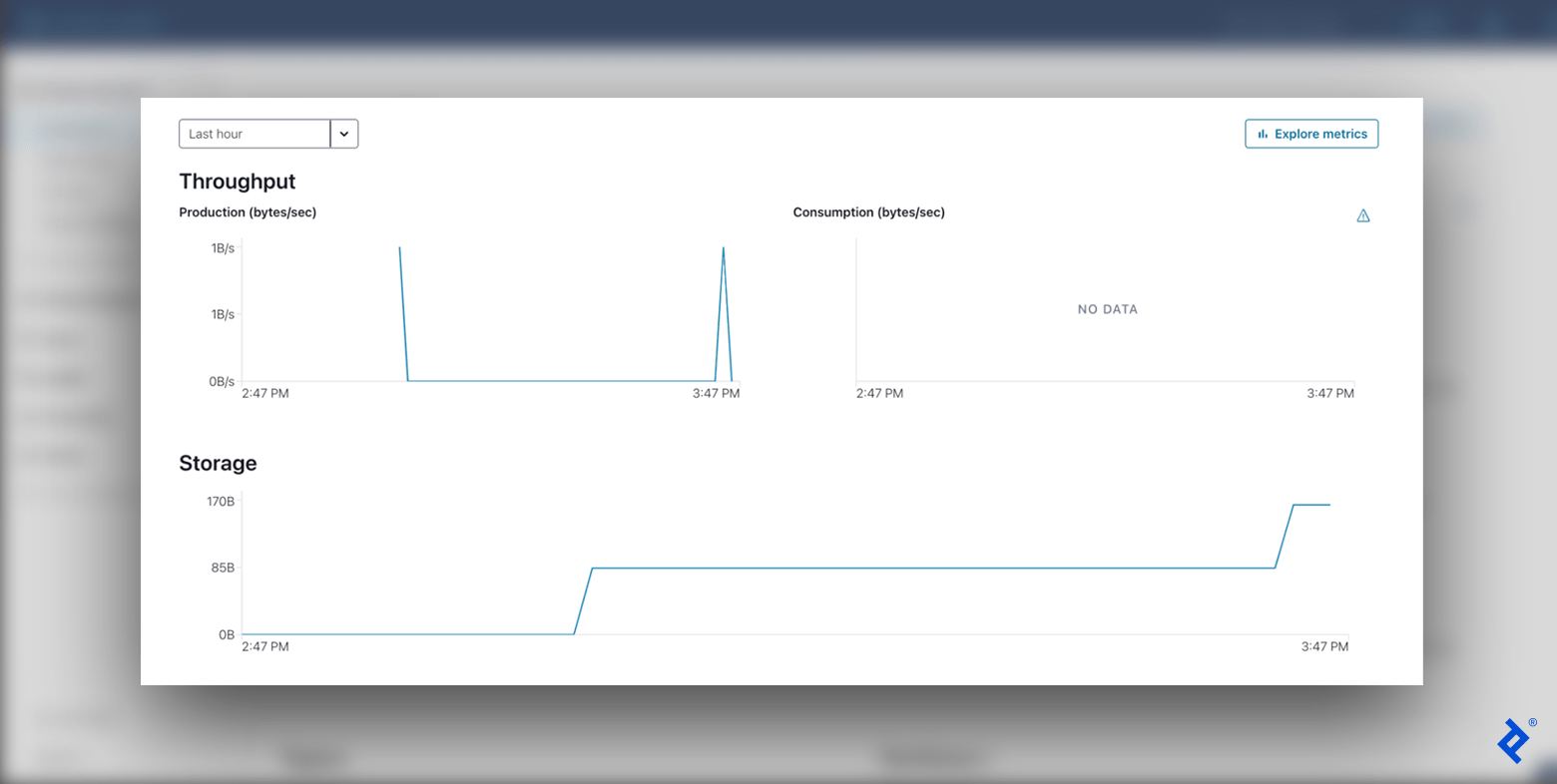

Checking our Kafka cluster’s Cluster Overview > Dashboard, we’ll see a brand new information level on our Manufacturing graph for the message despatched.

Customized Message Producer

Our producer is up and operating. Let’s reorganize our code to make our mission extra modular and OOP-friendly. This can make it simpler so as to add companies and scale our mission sooner or later. We’ll break up our code into 4 recordsdata:

-

kafka_settings.py: Holds our Kafka configurations. -

kafka_producer.py: Incorporates a customizedproduce()technique and error dealing with. -

kafka_producer_message.py: Handles completely different enter information varieties. -

advanced_producer.py: Runs our remaining app utilizing our customized lessons.

First, our KafkaSettings class will encapsulate our Apache Kafka settings, so we will simply entry these from our different recordsdata with out repeating code:

# kafka_settings.py

import os

class KafkaSettings:

def __init__(self):

self.conf = {

'bootstrap.servers': os.getenv('KAFKA_BOOTSTRAP_SERVERS'),

'safety.protocol': os.getenv('KAFKA_SECURITY_PROTOCOL'),

'sasl.mechanisms': os.getenv('KAFKA_SASL_MECHANISMS'),

'sasl.username': os.getenv('KAFKA_SASL_USERNAME'),

'sasl.password': os.getenv('KAFKA_SASL_PASSWORD'),

}

Subsequent, our KafkaProducer permits us to customise our produce() technique with help for numerous errors (e.g., an error when the message measurement is just too massive), and likewise mechanically flushes messages as soon as produced:

# kafka_producer.py

from confluent_kafka import KafkaError, KafkaException, Producer

from kafka_producer_message import ProducerMessage

from kafka_settings import KafkaSettings

class KafkaProducer:

def __init__(self, settings: KafkaSettings):

self._producer = Producer(settings.conf)

def produce(self, message: ProducerMessage):

strive:

self._producer.produce(message.subject, key=message.key, worth=message.worth)

self._producer.flush()

besides KafkaException as exc:

if exc.args[0].code() == KafkaError.MSG_SIZE_TOO_LARGE:

move # Deal with the error right here

else:

increase exc

In our instance’s try-except block, we skip over the message whether it is too massive for the Kafka cluster to devour. Nevertheless, you need to replace your code in manufacturing to deal with this error appropriately. Confer with the confluent-kafka documentation for an entire checklist of error codes.

Now, our ProducerMessage class handles several types of enter information and appropriately serializes them. We’ll add performance for dictionaries, Unicode strings, and byte strings:

# kafka_producer_message.py

import json

class ProducerMessage:

def __init__(self, subject: str, worth, key=None) -> None:

self.subject = f'{subject}'

self.key = key

self.worth = self.convert_value_to_bytes(worth)

@classmethod

def convert_value_to_bytes(cls, worth):

if isinstance(worth, dict):

return cls.from_json(worth)

if isinstance(worth, str):

return cls.from_string(worth)

if isinstance(worth, bytes):

return cls.from_bytes(worth)

increase ValueError(f'Improper message worth sort: {sort(worth)}')

@classmethod

def from_json(cls, worth):

return json.dumps(worth, indent=None, sort_keys=True, default=str, ensure_ascii=False)

@classmethod

def from_string(cls, worth):

return worth.encode('utf-8')

@classmethod

def from_bytes(cls, worth):

return worth

Lastly, we will construct our app utilizing our newly created lessons in advanced_producer.py:

# advanced_producer.py

from dotenv import load_dotenv

from kafka_producer import KafkaProducer

from kafka_producer_message import ProducerMessage

from kafka_settings import KafkaSettings

def predominant():

settings = KafkaSettings()

producer = KafkaProducer(settings)

message = ProducerMessage(

subject='MyFirstKafkaTopic',

worth={"worth": "MyFirstKafkaValue"},

key=None,

)

producer.produce(message)

if __name__ == '__main__':

load_dotenv()

predominant()

We now have a neat abstraction above the confluent-kafka library. Our customized producer possesses the identical performance as our easy producer with added scalability and suppleness, able to adapt to numerous wants. We might even change the underlying library totally if we wished to, which units our mission up for fulfillment and long-term maintainability.

After operating python3 advanced_producer.py, we see but once more that information has been despatched to our cluster within the Cluster Overview > Dashboard panel of Confluent Cloud. Having despatched one message with the straightforward producer, and a second with our customized producer, we now see two spikes in manufacturing throughput and a rise in total storage used.

Wanting Forward: From Producers to Customers

An event-driven microservices structure will improve your mission and enhance its scalability, flexibility, reliability, and asynchronous communications. This tutorial has given you a glimpse of those advantages in motion. With our enterprise-scale producer up and operating, sending messages efficiently to our Kafka dealer, the subsequent steps can be to create a client to learn these messages from different companies and add Docker to our software.

The editorial workforce of the Toptal Engineering Weblog extends its gratitude to E. Deniz Toktay for reviewing the code samples and different technical content material offered on this article.