Giant language fashions, also called basis fashions, have gained important traction within the subject of machine studying. These fashions are pre-trained on giant datasets, which permits them to carry out effectively on a wide range of duties with out requiring as a lot coaching information. Study how one can simply deploy a pre-trained basis mannequin utilizing the DataRobot MLOps capabilities, then put the mannequin into manufacturing. By leveraging the ability of a pre-trained mannequin, it can save you time and assets whereas nonetheless reaching excessive efficiency in your machine studying purposes.

What Are Giant Language Fashions?

The creation of basis fashions is among the key developments within the subject of huge language fashions that’s creating a number of pleasure and curiosity amongst information scientists and machine studying engineers. These fashions are skilled on large quantities of textual content information utilizing deep studying algorithms. They’ve the power to generate human-like language that’s coherent and related in a given context and to course of and perceive pure language at a stage that was beforehand considered not possible. In consequence, they’ve the potential to revolutionize the way in which that we work together with machines and clear up a variety of machine studying issues.

These developments have allowed researchers to create fashions that may carry out a variety of pure language processing duties, akin to machine translation, summarization, query answering and even dialogue technology. They can be used for inventive duties, akin to producing life like textual content, which will be helpful for a wide range of purposes, akin to producing product descriptions or creating information articles.

Total, the current developments in giant language fashions are very thrilling, and have the potential to significantly enhance our skill to unravel machine studying issues and work together with machines in a extra pure and intuitive approach.

Get Began with Language Fashions Utilizing Hugging Face

As many machine studying practitioners already know, one straightforward option to get began with language fashions is through the use of Hugging Face. Hugging Face mannequin hub is a platform providing a group of pre-trained fashions that may be simply downloaded and used for a variety of pure language processing duties.

To get began with a language mannequin from the Hugging Face mannequin hub, you merely want to put in the Hugging Face library in your native pocket book or DataRobot Notebooks if that’s what you utilize. In case you already run your experiments on the DataRobot GUI, you might even add it as a customized job.

As soon as put in, you’ll be able to select a mannequin that fits your wants. Then you need to use the mannequin to carry out duties akin to textual content technology, classification, and translation. The fashions are straightforward to make use of and will be fine-tuned to your particular wants, making them a strong device for fixing a wide range of pure language processing issues.

In case you don’t wish to arrange a neighborhood runtime atmosphere, you will get began with a Google Colab pocket book on a CPU/GPU/TPU runtime, obtain your mannequin, and get the mannequin predictions in just some traces.

For instance, getting began with a BERT mannequin for query answering (bert-large-uncased-whole-word-masking-finetuned-squad) is as straightforward as executing these traces:

!pip set up transformers==4.25.1

from transformers import AutoTokenizer, TFBertForQuestionAnswering

MODEL = "bert-large-uncased-whole-word-masking-finetuned-squad"

tokenizer = AutoTokenizer.from_pretrained(MODEL)

mannequin = TFBertForQuestionAnswering.from_pretrained(MODEL)Deploying Language Fashions to Manufacturing

After you check out some fashions, probably additional fine-tune them in your particular use circumstances, and get them prepared for manufacturing, you’ll want a serving atmosphere to host your artifacts. Apart from simply an atmosphere to serve the mannequin, you’ll want to watch its efficiency, well being, information and prediction drift, and a simple approach of retraining it with out disturbing your manufacturing workflows and your downstream purposes that devour your mannequin’s output.

That is the place the DataRobot MLOps comes into play. DataRobot MLOps providers present a platform for internet hosting and deploying customized mannequin packages in varied ML frameworks akin to PyTorch, Tensorflow, ONNX, and sk-learn, permitting organizations to simply combine their pre-trained fashions into their present purposes and devour them for his or her enterprise wants.

To host a pre-trained language mannequin on DataRobot MLOps providers, you merely must add the mannequin to the platform, construct its runtime atmosphere along with your customized dependency packages, and deploy it on DataRobot servers. Your deployment will likely be prepared in a couple of minutes, after which you’ll be able to ship your prediction requests to your deployment endpoint and luxuriate in your mannequin in manufacturing.

Whereas you are able to do all these operations from the DataRobot UI, right here we’ll present you methods to implement the end-to-end workflow, utilizing the Datarobot API in a pocket book atmosphere. So, let’s get began!

You’ll be able to observe alongside this tutorial by creating a brand new Google Colab pocket book or by copying our pocket book from our DataRobot Neighborhood Repository and operating the copied pocket book on Google Colab.

Set up dependencies

!pip set up transformers==4.25.1 datarobot==3.0.2

from transformers import AutoTokenizer, TFBertForQuestionAnswering

import numpy as npObtain the BERT mannequin from HuggingFace on the pocket book atmosphere

MODEL = "bert-large-uncased-whole-word-masking-finetuned-squad"

tokenizer = AutoTokenizer.from_pretrained(MODEL)

mannequin = TFBertForQuestionAnswering.from_pretrained(MODEL)

BASE_PATH = "/content material/datarobot_blogpost"

tokenizer.save_pretrained(BASE_PATH)

mannequin.save_pretrained(BASE_PATH)

Deploy to DataRobot

Create the inference (glue) script, ie. the customized.py file.

This inference script (customized.py file) acts because the glue between your mannequin artifacts and the Customized Mannequin execution in DataRobot. If that is the primary time you’re making a customized mannequin on DataRobot MLOps, our public repository will likely be a terrific start line, with many extra examples for mannequin templates in several ML frameworks and for various mannequin sorts, akin to binary or multiclass classification, regression, anomaly detection, or unstructured fashions just like the one we’ll be constructing in our instance.

%%writefile $BASE_PATH/customized.py

"""

Copyright 2021 DataRobot, Inc. and its associates.

All rights reserved.

That is proprietary supply code of DataRobot, Inc. and its associates.

Launched underneath the phrases of DataRobot Software and Utility Settlement.

"""

import json

import os.path

import os

import tensorflow as tf

import pandas as pd

from transformers import AutoTokenizer, TFBertForQuestionAnswering

import io

def load_model(input_dir):

tokenizer = AutoTokenizer.from_pretrained(input_dir)

tf_model = TFBertForQuestionAnswering.from_pretrained(

input_dir, return_dict=True

)

return tf_model, tokenizer

def log_for_drum(msg):

os.write(1, f"n{msg}n".encode("UTF-8"))

def _get_answer_in_text(output, input_ids, idx, tokenizer):

answer_start = tf.argmax(output.start_logits, axis=1).numpy()[idx]

answer_end = (tf.argmax(output.end_logits, axis=1) + 1).numpy()[idx]

reply = tokenizer.convert_tokens_to_string(

tokenizer.convert_ids_to_tokens(input_ids[answer_start:answer_end])

)

return reply

def score_unstructured(mannequin, information, question, **kwargs):

world model_load_duration

tf_model, tokenizer = mannequin

# Assume batch enter is shipped with mimetype:"textual content/csv"

# Deal with as single prediction enter if no mimetype is about

is_batch = kwargs["mimetype"] == "textual content/csv"

if is_batch:

input_pd = pd.read_csv(io.StringIO(information), sep="|")

input_pairs = checklist(zip(input_pd["abstract"], input_pd["question"]))

begin = time.time()

inputs = tokenizer.batch_encode_plus(

input_pairs, add_special_tokens=True, padding=True, return_tensors="tf"

)

input_ids = inputs["input_ids"].numpy()

output = tf_model(inputs)

responses = []

for i, row in input_pd.iterrows():

reply = _get_answer_in_text(output, input_ids[i], i, tokenizer)

response = {

"summary": row["abstract"],

"query": row["question"],

"reply": reply,

}

responses.append(response)

pred_duration = time.time() - begin

to_return = json.dumps(

{

"predictions": responses,

"pred_duration": pred_duration,

}

)

else:

data_dict = json.masses(information)

summary, query = data_dict["abstract"], data_dict["question"]

begin = time.time()

inputs = tokenizer(

query,

summary,

add_special_tokens=True,

padding=True,

return_tensors="tf",

)

input_ids = inputs["input_ids"].numpy()[0]

output = tf_model(inputs)

reply = _get_answer_in_text(output, input_ids, 0, tokenizer)

pred_duration = time.time() - begin

to_return = json.dumps(

{

"summary": summary,

"query": query,

"reply": reply,

"pred_duration": pred_duration,

}

)

return to_returnCreate the necessities file

%%writefile $BASE_PATH/necessities.txt

transformersAdd mannequin artifacts and inference script to DataRobot

import datarobot as dr

def deploy_to_datarobot(folder_path, env_name, model_name, descr):

API_TOKEN = "YOUR_API_TOKEN" #Please discuss with https://docs.datarobot.com/en/docs/platform/account-mgmt/acct-settings/api-key-mgmt.html to get your token

dr.Shopper(token=API_TOKEN, endpoint="https://app.datarobot.com/api/v2/")

onnx_execution_env = dr.ExecutionEnvironment.checklist(search_for=env_name)[0]

custom_model = dr.CustomInferenceModel.create(

identify=model_name,

target_type=dr.TARGET_TYPE.UNSTRUCTURED,

description=descr,

language="python"

)

print(f"Creating customized mannequin model on {onnx_execution_env}...")

model_version = dr.CustomModelVersion.create_clean(

custom_model_id=custom_model.id,

base_environment_id=onnx_execution_env.id,

folder_path=folder_path,

maximum_memory=4096 * 1024 * 1024,

)

print(f"Created {model_version}.")

variations = dr.CustomModelVersion.checklist(custom_model.id)

sorted_versions = sorted(variations, key=lambda v: v.label)

latest_version = sorted_versions[-1]

print("Constructing the execution atmosphere with dependency packages...")

build_info = dr.CustomModelVersionDependencyBuild.start_build(

custom_model_id=custom_model.id,

custom_model_version_id=latest_version.id,

max_wait=3600,

)

print(f"Setting construct accomplished with {build_info.build_status}.")

print("Creating mannequin deployment...")

default_prediction_server = dr.PredictionServer.checklist()[0]

deployment = dr.Deployment.create_from_custom_model_version(latest_version.id,

label=model_name,

description=descr,

default_prediction_server_id=default_prediction_server.id,

max_wait=600,

significance=None)

print(f"{deployment} is prepared!")

return deploymentCreate the mannequin deployment

deployment = deploy_to_datarobot(BASE_PATH,

"Keras",

"blog-bert-tf-questionAnswering",

"Pretrained BERT mannequin, fine-tuned on SQUAD for query answering")Check with prediction requests

The next script is designed to make predictions in opposition to your deployment, and you may seize the identical script by opening up your DataRobot account, going to the Deployments tab, opening the deployment you simply created, going to the Predictions tab, after which opening up the Prediction API Scripting Code -> Single part.

It’ll seem like the instance under the place you’ll see your individual API_KEY and DATAROBOT_KEY stuffed in.

"""

Utilization:

python datarobot-predict.py <input-file> [mimetype] [charset]

This instance makes use of the requests library which you'll be able to set up with:

pip set up requests

We extremely suggest that you just replace SSL certificates with:

pip set up -U urllib3[secure] certifi

"""

import sys

import json

import requests

API_URL = 'https://mlops-dev.dynamic.orm.datarobot.com/predApi/v1.0/deployments/{deployment_id}/predictionsUnstructured'

API_KEY = 'YOUR_API_KEY'

DATAROBOT_KEY = 'YOUR_DATAROBOT_KEY'

# Do not change this. It's enforced server-side too.

MAX_PREDICTION_FILE_SIZE_BYTES = 52428800 # 50 MB

class DataRobotPredictionError(Exception):

"""Raised if there are points getting predictions from DataRobot"""

def make_datarobot_deployment_unstructured_predictions(information, deployment_id, mimetype, charset):

"""

Make unstructured predictions on information supplied utilizing DataRobot deployment_id supplied.

See docs for particulars:

https://app.datarobot.com/docs/predictions/api/dr-predapi.html

Parameters

----------

information : bytes

Bytes information learn from supplied file.

deployment_id : str

The ID of the deployment to make predictions with.

mimetype : str

Mimetype describing information being despatched.

If mimetype begins with 'textual content/' or equal to 'software/json',

information will likely be decoded with supplied or default(UTF-8) charset

and handed into the 'score_unstructured' hook carried out in customized.py supplied with the mannequin.

In case of different mimetype values information is handled as binary and handed with out decoding.

charset : str

Charset ought to match the contents of the file, if file is textual content.

Returns

-------

information : bytes

Arbitrary information returned by unstructured mannequin.

Raises

------

DataRobotPredictionError if there are points getting predictions from DataRobot

"""

# Set HTTP headers. The charset ought to match the contents of the file.

headers = {

'Content material-Sort': '{};charset={}'.format(mimetype, charset),

'Authorization': 'Bearer {}'.format(API_KEY),

'DataRobot-Key': DATAROBOT_KEY,

}

url = API_URL.format(deployment_id=deployment_id)

# Make API request for predictions

predictions_response = requests.put up(

url,

information=information,

headers=headers,

)

_raise_dataroboterror_for_status(predictions_response)

# Return uncooked response content material

return predictions_response.content material

def _raise_dataroboterror_for_status(response):

"""Increase DataRobotPredictionError if the request fails together with the response returned"""

strive:

response.raise_for_status()

besides requests.exceptions.HTTPError:

err_msg = '{code} Error: {msg}'.format(

code=response.status_code, msg=response.textual content)

elevate DataRobotPredictionError(err_msg)

def datarobot_predict_file(filename, deployment_id, mimetype="textual content/csv", charset="utf-8"):

"""

Return an exit code on script completion or error. Codes > 0 are errors to the shell.

Additionally helpful as a utilization demonstration of

`make_datarobot_deployment_unstructured_predictions(information, deployment_id, mimetype, charset)`

"""

information = open(filename, 'rb').learn()

data_size = sys.getsizeof(information)

if data_size >= MAX_PREDICTION_FILE_SIZE_BYTES:

print((

'Enter file is just too giant: {} bytes. '

'Max allowed dimension is: {} bytes.'

).format(data_size, MAX_PREDICTION_FILE_SIZE_BYTES))

return 1

strive:

predictions = make_datarobot_deployment_unstructured_predictions(information, deployment_id, mimetype, charset)

return predictions

besides DataRobotPredictionError as exc:

pprint(exc)

return None

def datarobot_predict(input_dict, deployment_id, mimetype="software/json", charset="utf-8"):

"""

Return an exit code on script completion or error. Codes > 0 are errors to the shell.

Additionally helpful as a utilization demonstration of

`make_datarobot_deployment_unstructured_predictions(information, deployment_id, mimetype, charset)`

"""

information = json.dumps(input_dict).encode(charset)

data_size = sys.getsizeof(information)

if data_size >= MAX_PREDICTION_FILE_SIZE_BYTES:

print((

'Enter file is just too giant: {} bytes. '

'Max allowed dimension is: {} bytes.'

).format(data_size, MAX_PREDICTION_FILE_SIZE_BYTES))

return 1

strive:

predictions = make_datarobot_deployment_unstructured_predictions(information, deployment_id, mimetype, charset)

return json.masses(predictions)['answer']

besides DataRobotPredictionError as exc:

pprint(exc)

return NoneNow that we have now the auto-generated script to make our predictions, it’s time to ship a check prediction request. Let’s create a JSON to ask a query to our question-answering BERT mannequin. We’ll give it an extended summary for the knowledge, and the query primarily based on this summary.

test_input = {"summary": "Healthcare duties (e.g., affected person care through illness therapy) and biomedical analysis (e.g., scientific discovery of recent therapies) require skilled data that's restricted and costly. Basis fashions current clear alternatives in these domains because of the abundance of knowledge throughout many modalities (e.g., photographs, textual content, molecules) to coach basis fashions, in addition to the worth of improved pattern effectivity in adaptation on account of the price of skilled time and data. Additional, basis fashions might enable for improved interface design (§2.5: interplay) for each healthcare suppliers and sufferers to work together with AI programs, and their generative capabilities recommend potential for open-ended analysis issues like drug discovery. Concurrently, they arrive with clear dangers (e.g., exacerbating historic biases in medical datasets and trials). To responsibly unlock this potential requires participating deeply with the sociotechnical issues of knowledge sources and privateness in addition to mannequin interpretability and explainability, alongside efficient regulation of using basis fashions for each healthcare and biomedicine.", "query": "The place can we use basis fashions?"}

datarobot_predict(test_input, deployment.id)And see that our mannequin returns the reply within the mannequin response, as we anticipated.

> each healthcare and biomedicine

Simply Monitor Machine Studying Fashions with DataRobot MLOps

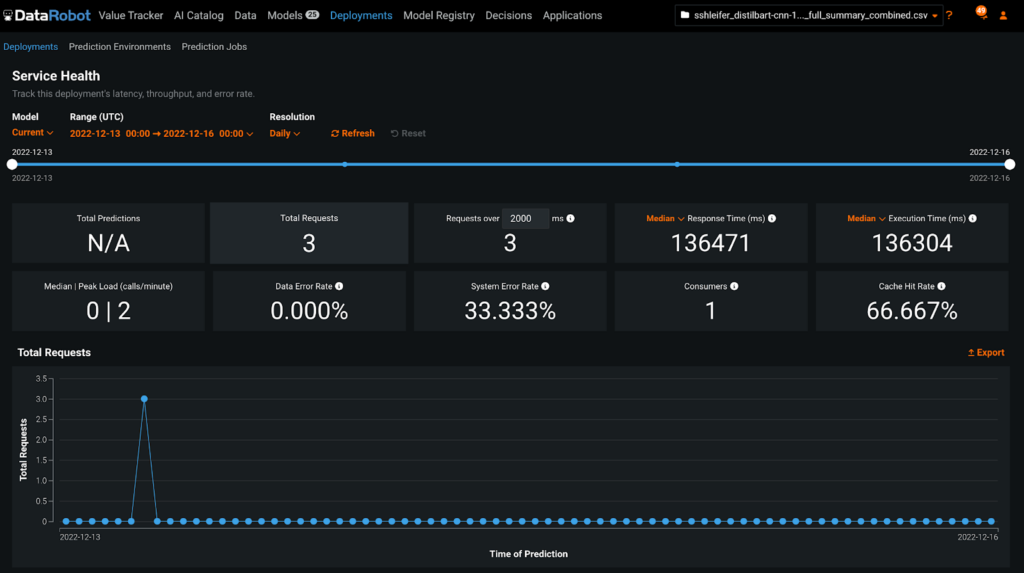

Now that we have now our question-answering mannequin up and operating efficiently, let’s observe our service well being dashboard in DataRobot MLOps. As we ship prediction requests to our mannequin, the Service Well being tab will mirror the newly acquired requests and allow us to keep watch over our mannequin’s metrics.

Later, if we wish to replace our deployment with a more moderen model of the pretrained mannequin artifact or replace our customized inference script, we use the API or the Customized Mannequin Workshop UI once more to make any mandatory adjustments on our deployment flawlessly.

Begin Utilizing Giant Language Fashions

By internet hosting a language mannequin with DataRobot MLOps, organizations can benefit from the ability and suppleness of huge language fashions with out having to fret in regards to the technical particulars of managing and deploying the mannequin.

On this weblog put up, we confirmed how straightforward it’s to host a big language mannequin as a DataRobot customized mannequin in just a few minutes by operating an end-to-end script. You could find the end-to-end pocket book within the DataRobot group repository, make a replica of it to edit in your wants, and stand up to hurry with your individual mannequin in manufacturing.

In regards to the creator

Senior Machine Studying Engineer, DataRobot

Aslı Sabancı Demiröz is a Senior Machine Studying Engineer at DataRobot. She holds a BS in Laptop Engineering with a double main in Management Engineering from Istanbul Technical College. Working within the workplace of the CTO, she enjoys being on the coronary heart of DataRobot’s R&D to drive innovation. Her ardour lies within the deep studying area and she or he particularly enjoys creating highly effective integrations between platform and software layers within the ML ecosystem, aiming to make the entire higher than the sum of the components.