This weblog was written in collaboration with David Roberts (Analytics Engineering Supervisor), Kevin P. Buchan Jr (Assistant Vice President, Analytics), and Yubin Park (Chief Knowledge and Analytics Officer) at ApolloMed. Take a look at the answer accelerator to obtain the notebooks illustrating use of the ApolloMed High quality Engine.

At Apollo Medical Holdings (ApolloMed), we allow unbiased doctor teams to offer high-quality affected person care at an inexpensive value. On this planet of value-based care, “high quality” is often outlined by standardized measures which quantify efficiency on healthcare processes, outcomes, and affected person satisfaction. A canonical instance is the “Transitions of Care” measure, which tracks the proportion of sufferers discharged from the hospital which obtain essential observe up providers, e.g. their major care supplier is notified, any new medicines are reconciled with their authentic routine, and they’re promptly seen by a healthcare supplier. If any of those occasions don’t happen and the affected person meets qualifying standards, there’s a high quality “hole”, i.e. one thing did not occur that ought to have, or vice versa.

Inside value-based contracts, high quality measures are tied to monetary efficiency for payers and supplier teams, creating a cloth incentive to offer and produce proof of top of the range care. The stakes are excessive, each for discharged sufferers who aren’t receiving follow-ups and the projected revenues of supplier teams, well being programs, and payers.

Key Challenges in Managing High quality Hole Closure

Quite a few, non-interoperable sources

A company’s high quality group might monitor and pursue hole closure for 30+ distinct high quality measures, throughout quite a lot of contracts. Usually, the information required to trace hole closure originates from the same variety of Excel spreadsheet reviews, all with subtly assorted content material and unstable information definitions. Merging such spreadsheets is a difficult activity for software program builders, to not point out scientific workers. Whereas these reviews are vital because the supply of fact for a well being plan’s view of efficiency, ingesting all of them is an inherently unstable, unscalable course of. Whereas all of us hope that current advances in LLM-based programming might change this equation, for the time being, many groups relearn the next on a month-to-month foundation…

Poor Transparency

If the standard group is lucky to have assist wrangling this hydra, they nonetheless might obtain incomplete, non-transparent data. If a care hole is closed (excellent news), the standard group needs to know who’s accountable.

- Which declare was proof of the observe up go to?

- Was the first care supplier concerned, or another person?

If a care hole stays open (dangerous information), the standard group directs their inquiries to as many exterior events (payers, well being plans) as there are reviews. Which information sources had been used? Labs? EHR information? Claims solely? What assumptions had been made in processing them?

To reply these questions, many analytics groups try to duplicate the standard measures on their very own datasets. However how are you going to guarantee excessive constancy to NCQA business customary measures?

Knowledge Missingness

Whereas interoperability has improved, it stays largely not possible to compile complete, longitudinal medical information in the US. Well being plans measuring high quality accommodate this difficulty by permitting “supplemental information submissions”, whereby suppliers submit proof of providers, circumstances, or outcomes which aren’t recognized to the well being plan. Therefore, suppliers profit from an inner system monitoring high quality gaps as a examine and steadiness in opposition to well being plan reviews. When discrepancies with well being plans reviews are recognized, suppliers can submit supplemental information to make sure their high quality scores are correct.

Resolution: Operating HEDISⓇ Measures on Databricks

At ApolloMed, we determined to take a primary rules method to measuring high quality. Slightly than rely solely on reviews from exterior events, we applied and obtained NCQA certification on over 20 measures, along with customized measures requested by our high quality group. We then deployed our high quality engine inside our Databricks Delta Lake. All advised, we achieved a 5x runtime financial savings over our earlier method. In the meanwhile, our HEDISⓇ engine runs over 1,000,000 members by 20+ measures for 2 measurement years in roughly 2.5 hours!

Frankly, we’re thrilled with the consequence. Databricks enabled us to:

- consolidate an advanced course of right into a single device

- cut back runtime

- present enhanced transparency to our stakeholders

- lower your expenses

Scaling is Trivial with Pyspark

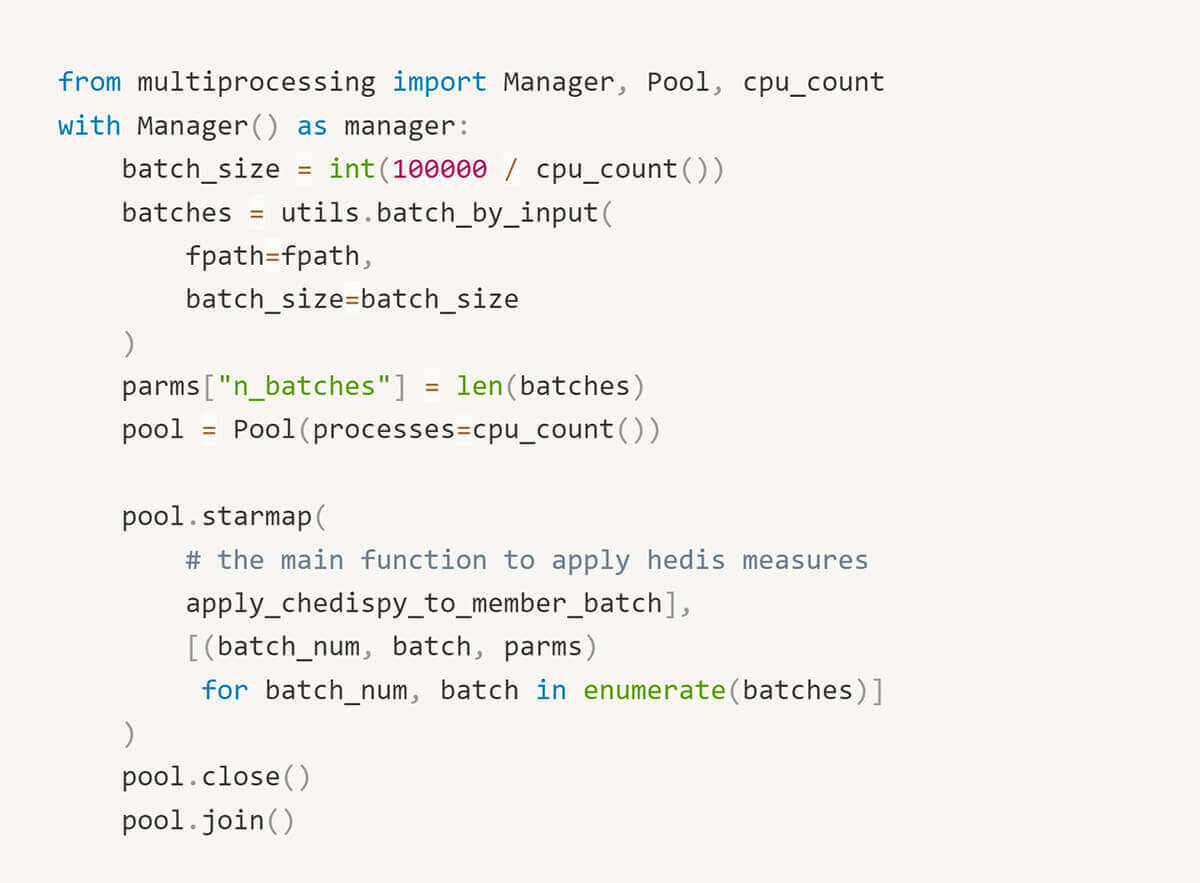

In our earlier implementation, an Ubuntu VM extracted HEDISⓇ inputs as JSON paperwork from an on-prem SQL server. The VM was expensive (operating 24×7) with 16 CPUs to help Python pool-based parallelization.

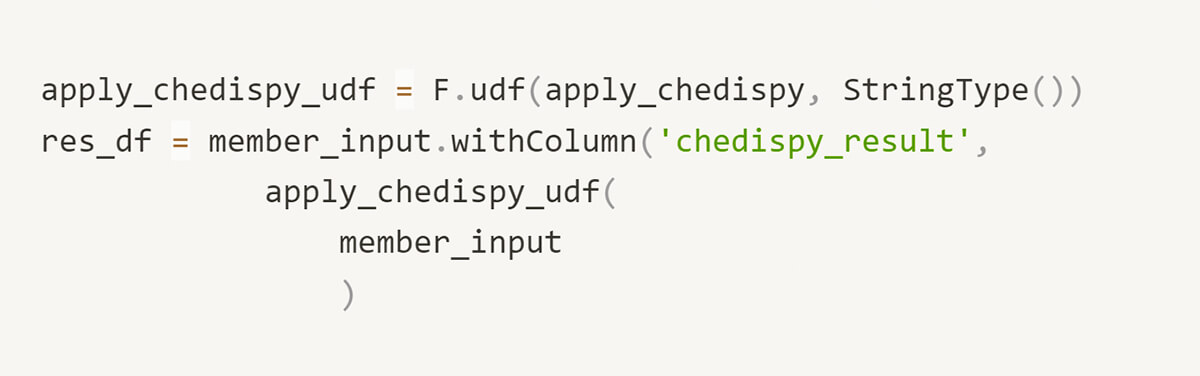

With pyspark, we merely register a Spark Consumer-Outlined Operate (UDF) and depend on the framework to handle parallelization. This not solely yielded vital efficiency advantages, it’s less complicated to learn and talk to teammates. With the flexibility to trivially scale clusters as vital, we’re assured our implementation can help the wants of a rising enterprise. Furthermore, with Databricks, you pay for what you want. We anticipate to cut back compute value by a minimum of 1/2 in transitioning to Databricks Jobs clusters.

Parallel Processing Earlier than…

Parallel Processing After…

Traceability Is Enabled by Default

As information practitioners, we’re steadily queried by stakeholders to clarify surprising modifications. Whereas cumbersome, this activity is essential to retaining the arrogance of customers and guaranteeing that they belief the information we offer. If we have carried out our job properly, modifications to measures mirror variance within the underlying information distributions. Different occasions, we make errors. Both means, we’re accountable to help our stakeholders to know the supply of a change.

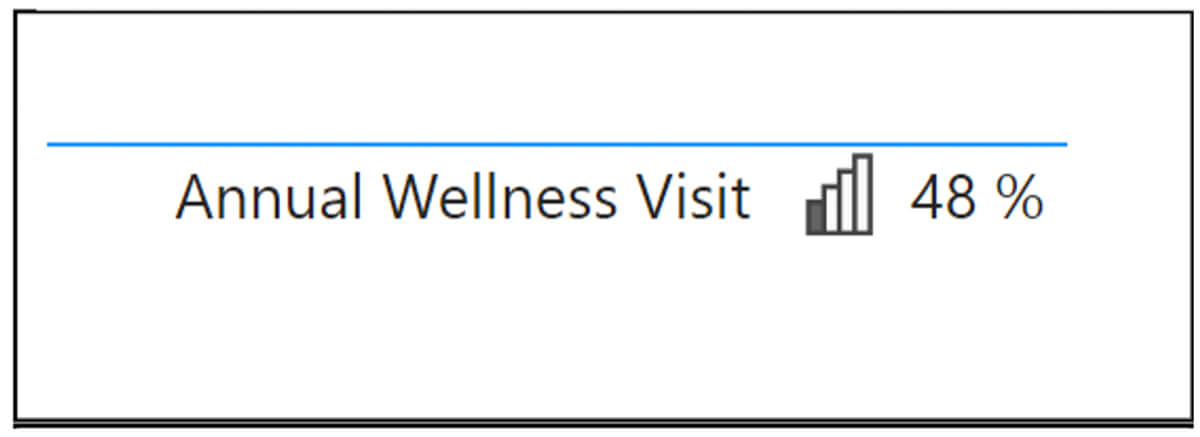

A screenshotted measure. “Why did this modification?! It was 52% final Thursday”

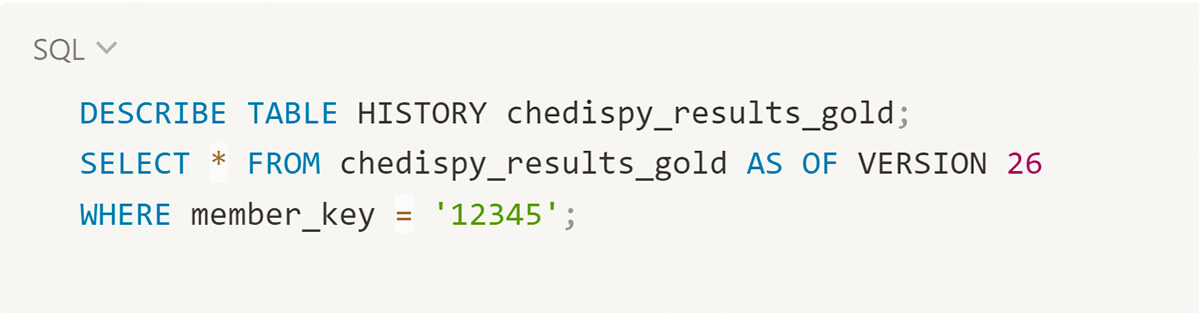

Within the Databricks Delta Lake, the means to revert datasets is a default. With a Delta Desk, we are able to simply examine a earlier model of a member’s file to debug a problem.

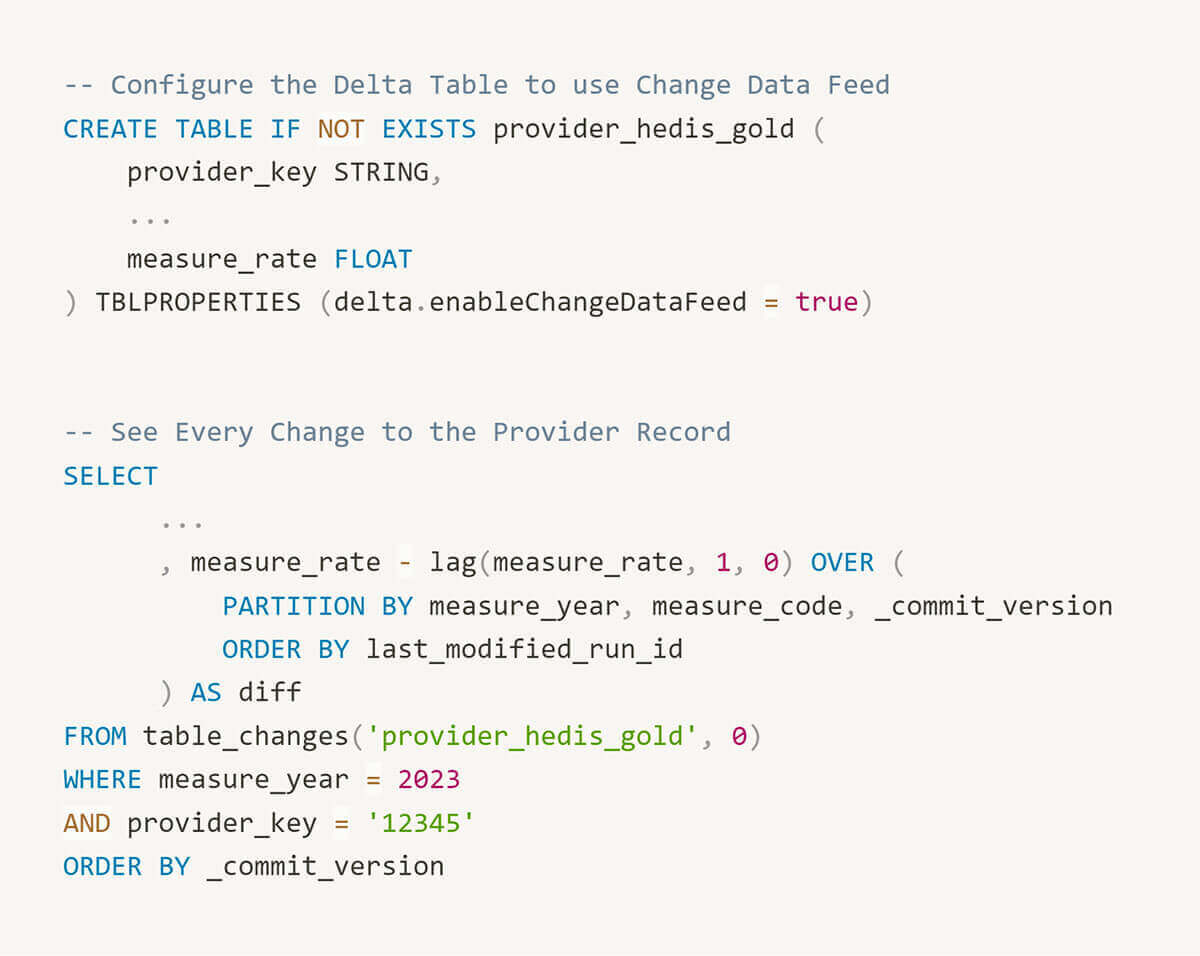

We now have additionally enabled change information seize on smaller mixture tables which tracks element degree modifications. This functionality permits us to simply reproduce and visualize how a charge is altering over time.

Dependable Pipelines with Standardized Codecs

Our high quality efficiency estimates are a key driver pushing us to develop a complete affected person information repository. Slightly than studying of poorly managed diabetes as soon as a month by way of well being plan reviews, we favor to ingest HL7 lab feeds every day. In the long run, organising dependable information pipelines utilizing uncooked, standardized information sources will facilitate broader use circumstances, e.g. customized high quality measures and machine studying fashions skilled on complete affected person information. As Micky Tripathi marches on and U.S. interoperability improves, we’re cultivating the interior capability to ingest uncooked information sources as they grow to be out there.

Enhanced Transparency

We coded the ApolloMed high quality engine out to offer the actionable particulars we have at all times needed as customers of high quality reviews. Which lab met the numerator for this measure? Why was this member excluded from a denominator? This transparency helps report customers perceive the measures they’re accountable to and facilitates hole closures.

Keen on studying extra?

The ApolloMed high quality engine has 20+ NCQA licensed measures is now out there to be deployed in your Databricks surroundings. To be taught extra, please assessment our Databricks answer accelerator or attain out to [email protected] for additional particulars.