(Nanowerk News) Researchers at Tohoku University and the University of California, Santa Barbara have shown a proof-of-concept of an energy-efficient computer compatible with current artificial intelligence (AI). It uses the stochastic behavior of nanoscale spintronics devices and is particularly suitable for probabilistic computation problems such as inference and sampling.

They presented the results at the IEEE International Electron Devices Meeting (IEDM 2023) on December 12, 2023 ("Hardware Demonstration of Feedforward Stochastic Neural Networks with Fast MTJ-based p-bits").

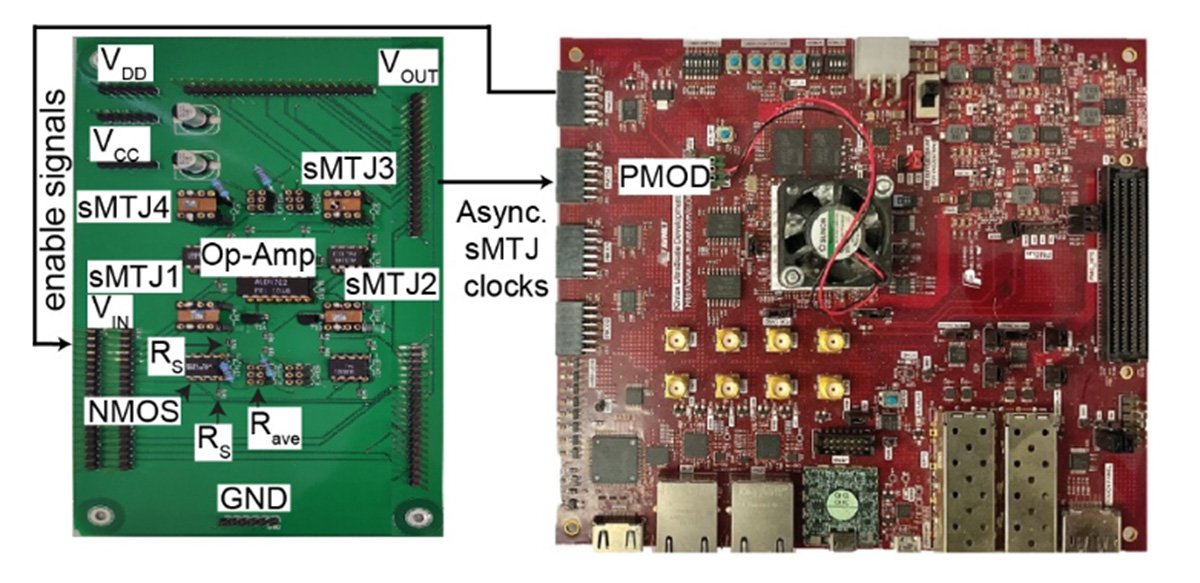

A photograph of the proof-of-concept of the spintronic probabilistic computer, consisting of the sMTJ-based p-bit unit (left side) and the Field-Programmable Gate Array (FPGA) (right side). (Image: Shunsuke Fukami, Kerem Camsari et al.)

With the slowing down of Moore's Law, there has been an increasing demand for domain-specific hardware. A probabilistic computer with naturally stochastic building blocks (probabilistic bits, or p-bits) is a representative example, owing to its potential to efficiently address various computationally hard tasks in machine learning (ML) and artificial intelligence (AI). Just as quantum computers are a natural fit for inherently quantum problems, room-temperature probabilistic computers are suitable for intrinsically probabilistic algorithms, which are widely used for training machines and for computationally hard problems in optimization, sampling, and so on.
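As a rough illustration of what a p-bit does, the sketch below uses the behavioral model that is common in the p-bit literature: the output is +1 or -1 at random, and an input signal tilts the odds through a tanh function. This model, the function name `p_bit`, and the input values are assumptions made for illustration and do not come from the article. In the hardware described here the randomness comes from the physical fluctuation of the sMTJ, not from a software random number generator.

```python
import numpy as np

def p_bit(input_signal, rng):
    """Behavioral p-bit model (an assumption, not the authors' circuit).

    Returns +1 or -1 for each entry of input_signal, with
    P(+1) = (1 + tanh(input_signal)) / 2.
    """
    # Uniform random number in [-1, 1) plays the role of the device noise.
    r = rng.uniform(-1.0, 1.0, size=np.shape(input_signal))
    return np.where(np.tanh(input_signal) > r, 1, -1)

rng = np.random.default_rng(0)
for bias in (-2.0, 0.0, 2.0):
    samples = p_bit(np.full(100_000, bias), rng)
    # The time-averaged output should approach tanh(bias).
    print(f"I = {bias:+.1f}: mean output = {samples.mean():+.3f}, "
          f"tanh(I) = {np.tanh(bias):+.3f}")
```

With zero input the model behaves like a fair coin; a strong positive or negative input pins the output to one state, which is how such a bit can be steered by the rest of a network.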

Recently, researchers from Tohoku University and the University of California, Santa Barbara have shown that robust and fully asynchronous (clockless) probabilistic computers can be efficiently realized at scale using a probabilistic spintronic device called a stochastic magnetic tunnel junction (sMTJ), interfaced with powerful Field-Programmable Gate Arrays (FPGAs).

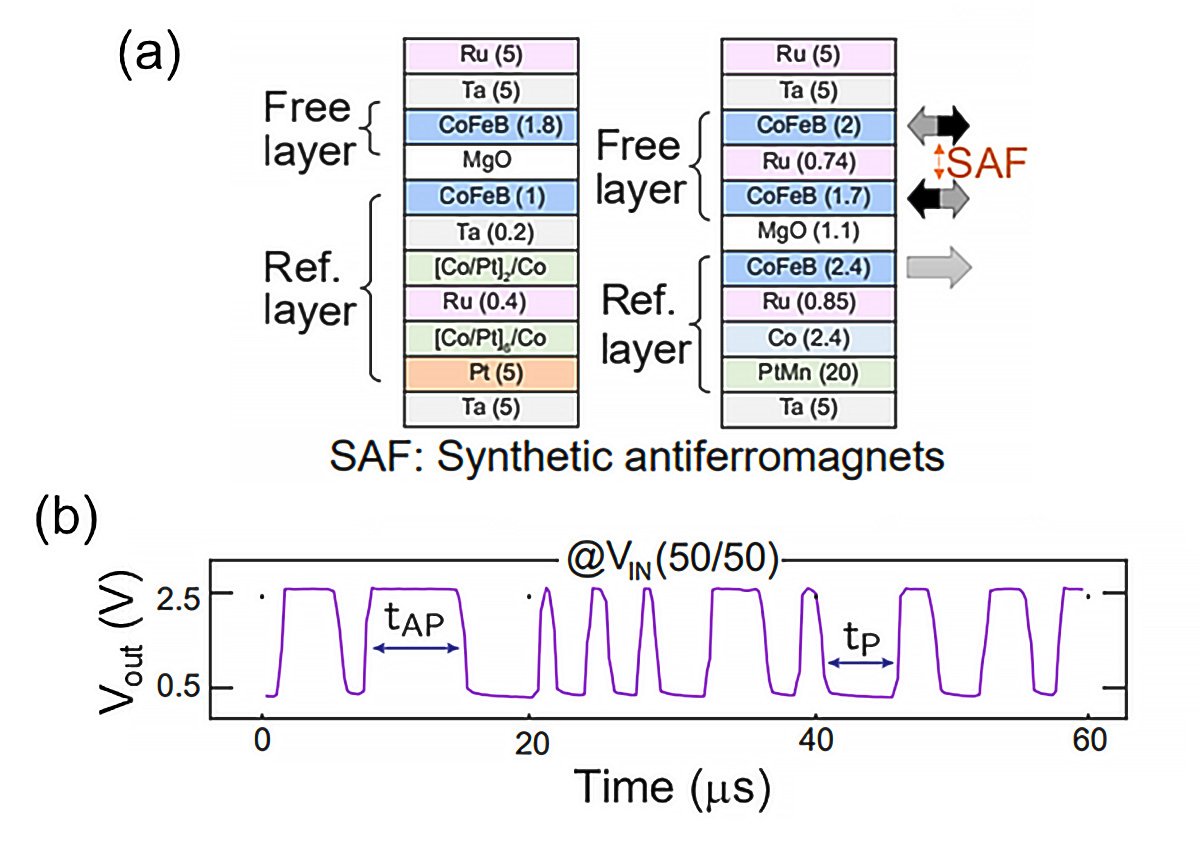

(a) Stack structure used in previous (left) and present (right) works. (b) Measured output signal of the p-bit showing microsecond random telegraph noise. (Image: Shunsuke Fukami, Kerem Camsari et al.)

Until now, however, sMTJ-based probabilistic computers have only been capable of implementing recurrent neural networks, and a scheme to implement feedforward neural networks has been awaited. "As the feedforward neural networks underpin most modern AI applications, augmenting probabilistic computers toward this direction should be a pivotal step to hit the market and enhance the computational capabilities of AI," said Professor Kerem Camsari, the Principal Investigator at the University of California, Santa Barbara.

In the work presented at IEDM 2023, the researchers made two important advances. First, leveraging earlier work by the Tohoku University team on stochastic magnetic tunnel junctions at the device level, they demonstrated the fastest p-bits at the circuit level by using in-plane sMTJs, which fluctuate roughly every microsecond, about three orders of magnitude faster than previous reports. Second, by enforcing an update order at the computing hardware level and leveraging layer-by-layer parallelism, they demonstrated the basic operation of a Bayesian network as an example of feedforward stochastic neural networks.
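The idea of an enforced update order with layer-by-layer parallelism can be sketched in ordinary software as ancestral sampling: every node is sampled only after its parents, and nodes in the same layer are sampled together. The example below does this for the Asia network. It is a minimal sketch, not the authors' hardware implementation: the function names are hypothetical, a software Bernoulli draw stands in for each p-bit, and the conditional probabilities are the commonly quoted textbook values for this network, not numbers taken from the article.

```python
import numpy as np

def bernoulli(p, rng):
    """One stochastic binary draw per entry of p (software stand-in for a p-bit)."""
    return rng.random(np.shape(p)) < p

def sample_asia(n, rng):
    """Draw n joint samples from the Asia network, one layer at a time."""
    # Layer 0: root nodes, no parents.
    asia = bernoulli(np.full(n, 0.01), rng)
    smoke = bernoulli(np.full(n, 0.50), rng)
    # Layer 1: each node depends only on layer 0, so all three
    # could be updated in parallel.
    tub = bernoulli(np.where(asia, 0.05, 0.01), rng)
    lung = bernoulli(np.where(smoke, 0.10, 0.01), rng)
    bronc = bernoulli(np.where(smoke, 0.60, 0.30), rng)
    # Layer 2: deterministic OR of tuberculosis and lung cancer.
    either = tub | lung
    # Layer 3: observable symptoms.
    xray = bernoulli(np.where(either, 0.98, 0.05), rng)
    p_dysp = np.where(either,
                      np.where(bronc, 0.90, 0.70),
                      np.where(bronc, 0.80, 0.10))
    dysp = bernoulli(p_dysp, rng)
    return {"asia": asia, "smoke": smoke, "tub": tub, "lung": lung,
            "bronc": bronc, "either": either, "xray": xray, "dysp": dysp}

samples = sample_asia(200_000, np.random.default_rng(0))
for name, values in samples.items():
    print(f"P({name}) ~ {values.mean():.4f}")

# Simple inference by counting: lung cancer given a positive X-ray.
positive = samples["xray"]
print(f"P(lung | xray) ~ {samples['lung'][positive].mean():.4f}")
```

Because information only flows from parents to children, a single pass through the layers yields one valid sample of the whole network, which is what distinguishes this feedforward scheme from recurrent networks that must be updated repeatedly until they settle.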

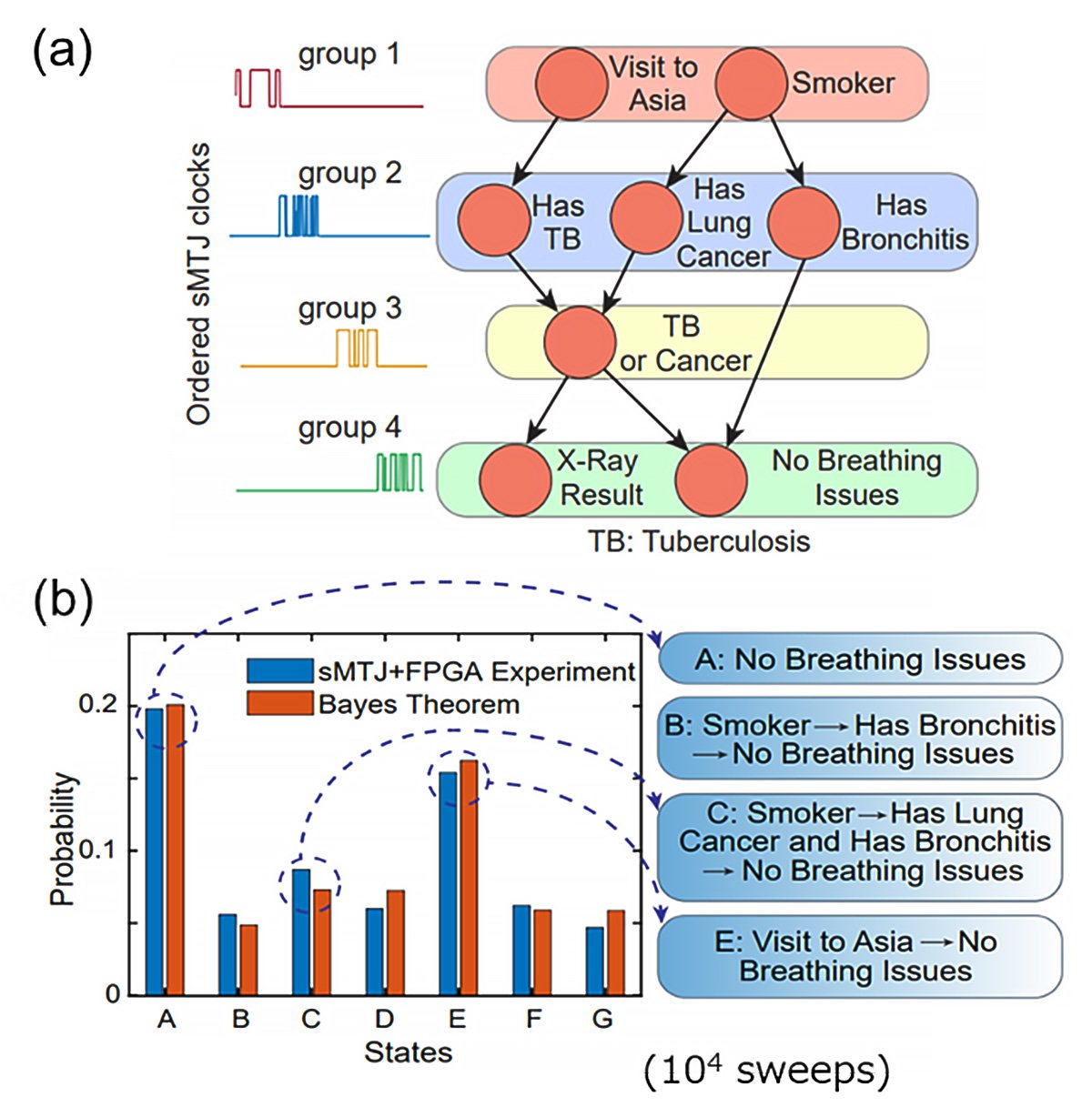

(a) Output signal from the sMTJ-based p-bit made to operate as a Bayesian network. The Asia network, a textbook example of a Bayesian network, is tested. (b) Experimental results of the operation. (Image: Shunsuke Fukami, Kerem Camsari et al.)

"Current demonstrations are small-scale; however, these designs can be scaled up by making use of CMOS-compatible Magnetic RAM (MRAM) technology, enabling significant advances in machine learning applications while also unlocking the potential for efficient hardware realization of deep/convolutional neural networks," said Professor Shunsuke Fukami, the Principal Investigator at Tohoku University.
