Managing the surroundings of an software in a distributed computing surroundings could be difficult. Guaranteeing that every one nodes have the mandatory surroundings to execute code and figuring out the precise location of the consumer’s code are complicated duties. Apache Spark™ gives varied strategies akin to Conda, venv, and PEX; see additionally Find out how to Handle Python Dependencies in PySpark in addition to submit script choices like --jars, --packages, and Spark configurations like spark.jars.*. These choices enable customers to seamlessly deal with dependencies of their clusters.

Nevertheless, the present help for managing dependencies in Apache Spark has limitations. Dependencies can solely be added statically and can’t be modified throughout runtime. Because of this you could all the time set the dependencies earlier than beginning your Driver. To deal with this situation, we’ve got launched session-based dependency administration help in Spark Join, ranging from Apache Spark 3.5.0. This new characteristic means that you can replace Python dependencies dynamically throughout runtime. On this weblog put up, we are going to focus on the excellent strategy to controlling Python dependencies throughout runtime utilizing Spark Join in Apache Spark.

Session-based Artifacts in Spark Join

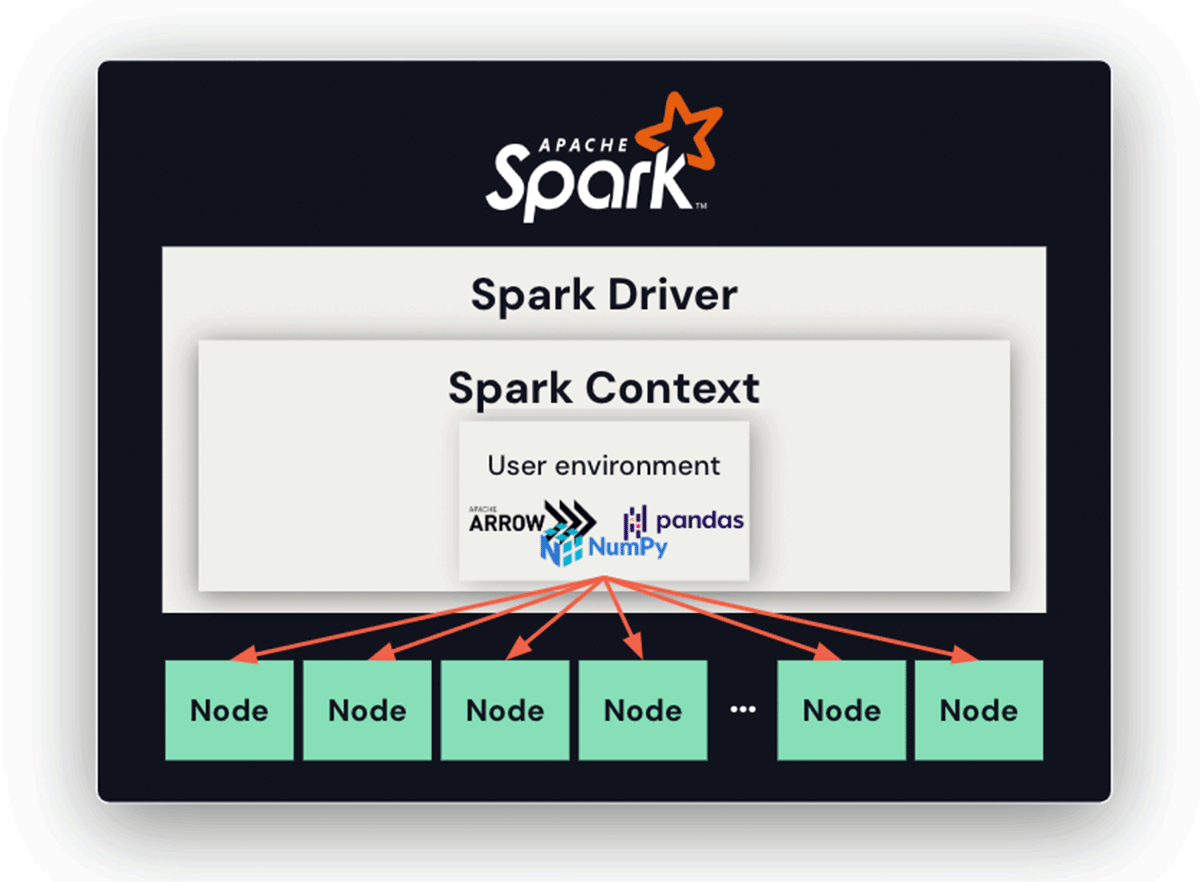

When utilizing the Spark Driver with out Spark Join, the Spark Context provides the archive (consumer surroundings) which is later routinely unpacked on the nodes, guaranteeing that every one nodes possess the mandatory dependencies to execute the job. This performance simplifies dependency administration in a distributed computing surroundings, minimizing the danger of surroundings contamination and making certain that every one nodes have the supposed surroundings for execution. Nevertheless, this could solely be set as soon as statically earlier than beginning the Spark Context and Driver, limiting flexibility.

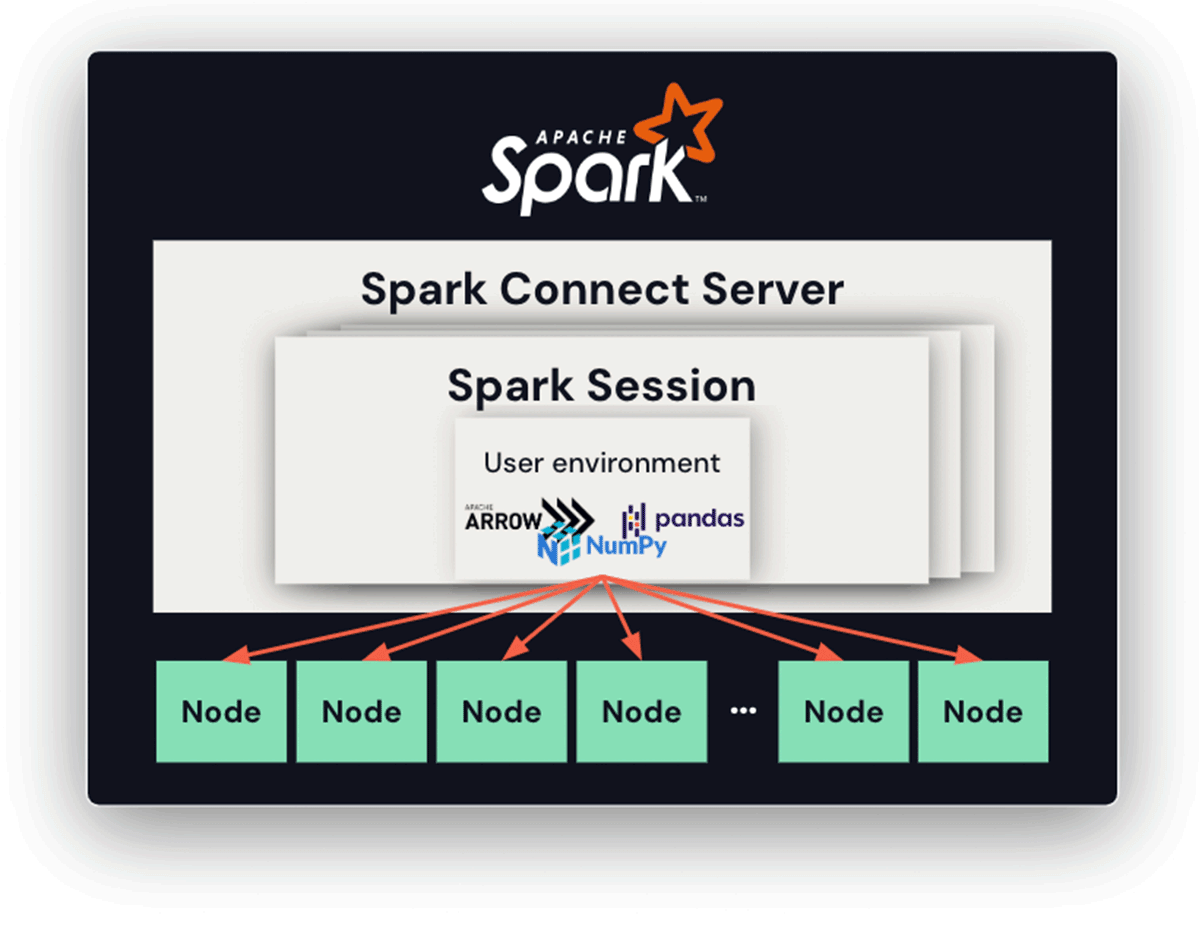

With Spark Join, dependency administration turns into extra intricate because of the extended lifespan of the join server and the potential for a number of classes and purchasers – every with its personal Python variations, dependencies, and environments. The proposed resolution is to introduce session-based archives. On this strategy, every session has a devoted listing the place all associated Python information and archives are saved. When Python employees are launched, the present working listing is about to this devoted listing. This ensures that every session can entry its particular set of dependencies and environments, successfully mitigating potential conflicts.

Utilizing Conda

Conda is a extremely widespread Python package deal administration system many make the most of. PySpark customers can leverage Conda environments on to package deal their third-party Python packages. This may be achieved by leveraging conda-pack, a library designed to create relocatable Conda environments.

The next instance demonstrates making a packed Conda surroundings that’s later unpacked in each the driving force and executor to allow session-based dependency administration. The surroundings is packed into an archive file, capturing the Python interpreter and all related dependencies.

import conda_pack

import os

# Pack the present surroundings ('pyspark_conda_env') to 'pyspark_conda_env.tar.gz'.

# Or you'll be able to run 'conda pack' in your shell.

conda_pack.pack()

spark.addArtifact(

f"{os.environ.get('CONDA_DEFAULT_ENV')}.tar.gz#surroundings",

archive=True)

spark.conf.set(

"spark.sql.execution.pyspark.python", "surroundings/bin/python")

# Any further, Python employees on executors use the `pyspark_conda_env` Conda

# surroundings.Utilizing PEX

Spark Join helps utilizing PEX to bundle Python packages collectively. PEX is a software that generates a self-contained Python surroundings. It features equally to Conda or virtualenv, however a .pex file is an executable by itself.

Within the following instance, a .pex file is created for each the driving force and executor to make the most of for every session. This file incorporates the desired Python dependencies offered by means of the pex command.

# Pack the present env to pyspark_pex_env.pex'.

pex $(pip freeze) -o pyspark_pex_env.pexAfter you create the .pex file, now you can ship them to the session-based surroundings so your session makes use of the remoted .pex file.

spark.addArtifact("pyspark_pex_env.pex",file=True)

spark.conf.set(

"spark.sql.execution.pyspark.python", "pyspark_pex.env.pex")

# Any further, Python employees on executors use the `pyspark_conda_env` venv surroundings.Utilizing Virtualenv

Virtualenv is a Python software to create remoted Python environments. Since Python 3.3.0, a subset of its options has been built-in into Python as an ordinary library beneath the venv module. The venv module could be leveraged for Python dependencies by utilizing venv-pack in an identical manner as conda-pack. The instance beneath demonstrates session-based dependency administration with venv.

import venv_pack

import os

# Pack the present venv to 'pyspark_conda_env.tar.gz'.

# Or you'll be able to run 'venv-pack' in your shell.

venv_pack.pack(output='pyspark_venv.tar.gz')

spark.addArtifact(

"pyspark_venv.tar.gz#surroundings",

archive=True)

spark.conf.set(

"spark.sql.execution.pyspark.python", "surroundings/bin/python")

# Any further, Python employees on executors use your venv surroundings.Conclusion

Apache Spark gives a number of choices, together with Conda, virtualenv, and PEX, to facilitate transport and administration of Python dependencies with Spark Join dynamically throughout runtime in Apache Spark 3.5.0, which overcomes the limitation of static Python dependency administration.

Within the case of Databricks notebooks, we offer a extra elegant resolution with a user-friendly interface for Python dependencies to handle this drawback. Moreover, customers can instantly make the most of pip and Conda for Python dependency administration. Reap the benefits of these options at the moment with a free trial on Databricks.