We live in a world of great natural beauty: majestic mountains, dramatic seascapes, and serene forests. Imagine seeing this beauty as a bird does, flying past richly detailed, three-dimensional landscapes. Can computers learn to synthesize this kind of visual experience? Such a capability would allow for new kinds of content for games and virtual reality experiences: for instance, relaxing within an immersive flythrough of an infinite nature scene. But existing methods that synthesize new views from images tend to allow for only limited camera motion.

In a research effort we call Infinite Nature, we show that computers can learn to generate such rich 3D experiences simply by viewing nature videos and photographs. Our latest work on this theme, InfiniteNature-Zero (presented at ECCV 2022), can produce high-resolution, high-quality flythroughs starting from a single seed image, using a system trained only on still photographs, a capability not demonstrated by prior methods. We call the underlying research problem perpetual view generation: given a single input view of a scene, how can we synthesize a photorealistic set of output views corresponding to an arbitrarily long, user-controlled 3D path through that scene? Perpetual view generation is very challenging because the system must generate new content on the other side of large landmarks (e.g., mountains), and render that new content with high realism and at high resolution.

| Example flythrough generated with InfiniteNature-Zero. It takes a single input image of a natural scene and synthesizes a long camera path flying into that scene, generating new scene content as it goes. |

Background: Learning 3D Flythroughs from Videos

To establish the basics of how such a system could work, we’ll describe our first version, “Infinite Nature: Perpetual View Generation of Natural Scenes from a Single Image” (presented at ICCV 2021). In that work we explored a “learn from video” approach, where we collected a set of online videos captured from drones flying along coastlines, with the idea that we could learn to synthesize new flythroughs that resemble these real videos. This set of online videos is called the Aerial Coastline Imagery Dataset (ACID). To learn how to synthesize scenes that respond dynamically to any desired 3D camera path, however, we couldn’t simply treat these videos as raw collections of pixels; we also had to compute their underlying 3D geometry, including the camera position at each frame.

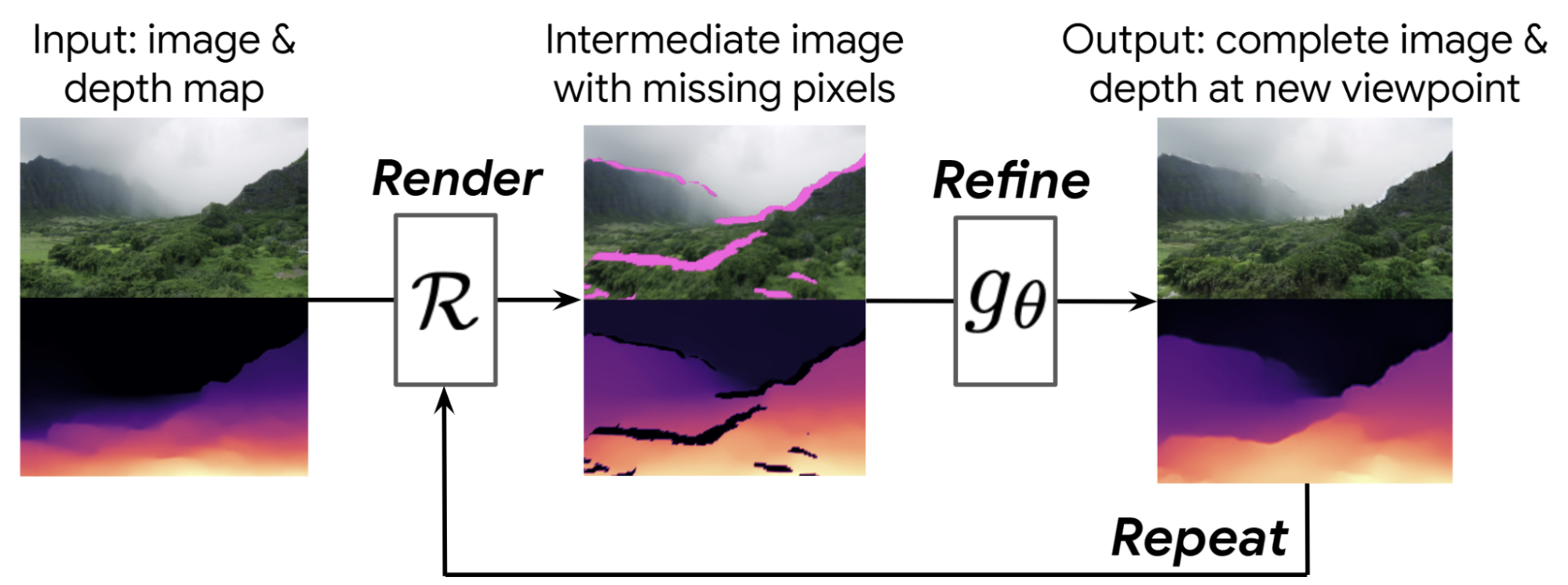

The basic idea is that we learn to generate flythroughs step by step. Given a starting view, like the first image in the figure below, we first compute a depth map using single-image depth prediction methods. We then use that depth map to render the image forward to a new camera viewpoint, shown in the middle, resulting in a new image and depth map from that new viewpoint.
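To make the render step concrete, here is a minimal sketch of depth-based forward warping for a single image row (a 1-D scanline). It assumes a pinhole camera with its principal point at the row's center that moves straight forward by `forward` units along its optical axis, and it uses simple nearest-pixel splatting with a z-buffer. The function name `forward_warp_scanline` is hypothetical; this is an illustration of the idea, not the system's renderer, which works on full 2-D images.

```python
from typing import List, Optional, Sequence, Tuple


def forward_warp_scanline(
    colors: Sequence[float],
    depths: Sequence[float],
    forward: float,
) -> Tuple[List[Optional[float]], List[Optional[float]]]:
    """Splat each pixel of a 1-D scanline into a camera moved `forward`
    units along its optical axis. Target pixels that receive no source
    pixel stay None (holes)."""
    width = len(colors)
    center = (width - 1) / 2.0  # assumed principal point: middle of the row
    out_color: List[Optional[float]] = [None] * width
    out_depth: List[Optional[float]] = [None] * width
    for i, (color, z) in enumerate(zip(colors, depths)):
        new_z = z - forward  # depth of the same 3-D point in the new camera
        if new_z <= 0:
            continue  # the point is now behind the camera
        # Pinhole projection u = f * X / Z gives u_new = u * z / new_z;
        # the focal length cancels for pure forward motion.
        u_new = (i - center) * z / new_z
        j = round(u_new + center)  # nearest target pixel
        if 0 <= j < width and (out_depth[j] is None or new_z < out_depth[j]):
            out_color[j] = color  # z-buffer: keep the nearest surface
            out_depth[j] = new_z
    return out_color, out_depth
```

With a constant depth of 10 and `forward=5.0`, every pixel's offset from the center doubles, so the row `[1, 2, 3, 4, 5]` warps to `[2, None, 3, None, 4]`: the outer pixels leave the frame, and the `None` gaps are the kind of holes and stretching that the refinement step has to repair.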

However, this intermediate image has some problems. It has holes where we can see behind objects into regions that weren’t visible in the starting image. It is also blurry, because we are now closer to objects but are stretching the pixels from the previous frame to render these now-larger objects.
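The blur has a simple geometric explanation under the same pinhole assumption (an illustrative derivation, not taken from the paper). A point at lateral offset $X$ and depth $Z$ projects to image coordinate $u$ with focal length $f$; after the camera moves forward by $t$, the same point projects to $u'$:

$$
u = \frac{fX}{Z}, \qquad u' = \frac{fX}{Z - t} = u \, \frac{Z}{Z - t}, \qquad \frac{\partial u'}{\partial u} = \frac{Z}{Z - t} > 1 \quad (0 < t < Z).
$$

Each source pixel therefore covers about $Z/(Z - t)$ target pixels along each axis (a factor of 2 for $Z = 10$, $t = 5$), so the stretched content looks blurry. Because the factor grows as $Z$ shrinks, nearby surfaces are magnified more than distant ones, which also opens gaps at depth edges.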

To address these problems, we learn a neural image refinement network that takes this low-quality intermediate image and outputs a complete, high-quality image and corresponding depth map. These steps can then be repeated, with this synthesized image as the new starting point. Because we refine both the image and the depth map, this process can be iterated as many times as desired: the system automatically learns to generate new scenery, like mountains, islands, and oceans, as the camera moves further into the scene.
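Once the two components exist, the render-refine-repeat loop itself is short. The sketch below assumes hypothetical `render` and `refine` callables, stand-ins for the geometric warp and the learned refinement network rather than the project's actual API, and shows how each refined output becomes the input for the next step.

```python
from typing import Any, Callable, List, Sequence, Tuple

# Hypothetical component signatures (illustrative, not the authors' API):
#   render(image, depth, pose) -> (warped_image, warped_depth)
#   refine(warped_image, warped_depth) -> (image, depth)
Render = Callable[[Any, Any, Any], Tuple[Any, Any]]
Refine = Callable[[Any, Any], Tuple[Any, Any]]


def render_refine_repeat(
    image: Any,
    depth: Any,
    poses: Sequence[Any],
    render: Render,
    refine: Refine,
) -> List[Any]:
    """Generate one frame per camera pose, feeding each refined output back in."""
    frames: List[Any] = []
    for pose in poses:
        # Render: warp the current image to the next viewpoint using its depth.
        warped_image, warped_depth = render(image, depth, pose)
        # Refine: fill holes and restore detail in both image and depth.
        image, depth = refine(warped_image, warped_depth)
        # Repeat: the refined frame is the starting point for the next step.
        frames.append(image)
    return frames
```

Because the loop only carries the current image and depth map forward, the path can be as long as the pose sequence; this is also why the system keeps no persistent 3D representation of the scene.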

We train this render-refine-repeat synthesis approach using the ACID dataset. Specifically, we sample a video from the dataset and then a frame from that video. We then use this method to render several new views moving into the scene along the same camera trajectory as the ground-truth video, as shown in the figure below, and compare these rendered frames to the corresponding ground-truth video frames to derive a training signal. We also include an adversarial setup that tries to distinguish synthesized frames from real images, encouraging the generated imagery to appear more realistic.
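One way to sketch this training signal is a per-pixel L1 reconstruction loss against the ground-truth frames plus a standard non-saturating GAN generator loss. Both loss choices are assumptions for illustration: the exact losses and weights used in the paper may differ, and `adv_weight` is a hypothetical value. Frames are represented as flat lists of pixel values.

```python
import math
from typing import Sequence


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def l1_reconstruction(rendered: Sequence[Sequence[float]],
                      ground_truth: Sequence[Sequence[float]]) -> float:
    """Mean absolute pixel error over every frame of the trajectory."""
    if len(rendered) != len(ground_truth):
        raise ValueError("need one ground-truth frame per rendered frame")
    total, count = 0.0, 0
    for r_frame, g_frame in zip(rendered, ground_truth):
        for r, g in zip(r_frame, g_frame):
            total += abs(r - g)
            count += 1
    return total / count


def generator_adversarial_loss(fake_logits: Sequence[float]) -> float:
    """Non-saturating GAN loss, -log(sigmoid(logit)) = softplus(-logit),
    averaged over the discriminator's logits for synthesized frames."""
    return sum(softplus(-logit) for logit in fake_logits) / len(fake_logits)


def training_loss(rendered: Sequence[Sequence[float]],
                  ground_truth: Sequence[Sequence[float]],
                  fake_logits: Sequence[float],
                  adv_weight: float = 0.01) -> float:
    """Reconstruction term plus a weighted adversarial term (weight assumed)."""
    return (l1_reconstruction(rendered, ground_truth)
            + adv_weight * generator_adversarial_loss(fake_logits))
```

The reconstruction term ties the rollout to what the drone actually saw, while the adversarial term pushes frames toward realism where exact pixel agreement is too strict a target.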

The resulting system can generate compelling flythroughs, as featured on the project webpage, along with a “flight simulator” Colab demo. Unlike prior video synthesis methods, this method allows the user to interactively control the camera and can generate much longer camera paths.

InfiniteNature-Zero: Learning Flythroughs from Still Photos

One problem with this first approach is that video is difficult to work with as training data. High-quality video with the right kind of camera motion is hard to find, and the aesthetic quality of an individual video frame generally cannot compare to that of an intentionally captured nature photograph. Therefore, in “InfiniteNature-Zero: Learning Perpetual View Generation of Natural Scenes from Single Images”, we build on the render-refine-repeat strategy above, but devise a way to learn perpetual view synthesis from collections of still photos, with no videos needed. We call this method InfiniteNature-Zero because it learns from “zero” videos. At first, this might seem like an impossible task: how can we train a model to generate video flythroughs of scenes when all it has ever seen are isolated photos?

To solve this problem, our key insight was that if we take an image and render a camera path that forms a cycle (that is, a path that loops back so that the last image is from the same viewpoint as the first), then we know that the last synthesized image along this path should be the same as the input image. Such cycle consistency provides a training constraint that helps the model learn to fill in missing regions and increase image resolution during each step of view generation.
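A minimal sketch of the cycle constraint follows. It assumes that camera positions are 3-D translations with a fixed, forward-facing orientation and that the loop is a simple out-and-back path; the trajectories actually sampled in the paper may be shaped differently. The hypothetical `cyclic_camera_path` returns positions that start and end at the input viewpoint, and the hypothetical `cycle_consistency_loss` compares the frame synthesized at the end of the loop with the input photo.

```python
from typing import List, Sequence, Tuple

Position = Tuple[float, float, float]  # (x, y, z); z points into the scene


def cyclic_camera_path(forward_step: float, lateral_step: float,
                       n: int) -> List[Position]:
    """Move n steps into the scene (drifting sideways), then retrace the
    same positions back, so the last position equals the first."""
    outbound = [(k * lateral_step, 0.0, k * forward_step) for k in range(n + 1)]
    return outbound + outbound[-2::-1]  # return leg, ending at the start


def cycle_consistency_loss(input_image: Sequence[float],
                           final_image: Sequence[float]) -> float:
    """Mean absolute difference between the input photo and the frame
    synthesized after completing the loop; zero for a perfect model."""
    if len(input_image) != len(final_image):
        raise ValueError("images must have the same number of pixels")
    diffs = [abs(a - b) for a, b in zip(input_image, final_image)]
    return sum(diffs) / len(diffs)
```

Running the render-refine-repeat loop along such a path gives a model trained on single photos a concrete target for the frame it synthesizes at the end of the loop, even though no video of the scene exists.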

However, training with these camera cycles alone is insufficient for generating long and stable view sequences, so, as in our original work, we include an adversarial strategy that considers long, non-cyclic camera paths, like the one shown in the figure above. In particular, if we render T frames from a starting frame, we optimize our render-refine-repeat model such that a discriminator network can’t tell which was the starting frame and which was the final synthesized frame. Finally, we add a component trained to generate high-quality sky regions to increase the perceived realism of the results.
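The adversarial comparison between the starting frame and the T-th synthesized frame can be sketched as follows. The sketch assumes standard GAN losses computed on discriminator logits, with a non-saturating generator loss. `step` stands in for one render-refine step and, like the other names here, is hypothetical.

```python
import math
from typing import Any, Callable, Tuple


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def rollout_endpoints(start: Any, T: int,
                      step: Callable[[Any], Any]) -> Tuple[Any, Any]:
    """Apply `step` T times along a long, non-cyclic path and return
    (starting frame, T-th synthesized frame) for the discriminator."""
    frame = start
    for _ in range(T):
        frame = step(frame)
    return start, frame


def discriminator_loss(real_logit: float, fake_logit: float) -> float:
    """-log(sigmoid(real)) - log(1 - sigmoid(fake)): the starting photo is
    labeled real, the frame synthesized after T steps is labeled fake."""
    return softplus(-real_logit) + softplus(fake_logit)


def generator_loss(fake_logit: float) -> float:
    """Non-saturating loss: the generator wants the T-th frame scored as
    real, i.e. indistinguishable from the starting frame."""
    return softplus(-fake_logit)
```

When the discriminator cannot separate the two frames (both logits near 0), its loss sits at 2 log 2. This is the point at which the T-th frame looks, to the discriminator, as real as the photo the rollout started from.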

With these insights, we trained InfiniteNature-Zero on collections of landscape photos, which are available in large quantities online. Several resulting videos are shown below; they demonstrate beautiful, diverse natural scenery that can be explored along arbitrarily long camera paths. Compared to our prior work, and to prior video synthesis methods, these results exhibit significant improvements in quality and diversity of content (details are available in the paper).

| Several nature flythroughs generated by InfiniteNature-Zero from single starting photos. |

Conclusion

There are a number of exciting future directions for this work. For instance, our methods currently synthesize scene content based only on the previous frame and its depth map; there is no persistent underlying 3D representation. Our work points toward future algorithms that can generate complete, photorealistic, and consistent 3D worlds.

Acknowledgements

Infinite Nature and InfiniteNature-Zero are the result of a collaboration between researchers at Google Research, UC Berkeley, and Cornell University. The key contributors to the work represented in this post include Angjoo Kanazawa, Andrew Liu, Richard Tucker, Zhengqi Li, Noah Snavely, Qianqian Wang, Varun Jampani, and Ameesh Makadia.