Posted by Jay Ji, Senior Product Manager, Google PI; Christian Frueh, Software Engineer, Google Research and Pedro Vergani, Staff Designer, Insight UX

A customizable AI-powered character template that demonstrates the power of LLMs to create interactive experiences with depth

Google's Partner Innovation team has developed a series of Generative AI templates to showcase how combining Large Language Models with existing Google APIs and technologies can solve specific industry use cases.

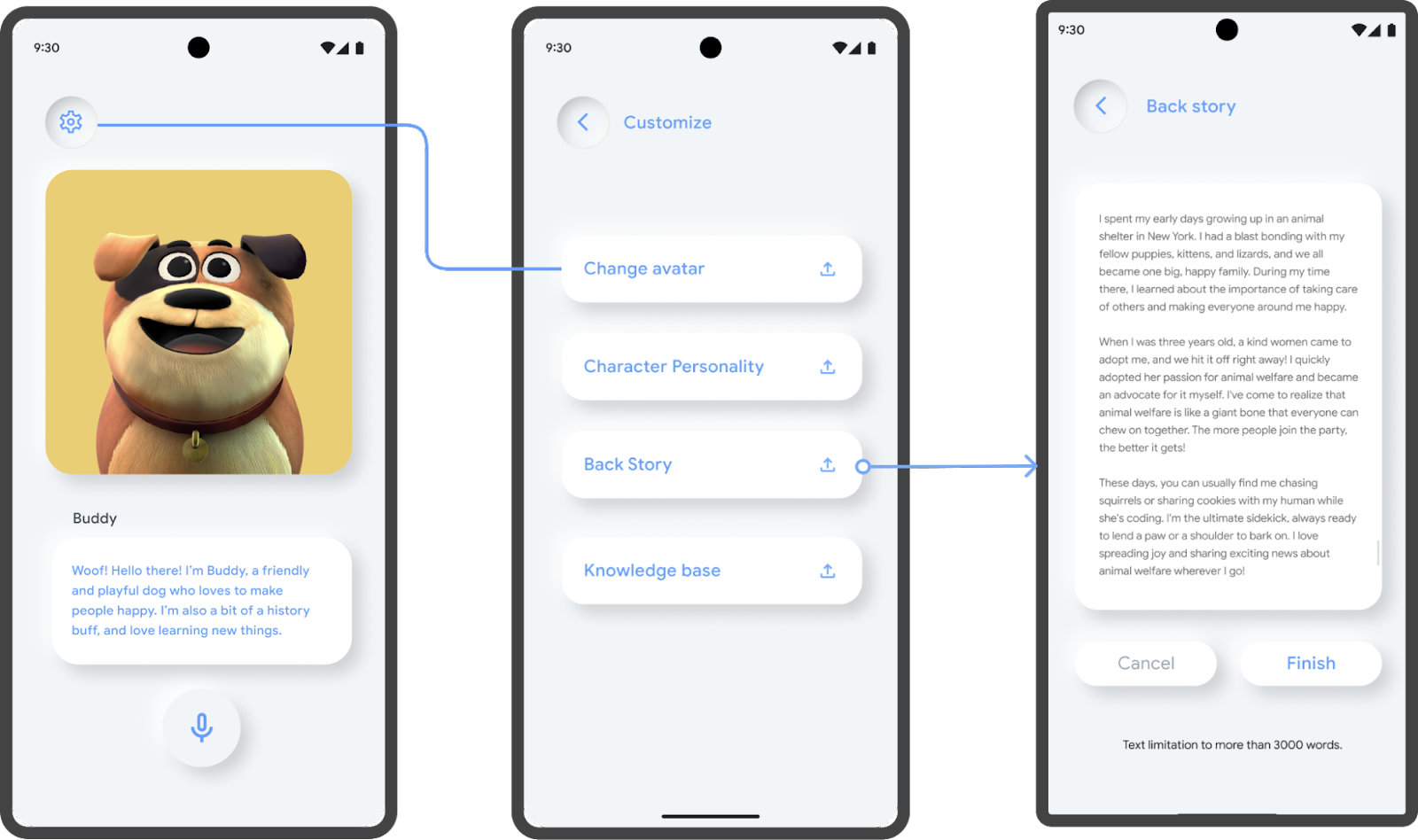

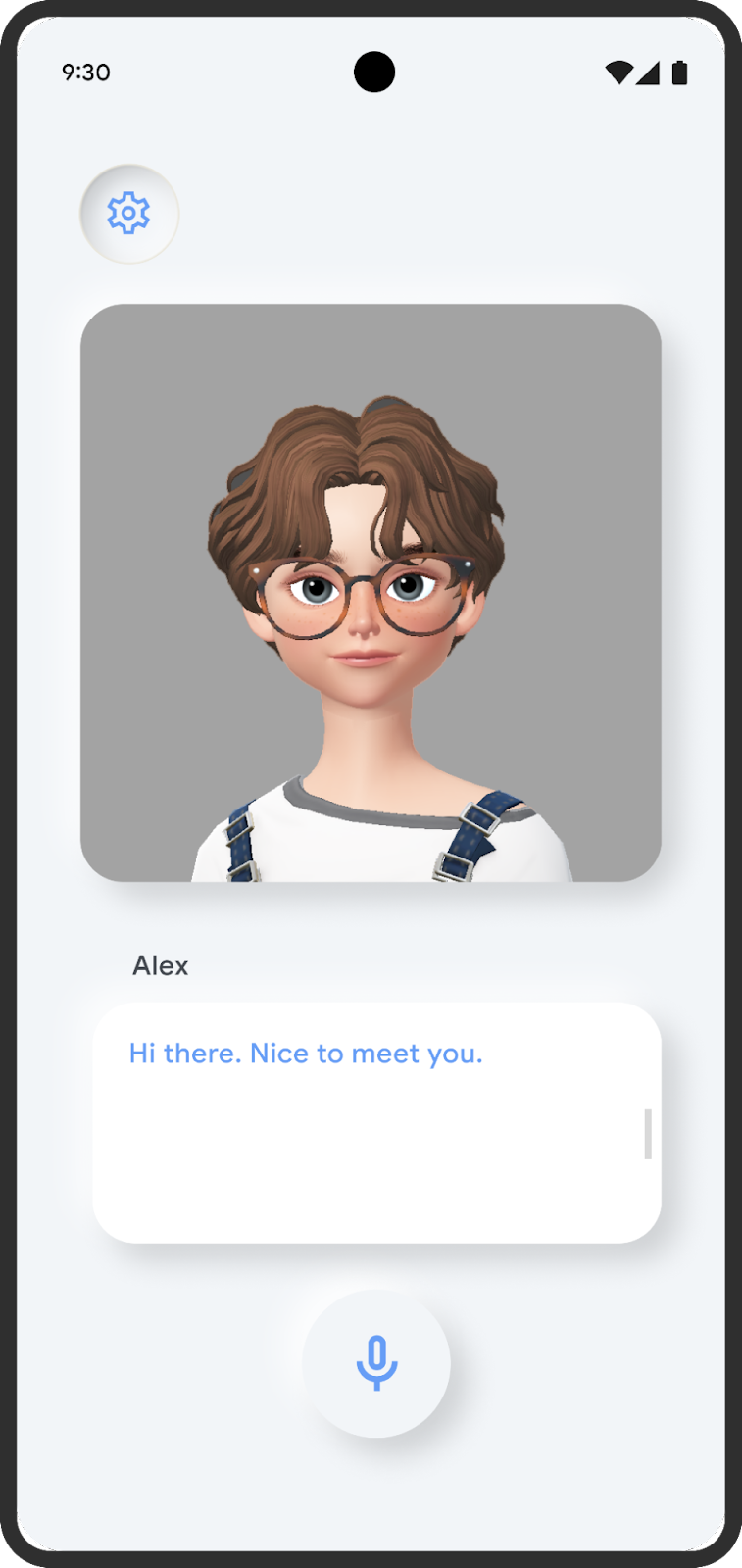

Talking Character is a customizable 3D avatar builder that allows developers to bring an animated character to life with Generative AI. Both developers and users can configure the avatar's personality, backstory and knowledge base, and thus create a specialized expert with a unique perspective on any given topic. Users can then interact with it through either text or spoken conversation.

As one example, we've defined a base character model, Buddy. He's a friendly dog that we've given a backstory, personality and knowledge base so that users can converse with him about typical dog life experiences. We also provide an example of how the personality and backstory can be changed to assume the persona of a reliable insurance agent, or, for that matter, anything else.

Our code template is intended to serve two main goals:

First, provide developers and users with a test interface to experiment with the powerful concept of prompt engineering for character development, and with leveraging specific datasets on top of the PaLM API to create unique experiences.

Second, showcase how Generative AI interactions can be enhanced beyond simple text or chat-led experiences. By leveraging cloud services such as speech-to-text and text-to-speech, together with machine learning models that animate the character, developers can create a far more natural experience for users.

Potential use cases for this type of technology are diverse. They include an interactive creative tool for developing characters and narratives for gaming or storytelling; tech support, even for complex systems or processes; customer service tailored to specific products or services; debate practice, language learning, or specific subject education; or simply bringing brand assets to life with a voice and the ability to interact.

Technical Implementation

Interactions

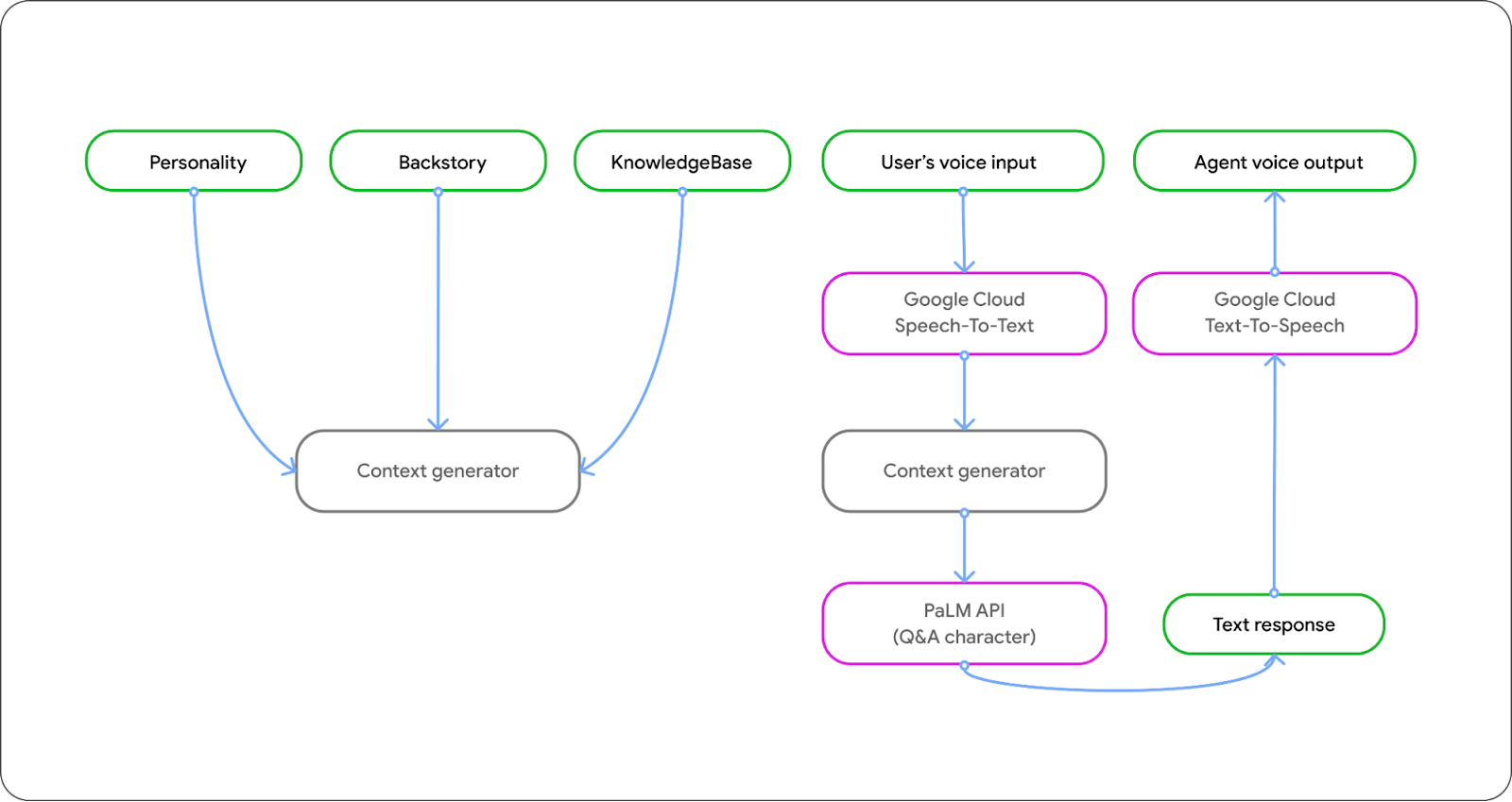

We use several separate technology components to enable a 3D avatar to have a natural conversation with users. First, we use Google's speech-to-text service to convert speech input to text, which is then fed into the PaLM API. We then use text-to-speech to generate a human-sounding voice for the language model's response.
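
The interaction loop described here can be sketched as three chained calls. This is a minimal sketch, not the template's code: the services are injected as plain callables with hypothetical signatures (`SpeechToText`, `ChatModel`, `TextToSpeech`), standing in for Google's speech-to-text service, the PaLM API and text-to-speech, so the example runs with fake services instead of real client libraries.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

# Hypothetical service signatures (assumptions, not Google client-library APIs).
SpeechToText = Callable[[bytes], str]                     # audio -> transcript
ChatModel = Callable[[str, List[Tuple[str, str]]], str]   # (context, history) -> reply
TextToSpeech = Callable[[str], bytes]                     # reply text -> audio


@dataclass
class ConversationTurnRunner:
    """Chains speech-to-text -> language model -> text-to-speech for one turn."""
    stt: SpeechToText
    llm: ChatModel
    tts: TextToSpeech
    context: str
    history: List[Tuple[str, str]] = field(default_factory=list)

    def run_turn(self, user_audio: bytes) -> Tuple[str, bytes]:
        # 1. Convert the user's speech into text.
        user_text = self.stt(user_audio)
        self.history.append(("user", user_text))
        # 2. Ask the language model for a reply, given the character context
        #    and the conversation so far.
        reply_text = self.llm(self.context, self.history)
        self.history.append(("character", reply_text))
        # 3. Synthesize the reply as audio for playback and lip sync.
        reply_audio = self.tts(reply_text)
        return reply_text, reply_audio


# Usage with fake services that simply echo their input.
runner = ConversationTurnRunner(
    stt=lambda audio: audio.decode("utf-8"),
    llm=lambda ctx, hist: f"Woof! You said: {hist[-1][1]}",
    tts=lambda text: text.encode("utf-8"),
    context="You are Buddy, a friendly dog.",
)
text, audio = runner.run_turn(b"hello")
```

Injecting the services keeps the turn logic testable and makes it easy to swap a typed-text path in place of speech-to-text.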

Animation

To enable an interactive visual experience, we created a 'talking' 3D avatar that animates based on the pattern and intonation of the generated voice. Using the MediaPipe framework, we leveraged a new audio-to-blendshapes machine learning model to generate facial expressions and lip movements that are synchronized with the voice.

Blendshapes are control parameters used to animate 3D avatars with a small set of weights. Our audio-to-blendshapes model predicts these weights from speech input in real time to drive the animated avatar. The model is trained on 'talking head' videos using TensorFlow, where we use 3D face tracking to learn a mapping from speech to facial blendshapes, as described in this paper.
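
To make the idea of blendshape weights concrete, here is a minimal sketch of the common linear morph-target formulation, v = b + Σ w_i (t_i − b), where each blendshape t_i stores a displaced copy of the neutral mesh b. The two-vertex "mesh" and the shape names `jawOpen` and `mouthSmile` are hypothetical, and the audio-to-blendshapes model that would predict the weights is not shown.

```python
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]


def apply_blendshapes(
    base: List[Vec3],
    targets: Dict[str, List[Vec3]],
    weights: Dict[str, float],
) -> List[Vec3]:
    """Linear blendshape deformation, evaluated per vertex:
    v = base + sum_i w_i * (target_i - base)."""
    result = []
    for idx, (bx, by, bz) in enumerate(base):
        x, y, z = bx, by, bz
        for name, w in weights.items():
            tx, ty, tz = targets[name][idx]
            # Add this shape's weighted offset from the neutral face.
            x += w * (tx - bx)
            y += w * (ty - by)
            z += w * (tz - bz)
        result.append((x, y, z))
    return result


# Hypothetical two-vertex "mouth" with two blendshapes.
neutral = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
shapes = {
    "jawOpen": [(0.0, -1.0, 0.0), (1.0, -1.0, 0.0)],
    "mouthSmile": [(0.0, 0.0, 0.0), (1.5, 0.5, 0.0)],
}
half_open = apply_blendshapes(neutral, shapes, {"jawOpen": 0.5, "mouthSmile": 0.0})
open_smile = apply_blendshapes(neutral, shapes, {"jawOpen": 0.5, "mouthSmile": 1.0})
```

Because only a handful of weights change per frame, a model needs to predict just those numbers rather than every vertex position.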

Once the blendshape weights are obtained from the model, we use them to morph the facial expressions and lip motion of the 3D avatar with the open source JavaScript 3D library three.js.
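
In three.js, a mesh with morph targets exposes `morphTargetDictionary` (shape name to index) and `morphTargetInfluences` (an array of weights). The sketch below mirrors that layout in Python to show one way predicted weights could be routed to an avatar whose rig may not contain every predicted shape. The function name, the clamping to [0, 1] and the shape names are assumptions for illustration, not the template's code.

```python
from typing import Dict, List


def update_morph_influences(
    influences: List[float],
    dictionary: Dict[str, int],
    predicted: Dict[str, float],
) -> List[str]:
    """Write predicted blendshape weights into a morph-target influence array.

    `dictionary` plays the role of three.js's Mesh.morphTargetDictionary
    (name -> index) and `influences` that of Mesh.morphTargetInfluences.
    Returns the predicted names the mesh has no morph target for.
    """
    missing = []
    for name, weight in predicted.items():
        index = dictionary.get(name)
        if index is None:
            missing.append(name)  # the model predicts a shape this rig lacks
            continue
        # Clamp to [0, 1], an assumed valid range for facial blendshape weights.
        influences[index] = min(1.0, max(0.0, weight))
    return missing


# Hypothetical rig with three morph targets.
influences = [0.0, 0.0, 0.0]
dictionary = {"jawOpen": 0, "mouthSmileLeft": 1, "eyeBlinkLeft": 2}
missing = update_morph_influences(
    influences, dictionary, {"jawOpen": 0.7, "eyeBlinkLeft": 1.2, "tongueOut": 0.3}
)
```

Matching by name rather than by position lets the same model output drive avatars with different blendshape sets.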

Character Design

In crafting Buddy, our intent was to explore forming an emotional bond between users and the character through its rich backstory and distinct personality. Our aim was not just to increase engagement, but to demonstrate how a character's personality, for example one imbued with humor, can shape your interaction with it.

A content writer developed an engaging backstory to ground this character. This backstory, along with its knowledge base, is what gives depth to its personality and brings it to life.

We further sought to incorporate recognizable non-verbal cues, such as facial expressions, as indicators of the interaction's progress. For instance, when the character appears deep in thought, it is a sign that the model is formulating its response.
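
One way to tie such cues to the conversation is a small state machine in which each state selects an animation, with "thinking" covering the wait for the language model. The states, event names and transition table below are hypothetical; the post does not describe how the template implements this.

```python
from enum import Enum


class AvatarState(Enum):
    IDLE = "idle"
    LISTENING = "listening"
    THINKING = "thinking"   # shown while the language model formulates a reply
    SPEAKING = "speaking"   # lip sync driven by blendshape weights


# Hypothetical (state, event) -> next-state table.
TRANSITIONS = {
    (AvatarState.IDLE, "user_started_speaking"): AvatarState.LISTENING,
    (AvatarState.IDLE, "text_submitted"): AvatarState.THINKING,
    (AvatarState.LISTENING, "transcript_ready"): AvatarState.THINKING,
    (AvatarState.THINKING, "reply_audio_ready"): AvatarState.SPEAKING,
    (AvatarState.SPEAKING, "playback_finished"): AvatarState.IDLE,
}


def next_state(state: AvatarState, event: str) -> AvatarState:
    # Events that do not apply in the current state leave it unchanged.
    return TRANSITIONS.get((state, event), state)


# A spoken turn: listen, think, speak, return to idle.
state = AvatarState.IDLE
trace = []
for event in ["user_started_speaking", "transcript_ready",
              "reply_audio_ready", "playback_finished"]:
    state = next_state(state, event)
    trace.append(state.value)
```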

Prompt Structure

Finally, to make the avatar easy to customize with simple text inputs, we designed the prompt structure to have three parts: personality, backstory, and knowledge base. We combine all three pieces into one large prompt and send it to the PaLM API as the context.
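
A minimal sketch of this three-part prompt assembly follows. The section labels, their order, the separator and Buddy's sample text are illustrative assumptions. The request dictionary assumes the shape of a PaLM chat (`generateMessage`) request body, with the combined prompt under `context` and the user's turn under `messages`; check the field names against the API reference before relying on them.

```python
from dataclasses import dataclass


@dataclass
class CharacterConfig:
    personality: str
    backstory: str
    knowledge_base: str


def build_context(cfg: CharacterConfig) -> str:
    """Concatenate the three editable parts into one context string."""
    sections = [
        ("Personality", cfg.personality),
        ("Backstory", cfg.backstory),
        ("Knowledge base", cfg.knowledge_base),
    ]
    return "\n\n".join(f"{title}:\n{text.strip()}" for title, text in sections)


def build_chat_request(cfg: CharacterConfig, user_message: str) -> dict:
    # Assumed request shape: character prompt as `context`, user turn in `messages`.
    return {
        "prompt": {
            "context": build_context(cfg),
            "messages": [{"content": user_message}],
        }
    }


# Illustrative sample text, not the template's actual Buddy prompt.
buddy = CharacterConfig(
    personality="You are Buddy, a cheerful, playful dog who loves to joke.",
    backstory="You grew up on a farm chasing chickens and now live in the city.",
    knowledge_base="Dogs enjoy walks, fetch, naps in the sun and belly rubs.",
)
request = build_chat_request(buddy, "What's your favorite game?")
```

Switching Buddy to the insurance-agent persona would only mean passing a different `CharacterConfig`; the rest of the pipeline stays unchanged.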

Partnerships and Use Cases

ZEPETO, beloved by Gen Z, is an avatar-centric social universe where users can fully customize their digital personas, explore fashion trends, and engage in vibrant self-expression and virtual interaction. Our Talking Character template allows users to create their own avatars, dress them up in different clothes and accessories, and interact with other users in virtual worlds. We're working with ZEPETO and have tested their metaverse avatar, which has over 50 blendshapes, with great results.

"Seeing an AI character come to life as a ZEPETO avatar and converse with such fluidity and depth is truly inspiring. We believe a combination of advanced language models and avatars will infinitely expand what is possible in the metaverse, and we're excited to be a part of it." - Daewook Kim, CEO, ZEPETO

The demo is not limited to metaverse use cases, though. It shows how characters can bring text corpora or knowledge bases to life in any domain.

For example, in gaming, LLM-powered NPCs could enrich a game's universe and deepen the user experience through natural language conversations about the game's world, history and characters.

In education, characters could represent the different subjects a student is studying, embody different difficulty levels in an interactive educational quiz, or portray specific figures and events from history to help people learn about different cultures, places, people and times.

In commerce, the Talking Character kit could be used to bring brands and stores to life, or to empower merchants in an eCommerce marketplace, democratizing tools that make their stores more engaging and personalized for a better user experience. It could also be used to create avatars for customers as they explore a retail environment, gamifying the experience of shopping in the real world.

Even more broadly, any brand, product or service can use this demo to bring to life a talking agent that interacts with users based on any knowledge set and tone of voice, acting as a brand ambassador, customer service representative, or sales assistant.

Open Source and Developer Support

Google's Partner Innovation team has developed a series of Generative AI Templates showcasing what is possible when combining LLMs with existing Google APIs and technologies to solve specific industry use cases. Each template was launched at I/O in May this year and open-sourced for developers and partners to build upon.

We will work closely with several partners on an EAP that allows us to co-develop and launch specific features and experiences based on these templates, as and when the API is released in each respective market (APAC timings TBC). Talking Character will also be open sourced so developers and startups can build on top of the experiences we've created. Google's Partner Innovation team will continue to build features and tools in partnership with local markets to expand on the R&D already underway. View the project on GitHub here.

Acknowledgements

We would like to acknowledge the invaluable contributions of the following people to this project: Mattias Breitholtz, Yinuo Wang, Vivek Kwatra, Tyler Mullen, Chuo-Ling Chang, Boon Panichprecha, Lek Pongsakorntorn, Zeno Chullamonthon, Yiyao Zhang, Qiming Zheng, Joyce Li, Xiao Di, Heejun Kim, Jonghyun Lee, Hyeonjun Jo, Jihwan Im, Ajin Ko, Amy Kim, Dream Choi, Yoomi Choi, KC Chung, Edwina Priest, Joe Fry, Bryan Tanaka, Sisi Jin, Agata Dondzik, Miguel de Andres-Clavera.