Has the day software engineers have been waiting for finally arrived? Are large language models (LLMs) going to turn us all into better software engineers? Or are LLMs creating more hype than functionality for software development and, at the same time, plunging everyone into a world where it is hard to distinguish the perfectly formed, yet sometimes fake and incorrect, code generated by artificial intelligence (AI) programs from verified and well-tested systems?

LLMs and Their Potential Impact on the Future of Software Engineering

This blog post, which builds on ideas introduced in the IEEE paper Application of Large Language Models to Software Engineering Tasks: Opportunities, Risks, and Implications by Ipek Ozkaya, focuses on opportunities and cautions for LLMs in software development, the implications of incorporating LLMs into software-reliant systems, and the areas where more research and innovation are needed to advance their use in software engineering. The reaction of the software engineering community to the accelerated advances that LLMs have demonstrated since the final quarter of 2022 has ranged from snake oil, to no help for programmers, to the end of programming and computer science education as we know it, to revolutionizing the software development process. As is often the case, the truth lies somewhere in the middle, including new opportunities and risks for developers using LLMs.

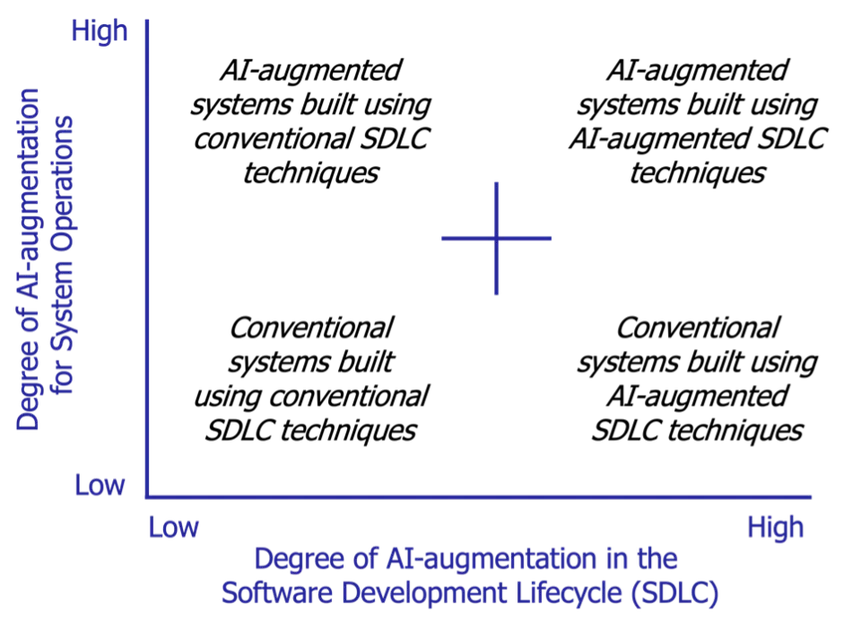

Research agendas have anticipated that the future of software engineering would include an AI-augmented software development lifecycle (SDLC), where both software engineers and AI-enabled tools share roles, such as copilot, student, expert, and supervisor. For example, our November 2021 book Architecting the Future of Software Engineering: A National Agenda for Software Engineering Research and Development describes a research path toward humans and AI-enabled tools working as trusted collaborators. At that time (a year before ChatGPT was released to the public), however, we did not expect these opportunities for collaboration to emerge so rapidly. Figure 1 therefore expands upon the vision presented in our 2021 book to codify the degree to which AI augmentation can be applied in both system operations and the software development lifecycle, ranging from conventional methods to fully AI-augmented methods.

- Conventional systems built using conventional SDLC techniques: This quadrant represents a low degree of AI augmentation for both system operations and the SDLC, which is the baseline of most software-reliant projects today. An example is an inventory management system that uses traditional database queries for operations and is developed using conventional SDLC processes without any AI-based tools or methods.

- Conventional systems built using AI-augmented techniques: This quadrant represents an emerging area of R&D in the software engineering community, where system operations have a low degree of AI augmentation, but AI-augmented tools and methods are used in the SDLC. An example is a website hosting service where the content is not AI augmented, but the development process employs AI-based code generators (such as GitHub Copilot), AI-based code review tools (such as Codiga), and/or AI-based testing tools (such as DiffBlue Cover).

- AI-augmented systems built using conventional SDLC techniques: This quadrant represents a high degree of AI augmentation in systems, especially in operations, but uses conventional methods in the SDLC. An example is a recommendation engine in an e-commerce platform that employs machine learning (ML) algorithms to personalize recommendations, while the software itself is developed, tested, and deployed using conventional Agile methods.

- AI-augmented systems built using AI-augmented techniques: This quadrant represents the pinnacle of AI augmentation, with a high degree of AI augmentation for both system operations and the SDLC. An example is a self-driving car system that uses ML algorithms for navigation and decision making, while also using AI-driven code generators, code review and repair tools, unit test generation, and DevOps tools for software development, testing, and deployment.

This blog post focuses on implications of LLMs primarily in the lower-right quadrant (i.e., conventional systems built using AI-augmented SDLC techniques). Future blog posts will address the other AI-augmented quadrants.

Using LLMs to Perform Specific Software Development Lifecycle Activities

The initial hype around using LLMs for software development has already started to cool down, and expectations are now more realistic. The conversation has shifted from expecting LLMs to replace software developers (i.e., artificial intelligence) to considering LLMs as partners and focusing on where to best apply them (i.e., augmented intelligence). The study of prompts is an early example of how LLMs are already affecting software engineering. Prompts are instructions given to an LLM to enforce rules, automate processes, and ensure specific qualities (and quantities) of generated output. Prompts are also a form of programming that can customize the outputs of, and interactions with, an LLM.

Prompt engineering is an emerging discipline that studies interactions with, and programming of, emerging LLM computational systems to solve complex problems via natural language interfaces. An essential component of this discipline is prompt patterns, which are like software patterns but focus on capturing reusable solutions to problems faced when interacting with LLMs. Such patterns elevate the study of LLM interactions from individual ad hoc examples to a more reliable and repeatable engineering discipline that formalizes and codifies fundamental prompt structures, their capabilities, and their ramifications.
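To make the idea of a prompt pattern concrete, here is a minimal Python sketch that treats a pattern as a named, reusable template with slots. The `PromptPattern` class, the `REVIEWER` pattern, and its wording are all hypothetical illustrations, not an established catalog entry or any tool's API.

```python
from dataclasses import dataclass
from string import Template


@dataclass(frozen=True)
class PromptPattern:
    """A reusable prompt structure with named slots (hypothetical helper)."""

    name: str
    intent: str
    template: str  # uses $placeholders that are filled in at render time

    def render(self, **slots: str) -> str:
        # Template.substitute raises KeyError when a slot is missing, so an
        # incomplete prompt is never produced by accident.
        return Template(self.template).substitute(**slots)


# Illustrative pattern: fix the role the model plays and the output format.
REVIEWER = PromptPattern(
    name="code-reviewer",
    intent="Ask for a focused review with a fixed output format.",
    template=(
        "Act as a $role. Review the following $language code.\n"
        "List at most $max_findings findings, one per line, "
        "each starting with 'FINDING:'.\n\n$code"
    ),
)

prompt = REVIEWER.render(
    role="security reviewer",
    language="Python",
    max_findings="3",
    code="def add(a, b):\n    return a + b",
)
print(prompt)
```

Keeping the structure in one place and the variable parts in slots is what lets a pattern be reused, compared, and refined across tasks rather than rewritten ad hoc each time.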

Many software engineering tasks can benefit from more sophisticated tools, including LLMs, with the help of relevant prompt engineering strategies and more sophisticated models. Indulge us for a moment and assume that we have solved thorny issues (such as trust, ethics, and copyright ownership) as we enumerate potential use cases where LLMs can create advances in productivity for software engineering tasks, with manageable risks:

- analyze software lifecycle data: Software engineers must review and analyze many types of data in large project repositories, including requirements documents, software architecture and design documents, test plans and data, compliance documents, defect lists, and so on, often in many versions over the software lifecycle. LLMs can help software engineers rapidly analyze these large volumes of information to identify inconsistencies and gaps that are otherwise hard for humans to find with the same degree of scalability, accuracy, and effort.

- analyze code: Software engineers using LLMs and prompt engineering patterns can interact with code in new ways to look for gaps or inconsistencies. With infrastructure-as-code (IaC) and code-as-data approaches, such as CodeQL, LLMs can help software engineers explore code in new ways that consider multiple sources (ranging from requirement specifications to documentation to code to test cases to infrastructure) and help find inconsistencies between these sources.

- just-in-time developer feedback: Applications of LLMs in software development have been received with skepticism, some deserved and some undeserved. While the code generated by current AI assistants, such as Copilot, may incur more security issues, in time this will improve as LLMs are trained on more thoroughly vetted data sets. Giving developers syntactic corrections as they write code also helps reduce time spent in code conformance checking.

- improved testing: Developers often shortcut the task of generating unit tests. The ability to easily generate meaningful test cases via AI-enabled tools can improve overall test effectiveness and coverage and consequently help improve system quality.

- software architecture development and analysis: Early adopters are already using design vocabulary-driven prompts to guide code generation using LLMs. Using multimodal inputs to communicate, analyze, or suggest snippets of software designs via images or diagrams with supporting text is an area of future research and can help augment the knowledge and impact of software architects.

- documentation: There are many applications of LLMs to document artifacts in the software development process, ranging from contracting language to regulatory requirements and inline comments for tricky code. When LLMs are given specific data, such as code, they can create cogent comments or documentation. The reverse is also true: when LLMs are given multiple documents, people can query them using prompt engineering to rapidly generate summaries or even answers to specific questions. For example, if a software engineer must follow an unfamiliar software standard or software acquisition policy, they can provide the standard or policy document to an LLM and use prompt engineering to summarize, document, ask specific questions, and even ask for examples. LLMs accelerate the learning of engineers who must use this knowledge to develop and sustain software-reliant systems.

- programming language translation: Legacy software and brownfield development are the norm for many systems developed and sustained today. Organizations often explore language translation efforts when they need to modernize their systems. While some good tools exist to support language translation, this process can be expensive and error prone. Portions of code can be translated to other programming languages using LLMs. Performing such translations at speed with increased accuracy gives developers more time to fill other software development gaps, such as rearchitecting and generating missing tests.

Advancing Software Engineering Using LLMs

Does generative AI really represent a highly productive future for software development? The slew of products entering the field of software development automation, including (but certainly not limited to) AI coding assistants such as Copilot, CodiumAI, Tabnine, SinCode, and CodeWhisperer, are positioned around this promise. The opportunity (and challenge) for the software engineering community is to discover whether the fast-paced improvements in LLM-based AI assistants fundamentally change how developers engage with and perform software development activities.

For example, an AI-augmented SDLC will likely have different task flows, efficiencies, and roadblocks than the current development lifecycles of Agile and iterative development workflows. In particular, rather than treating requirements, design, implementation, test, and deployment as separate steps of development, LLMs can bundle these tasks together, particularly when combined with recent LLM-based tools and plug-ins, such as LangChain and ChatGPT Advanced Data Analysis. This integration may influence the number of hand-offs and where they happen, shifting task dependencies within the SDLC.

While the excitement around LLMs continues, the jury is still out on whether AI-augmented software development powered by generative AI tools and other automated methods and tools will achieve the following ambitious objectives:

- 10x or more reduction in resource needs and error rates

- support for developers in managing ripple effects of changes in complex systems

- reduction in the need for extensive testing and analysis

- modernization of DoD codebases from memory-unsafe languages to memory-safe ones with a fraction of the effort currently required

- support for certification and assurance considerations, given the challenges posed by unpredictable emergent behavior

- enabling analysis of increasing software size and complexity through increased automation

Even if only a fraction of the above is accomplished, it will influence the flow of activities in the SDLC, likely enabling and accelerating shift-left activities in software engineering. The software engineering community has an opportunity to shape future research on developing and applying LLMs by gaining first-hand knowledge of how LLMs work and by asking key questions about how to use them effectively and ethically.

Cautions to Consider When Applying LLMs in Software Engineering

It is also important to acknowledge the drawbacks of applying LLMs to software engineering. The probabilistic and randomized selection of the next word in constructing LLM outputs can give the end user the impression of correctness, yet the content often contains errors, which are referred to as “hallucinations.” Hallucinations are a great concern for anyone who blindly applies the output generated by LLMs without taking the time and effort to verify the results. While significant improvements in models have been made recently, several areas of caution surround their generation and use, including

- data quality and bias: LLMs require enormous amounts of training data to learn language patterns, and their outputs are highly dependent on the data that they are trained on. Any issues that exist in the training data, such as biases and errors, will be amplified by LLMs. For example, ChatGPT-4 was originally trained on data through September 2021, which meant that until recently the model’s recommendations were unaware of information from the past two years. More generally, the quality and representativeness of the training data have a significant impact on the model’s performance and generalizability, so mistakes propagate easily.

- privacy and security: Privacy and security are key concerns in using LLMs, especially in environments, such as the DoD and intelligence communities, where information is often controlled or classified. The popular press is full of examples of leaked proprietary or sensitive information. For example, Samsung workers recently admitted that they unwittingly disclosed confidential data and code to ChatGPT. Applying these open models in sensitive settings not only risks yielding faulty results, but also risks unknowingly releasing confidential information and propagating it to others.

- content ownership: LLMs are generated using content developed by others, which may contain proprietary information and content creators’ intellectual property. Training on such data and reproducing its patterns in recommended output creates plagiarism concerns. Some content is boilerplate, and the ability to generate output in correct and understandable ways creates opportunities for improved efficiency. For other content, including code, however, it is not trivial to tell whether it is human or machine generated, especially where individual contributions or concerns such as certification matter. In the long run, the increasing popularity of LLMs will likely create boundaries around data sharing, open-source software, and open science. In a recent example, Japan’s government determined that copyrights are not enforceable with data used in AI training. Techniques to indicate ownership, or even to prevent certain data from being used to train these models, will likely emerge, though such techniques and attributes to complement LLMs are not yet widespread.

- carbon footprint: Vast amounts of computing power are required to train deep learning models, which is raising concerns about their carbon footprint. Research on different training techniques, algorithmic efficiencies, and varying allocation of computing resources will likely increase. In addition, improved data collection and storage techniques are expected to eventually reduce the impact of LLMs on the environment, but development of such techniques is still in its early phase.

- explainability and unintended consequences: Explainability of deep learning and ML models is a general concern in AI, including (but not limited to) LLMs. Users seek to understand the reasoning behind the recommendations, especially if such models will be used in safety-, mission-, or business-critical settings. Dependence on the quality of the data and the inability to trace recommendations to their source increase trust concerns. In addition, since LLM output sequences are generated using a randomized probabilistic approach, explaining the correctness of the recommendations creates added challenges.
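The randomized, probabilistic selection of the next word mentioned in this section can be pictured with a toy sampler. The sketch below is a simplification under stated assumptions: the candidate words and their scores are made up, and real models score tens of thousands of tokens with a neural network rather than a three-entry dictionary. Temperature-scaled softmax sampling is a common decoding approach, used here only as an illustration.

```python
import math
import random


def softmax(scores, temperature=1.0):
    """Turn raw scores into probabilities; lower temperature sharpens them."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next_word(candidates, temperature=1.0, rng=random):
    """Pick one word at random, weighted by its softmax probability."""
    words = list(candidates)
    probs = softmax([candidates[w] for w in words], temperature)
    return rng.choices(words, weights=probs, k=1)[0]


# Made-up scores for the word that follows "sorted() returns a new ...".
# Only "list" is correct; the other candidates are fluent but wrong.
scores = {"list": 3.0, "iterator": 1.5, "tuple": 0.5}

rng = random.Random(0)  # fixed seed so repeated runs give the same draws
draws = [sample_next_word(scores, temperature=1.0, rng=rng) for _ in range(1000)]
for word in scores:
    print(word, draws.count(word) / len(draws))
```

Most draws pick the correct word, but a share of them pick a plausible wrong one, and nothing in the output marks which is which. That is why generated content needs independent verification and why tracing a single recommendation back to a cause is difficult.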

Areas of Research and Innovation in LLMs

The cautions and risks related to LLMs described in this post motivate the need for new research and innovations. We are already starting to see an increased research focus on foundation models. Other areas of research are also emerging, such as creating integrated development environments with the latest LLM capabilities and reliable data collection and use techniques that are targeted at software engineering. Here are some research areas related to software engineering where we expect to see significant focus and progress in the near future:

- accelerating upstream software engineering activities: LLMs’ potential to assist in documentation-related activities extends to software acquisition pre-award documentation preparation and post-award reporting and milestone activities. For example, LLMs can be applied as a problem-solving tool to help teams tasked with assessing the quality or performance of software-reliant acquisition programs by assisting acquirers and developers in analyzing large repositories of documents related to source selection, milestone reviews, and test activities.

- generalizability of models: LLMs currently work by pretraining on a large corpus of content followed by fine-tuning on specific tasks. Although the architecture of an LLM is task independent, its application to specific tasks requires further fine-tuning with a significantly large number of examples. Researchers are already focusing on generalizing these models to applications where data are sparse (known as few-shot learning).

- new intelligent integrated development environments (IDEs): If we are convinced by preliminary evidence that some programming tasks can be accelerated and improved in correctness by LLM-based AI assistants, then conventional IDEs will need to incorporate these assistants. This process has already begun with the integration of LLMs into popular IDEs, such as Android Studio, IntelliJ, and Visual Studio. When intelligent assistants are integrated into IDEs, the software development process becomes more interactive with the tool infrastructure while requiring more expertise from developers to help vet the outcomes.

- creation of domain-specific LLMs: Given the limitations in training data and potential privacy and security concerns, the ability to train LLMs that are specific to certain domains and tasks provides an opportunity to manage the risks in security, privacy, and proprietary information, while reaping the benefits of generative AI capabilities. Creating domain-specific LLMs is a new frontier with opportunities to leverage LLMs while reducing the risk of hallucinations, which is particularly important in the healthcare and financial domains. FinGPT is one example of a domain-specific LLM.

- data as a unit of computation: The most critical input driving the next generation of AI innovations is not only the algorithms, but also data. A significant portion of computer science and software engineering talent will thus shift to data science and data engineering careers. Moreover, we need more tool-supported innovations in data collection, data quality assessment, and data ownership rights management. This research area has significant gaps that require skill sets spanning computer science, policy, and engineering, as well as deep knowledge of security, privacy, and ethics.

The Way Forward in LLM Innovation for Software Engineering

After the two winters of AI in the late 1970s and early 1990s, we have entered not only a period of AI blossoms, but also exponential growth in funding, use, and alarm about AI. Advances in LLMs are without a doubt huge contributors to the growth of all three. Whether the next phase of innovations in AI-enabled software engineering includes capabilities beyond our imagination or becomes yet another AI winter largely depends on our ability to (1) continue technical innovations and (2) practice software engineering and computer science with the highest level of ethical standards and responsible conduct. We must be bold in experimenting with the potential of LLMs to improve software development, yet also be cautious and not forget the fundamental principles and practices of engineering ethics, rigor, and empirical validation.

As described above, there are many opportunities for research and innovation in applying LLMs to software engineering. At the SEI we have ongoing initiatives that include identifying DoD-relevant scenarios and experimenting with the application of LLMs, as well as pushing the boundaries of applying generative AI technologies to software engineering tasks. We will report our progress in the coming months. The best opportunities for applying LLMs in the software engineering lifecycle may be in the activities that play to the strengths of LLMs, which is a topic we will explore in detail in upcoming blog posts.