NVIDIA’s AI Enterprise software shown at Supercomputing ’23 connects accelerated computing to large language model use cases.

At the Supercomputing ’23 conference in Denver on Nov. 13, NVIDIA announced expanded availability of the NVIDIA GH200 Grace Hopper Superchip for high-performance computing and of HGX H200 Systems and Cloud Instances for AI training.


NVIDIA HGX H200 supercomputer enhances generative AI and high-performance computing workloads

The HGX H200 supercomputing platform, which is built on the NVIDIA H200 Tensor Core GPU, will be available through server manufacturers and hardware providers that have partnered with NVIDIA. The HGX H200 is expected to start shipping from cloud providers and manufacturers in Q2 2024.

Amazon Web Services, Google Cloud, Microsoft Azure, CoreWeave, Lambda, Vultr and Oracle Cloud Infrastructure will offer H200-based instances in 2024.

NVIDIA HGX H200 features the following:

- NVIDIA H200 Tensor Core GPU for generative AI and high-performance computing workloads that require massive amounts of memory (141 GB of memory at 4.8 terabytes per second).

- Double the inference speed on Llama 2, a 70 billion-parameter LLM, compared with the NVIDIA H100.

- Interoperable with the NVIDIA GH200 Grace Hopper Superchip with HBM3e.

- Deployable in any type of data center, including on servers from existing partners ASRock Rack, ASUS, Dell Technologies, Eviden, GIGABYTE, Hewlett Packard Enterprise, Ingrasys, Lenovo, QCT, Supermicro, Wistron and Wiwynn.

- Can provide inference and training for the largest LLMs, beyond 175 billion parameters.

- Over 32 petaflops of FP8 deep learning compute and 1.1TB of aggregate high-bandwidth memory.
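The memory figures above can be sanity-checked with back-of-the-envelope arithmetic. The sketch below assumes an eight-GPU HGX H200 board (the article gives only the aggregate figure) and counts model weights alone at one byte per parameter for FP8, ignoring KV cache, activations and parallelism overhead. The function names are illustrative, not part of any NVIDIA tool.

```python
# Back-of-the-envelope arithmetic for the HGX H200 figures quoted above.
# Assumption: an 8-GPU HGX H200 board; the article states only the 1.1TB aggregate.

GPU_MEMORY_GB = 141       # per-GPU memory capacity, from the article
GPU_BANDWIDTH_TBPS = 4.8  # per-GPU memory bandwidth, from the article
GPUS_PER_BOARD = 8        # assumption: 8-way HGX H200 configuration


def aggregate_memory_tb(gpus: int = GPUS_PER_BOARD) -> float:
    """Total GPU memory across the board, in terabytes (1 TB = 1,000 GB)."""
    return gpus * GPU_MEMORY_GB / 1000


def weights_gb(params_billion: float, bytes_per_param: float) -> float:
    """Memory needed just to hold model weights (ignores KV cache and activations)."""
    return params_billion * bytes_per_param


def full_sweep_ms(gb: float) -> float:
    """Time for one GPU to read `gb` gigabytes from memory once, in milliseconds."""
    seconds = gb / (GPU_BANDWIDTH_TBPS * 1000)  # TB/s converted to GB/s
    return seconds * 1000


if __name__ == "__main__":
    board_gb = aggregate_memory_tb() * 1000
    need = weights_gb(175, 1)  # a 175 billion-parameter model at FP8
    print(f"Aggregate memory: {aggregate_memory_tb():.3f} TB")  # 8 * 141 GB = 1.128 TB
    print(f"175B FP8 weights: {need:.0f} GB")
    print(f"Fits on one GPU: {need <= GPU_MEMORY_GB}, fits on the board: {need <= board_gb}")
    print(f"Reading all {GPU_MEMORY_GB} GB once: {full_sweep_ms(GPU_MEMORY_GB):.1f} ms")
```

Under these assumptions, 8 × 141 GB comes to roughly 1.13 TB, consistent with the quoted 1.1TB. The FP8 weights of a 175 billion-parameter model (about 175 GB) exceed a single H200's memory but fit comfortably across the board, and at 4.8 terabytes per second one GPU can read its full 141 GB in about 29 ms.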

“To create intelligence with generative AI and HPC applications, vast amounts of data must be efficiently processed at high speed using large, fast GPU memory,” said Ian Buck, vice president of hyperscale and HPC at NVIDIA, in a press release.

NVIDIA’s GH200 chip is suited to supercomputing and AI training

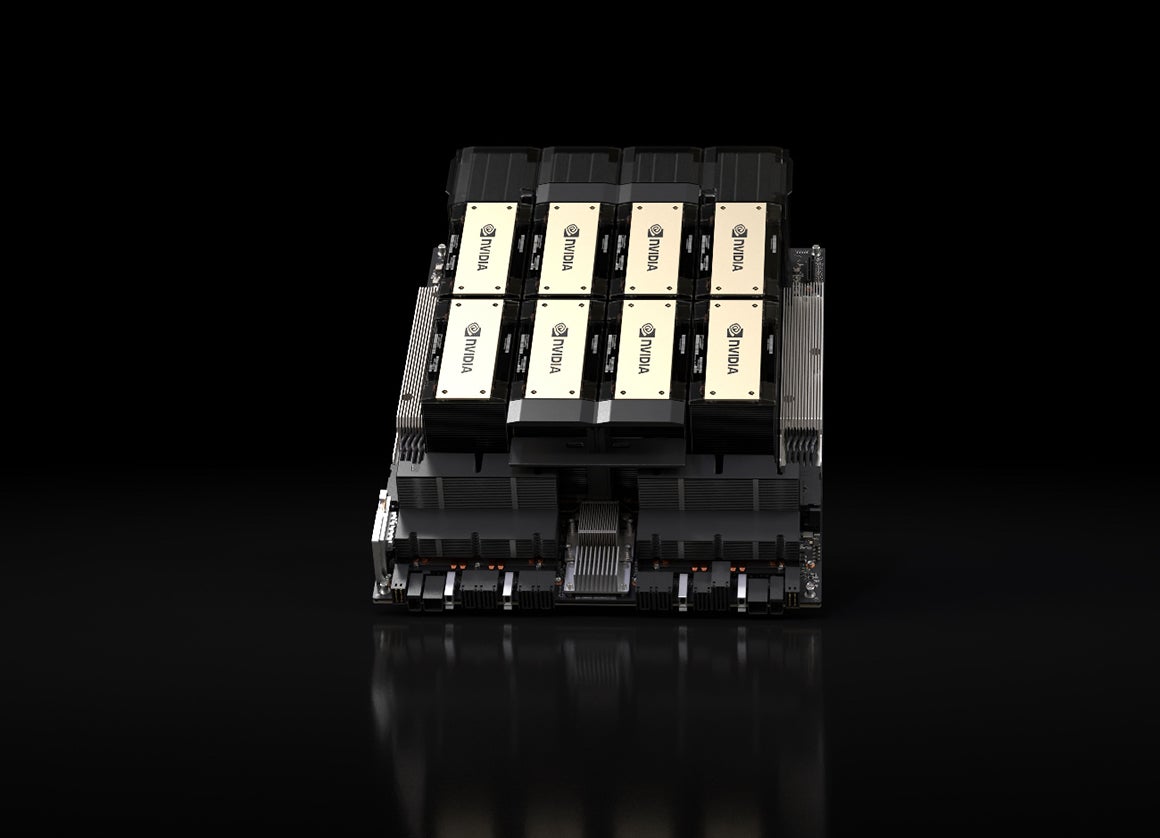

NVIDIA will now offer HPE Cray EX2500 supercomputers with the GH200 chip (Figure A) for enhanced supercomputing and AI training. HPE announced a supercomputing solution for generative AI built in part on the HPE Cray EX2500 supercomputer configuration with NVIDIA’s GH200.

Figure A

The GH200 combines the Arm-based NVIDIA Grace CPU and Hopper GPU architectures using NVIDIA NVLink-C2C interconnect technology. The GH200 will be packaged inside systems from Dell Technologies, Eviden, Hewlett Packard Enterprise, Lenovo, QCT and Supermicro, NVIDIA announced at Supercomputing ’23.

SEE: NVIDIA announced AI training-as-a-service in July (TechRepublic)

“Organizations are rapidly adopting generative AI to accelerate business transformations and technological breakthroughs,” said Justin Hotard, executive vice president and general manager of HPC, AI and Labs at HPE, in a blog post. “Working with NVIDIA, we’re excited to deliver a full supercomputing solution for generative AI, powered by technologies like Grace Hopper, which will make it easy for customers to accelerate large-scale AI model training and tuning at new levels of efficiency.”

What can the GH200 enable?

Projects like HPE’s show that supercomputing has applications for generative AI training, which could be used in enterprise computing. The GH200 interoperates with the NVIDIA AI Enterprise software suite for workloads such as speech, recommender systems and hyperscale inference. Combined with an enterprise’s own data, it could be used to run large language models trained on that data.

NVIDIA makes new supercomputing research center partnerships

NVIDIA announced partnerships with supercomputing centers around the world. Germany’s Jülich Supercomputing Centre’s scientific supercomputer, JUPITER, will use GH200 superchips. JUPITER will be used to create AI foundation models for climate and weather research, materials science, drug discovery, industrial engineering and quantum computing for the scientific community. The Texas Advanced Computing Center’s Vista supercomputer and the University of Bristol’s upcoming Isambard-AI supercomputer will also use GH200 superchips.

A variety of cloud providers offer GH200 access

Cloud providers Lambda and Vultr offer NVIDIA GH200 in early access now. Oracle Cloud Infrastructure and CoreWeave plan to offer NVIDIA GH200 instances in the future, starting in Q1 2024 for CoreWeave; Oracle did not specify a date.