The Service Worker API is the Dremel of the web platform. It offers incredibly broad utility while also yielding resiliency and better performance. If you haven't used Service Worker yet (and you couldn't be blamed if so, as it hasn't seen wide adoption as of 2020), it goes something like this:


- On the initial visit to a website, the browser registers what amounts to a client-side proxy powered by a comparably paltry amount of JavaScript that, like a Web Worker, runs on its own thread.

- After the Service Worker's registration, you can intercept requests and decide how to respond to them in the Service Worker's fetch() event.
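For reference, registration itself takes only a few lines. The sketch below is a minimal version under stated assumptions: /sw.js is a hypothetical script path, and the window object is passed in (defaulting to the real global) so the function can be exercised outside a browser.

```javascript
// Minimal registration sketch. "/sw.js" is a hypothetical script path.
// `win` defaults to the browser's global object; a stub can be passed instead.
function registerServiceWorker(win = globalThis, scriptUrl = "/sw.js") {
  // Feature check: browsers without Service Worker support simply skip this.
  if (!win.navigator || !("serviceWorker" in win.navigator)) {
    return false;
  }
  // Register after the page has loaded so registration (and the worker's
  // install-time precaching) doesn't compete with the first visit's requests.
  win.addEventListener("load", () => {
    win.navigator.serviceWorker.register(scriptUrl).catch(err => {
      console.error("Service Worker registration failed:", err);
    });
  });
  return true;
}
```

In a page script, calling registerServiceWorker() with no arguments uses the real window. Waiting for the load event is a common choice rather than a requirement.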

What you decide to do with requests you intercept is a) your call and b) dependent on your website. You can rewrite requests, precache static assets during install, provide offline functionality, and (as will be our eventual focus) deliver smaller HTML payloads and better performance for repeat visitors.

Getting out of the woods

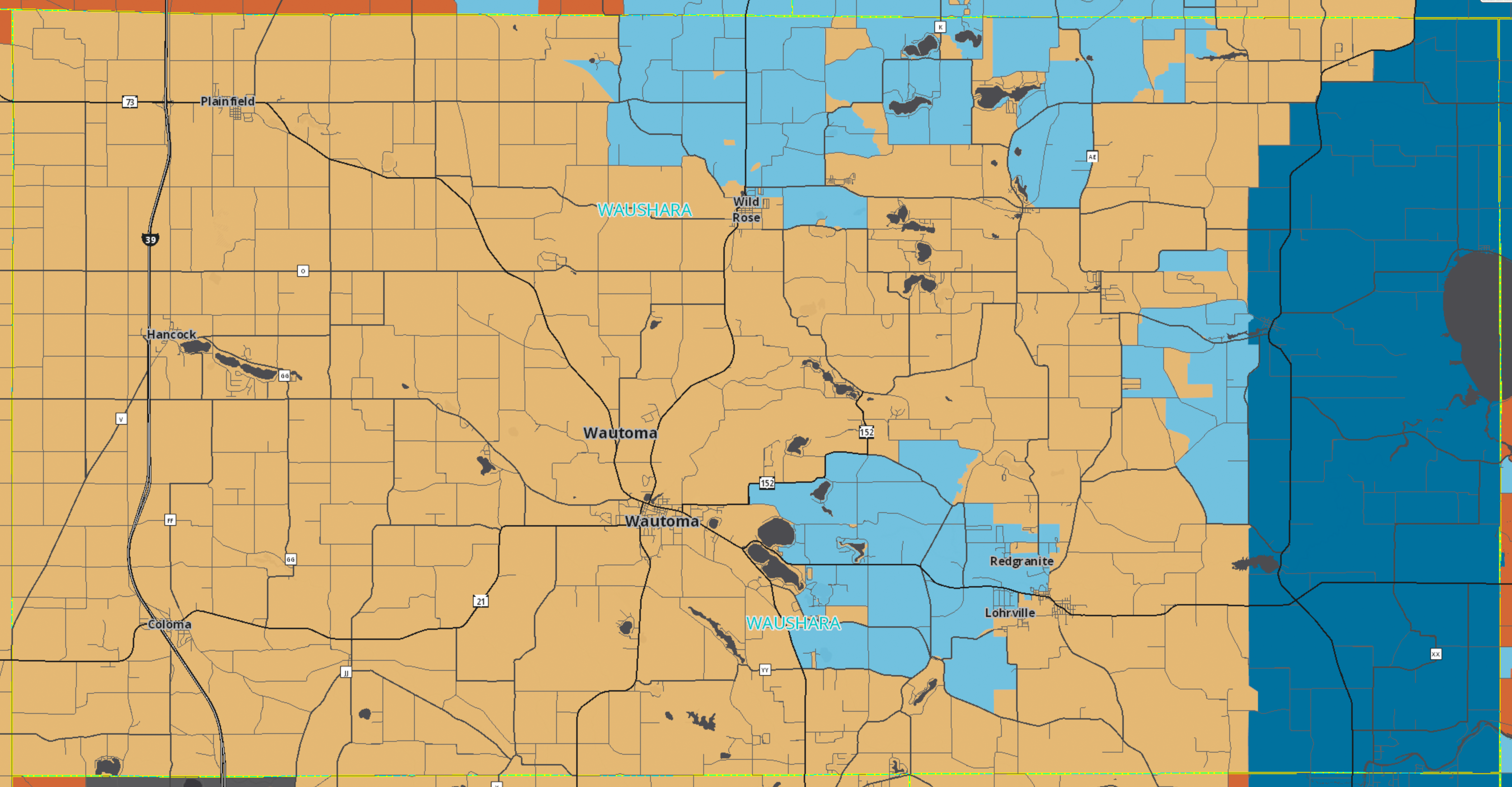

Weekly Timber is a client of mine that provides logging services in central Wisconsin. For them, a fast website is vital. Their business is located in Waushara County, and as in many rural stretches of the United States, network quality and reliability aren't great.

Wisconsin has farmland for days, but it also has plenty of forests. When you need a company that cuts logs, Google is probably your first stop. How fast a given logging company's website is might be enough to get you looking elsewhere if you're left waiting too long on a crappy network connection.

I initially didn't believe a Service Worker was necessary for Weekly Timber's website. After all, if things were plenty fast to start with, why complicate things? On the other hand, knowing that my client serves not just Waushara County but much of central Wisconsin, even a barebones Service Worker could be the kind of progressive enhancement that adds resilience in the places it might be needed most.

The first Service Worker I wrote for my client's website, which I'll refer to henceforth as the "standard" Service Worker, used three well-documented caching strategies:

- Precache CSS and JavaScript assets for all pages when the Service Worker is installed (it is registered once the window's load event fires).

- Serve static assets out of CacheStorage if available. If a static asset isn't in CacheStorage, retrieve it from the network, then cache it for future visits.

- For HTML assets, hit the network first and place the HTML response into CacheStorage. If the network is unavailable the next time the visitor arrives, serve the cached markup from CacheStorage.
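As a rough sketch of the second and third strategies, here are cache-first and network-first helpers. They aren't this site's actual code: the cache and fetch function are passed in (in a Service Worker, a Cache from caches.open() and the global fetch()), and skipping non-OK responses is a choice of this sketch.

```javascript
// `cache` is anything with match()/put(), such as a Cache from caches.open().
// `fetchFn` defaults to the global fetch(); both are injectable.

// Static assets: serve from the cache, falling back to (and filling from) the network.
async function cacheFirst(request, cache, fetchFn = fetch) {
  const cached = await cache.match(request);
  if (cached) {
    return cached;
  }
  const response = await fetchFn(request);
  if (response.ok) {
    // A body can only be read once, so store a clone and return the original.
    await cache.put(request, response.clone());
  }
  return response;
}

// HTML: try the network first, caching what comes back, and use the cache
// only when the network request fails outright.
async function networkFirst(request, cache, fetchFn = fetch) {
  try {
    const response = await fetchFn(request);
    if (response.ok) {
      await cache.put(request, response.clone());
    }
    return response;
  } catch (err) {
    const cached = await cache.match(request);
    if (cached) {
      return cached;
    }
    throw err; // Nothing cached either: let the failure surface.
  }
}
```

Inside a fetch() event handler, these might be wired up as event.respondWith(caches.open(cacheName).then(cache => cacheFirst(event.request, cache))), with networkFirst() used instead when request.mode is "navigate".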

These are neither new nor special strategies, but they provide two benefits:

- Offline capability, which is handy when network conditions are spotty.

- A performance boost for loading static assets.

That performance boost translated to a 42% and 48% decrease in the median time to First Contentful Paint (FCP) and Largest Contentful Paint (LCP), respectively. Better yet, these insights are based on Real User Monitoring (RUM). That means these gains aren't just theoretical, but a real improvement for real people.

Figure: Because the Service Worker doesn't need to access the network, it takes about 23 milliseconds to "download" the asset from CacheStorage.

This performance boost comes from bypassing the network entirely for static assets already in CacheStorage, particularly render-blocking stylesheets. A similar benefit is realized when we rely on the HTTP cache, but the FCP and LCP improvements I just described are relative to pages with a primed HTTP cache and no installed Service Worker.

If you're wondering why CacheStorage and the HTTP cache aren't equal, it's because the HTTP cache, at least in some cases, may still involve a trip to the server to verify asset freshness. Cache-Control's immutable flag gets around this, but immutable doesn't have great support yet. A long max-age value works, too, but the combination of the Service Worker API and CacheStorage gives you a lot more flexibility.

Details aside, the takeaway is that the simplest and most well-established Service Worker caching practices can improve performance, potentially more than well-configured Cache-Control headers can. Even so, Service Worker is an incredible technology with far more possibilities. It's possible to go further, and I'll show you how.

A better, faster Service Worker

The web loves itself some "innovation," which is a word we equally love to throw around. To me, true innovation isn't creating new frameworks or patterns solely for the benefit of developers; it's whether those inventions benefit the people who end up using whatever it is we slap up on the web. The priority of constituencies is a thing we ought to respect. Users above all else, always.

The Service Worker API's innovation space is considerable. How you work within that space can have a big effect on how the web is experienced. Things like navigation preload and ReadableStream have taken Service Worker from great to killer. We can do the following with these new capabilities, respectively:

- Reduce Service Worker latency by parallelizing Service Worker startup time and navigation requests.

- Stream content in from CacheStorage and the network.

Moreover, we're going to combine these capabilities and pull out one more trick: precache header and footer partials, then combine them with content partials from the network. This not only reduces how much data we download from the network, but also improves perceptual performance for repeat visits. That's innovation that helps everyone.

Grizzled, I turn to you and say, "Let's do this."

Laying the groundwork

If the idea of combining precached header and footer partials with network content on the fly seems like a Single Page Application (SPA), you're not far off. Like an SPA, you'll need to apply the "app shell" model to your website. Only instead of a client-side router plowing content into one piece of minimal markup, you have to think of your website as three separate parts:

- The header.

- The content.

- The footer.

For my client's website, that looks like this:

Figure: The header and footer partials come from CacheStorage, whereas the content partial is retrieved from the network unless the user is offline.

The thing to remember here is that the individual partials don't have to be valid markup in the sense that all tags need to be closed within each partial. The only thing that counts in the end is that the combination of these partials is valid markup.

To start, you'll need to precache separate header and footer partials when the Service Worker is installed. For my client's website, these partials are served from the /partial-header and /partial-footer pathnames:

```javascript
self.addEventListener("install", event => {
  const cacheName = "fancy_cache_name_here";
  const precachedAssets = [
    "/partial-header", // The header partial
    "/partial-footer", // The footer partial
    // Other assets worth precaching
  ];

  // Keep the worker in the installing phase until everything is cached,
  // then skip the waiting phase so it activates right away
  event.waitUntil(caches.open(cacheName).then(cache => {
    return cache.addAll(precachedAssets);
  }).then(() => {
    return self.skipWaiting();
  }));
});
```

Every page must be fetchable both as a content partial without the header and footer, and as a full page with the header and footer. This is key because the initial visit to a page won't be controlled by a Service Worker. Once the Service Worker takes over, you then serve content partials and assemble them into full responses with the header and footer partials from CacheStorage.

If your site is static, this means generating a whole other mess of markup partials that you can rewrite requests to in the Service Worker's fetch() event. If your website has a back end, as is the case with my client, you can use an HTTP request header to instruct the server to deliver full pages or content partials.
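For the static-site case, the rewrite can be as simple as mapping each page URL to a pre-generated partial. The /partials/<path>/index.html layout below is purely hypothetical; substitute whatever your build step actually produces.

```javascript
// Hypothetical layout: the content partial for /<path>/ is generated at
// /partials/<path>/index.html. Returns the partial's absolute URL.
function toPartialUrl(pageUrl) {
  const url = new URL(pageUrl);
  // Normalize "/about" and "/about/" to the same partial.
  const path = url.pathname.endsWith("/") ? url.pathname : `${url.pathname}/`;
  url.pathname = `/partials${path}index.html`;
  // Assumes static partials never vary by query string.
  url.search = "";
  url.hash = "";
  return url.href;
}
```

In the Service Worker, a navigation request could then be answered with fetch(toPartialUrl(request.url)) instead of fetching the full page.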

The hard part is putting all the pieces together, but we'll do just that.

Putting it all together

Writing even a basic Service Worker can be challenging, but things get real complicated real fast when assembling multiple responses into one. One reason for this is that in order to avoid the Service Worker startup penalty, we'll need to set up navigation preload.

Implementing navigation preload

Navigation preload addresses the problem of Service Worker startup time, which delays navigation requests to the network. The last thing you want to do with a Service Worker is hold up the show.

Navigation preload must be explicitly enabled. Once enabled, the Service Worker won't hold up navigation requests during startup. Navigation preload is enabled in the Service Worker's activate event:

```javascript
self.addEventListener("activate", event => {
  const cacheName = "fancy_cache_name_here";
  const preloadAvailable = "navigationPreload" in self.registration;

  event.waitUntil(caches.keys().then(keys => {
    return Promise.all([
      // Spread the deletions so Promise.all() waits on each one; a nested
      // array would be treated as an already-resolved value
      ...keys.filter(key => {
        return key !== cacheName;
      }).map(key => {
        return caches.delete(key);
      }),
      self.clients.claim(),
      preloadAvailable ? self.registration.navigationPreload.enable() : true
    ]);
  }));
});
```

Because navigation preload isn't supported everywhere, we have to do the usual feature check, which in the above example we store in the preloadAvailable variable.

Additionally, we need to use Promise.all() to resolve multiple asynchronous operations before the Service Worker activates. This includes pruning old caches, as well as waiting for both clients.claim() (which tells the Service Worker to assert control immediately rather than waiting until the next navigation) and navigation preload to be enabled.

A ternary operator is used to enable navigation preload in supporting browsers and avoid throwing errors in browsers that don't support it. If preloadAvailable is true, we enable navigation preload. If it isn't, we pass a Boolean, which Promise.all() treats as an already-resolved value, so it won't affect how Promise.all() resolves.
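Here's a small standalone demonstration of that Boolean behavior, using setTimeout() as a stand-in for real activation work. It also shows a related gotcha: an array nested inside the Promise.all() array is likewise treated as a plain value, so the promises inside it aren't awaited unless the array is spread into the outer one. The labels are arbitrary.

```javascript
// Resolves after `ms` milliseconds and records `label` in `log` when it does.
function settleAfter(ms, log, label) {
  return new Promise(resolve => {
    setTimeout(() => {
      log.push(label);
      resolve(label);
    }, ms);
  });
}

async function demo() {
  const log = [];
  // A plain Boolean stands in for a skipped navigationPreload.enable().
  const flat = await Promise.all([settleAfter(10, log, "claim"), true]);
  // A nested array is also just a value: the promise inside isn't awaited.
  const nested = await Promise.all([[settleAfter(30, log, "delete-old-cache")], true]);
  const finishedBeforeNestedSettled = !log.includes("delete-old-cache");
  // Spreading the array makes Promise.all() wait on each promise.
  const spread = await Promise.all([...[settleAfter(10, log, "delete-old-cache-2")], true]);
  return { flat, nested, finishedBeforeNestedSettled, spread };
}
```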

With navigation preload enabled, we need to write code in our Service Worker's fetch() event handler to make use of the preloaded response:

```javascript
self.addEventListener("fetch", event => {
  const { request } = event;

  // Static asset handling code omitted for brevity
  // ...

  // Check if this is a request for a document
  if (request.mode === "navigate") {
    // preloadResponse is undefined where navigation preload is unsupported,
    // so wrap it in Promise.resolve() to handle both cases the same way
    const networkContent = Promise.resolve(event.preloadResponse).then(response => {
      if (response) {
        addResponseToCache(request, response.clone());
        return response;
      }

      // No preload: ask the server for the content partial explicitly
      return fetch(request.url, {
        headers: {
          "X-Content-Mode": "partial"
        }
      }).then(response => {
        addResponseToCache(request, response.clone());
        return response;
      });
    }).catch(() => {
      // Offline: fall back to the cached content partial for this URL
      return caches.match(request.url);
    });

    // More to come...
  }
});
```

Though this isn't the entirety of the Service Worker's fetch() event code, there's a lot that needs explaining:

- The preloaded response is available in event.preloadResponse. However, as Jake Archibald notes, the value of event.preloadResponse will be undefined in browsers that don't support navigation preload. Therefore, we must pass event.preloadResponse to Promise.resolve() to avoid compatibility issues.

- We adapt in the resulting then callback. If a preloaded response is available, we use it and add it to CacheStorage via an addResponseToCache() helper function. If not, we send a fetch() request to the network to get the content partial using a custom X-Content-Mode header with a value of partial.

- Should the network be unavailable, we fall back to the most recently cached content partial for that URL in CacheStorage.

- The response, regardless of where it was procured from, is then stored in a variable named networkContent that we use later.
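The article doesn't show addResponseToCache(). A minimal version might look like the following, assuming it writes to the same cache name used at install time. Skipping non-OK responses and swallowing storage errors are choices of this sketch, not necessarily what runs on Weekly Timber's site; the cacheStorage parameter exists only so the helper can run outside a Service Worker.

```javascript
// Hypothetical helper; assumes the cache name used in the install handler.
const CACHE_NAME = "fancy_cache_name_here";

// Callers pass a clone, since the original response is returned to the browser.
function addResponseToCache(request, response, cacheStorage = globalThis.caches) {
  // Don't let error pages overwrite a good offline fallback.
  if (!response.ok) {
    return Promise.resolve(false);
  }
  return cacheStorage.open(CACHE_NAME)
    .then(cache => cache.put(request, response))
    .then(() => true)
    .catch(() => false); // Quota or storage errors shouldn't break navigation.
}
```

Because the fetch handler above doesn't wait on the returned promise, the browser could terminate the worker before the write finishes; passing the promise to event.waitUntil() is one way to prevent that.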

How the content partial is retrieved is tricky. With navigation preload enabled, a special Service-Worker-Navigation-Preload header with a value of true is added to navigation requests. We then work with that header on the back end to ensure the response is a content partial rather than the full page markup.

However, because navigation preload isn't available in all browsers, we send a different header in those scenarios. In Weekly Timber's case, we fall back to a custom X-Content-Mode header. In my client's PHP back end, I've created some handy constants:

```php
<?php
// Is this a navigation preload request?
define("NAVIGATION_PRELOAD", isset($_SERVER["HTTP_SERVICE_WORKER_NAVIGATION_PRELOAD"]) && stristr($_SERVER["HTTP_SERVICE_WORKER_NAVIGATION_PRELOAD"], "true") !== false);

// Is this an explicit request for a content partial?
define("PARTIAL_MODE", isset($_SERVER["HTTP_X_CONTENT_MODE"]) && stristr($_SERVER["HTTP_X_CONTENT_MODE"], "partial") !== false);

// If either is true, this is a request for a content partial
define("USE_PARTIAL", NAVIGATION_PRELOAD === true || PARTIAL_MODE === true);
?>
```

From there, the USE_PARTIAL constant is used to adapt the response:

```php
<?php
// Full-page requests get the header; partial requests skip it
if (USE_PARTIAL === false) {
  require_once("partial-header.php");
}

require_once("includes/home.php");

// Likewise for the footer
if (USE_PARTIAL === false) {
  require_once("partial-footer.php");
}
?>
```

The thing to be hip to here is that you should specify a Vary header for HTML responses to take the Service-Worker-Navigation-Preload header (and in this case, the X-Content-Mode header) into account for HTTP caching purposes, assuming you're caching HTML at all, which may not be the case for you.
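If your back end is JavaScript rather than PHP, the same decision could be sketched as follows. This is a hypothetical Node.js-style equivalent, not part of Weekly Timber's stack: headers is a plain object with lowercase keys, as Node's request.headers provides, and the Vary value covers both request headers discussed above.

```javascript
// Mirrors the PHP constants: a case-insensitive substring match on either header.
function wantsPartial(headers) {
  const preload = String(headers["service-worker-navigation-preload"] || "").toLowerCase();
  const mode = String(headers["x-content-mode"] || "").toLowerCase();
  return preload.includes("true") || mode.includes("partial");
}

// Returns either the full page or just the content partial, and tells HTTP
// caches that the body depends on both request headers.
function renderPage(headers, { header, content, footer }) {
  const body = wantsPartial(headers) ? content : header + content + footer;
  return {
    headers: {
      "Content-Type": "text/html; charset=utf-8",
      "Vary": "Service-Worker-Navigation-Preload, X-Content-Mode"
    },
    body
  };
}
```

With Node's http module, the result would be sent with res.writeHead(200, page.headers) followed by res.end(page.body).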

With our handling of navigation preloads complete, we can move on to the work of streaming content partials from the network and stitching them together with the header and footer partials from CacheStorage into a single response that the Service Worker will provide.

Streaming partial content and stitching together responses

While the header and footer partials will be available almost instantaneously because they've been in CacheStorage since the Service Worker's installation, it's the content partial we retrieve from the network that will be the bottleneck. It's therefore vital that we stream responses so we can start pushing markup to the browser as quickly as possible. ReadableStream can do this for us.

This ReadableStream business is a mind-bender. Anyone who tells you it's "easy" is whispering sweet nothings to you. It's hard. After I wrote my own function to merge streamed responses and messed up a critical step (which ended up not improving page performance, mind you), I modified Jake Archibald's mergeResponses() function to suit my needs:

```javascript
async function mergeResponses (responsePromises) {
  // One reader per response, in order: header, content, footer
  const readers = responsePromises.map(responsePromise => {
    return Promise.resolve(responsePromise).then(response => {
      return response.body.getReader();
    });
  });

  let doneResolve,
      doneReject;

  // Settles when the merged stream finishes (or fails)
  const done = new Promise((resolve, reject) => {
    doneResolve = resolve;
    doneReject = reject;
  });

  const readable = new ReadableStream({
    async pull (controller) {
      try {
        // Awaited inside try so a failed response also rejects `done`
        const reader = await readers[0];
        const { done, value } = await reader.read();

        if (done) {
          // This response is exhausted; move on to the next one
          readers.shift();

          if (!readers[0]) {
            controller.close();
            doneResolve();

            return;
          }

          return this.pull(controller);
        }

        controller.enqueue(value);
      } catch (err) {
        doneReject(err);
        throw err;
      }
    },
    cancel () {
      doneResolve();
    }
  });

  const headers = new Headers();
  headers.append("Content-Type", "text/html");

  return {
    done,
    response: new Response(readable, {
      headers
    })
  };
}
```

As usual, there's a lot going on:

- mergeResponses() accepts an argument named responsePromises, which is an array of Response objects (or promises for them) returned from a navigation preload, fetch(), or caches.match(). Assuming the network is available, this will always contain three responses: two from caches.match() and (hopefully) one from the network.

- Before we can stream the responses in the responsePromises array, we must map responsePromises to an array containing one reader for each response. Each reader is used later in a ReadableStream() constructor to stream each response's contents.

- A promise named done is created. In it, we assign the promise's resolve() and reject() functions to the external variables doneResolve and doneReject, respectively. These will be used in the ReadableStream() to signal whether the stream is finished or has hit a snag.

- The new ReadableStream() instance is created with a name of readable. As responses stream in from CacheStorage and the network, their contents will be appended to readable.

- The stream's pull() method streams the contents of the first response in the array. Unless the stream is canceled, the reader for each response is discarded by calling the readers array's shift() method once that response is fully streamed. This repeats until there are no more readers to process.

- When all is done, the merged stream of responses is returned as a single response with a Content-Type header value of text/html.

This is much simpler if you use TransformStream, but depending on when you read this, that may not be an option for every browser. For now, we'll have to stick with this approach.
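For comparison, here's a rough sketch of the TransformStream approach, assuming a runtime where TransformStream and pipeTo() are available (current browsers and Node.js 18 or later). It returns the same { done, response } shape as mergeResponses(); the function name mergeResponsesWithTransform is hypothetical, and this isn't the code running on Weekly Timber's site.

```javascript
function mergeResponsesWithTransform(responsePromises) {
  // An identity TransformStream: whatever is written to `writable`
  // comes out of `readable` unchanged.
  const { readable, writable } = new TransformStream();

  const done = (async () => {
    try {
      for (const responsePromise of responsePromises) {
        const response = await responsePromise;
        // preventClose keeps `writable` open so the next body can follow.
        // Each pipe finishes before the next starts, preserving order.
        await response.body.pipeTo(writable, { preventClose: true });
      }
      // Every body has been written: close the writable side, ending the stream.
      await writable.getWriter().close();
    } catch (err) {
      // Error the merged stream so the browser isn't left waiting forever.
      await writable.abort(err);
      throw err;
    }
  })();

  return {
    done,
    response: new Response(readable, { headers: { "Content-Type": "text/html" } })
  };
}
```

pipeTo() takes care of reading, back-pressure, and ordering, which is most of what the hand-written pull() method above does manually.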

Now let's revisit the Service Worker's fetch() event from earlier and apply the mergeResponses() function:

```javascript
self.addEventListener("fetch", event => {
  const { request } = event;

  // Static asset handling code omitted for brevity
  // ...

  // Check if this is a request for a document
  if (request.mode === "navigate") {
    // Navigation preload/fetch() fallback code omitted.
    // ...

    // respondWith() must be called synchronously in the event handler,
    // so we can't await here. Promise.resolve() handles mergeResponses()
    // returning either a promise or a plain { done, response } object.
    const merged = Promise.resolve(mergeResponses([
      caches.match("/partial-header"),
      networkContent,
      caches.match("/partial-footer")
    ]));

    event.waitUntil(merged.then(({ done }) => done));
    event.respondWith(merged.then(({ response }) => response));
  }
});
```

At the end of the fetch() event handler, we pass the header and footer partials from CacheStorage, along with networkContent, to the mergeResponses() function. The merged response goes to the fetch() event's respondWith() method, which serves it on behalf of the Service Worker, while waitUntil() keeps the Service Worker alive until the stream is done.

Are the results worth the hassle?

This is a lot of stuff to do, and it's complicated! You might mess something up, or maybe your website's architecture isn't well-suited to this exact approach. So it's important to ask: are the performance benefits worth the work? In my view? Yes! The synthetic performance gains aren't bad at all:

Synthetic tests don't measure performance for anything except the specific device and internet connection they're performed on. Even so, these tests were conducted on a staging version of my client's website with a low-end Nokia 2 Android phone on a throttled "Fast 3G" connection in Chrome's developer tools. Each category was tested ten times on the homepage. The takeaways here are:

- Having no Service Worker at all is slightly faster than the "standard" Service Worker, whose caching patterns are simpler than the streaming variant's. Like, ever so slightly faster. This may be due to the delay introduced by Service Worker startup; however, the RUM data I'll go over shows a different case.

- Both LCP and FCP are tightly coupled in scenarios where there's no Service Worker or when the "standard" Service Worker is used. This is because the content of the page is pretty simple and the CSS is fairly small. The Largest Contentful Paint is usually the opening paragraph on a page.

- However, the streaming Service Worker decouples FCP and LCP because the header partial streams in right away from CacheStorage.

- Both FCP and LCP are lower with the streaming Service Worker than in the other cases.

The benefits of the streaming Service Worker for real users are pronounced. For FCP, we receive a 79% improvement over no Service Worker at all, and a 63% improvement over the "standard" Service Worker. The benefits for LCP are more subtle. Compared to no Service Worker at all, we realize a 41% improvement in LCP, which is incredible! However, compared to the "standard" Service Worker, LCP is a touch slower.

Because the long tail of performance is important, let's look at the 95th percentile of FCP and LCP performance:

The 95th percentile of RUM data is a great place to assess the slowest experiences. In this case, we see that the streaming Service Worker confers a 40% and 51% improvement in FCP and LCP, respectively, over no Service Worker at all. Compared to the "standard" Service Worker, we see a reduction in FCP and LCP of 19% and 43%, respectively. If these results seem a bit squirrely compared to synthetic metrics, remember: that's RUM data for you! You never know who's going to visit your website on which device on what network.
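The article doesn't say how these figures were computed. Purely as an illustration, a median, a 95th percentile, and a percentage improvement over a baseline could be derived from raw RUM samples like this. The nearest-rank percentile method is an assumption of this sketch, and nothing here uses Weekly Timber's data.

```javascript
// Nearest-rank percentile: the smallest sample such that at least p% of
// samples are less than or equal to it. `samples` are timings in ms.
function percentile(samples, p) {
  if (samples.length === 0) {
    throw new RangeError("percentile() needs at least one sample");
  }
  const sorted = [...samples].sort((a, b) => a - b);
  const rank = Math.ceil((p / 100) * sorted.length);
  return sorted[Math.max(rank, 1) - 1];
}

// Relative improvement of `candidate` over `baseline`, as a percentage.
// Lower timings are better, so a positive result means "faster".
function improvement(baseline, candidate) {
  return (100 * (baseline - candidate)) / baseline;
}
```

Comparing percentile(streamingFcp, 95) with percentile(noWorkerFcp, 95) through improvement() yields the kind of long-tail comparison described above.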

While both FCP and LCP are boosted by the myriad benefits of streaming, navigation preload (in Chrome's case), and sending less markup by stitching together partials from both CacheStorage and the network, FCP is the clear winner. Perceptually speaking, the benefit is pronounced, as this video would suggest:

Now ask yourself this: If this is the kind of improvement we can expect on such a small and simple website, what might we expect on a website with larger header and footer markup payloads?

Caveats and conclusions

Are there trade-offs with this on the development side? Oh yeah.

As Philip Walton has noted, a cached header partial means the document title must be updated in JavaScript on each navigation by changing the value of document.title. It also means you'll need to update the navigation state in JavaScript to reflect the current page, if that's something you do on your website. Note that this shouldn't cause indexing issues, as Googlebot crawls pages with an unprimed cache.
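A minimal sketch of those two fix-ups follows. It assumes each content partial marks its title with a hypothetical data-page-title attribute and that the site navigation lives in a nav element; the document is passed in so the function can also run against a stub.

```javascript
// Runs in the page (not the Service Worker) after the content partial is parsed.
function syncPageState(doc, pathname) {
  // Hypothetical convention: e.g. <h1 data-page-title="About us">.
  const titleSource = doc.querySelector("[data-page-title]");
  if (titleSource) {
    doc.title = titleSource.getAttribute("data-page-title");
  }
  // Mark the nav link for the current page; clear the marker everywhere else.
  for (const link of doc.querySelectorAll("nav a[href]")) {
    if (link.pathname === pathname) {
      link.setAttribute("aria-current", "page");
    } else {
      link.removeAttribute("aria-current");
    }
  }
}
```

In the page, something like syncPageState(document, location.pathname) would run once the content partial has been parsed, for example on DOMContentLoaded.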

There may be some challenges on sites with authentication. For example, if your site's header displays the current authenticated user on log in, you may need to update the header partial markup provided by CacheStorage in JavaScript on each navigation to reflect who is authenticated. You may be able to do this by storing basic user data in localStorage and updating the UI from there.
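Here's a rough sketch of that localStorage idea. The currentUser key and the data-user-name slot in the header partial are hypothetical, and because localStorage isn't available inside a Service Worker, this code runs in the page rather than in the worker.

```javascript
// Hypothetical storage key and header slot.
const USER_KEY = "currentUser";

// Call after a successful log in, and with null on log out.
function rememberUser(storage, user) {
  if (user) {
    // Store only what the header displays, never credentials or tokens.
    storage.setItem(USER_KEY, JSON.stringify({ name: user.name }));
  } else {
    storage.removeItem(USER_KEY);
  }
}

// Call on each navigation to personalize the cached, generic header.
function applyUserToHeader(storage, doc) {
  const slot = doc.querySelector("[data-user-name]");
  if (!slot) {
    return;
  }
  let user = null;
  try {
    user = JSON.parse(storage.getItem(USER_KEY));
  } catch {
    user = null; // Corrupt data: fall back to the logged-out header.
  }
  slot.textContent = user && user.name ? user.name : "";
}
```

In the browser, these would be called as rememberUser(localStorage, user) and applyUserToHeader(localStorage, document). The server should remain the authority on who is actually logged in; this only keeps the cached header from looking stale.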

There are certainly other challenges, but it'll be up to you to weigh the user-facing benefits against the development costs. In my opinion, this approach has broad applicability in applications such as blogs, marketing websites, news websites, ecommerce, and other typical use cases.

All in all, though, it's akin to the performance improvements and efficiency gains you'd get from an SPA. The difference is that you're not replacing time-tested navigation mechanisms and grappling with all the messiness that entails, but enhancing them. That's the part I think is really important to consider in a world where client-side routing is all the rage.

"What about Workbox?" you might ask, and you'd be right to. Workbox simplifies a lot when it comes to using the Service Worker API, and you're not wrong to reach for it. Personally, I prefer to work as close to the metal as I can so I can gain a better understanding of what lies beneath abstractions like Workbox. Even so, Service Worker is hard. Use Workbox if it suits you. As far as frameworks go, its abstraction cost is very low.

Regardless of this approach, I think there's incredible utility and power in using the Service Worker API to reduce the amount of markup you send. It benefits my client and all the people who use their website. Because of Service Worker and the innovation around its use, my client's website is faster in the far-flung parts of Wisconsin. That's something I feel good about.

Special thanks to Jake Archibald for his valuable editorial advice, which, to put it mildly, considerably improved the quality of this article.