Machine learning and AI developers are eager to get their hands on PyTorch 2.0, which was unveiled in late 2022 and is due to become available this month. Among the features awaiting ML developers are a compiler and new optimizations for CPUs.

PyTorch is a popular machine learning library developed by Facebook's AI Research lab (FAIR) and released as open source in 2016. The Python-based library, which was built atop the Torch scientific computing framework, is used to build and train neural networks, such as those behind large language models (LLMs) like GPT-4 and computer vision applications.

The first experimental release of PyTorch 2.0 was unveiled in December by the PyTorch Foundation, which was set up under the Linux Foundation just three months earlier. Now the PyTorch Foundation is gearing up to ship the first stable release of PyTorch 2.0 this month.

Among the biggest improvements in PyTorch 2.0 is torch.compile. According to the PyTorch Foundation, the new compiler is designed to be much faster than the on-the-fly code execution offered by the default "eager mode" in PyTorch 1.0.

The new compiler brings a number of technologies into the library, including TorchDynamo, AOTAutograd, PrimTorch and TorchInductor. All of these were developed in Python, as opposed to C++ (which Python interoperates with). The launch of 2.0 "starts the move" back to Python from C++, the PyTorch Foundation says, adding "this is a substantial new direction for PyTorch."
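One way to see where these pieces fit: TorchDynamo captures the PyTorch operations in a Python function as an FX graph and hands that graph to a backend, which is TorchInductor by default. The minimal sketch below, assuming PyTorch 2.x, passes a custom callable as the `backend` argument of `torch.compile` so the captured graph can be inspected instead of compiled. The names `inspect_backend` and `f` are hypothetical, chosen only for illustration; this backend skips AOTAutograd and TorchInductor entirely.

```python
import torch

# Holds every FX graph that TorchDynamo hands to our backend
captured_graphs = []

def inspect_backend(gm: torch.fx.GraphModule, example_inputs):
    # TorchDynamo calls the backend once per captured graph region
    captured_graphs.append(gm)
    print(gm.graph)  # show the captured operations
    # Returning the graph's forward runs it as-is, with no optimization
    return gm.forward

def f(x, y):
    a = torch.sin(x)
    b = torch.cos(y)
    return a + b

compiled_f = torch.compile(f, backend=inspect_backend)

x, y = torch.randn(8), torch.randn(8)
out = compiled_f(x, y)  # first call triggers graph capture
```

Because this function has no data-dependent Python control flow, TorchDynamo can capture it as a single graph; a real backend would transform that graph into faster code rather than returning it unchanged.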

"From day one, we knew the performance limits of eager execution," the PyTorch Foundation writes. "In July 2017, we started our first research project into developing a Compiler for PyTorch. The compiler needed to make a PyTorch program fast, but not at the cost of the PyTorch experience. Our key criteria was to preserve certain kinds of flexibility–support for dynamic shapes and dynamic programs which researchers use in various stages of exploration."

The PyTorch Foundation expects users to start in the non-compiled "eager mode," which executes code dynamically on the fly and is still available in 2.0. But it expects developers to move quickly to the compiled mode using torch.compile, which the foundation says can be done by adding a single line of code.

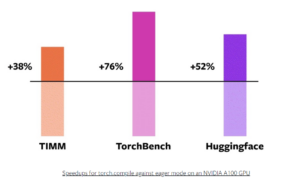

Users can expect to see a 43% speedup in training with the compiled mode, according to the PyTorch Foundation. This figure comes from benchmark tests that the PyTorch Foundation ran using PyTorch 2.0 on an Nvidia A100 GPU against 163 open source models drawn from HuggingFace Transformers, TIMM, and TorchBench.

According to the PyTorch Foundation, the new compiler ran 21% faster on average when using Float32 precision and 51% faster when using Automatic Mixed Precision (AMP). The new torch.compile mode worked on 93% of the models tested, the foundation said.
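The difference between the two precision settings can be illustrated with PyTorch's `torch.autocast` context manager, which implements AMP: parameters stay in float32 while eligible operations such as linear layers run in a lower-precision dtype. The benchmarks above ran on an A100 GPU, where AMP typically uses `device_type="cuda"` with float16; this sketch assumes a CPU with bfloat16 instead so that it runs without a GPU, and the layer sizes are arbitrary. It shows what AMP mode means, not how the foundation's benchmark was configured.

```python
import torch
import torch.nn as nn

model = nn.Linear(256, 256)   # parameters are float32
x = torch.randn(16, 256)      # inputs are float32

# Default Float32 precision: every op computes in float32
out_fp32 = model(x)

# Automatic Mixed Precision: autocast runs eligible ops (e.g. linear)
# in bfloat16 while leaving the stored parameters in float32
with torch.autocast(device_type="cpu", dtype=torch.bfloat16):
    out_amp = model(x)

print(out_fp32.dtype, out_amp.dtype, model.weight.dtype)
```

A compiled model can be called inside the same `torch.autocast` block; the lower-precision math is a large part of why the AMP figures are higher than the Float32 ones.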

"In the roadmap of PyTorch 2.x we hope to push the compiled mode further and further in terms of performance and scalability. Some of this work is in-flight," the PyTorch Foundation said. "Some of this work has not started yet. Some of this work is what we hope to see, but don't have the bandwidth to do ourselves."

One of the companies helping to develop PyTorch 2.0 is Intel. The chipmaker contributed to various parts of the release, including TorchInductor, GNN, INT8 inference optimization, and the oneDNN Graph API.

Intel's Susan Kahler, who works on AI/ML products and solutions, described the contributions to the new compiler in a blog post.

"The TorchInductor CPU backend is sped up by leveraging the technologies from the Intel Extension for PyTorch for Conv/GEMM ops with post-op fusion and weight prepacking, and PyTorch ATen CPU kernels for memory-bound ops with explicit vectorization on top of OpenMP-based thread parallelization," she wrote.

PyTorch and Google's TensorFlow are the two most popular deep learning frameworks. Thousands of organizations around the world are developing deep learning applications using PyTorch, and its use is growing.

The launch of PyTorch 2.0 will help accelerate development of deep learning and AI applications, says Luca Antiga, the CTO of Lightning AI and one of the primary maintainers of PyTorch Lightning.

"PyTorch 2.0 embodies the future of deep learning frameworks," Antiga says. "The possibility to capture a PyTorch program with effectively no user intervention and get massive on-device speedups and program manipulation out of the box unlocks a whole new dimension for AI developers."
