Since the launch of Databricks on Google Cloud in early 2021, Databricks and Google Cloud have been partnering to further integrate the Databricks platform into the cloud ecosystem and its native services. Databricks is built on or tightly integrated with many Google Cloud native services today, including Cloud Storage, Google Kubernetes Engine, and BigQuery. Databricks and Google Cloud are excited to announce an MLflow and Vertex AI deployment plugin to accelerate the model development lifecycle.

Why is MLOps difficult today?

The standard DevOps practices adopted by software companies that allow for rapid iteration and experimentation often do not translate well to data scientists. These practices include both human and technological concepts such as workflow management, source control, artifact management, and CI/CD. Given the added complexity of machine learning (model tracking and model drift), MLOps is difficult to put into practice today, and a good MLOps process needs the right tooling.

Today’s machine learning (ML) ecosystem includes a diverse set of tools that specialize in and serve a portion of the ML lifecycle, but not many provide a full end-to-end solution. This is why Databricks teamed up with Google Cloud to build a seamless integration that leverages the best of MLflow and Vertex AI, allowing data scientists to safely train their models, machine learning engineers to productionalize and serve those models, and model consumers to get their predictions for business needs.

MLflow is an open source library developed by Databricks to manage the full ML lifecycle, including experimentation, reproducibility, deployment, and a central model registry. Vertex AI is Google Cloud’s unified artificial intelligence platform that offers an end-to-end ML solution, from model training to model deployment. Thanks to this new plugin, data scientists and machine learning engineers can train their models on Databricks’ Managed MLflow, using the power of Apache Spark™ and open source Delta Lake (as well as its packaged ML Runtime, AutoML, and Model Registry), and then deploy them into production on Vertex AI for real-time model serving using pre-built Prediction images, while ensuring model quality and freshness with model monitoring tools.

Note: The plugin has also been tested and works well with open source MLflow.

Technical Demo

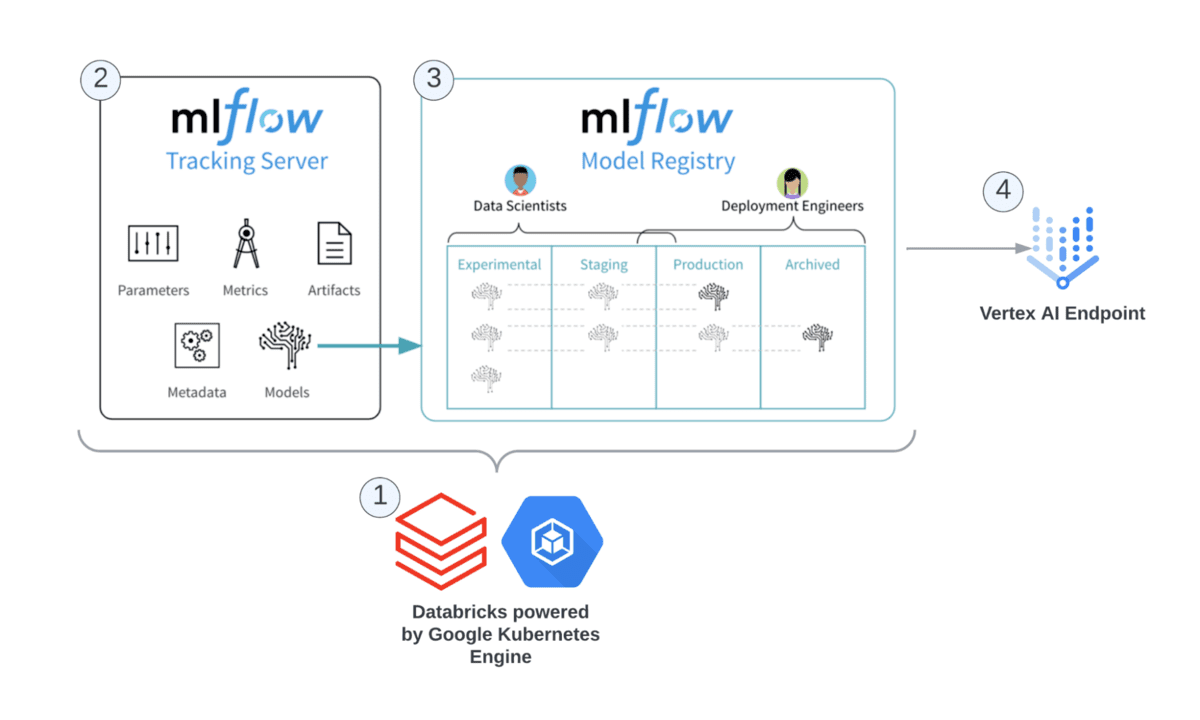

Let’s show you how to build an end-to-end MLOps solution using MLflow and Vertex AI. We will train a simple scikit-learn diabetes model with MLflow, save it into the Model Registry, and deploy it to a Vertex AI endpoint.

Before we begin, it’s important to understand what goes on behind the scenes when using this integration. Looking at the reference architecture, you can see the Databricks components and Google Cloud services used for this integration:

Note: The following steps assume that you have a Databricks Google Cloud workspace deployed with the right permissions to Vertex AI and Cloud Build set up on Google Cloud.

Step 1: Create a Service Account with the right permissions to access Vertex AI resources and attach it to your cluster with MLR 10.x.

Step 2: Download the google-cloud-mlflow plugin from PyPI onto your cluster. You can do this by installing it directly onto your cluster as a library or by running the following pip command in a notebook attached to your cluster:

```
%pip install google-cloud-mlflow
```

Step 3: In your notebook, import the following packages:

```python
import mlflow
from mlflow.deployments import get_deploy_client
from sklearn.model_selection import train_test_split
from sklearn.datasets import load_diabetes
from sklearn.ensemble import RandomForestRegressor
import pandas as pd
import numpy as np
```

Then train, test, and autolog a scikit-learn experiment, including the hyperparameters used and the test results, with MLflow.

```python
# Load the dataset and split it into training and test sets.
db = load_diabetes()
X = db.data
y = db.target
X_train, X_test, y_train, y_test = train_test_split(X, y)

# mlflow.sklearn.autolog() requires mlflow 1.11.0 or above.
mlflow.sklearn.autolog()

# With autolog() enabled, all model parameters, a model score, and the fitted model are automatically logged.
with mlflow.start_run() as run:
    # Set the model parameters.
    n_estimators = 100
    max_depth = 6
    max_features = 3

    # Create and train the model.
    rf = RandomForestRegressor(n_estimators=n_estimators, max_depth=max_depth, max_features=max_features)
    rf.fit(X_train, y_train)

    # Use the model to make predictions on the test dataset.
    predictions = rf.predict(X_test)

# The with block already ends the run, so this call is a harmless no-op.
mlflow.end_run()
```

Step 4: Log the model into the MLflow Registry, which saves model artifacts into Google Cloud Storage.

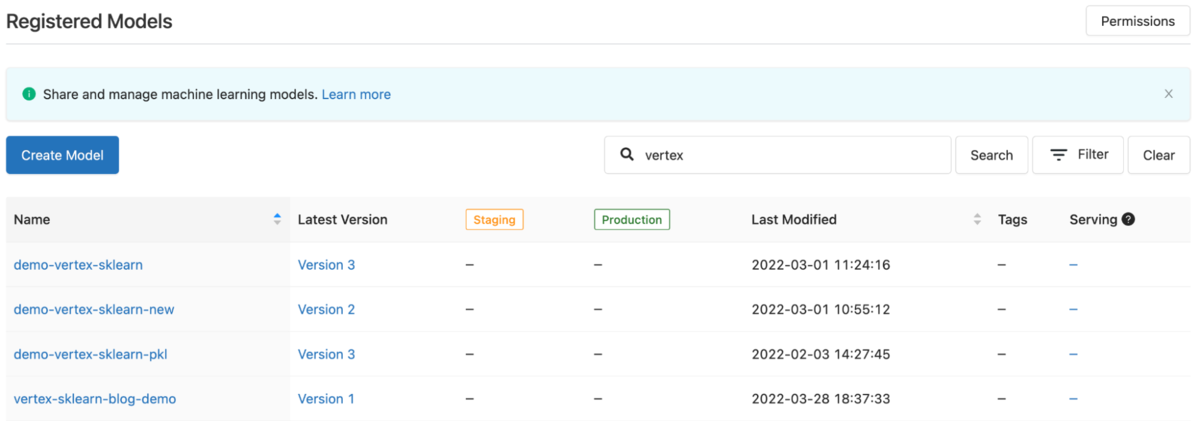

```python
model_name = "vertex-sklearn-blog-demo"
mlflow.sklearn.log_model(rf, model_name, registered_model_name=model_name)
```

Registered Models in the MLflow Model Registry

Step 5: Programmatically get the latest version of the model using the MLflow Tracking Client. In a real-world scenario, you will likely transition the model from staging to production in your CI/CD process once the model has met production standards.

```python
client = mlflow.tracking.MlflowClient()

# Find every registered version of the model and keep the highest version number.
model_version_infos = client.search_model_versions(f"name='{model_name}'")
model_version = max([int(model_version_info.version) for model_version_info in model_version_infos])
model_uri = f"models:/{model_name}/{model_version}"

# model_uri should be models:/vertex-sklearn-blog-demo/1
```

Step 6: Instantiate the Vertex AI client and deploy to an endpoint using just three lines of code.

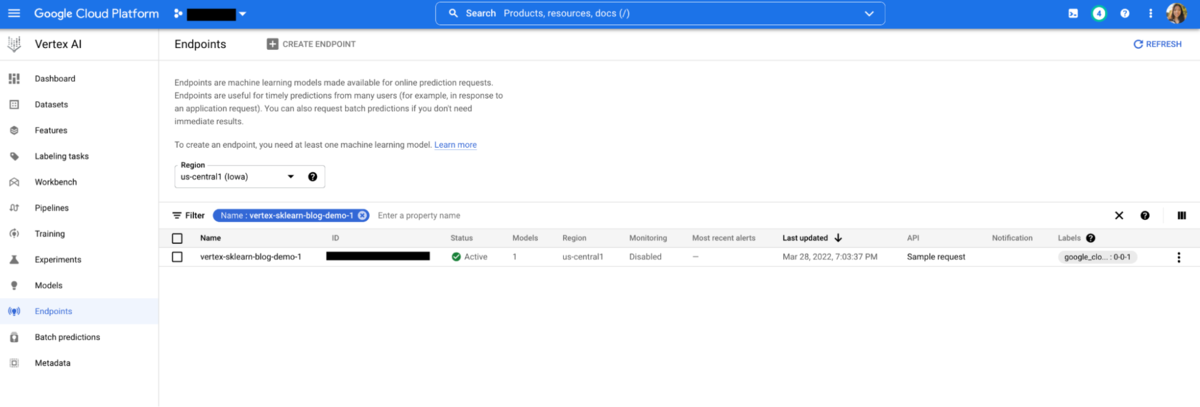

```python
# Really simple Vertex client instantiation
vtx_client = mlflow.deployments.get_deploy_client("google_cloud")
deploy_name = f"{model_name}-{model_version}"

# Deploy to Vertex AI using three lines of code! Note: If using python > 3.7, this may take up to 20 minutes.
deployment = vtx_client.create_deployment(
    name=deploy_name,
    model_uri=model_uri)
```

Step 7: Check the UI in Vertex AI and see the published model.
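
To make the naming used in Steps 5 and 6 concrete, here is a minimal sketch in plain Python that reproduces the version selection and the construction of the model URI and deployment name. It does not talk to a registry: `VersionInfo` and `latest_version` are hypothetical stand-ins introduced only for illustration, and the version list is made up. It assumes, as the `int()` conversion in Step 5 suggests, that version numbers arrive as strings.

```python
from collections import namedtuple

# Hypothetical stand-in for the records returned by the registry search in Step 5.
# Assumption: the version is carried as a string, hence the int() conversion below.
VersionInfo = namedtuple("VersionInfo", ["name", "version"])


def latest_version(version_infos):
    """Return the highest version number, comparing versions as integers."""
    return max(int(info.version) for info in version_infos)


model_name = "vertex-sklearn-blog-demo"

# Made-up version list, chosen to include a two-digit version.
infos = [VersionInfo(model_name, v) for v in ("1", "2", "9", "10")]

model_version = latest_version(infos)
model_uri = f"models:/{model_name}/{model_version}"
deploy_name = f"{model_name}-{model_version}"

# Comparing the raw strings instead would pick "9", since "9" sorts after "10".
naive_latest = max(info.version for info in infos)

print(model_uri)     # models:/vertex-sklearn-blog-demo/10
print(deploy_name)   # vertex-sklearn-blog-demo-10
print(naive_latest)  # 9
```

Converting to integers before taking the maximum is what keeps the selection correct once a model has ten or more versions.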

Vertex AI in the Google Cloud Console

Step 8: Invoke the endpoint using the plugin within the notebook for batch inference. In a real production scenario, you will likely invoke the endpoint from a web service or application for real-time inference.

```python
# Use the .predict() method from the same plugin
predictions = vtx_client.predict(deploy_name, X_test)
```

Your predictions should return the following Prediction class, which you can then parse into a pandas dataframe and use for your business needs:

```
Prediction(predictions=[108.8213062661298, 121.8157069007118, 196.7929187443363, 159.9036896543356, 276.4400040206476, 100.4831327904369, 98.03313768162721, 170.2935904379434, 123.854209126032, 200.582723610864, 243.8882952682826, 89.56782205639794, 225.6276360204631, 183.9313416074667, 182.1405547852122, 179.3878755228988, 149.3434367420051, ...
```

Conclusion

As you can see, MLOps doesn’t have to be difficult. Using the end-to-end MLflow to Vertex AI solution, data teams can go from development to production in a matter of days rather than weeks, months, or sometimes never! For a live demo of the end-to-end workflow, check out the on-demand session “Accelerating MLOps Using Databricks and Vertex AI on Google Cloud” from DAIS 2022.

To start your ML journey, import the demo notebook into your workspace today. First-time customers can take advantage of partnership credits and start a free Databricks on Google Cloud trial. For any questions, please reach out to us using this contact form.