Last Updated on November 23, 2022

Structuring the data pipeline so that it connects effortlessly to your deep learning model is an important aspect of any deep learning-based system. PyTorch packs everything you need to do just that.

While we used simple datasets in the previous tutorial, real-world scenarios call for larger datasets in order to fully exploit the potential of deep learning and neural networks.

In this tutorial, you’ll learn how to build custom datasets in PyTorch. While the focus here is only on image data, the concepts learned in this session can be applied to any form of dataset, such as text or tabular datasets. Here you’ll learn:

- How to work with pre-loaded image datasets in PyTorch.

- How to apply torchvision transforms on preloaded datasets.

- How to build a custom image dataset class in PyTorch and apply various transforms on it.

Let’s get began.

Loading and Providing Datasets in PyTorch

Image by Uriel SC. Some rights reserved.

This tutorial is in three parts; they are:

- Preloaded Datasets in PyTorch

- Applying Torchvision Transforms on Image Datasets

- Building Custom Image Datasets

A variety of preloaded datasets such as CIFAR-10, MNIST, and Fashion-MNIST are available in the PyTorch domain library. You can import them from torchvision and run your experiments. Additionally, you can benchmark your model using these datasets.

We’ll start by importing the Fashion-MNIST dataset from torchvision. The Fashion-MNIST dataset includes 70,000 grayscale images of 28×28 pixels, divided into ten classes, with 7,000 images per class. There are 60,000 images for training and 10,000 for testing.

Let’s begin by importing a few libraries we’ll use in this tutorial.

```python
import torch
from torch.utils.data import Dataset
from torchvision import datasets
import torchvision.transforms as transforms
import numpy as np
import matplotlib.pyplot as plt
torch.manual_seed(42)
```

Let’s also define a helper function to display the sample elements in the dataset using matplotlib.

```python
def imshow(sample_element, shape = (28, 28)):
    # sample_element is an (image tensor, label) pair
    plt.imshow(sample_element[0].numpy().reshape(shape), cmap='gray')
    plt.title('Label = ' + str(sample_element[1]))
    plt.show()
```

Now, we’ll load the Fashion-MNIST dataset using the FashionMNIST() constructor from torchvision.datasets. It takes some arguments:

- root: specifies the path where we are going to store our data.

- train: indicates whether it’s train or test data. We’ll set it to False as we don’t yet need it for training.

- download: set to True, meaning it will download the data from the internet.

- transform: allows us to use any of the available transforms that we need to apply on our dataset.

```python
dataset = datasets.FashionMNIST(
    root='./data',
    train=False,
    download=True,
    transform=transforms.ToTensor()
)
```

Let’s check the class names along with their corresponding labels in the Fashion-MNIST dataset.

```python
classes = dataset.classes
print(classes)
```

It prints

```
['T-shirt/top', 'Trouser', 'Pullover', 'Dress', 'Coat', 'Sandal', 'Shirt', 'Sneaker', 'Bag', 'Ankle boot']
```

Similarly, for class labels:

```python
print(dataset.class_to_idx)
```

It prints

```
{'T-shirt/top': 0, 'Trouser': 1, 'Pullover': 2, 'Dress': 3, 'Coat': 4, 'Sandal': 5, 'Shirt': 6, 'Sneaker': 7, 'Bag': 8, 'Ankle boot': 9}
```
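Since each sample carries only the integer label, it can be handy to go the other way and look up the class name for a given label. One way to do that, sketched here in plain Python, is to invert the mapping printed above. The dictionary is copied by hand from that output, and idx_to_class is a hypothetical name, not a torchvision attribute.

```python
# Mapping copied from the dataset.class_to_idx output shown above
class_to_idx = {
    'T-shirt/top': 0, 'Trouser': 1, 'Pullover': 2, 'Dress': 3, 'Coat': 4,
    'Sandal': 5, 'Shirt': 6, 'Sneaker': 7, 'Bag': 8, 'Ankle boot': 9,
}

# Invert it so an integer label can be turned back into a readable name
# (idx_to_class is an illustrative name, not part of torchvision)
idx_to_class = {idx: name for name, idx in class_to_idx.items()}

print(idx_to_class[9])   # Ankle boot
```

With the loaded dataset, indexing the list as dataset.classes[label] gives the same lookup, since that list is ordered by label.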

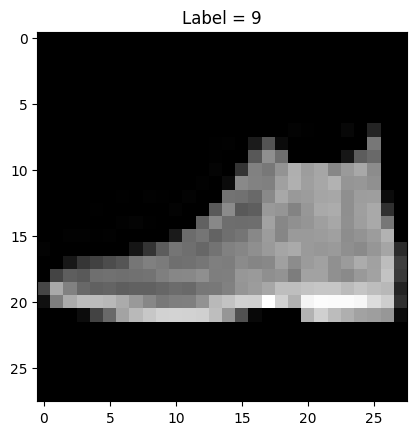

Here is how we can visualize the first element of the dataset with its corresponding label using the helper function defined above, by calling imshow(dataset[0]).

First element of the Fashion MNIST dataset

In many cases, we’ll have to apply several transforms before feeding the images to neural networks. For instance, we’ll often need to RandomCrop the images for data augmentation.

As you can see below, PyTorch enables us to choose from a variety of transforms.

Calling print(dir(transforms)) shows all available transform functions:

```
['AugMix', 'AutoAugment', 'AutoAugmentPolicy', 'CenterCrop', 'ColorJitter', 'Compose', 'ConvertImageDtype', 'ElasticTransform', 'FiveCrop', 'GaussianBlur', 'Grayscale', 'InterpolationMode', 'Lambda', 'LinearTransformation', 'Normalize', 'PILToTensor', 'Pad', 'RandAugment', 'RandomAdjustSharpness', 'RandomAffine', 'RandomApply', 'RandomAutocontrast', 'RandomChoice', 'RandomCrop', 'RandomEqualize', 'RandomErasing', 'RandomGrayscale', 'RandomHorizontalFlip', 'RandomInvert', 'RandomOrder', 'RandomPerspective', 'RandomPosterize', 'RandomResizedCrop', 'RandomRotation', 'RandomSolarize', 'RandomVerticalFlip', 'Resize', 'TenCrop', 'ToPILImage', 'ToTensor', 'TrivialAugmentWide', ...]
```

For example, let’s apply the RandomCrop transform to the Fashion-MNIST images and convert them to a tensor. We can use transforms.Compose to combine multiple transforms, as we learned in the previous tutorial.

```python
# take a random 16x16 crop, then convert the PIL image to a tensor
randomcrop_totensor_transform = transforms.Compose([transforms.RandomCrop(16),
                                                    transforms.ToTensor()])
dataset = datasets.FashionMNIST(root='./data', train=False, download=True, transform=randomcrop_totensor_transform)
print("shape of the first data sample: ", dataset[0][0].shape)
```

This prints

```
shape of the first data sample: torch.Size([1, 16, 16])
```

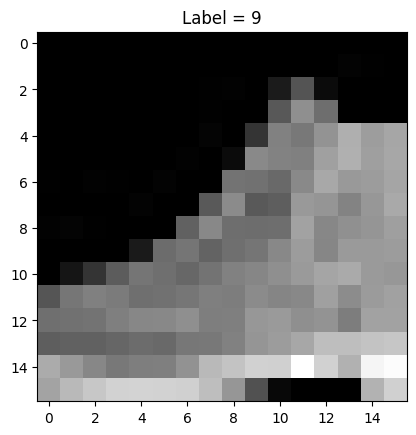

As you can see, the image has now been cropped to $16\times 16$ pixels. Now, let’s plot the first element of the dataset to see how it has been randomly cropped.

```python
imshow(dataset[0], shape=(16, 16))
```

This shows the following image:

Cropped image from Fashion MNIST dataset
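To make concrete what a 16×16 crop of a 28×28 image involves, here is a dependency-free sketch of the index arithmetic behind a centered and a random crop. It is an illustration only, not torchvision’s implementation: the helper names center_crop_box, random_crop_box, and crop are hypothetical, and the image is a plain nested list rather than a tensor.

```python
import random

def center_crop_box(height, width, size):
    """Top-left corner of a size x size window centered in the image."""
    top = (height - size) // 2
    left = (width - size) // 2
    return top, left

def random_crop_box(height, width, size, rng):
    """Top-left corner of a size x size window at a random valid position."""
    top = rng.randint(0, height - size)    # randint is inclusive on both ends
    left = rng.randint(0, width - size)
    return top, left

def crop(image, top, left, size):
    """Cut the window out of an image stored as a list of rows."""
    return [row[left:left + size] for row in image[top:top + size]]

# A stand-in 28x28 "image" whose pixel value encodes its (row, column) position
image = [[r * 28 + c for c in range(28)] for r in range(28)]

top, left = center_crop_box(28, 28, 16)
centered = crop(image, top, left, 16)
print(top, left, len(centered), len(centered[0]))   # 6 6 16 16

rng = random.Random(42)                    # seeded so the sketch is repeatable
top, left = random_crop_box(28, 28, 16, rng)
patch = crop(image, top, left, 16)
print(len(patch), len(patch[0]))           # 16 16
```

The centered window always starts at row 6, column 6, while the random window can start anywhere from 0 to 12 in each direction, which is what makes random cropping useful for data augmentation.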

Putting everything together, the complete code is as follows:

```python
import torch
from torch.utils.data import Dataset
from torchvision import datasets
import torchvision.transforms as transforms
import numpy as np
import matplotlib.pyplot as plt
torch.manual_seed(42)

def imshow(sample_element, shape = (28, 28)):
    plt.imshow(sample_element[0].numpy().reshape(shape), cmap='gray')
    plt.title('Label = ' + str(sample_element[1]))
    plt.show()

dataset = datasets.FashionMNIST(
    root='./data',
    train=False,
    download=True,
    transform=transforms.ToTensor()
)

classes = dataset.classes
print(classes)
print(dataset.class_to_idx)

imshow(dataset[0])

# take a random 16x16 crop, then convert the PIL image to a tensor
randomcrop_totensor_transform = transforms.Compose([transforms.RandomCrop(16),
                                                    transforms.ToTensor()])
dataset = datasets.FashionMNIST(
    root='./data',
    train=False,
    download=True,
    transform=randomcrop_totensor_transform
)

print("shape of the first data sample: ", dataset[0][0].shape)
imshow(dataset[0], shape=(16, 16))
```

Until now we’ve been discussing prebuilt datasets in PyTorch, but what if we have to build a custom dataset class for our image dataset? While in the previous tutorial we only had a simple overview of the components of the Dataset class, here we’ll build a custom image dataset class from scratch.

First, in the constructor we define the parameters of the class. The __init__ function in the class instantiates the Dataset object. The directory where images and annotations are stored is initialized along with the transforms, if we want to apply them on our dataset later. Here we assume we have some images in a directory structure like the following:

```
attface/
|-- imagedata.csv
|-- s1/
|   |-- 1.png
|   |-- 2.png
|   |-- 3.png
|   ...
|-- s2/
|   |-- 1.png
|   |-- 2.png
|   |-- 3.png
|   ...
...
```

and the annotation is a CSV file like the following, located under the root directory of the images (i.e., “attface” above):

```
s1/1.png,1
s1/2.png,1
s1/3.png,1
...
s12/1.png,12
s12/2.png,12
s12/3.png,12
```

where the first column of the CSV data is the path to the image and the second column is the label.
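If such an annotation file does not exist yet, it can be generated from the directory layout. The sketch below is one way to do it with the standard library only. It assumes, based on the sample rows above, that each subfolder is named s<N> and that N is the label. The function name build_annotations is hypothetical, and the demo runs on empty placeholder files in a temporary directory rather than on real images.

```python
import csv
import os
import tempfile

def build_annotations(root, csv_name="imagedata.csv"):
    """Write one 'relative/path.png,label' row per image found under root."""
    rows = []
    for folder in sorted(os.listdir(root)):
        folder_path = os.path.join(root, folder)
        # only consider subfolders named like "s1", "s2", ... (assumed naming)
        if not (os.path.isdir(folder_path) and folder[:1] == "s" and folder[1:].isdigit()):
            continue
        label = int(folder[1:])
        for filename in sorted(os.listdir(folder_path)):
            if filename.endswith(".png"):
                # forward slashes, matching the sample rows above
                rows.append((folder + "/" + filename, label))
    with open(os.path.join(root, csv_name), "w", newline="") as f:
        csv.writer(f).writerows(rows)      # no header row is written
    return rows

# Demo on a throwaway directory tree with empty placeholder files
with tempfile.TemporaryDirectory() as root:
    for folder in ("s1", "s12"):
        os.makedirs(os.path.join(root, folder))
        for name in ("1.png", "2.png"):
            open(os.path.join(root, folder, name), "w").close()
    rows = build_annotations(root)
    with open(os.path.join(root, "imagedata.csv")) as f:
        csv_text = f.read()

print(rows)
```

Note that the file is written without a header row, so whatever reads it back has to treat the first line as data.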

In the class, we also define the __len__ function, which returns the total number of samples in our image dataset, while the __getitem__ method reads and returns a data element from the dataset at a given index.

```python
import os
import pandas as pd
import numpy as np
from torch.utils.data import Dataset
from torchvision.io import read_image

# custom dataset class for our images
class CustomDatasetForImages(Dataset):
    # defining constructor
    def __init__(self, annotations, directory, transform=None):
        # directory containing the images
        self.directory = directory
        annotations_file_dir = os.path.join(self.directory, annotations)
        # loading the csv with info about images
        # (header=None because the annotation file shown above has no header row)
        self.labels = pd.read_csv(annotations_file_dir, header=None)
        # transform to be applied on images
        self.transform = transform

        # Number of images in dataset
        self.len = self.labels.shape[0]

    # getting the length
    def __len__(self):
        return len(self.labels)

    # getting the data items
    def __getitem__(self, idx):
        # defining the image path
        image_path = os.path.join(self.directory, self.labels.iloc[idx, 0])
        # reading the images
        image = read_image(image_path)
        # corresponding class labels of the images
        label = self.labels.iloc[idx, 1]

        # apply the transform if not set to None
        if self.transform:
            image = self.transform(image)

        # returning the image and label
        return image, label
```

Now, we can create our dataset object and apply the transforms on it. We assume the image data are located under the directory named “attface” and the annotation CSV file is at “attface/imagedata.csv”. Then the dataset is created as follows:

```python
directory = "attface"
annotations = "imagedata.csv"
custom_dataset = CustomDatasetForImages(annotations=annotations, directory=directory)
```

Optionally, you can add the transform function to the dataset as well:

```python
randomcrop_totensor_transform = transforms.RandomCrop(16)
dataset = CustomDatasetForImages(annotations=annotations, directory=directory, transform=randomcrop_totensor_transform)
```

You can use this custom image dataset class with any of your datasets stored in your directory and apply the transforms according to your requirements.
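The essential contract of the class above is small: __len__ reports how many samples there are, and __getitem__ loads one sample on demand and applies the transform at that moment. The following dependency-free sketch mimics that contract with a plain Python class. ToyImageDataset and its fake loader are hypothetical stand-ins; the sketch does not use torch.utils.data.Dataset and does not read real images.

```python
class ToyImageDataset:
    """Mimics the map-style dataset contract without PyTorch (illustration only)."""

    def __init__(self, annotations, transform=None):
        # annotations: list of (relative_path, label) pairs, like rows of the CSV
        self.annotations = annotations
        self.transform = transform

    def __len__(self):
        return len(self.annotations)

    def __getitem__(self, idx):
        path, label = self.annotations[idx]
        image = "pixels of " + path        # stand-in for actually decoding the file
        if self.transform:
            image = self.transform(image)  # applied lazily, each time the item is read
        return image, label

toy = ToyImageDataset(
    [("s1/1.png", 1), ("s1/2.png", 1), ("s12/1.png", 12)],
    transform=str.upper,                   # any callable can serve as a transform
)

print(len(toy))   # 3
print(toy[2])     # ('PIXELS OF S12/1.PNG', 12)
```

Because the transform runs inside __getitem__, a random transform such as RandomCrop can yield a different result every time the same index is read.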

In this tutorial, you learned how to work with image datasets and transforms in PyTorch. Particularly, you learned:

- How to work with pre-loaded image datasets in PyTorch.

- How to apply torchvision transforms on pre-loaded datasets.

- How to build a custom image dataset class in PyTorch and apply various transforms on it.