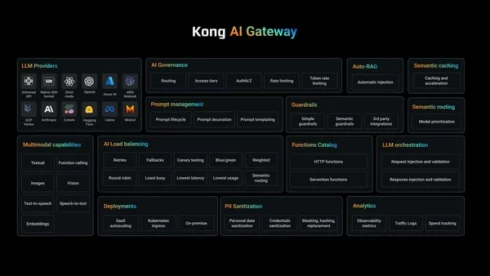

Kong has announced updates to its AI Gateway, a platform for the governance and security of LLMs and other AI assets.

Among the new features in AI Gateway 3.10 is a RAG Injector, which reduces LLM hallucinations by automatically querying the vector database and injecting relevant data into the prompt. According to the company, this ensures the LLM augments its results with known information sources.
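The general retrieval-augmented generation (RAG) pattern behind a feature like this can be sketched in a few lines. This is not Kong's implementation or API: the function `inject_context`, the in-memory `store` standing in for a vector database, and the precomputed toy embeddings are all hypothetical. In practice an embedding model would produce the vectors and a real vector database would perform the similarity search.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def inject_context(prompt, prompt_vec, store, k=2):
    """Hypothetical RAG step: rank stored documents by similarity to the
    prompt's embedding and prepend the top-k documents to the prompt."""
    # store is a list of (text, vector) pairs standing in for a vector database
    ranked = sorted(store, key=lambda item: cosine(prompt_vec, item[1]), reverse=True)
    context = "\n".join(text for text, _ in ranked[:k])
    return f"Answer using only this context:\n{context}\n\nQuestion: {prompt}"

# Toy 2-D "embeddings"; real embeddings have hundreds of dimensions
store = [
    ("Kong AI Gateway 3.10 adds a RAG Injector.", [1.0, 0.0]),
    ("Unrelated note about cooking.", [0.0, 1.0]),
    ("The PII plugin covers over 20 categories.", [0.7, 0.7]),
]
augmented = inject_context("What is new in 3.10?", [0.9, 0.1], store, k=1)
print(augmented)
```

Running the retrieval at the gateway, rather than in each application, is what lets the vector database sit behind a single enforcement point.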

Placing the vector database behind the Kong AI Gateway also improves security. It improves developer productivity as well, because developers can focus on other work instead of trying to reduce hallucinations themselves.

Another update in AI Gateway 3.10 is an automated personally identifiable information (PII) sanitization plugin that protects over 20 categories of PII across 12 different languages. It works with most major AI providers and can run at the global platform level, so developers don’t have to manually code sanitization into every application they build.

According to Kong, similar sanitization options are often limited to replacing sensitive data with a token or removing it entirely. This plugin can optionally reinsert the sanitized data into the response before it reaches the end user, so users still get the information they need without compromising privacy.
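The sanitize-then-reinsert flow described above can be illustrated with a small sketch. It does not reflect Kong's plugin internals: the two regex patterns, the placeholder token format, and the functions `sanitize` and `restore` are hypothetical, and cover only two PII categories in English. The idea is that PII is swapped for placeholder tokens before the prompt reaches the LLM, a mapping is kept at the gateway, and the original values are put back into the response.

```python
import re

# Hypothetical patterns for two PII categories; a real plugin covers many more
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "PHONE": re.compile(r"\+?\d[\d -]{7,}\d"),
}

def sanitize(text):
    """Replace detected PII with numbered placeholder tokens.
    Returns the sanitized text and a token -> original-value mapping."""
    mapping = {}
    counter = 0
    for label, pattern in PATTERNS.items():
        def repl(match):
            nonlocal counter
            counter += 1
            token = f"<{label}_{counter}>"
            mapping[token] = match.group(0)
            return token
        text = pattern.sub(repl, text)
    return text, mapping

def restore(text, mapping):
    """Reinsert the original values into text that contains placeholder tokens."""
    for token, original in mapping.items():
        text = text.replace(token, original)
    return text

prompt = "Email jane@example.com or call +1 555 123 4567 today."
safe_prompt, pii_map = sanitize(prompt)
print(safe_prompt)  # the LLM only ever sees the placeholder tokens
llm_reply = "I will write to <EMAIL_1> and call <PHONE_2>."  # stand-in for a model response
print(restore(llm_reply, pii_map))
```

Because the mapping never leaves the gateway, the model provider sees only placeholders, while the end user receives a response with the real values restored.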

“As artificial intelligence continues to evolve, organizations must adopt robust AI infrastructure to harness its full potential,” said Marco Palladino, CTO and co-founder of Kong. “With this latest version of AI Gateway, we’re equipping our customers with the tools necessary to implement Agentic AI securely and effectively, ensuring seamless integration without compromising user experience. Moreover, we’re helping solve some of the biggest challenges with LLMs, such as cutting down on hallucinations and enhancing data security and governance.”