Deep studying fashions have made spectacular progress in imaginative and prescient, language, and different modalities, notably with the rise of large-scale pre-training. Such fashions are most correct when utilized to check knowledge drawn from the identical distribution as their coaching set. Nevertheless, in observe, the info confronting fashions in real-world settings not often match the coaching distribution. As well as, the fashions will not be well-suited for functions the place predictive efficiency is simply a part of the equation. For fashions to be dependable in deployment, they need to be capable to accommodate shifts in knowledge distribution and make helpful choices in a broad array of eventualities.

In “Plex: In direction of Reliability Utilizing Pre-trained Giant Mannequin Extensions”, we current a framework for dependable deep studying as a brand new perspective a couple of mannequin’s talents; this consists of various concrete duties and datasets for stress-testing mannequin reliability. We additionally introduce Plex, a set of pre-trained massive mannequin extensions that may be utilized to many various architectures. We illustrate the efficacy of Plex within the imaginative and prescient and language domains by making use of these extensions to the present state-of-the-art Imaginative and prescient Transformer and T5 fashions, which leads to vital enchancment of their reliability. We’re additionally open-sourcing the code to encourage additional analysis into this method.

Framework for Reliability

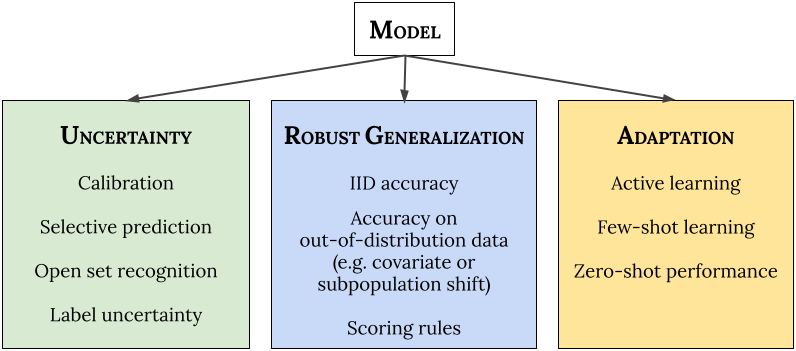

First, we discover perceive the reliability of a mannequin in novel eventualities. We posit three basic classes of necessities for dependable machine studying (ML) methods: (1) they need to precisely report uncertainty about their predictions (“know what they don’t know”); (2) they need to generalize robustly to new eventualities (distribution shift); and (3) they need to be capable to effectively adapt to new knowledge (adaptation). Importantly, a dependable mannequin ought to purpose to do nicely in all of those areas concurrently out-of-the-box, with out requiring any customization for particular person duties.

- Uncertainty displays the imperfect or unknown data that makes it troublesome for a mannequin to make correct predictions. Predictive uncertainty quantification permits a mannequin to compute optimum choices and helps practitioners acknowledge when to belief the mannequin’s predictions, thereby enabling sleek failures when the mannequin is prone to be fallacious.

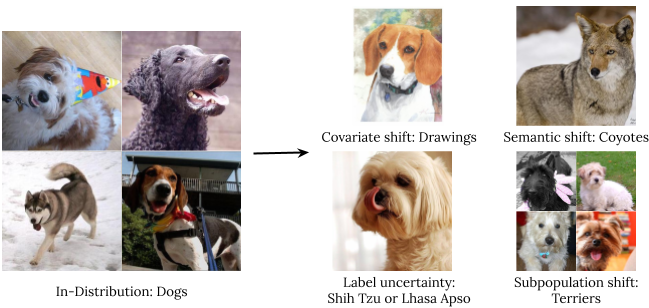

- Sturdy Generalization entails an estimate or forecast about an unseen occasion. We examine 4 varieties of out-of-distribution knowledge: covariate shift (when the enter distribution modifications between coaching and utility and the output distribution is unchanged), semantic (or class) shift, label uncertainty, and subpopulation shift.

Sorts of distribution shift utilizing an illustration of ImageNet canine. - Adaptation refers to probing the mannequin’s talents over the course of its studying course of. Benchmarks usually consider on static datasets with pre-defined train-test splits. Nevertheless, in lots of functions, we’re all in favour of fashions that may rapidly adapt to new datasets and effectively study with as few labeled examples as doable.

We apply 10 varieties of duties to seize the three reliability areas — uncertainty, strong generalization, and adaptation — and to make sure that the duties measure a various set of fascinating properties in every space. Collectively the duties comprise 40 downstream datasets throughout imaginative and prescient and pure language modalities: 14 datasets for fine-tuning (together with few-shot and lively studying–based mostly adaptation) and 26 datasets for out-of-distribution analysis.

Plex: Pre-trained Giant Mannequin Extensions for Imaginative and prescient and Language

To enhance reliability, we develop ViT-Plex and T5-Plex, constructing on massive pre-trained fashions for imaginative and prescient (ViT) and language (T5), respectively. A key function of Plex is extra environment friendly ensembling based mostly on submodels that every make a prediction that’s then aggregated. As well as, Plex swaps every structure’s linear final layer with a Gaussian course of or heteroscedastic layer to higher characterize predictive uncertainty. These concepts had been discovered to work very nicely for fashions educated from scratch on the ImageNet scale. We prepare the fashions with various sizes as much as 325 million parameters for imaginative and prescient (ViT-Plex L) and 1 billion parameters for language (T5-Plex L) and pre-training dataset sizes as much as 4 billion examples.

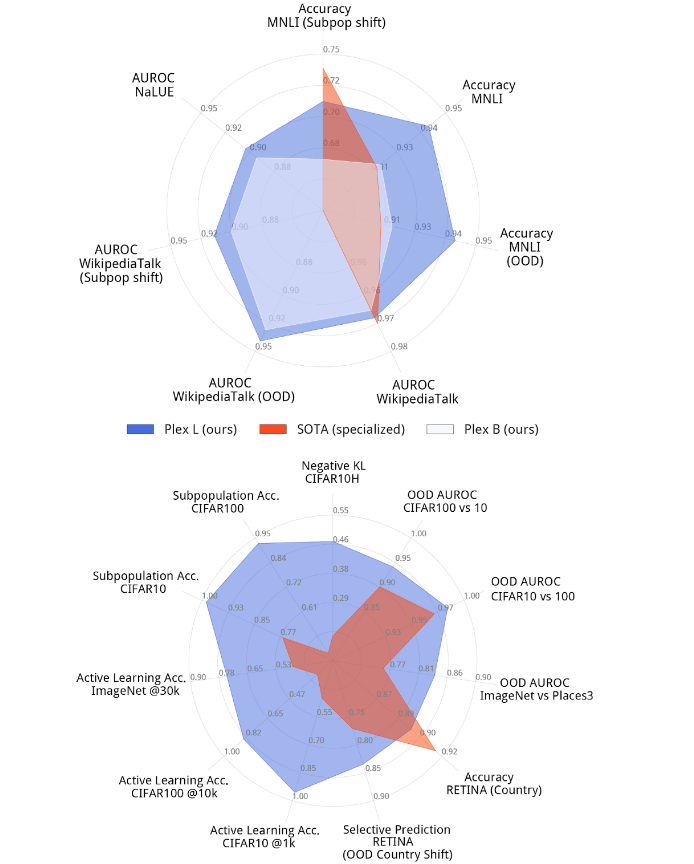

The next determine illustrates Plex’s efficiency on a choose set of duties in comparison with the prevailing state-of-the-art. The highest-performing mannequin for every process is normally a specialised mannequin that’s extremely optimized for that downside. Plex achieves new state-of-the-art on most of the 40 datasets. Importantly, Plex achieves robust efficiency throughout all duties utilizing the out-of-the-box mannequin output with out requiring any customized designing or tuning for every process.

Plex in Motion for Totally different Reliability Duties

We spotlight Plex’s reliability on choose duties beneath.

Open Set Recognition

We present Plex’s output within the case the place the mannequin should defer prediction as a result of the enter is one which the mannequin doesn’t assist. This process is called open set recognition. Right here, predictive efficiency is an element of a bigger decision-making situation the place the mannequin might abstain from ensuring predictions. Within the following determine, we present structured open set recognition: Plex returns a number of outputs and alerts the particular a part of the output about which the mannequin is unsure and is probably going out-of-distribution.

Label Uncertainty

In real-world datasets, there’s typically inherent ambiguity behind the bottom fact label for every enter. For instance, this will likely come up resulting from human rater ambiguity for a given picture. On this case, we’d just like the mannequin to seize the complete distribution of human perceptual uncertainty. We showcase Plex beneath on examples from an ImageNet variant we constructed that gives a floor fact label distribution.

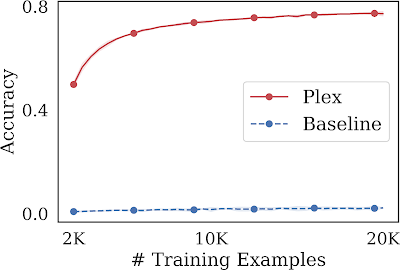

Energetic Studying

We look at a big mannequin’s capacity to not solely study over a hard and fast set of knowledge factors, but additionally take part in realizing which knowledge factors to study from within the first place. One such process is called lively studying, the place at every coaching step, the mannequin selects promising inputs amongst a pool of unlabeled knowledge factors on which to coach. This process assesses an ML mannequin’s label effectivity, the place label annotations could also be scarce, and so we want to maximize efficiency whereas minimizing the variety of labeled knowledge factors used. Plex achieves a major efficiency enchancment over the identical mannequin structure with out pre-training. As well as, even with fewer coaching examples, it additionally outperforms the state-of-the-art pre-trained methodology, BASE, which reaches 63% accuracy at 100K examples.

Study extra

Try our paper right here and an upcoming contributed speak in regards to the work on the ICML 2022 pre-training workshop on July 23, 2022. To encourage additional analysis on this course, we’re open-sourcing all code for coaching and analysis as a part of Uncertainty Baselines. We additionally present a demo that reveals use a ViT-Plex mannequin checkpoint. Layer and methodology implementations use Edward2.

Acknowledgements

We thank all of the co-authors for contributing to the undertaking and paper, together with Andreas Kirsch, Clara Huiyi Hu, Du Phan, D. Sculley, Honglin Yuan, Jasper Snoek, Jeremiah Liu, Jie Ren, Joost van Amersfoort, Karan Singhal, Kehang Han, Kelly Buchanan, Kevin Murphy, Mark Collier, Mike Dusenberry, Neil Band, Nithum Thain, Rodolphe Jenatton, Tim G. J. Rudner, Yarin Gal, Zachary Nado, Zelda Mariet, Zi Wang, and Zoubin Ghahramani. We additionally thank Anusha Ramesh, Ben Adlam, Dilip Krishnan, Ed Chi, Neil Houlsby, Rif A. Saurous, and Sharat Chikkerur for his or her useful suggestions, and Tom Small and Ajay Nainani for serving to with visualizations.