One of the most important aspects of machine learning is hyperparameter optimization, since finding the right hyperparameters for a machine learning task can make or break a model’s performance. Internally, we regularly use Google Vizier as the default platform for hyperparameter optimization. Throughout its deployment over the last 5 years, Google Vizier has been used more than 10 million times, over a vast class of applications, including machine learning applications from vision, reinforcement learning, and language, but also scientific applications such as protein discovery and hardware acceleration. As Google Vizier is able to keep track of use patterns in its database, such data, usually consisting of optimization trajectories termed studies, contain very valuable prior information on realistic hyperparameter tuning objectives, and are thus highly attractive for developing better algorithms.

While there have been many previous methods for meta-learning over such data, they share one major drawback: their meta-learning procedures depend heavily on numerical constraints such as the number of hyperparameters and their value ranges, and thus require all tasks to use the exact same hyperparameter search space (i.e., tuning specifications). Additional textual information in the study, such as its description and parameter names, is also rarely used, yet can hold meaningful information about the type of task being optimized. This drawback becomes more severe for larger datasets, which often contain significant amounts of such meaningful information.

Today in “Towards Learning Universal Hyperparameter Optimizers with Transformers”, we are excited to introduce the OptFormer, one of the first Transformer-based frameworks for hyperparameter tuning, learned from large-scale optimization data using flexible text-based representations. While numerous works have previously demonstrated the Transformer’s strong abilities across various domains, few have touched on its optimization-based capabilities, especially over text space. Our core findings demonstrate for the first time some intriguing algorithmic abilities of Transformers: 1) a single Transformer network is capable of imitating highly complex behaviors from multiple algorithms over long horizons; 2) the network is further capable of predicting objective values very accurately, in many cases surpassing Gaussian Processes, which are commonly used in algorithms such as Bayesian Optimization.

Approach: Representing Studies as Tokens

Rather than only using numerical data, as is common with previous methods, our novel approach instead uses concepts from natural language and represents all of the study data as a sequence of tokens, including textual information from the initial metadata. In the animation below, this includes “CIFAR10”, “learning rate”, “optimizer type”, and “Accuracy”, which informs the OptFormer of an image classification task. The OptFormer then generates new hyperparameters to try on the task, predicts the task accuracy, and finally receives the true accuracy, which will be used to generate the next round’s hyperparameters. Using the T5X codebase, the OptFormer is trained in a typical encoder-decoder fashion using standard generative pretraining over a wide range of hyperparameter optimization objectives, including real-world data collected by Google Vizier, as well as public hyperparameter (HPO-B) and blackbox optimization benchmarks (BBOB).
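To make the token representation more concrete, here is a minimal Python sketch of how a study might be flattened into text tokens. It is not the OptFormer’s actual serialization: the `Study` and `Trial` classes, the `quantize` helper, the 1000-level bucketing, the `*` and `|` separators, and the default objective range of [0, 1] are all hypothetical choices made for illustration, and only numeric hyperparameters are handled.

```python
from dataclasses import dataclass, field


@dataclass
class Trial:
    params: dict      # hyperparameter name -> numeric value
    objective: float  # measured objective, e.g. accuracy


@dataclass
class Study:
    title: str           # free-text description, e.g. "CIFAR10"
    objective_name: str  # e.g. "Accuracy"
    trials: list = field(default_factory=list)


def quantize(value, low, high, levels=1000):
    """Map a float in [low, high] to an integer bucket in [0, levels - 1]."""
    frac = (value - low) / (high - low)
    frac = min(max(frac, 0.0), 1.0)  # clip values outside the range
    return min(int(frac * levels), levels - 1)


def serialize_study(study, ranges, objective_range=(0.0, 1.0)):
    """Flatten metadata and trial history into one list of string tokens.

    `ranges` maps each hyperparameter name to its (low, high) bounds.
    """
    names = sorted(ranges)
    # Textual metadata comes first, so the model can condition on it.
    tokens = [f"title:{study.title}", f"objective:{study.objective_name}"]
    tokens += [f"param:{name}" for name in names]
    # Each trial becomes: parameter buckets, "*", objective bucket, "|".
    for trial in study.trials:
        for name in names:
            low, high = ranges[name]
            tokens.append(f"<{quantize(trial.params[name], low, high)}>")
        tokens.append("*")
        tokens.append(f"<{quantize(trial.objective, *objective_range)}>")
        tokens.append("|")
    return tokens
```

Because every study is reduced to the same kind of token sequence, studies with different numbers of hyperparameters or different value ranges can share one model, which is the flexibility the text-based representation is meant to provide.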

Imitating Policies

Because the OptFormer is trained over optimization trajectories produced by various algorithms, it can accurately imitate those algorithms simultaneously. Given a text-based prompt in the metadata naming the designated algorithm (e.g. “Regularized Evolution”), the OptFormer will imitate that algorithm’s behavior.

Over an unseen test function, the OptFormer produces nearly identical optimization curves to the original algorithm. Mean and standard deviation error bars are shown.

Predicting Objective Values

In addition, the OptFormer can predict the objective value being optimized (e.g. accuracy) and provide uncertainty estimates. We compared the OptFormer’s predictions with those of a standard Gaussian Process and found that the OptFormer was able to make significantly more accurate predictions. This can be seen below qualitatively, where the OptFormer’s calibration curve closely follows the ideal diagonal line in a goodness-of-fit test, and quantitatively through standard aggregate metrics such as log predictive density.
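For readers unfamiliar with these two diagnostics, the sketch below shows one common way to compute them in Python. It assumes each prediction is a Gaussian with a mean and standard deviation, which matches a Gaussian Process but is only a stand-in for whatever predictive distribution the OptFormer produces; the function names are hypothetical.

```python
import math
from statistics import NormalDist


def mean_log_predictive_density(means, stddevs, targets):
    """Average Gaussian log-density of each observed target under its prediction.

    Higher is better: the model put more probability mass near the truth.
    """
    total = 0.0
    for mu, sigma, y in zip(means, stddevs, targets):
        total += (-0.5 * math.log(2.0 * math.pi * sigma**2)
                  - (y - mu) ** 2 / (2.0 * sigma**2))
    return total / len(targets)


def calibration_curve(means, stddevs, targets, levels):
    """For each nominal level p, the fraction of targets whose predictive CDF is <= p.

    A well-calibrated predictor returns values close to `levels` themselves,
    i.e. the curve lies near the diagonal.
    """
    cdf_values = [NormalDist(mu, sigma).cdf(y)
                  for mu, sigma, y in zip(means, stddevs, targets)]
    return [sum(c <= p for c in cdf_values) / len(cdf_values) for p in levels]
```

A predictor that is overconfident (standard deviations too small) pushes the CDF values toward 0 and 1, which bends the calibration curve away from the diagonal and also lowers the log predictive density.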

Combining Both: Model-based Optimization

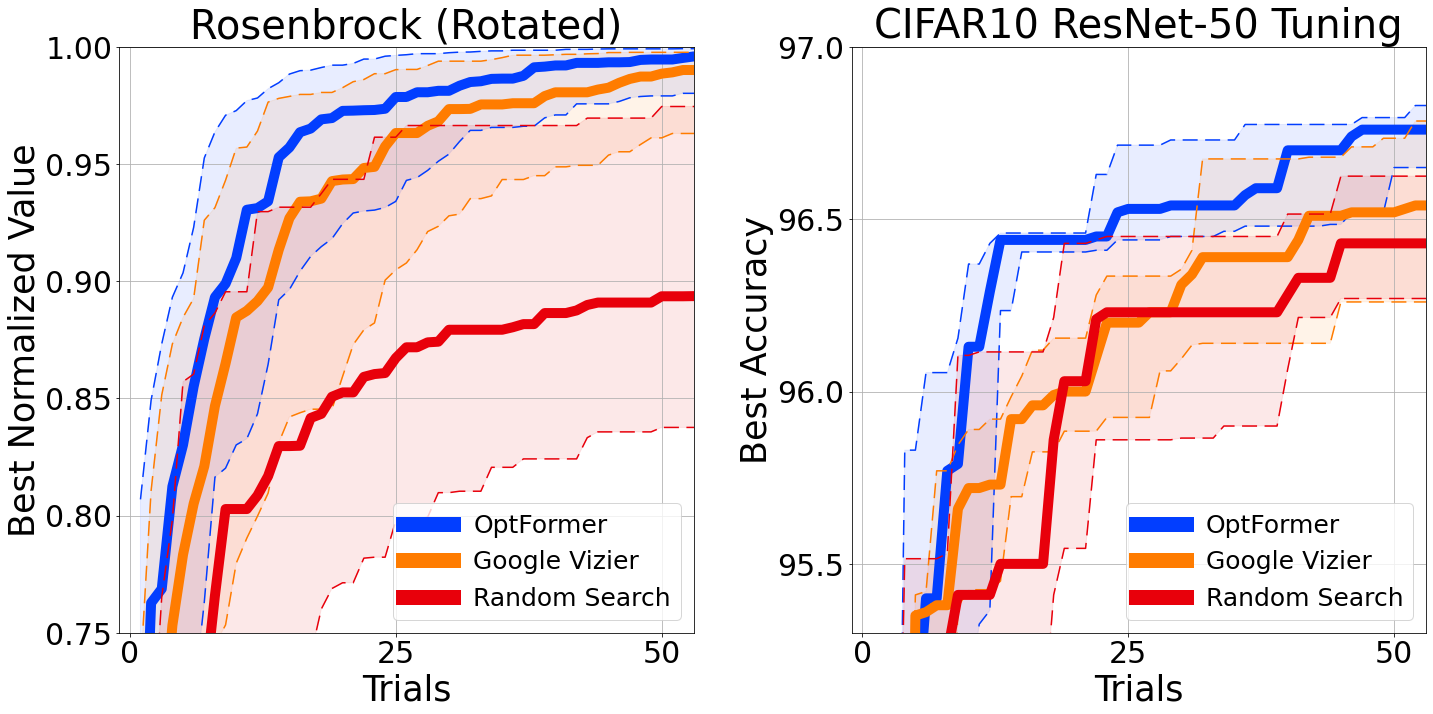

We can now use the OptFormer’s function prediction capability to better guide our imitated policy, similar to techniques found in Bayesian Optimization. Using Thompson Sampling, we rank our imitated policy’s suggestions and select only the best according to the function predictor. This produces an augmented policy capable of outperforming our industry-grade Bayesian Optimization algorithm in Google Vizier when optimizing classic synthetic benchmark objectives and tuning the learning rate hyperparameters of a standard CIFAR-10 training pipeline.

Left: Best-so-far optimization curve over a classic Rosenbrock function. Right: Best-so-far optimization curve over hyperparameters for training a ResNet-50 on CIFAR-10 via init2winit. Both cases use 10 seeds per curve, with error bars at the 25th and 75th percentiles.
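The ranking step in this section can be sketched in a few lines of Python. This is an illustrative, Thompson-Sampling-style selection under simplifying assumptions, not the OptFormer’s implementation: `policy` and `predict` are hypothetical callables standing in for the imitated policy and the function predictor, the predictor is assumed to return a Gaussian mean and standard deviation, and each candidate’s value is sampled independently.

```python
import random


def propose_candidates(policy, history, num_candidates, rng):
    """Draw several suggestions from the (imitated) policy for the same history."""
    return [policy(history, rng) for _ in range(num_candidates)]


def thompson_select(candidates, predict, rng):
    """Pick the candidate with the highest sampled objective value.

    `predict(candidate)` returns (mean, stddev) of the predicted objective.
    Sampling from the prediction, rather than ranking by the mean alone,
    keeps some exploration for candidates the predictor is unsure about.
    """
    scored = []
    for candidate in candidates:
        mean, stddev = predict(candidate)
        scored.append((rng.gauss(mean, stddev), candidate))
    return max(scored, key=lambda pair: pair[0])[1]
```

In a tuning loop, one would call `propose_candidates` with the trials seen so far, pass the result to `thompson_select`, evaluate the chosen hyperparameters on the real task, and append the outcome to the history before the next round.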

Conclusion

Throughout this work, we discovered some useful and previously unknown optimization capabilities of the Transformer. In the future, we hope to pave the way for a universal hyperparameter and blackbox optimization interface that uses both numerical and textual data to facilitate optimization over complex search spaces, and to integrate the OptFormer with the rest of the Transformer ecosystem (e.g. language, vision, code) by leveraging Google’s vast collection of offline AutoML data.

Acknowledgements

The following members of DeepMind and the Google Research Brain Team conducted this research: Yutian Chen, Xingyou Song, Chansoo Lee, Zi Wang, Qiuyi Zhang, David Dohan, Kazuya Kawakami, Greg Kochanski, Arnaud Doucet, Marc’aurelio Ranzato, Sagi Perel, and Nando de Freitas.

We would also like to thank Chris Dyer, Luke Metz, Kevin Murphy, Yannis Assael, Frank Hutter, and Esteban Real for providing valuable feedback, and further thank Sebastian Pineda Arango, Christof Angermueller, and Zachary Nado for technical discussions on benchmarks. In addition, we thank Daniel Golovin, Daiyi Peng, Yingjie Miao, Jack Parker-Holder, Jie Tan, Lucio Dery, and Aleksandra Faust for multiple useful conversations.

Finally, we thank Tom Small for designing the animation for this post.