The Member Expertise

An insured member usually experiences their healthcare in two settings. The primary, and most relatable, is that with their healthcare suppliers, each major care physicians (PCPs) and specialists throughout a spread of varied inpatient and ambulatory settings. The opposite expertise encompasses the entire interactions with their well being plan, which consists of annual profit enrollment, declare funds, care discovery portals, and, now and again, care administration groups which might be designed to help member care.

These separate interactions by themselves are pretty advanced – some examples embrace scheduling companies, supplier therapy throughout all varieties of power and acute circumstances, medical reimbursement, and adjudication by a posh and prolonged billing cycle. Pretty invisible to the member (aside from an in- or out-of-network supplier standing) is a 3rd interplay between the insurer and supplier that performs a important function in how healthcare is delivered, and that’s the supplier community providing.

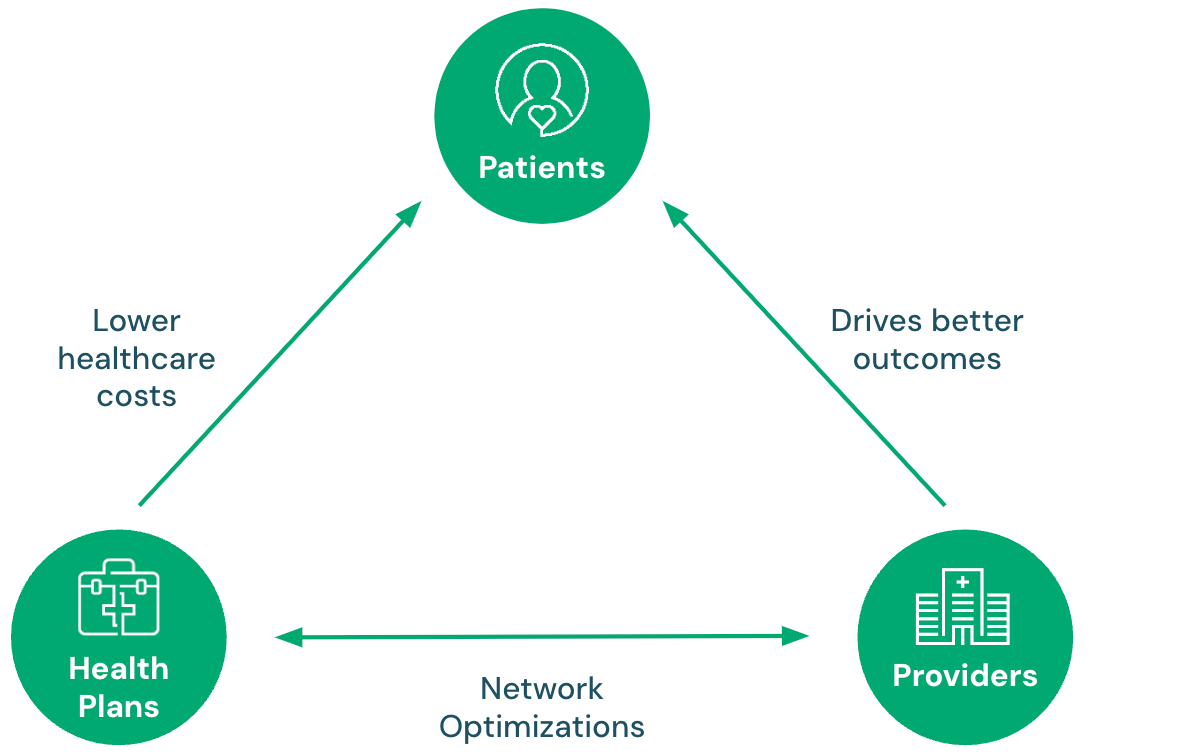

Well being plans routinely negotiate charges and credential suppliers to take part of their plan choices. These community choices differ throughout Medicare, Medicaid, and Industrial members, and might differ throughout employer plan sponsors. Several types of networks could be connected to completely different insurance coverage merchandise, providing completely different incentives to all events concerned. For instance, slender networks are supposed to supply decrease premiums and out-of-pocket prices in trade for having a smaller, native group of suppliers within the community.

Well being plans have incentives to optimize the supplier community providing to plan sponsors as a result of an optimum supplier community delivers higher high quality look after sufferers, at a decrease price. Such networks can higher synthesize care therapy plans, scale back fraud and waste, and provide equitable entry to care to call a couple of advantages.

Constructing an optimum community is less complicated stated than executed, nonetheless.

Optimizations Behind the Scenes

Optimization shouldn’t be easy. The Healthcare Effectiveness Information and Info Set (HEDIS) is a instrument utilized by greater than 90 % of U.S. well being plans to measure efficiency on essential dimensions of care and repair. A community excelling at a HEDIS measure of high quality comparable to Breast Most cancers Screening shouldn’t be helpful for a inhabitants that does not consist of girls over the age of fifty. Evaluation is fluid because the wants of a member inhabitants and strengths of a doctor group repeatedly evolve.

Compounding the evaluation of aligning membership must supplier capabilities is knowing who has entry to care from a geospatial standpoint. In different phrases, are members in a position to bodily entry acceptable supplier care as a result of that supplier is reachable when it comes to distance between areas. That is the place Databricks, constructed on the extremely scalable compute engine Apache Spark™, differentiates itself from historic approaches to the geospatial neighbor drawback.

Resolution Accelerator for Scalable Community Evaluation

Healthcare geospatial comparisons are typically phrased as “Who’re the closest ‘X’ suppliers positioned inside ‘Y’ distance of members?” That is the foundational query to know who can present the very best high quality of care or present speciality companies to a given member inhabitants. Answering this query traditionally falls into both geohashing, an method that basically subdivides house on a map and buckets factors in a grid collectively – permitting for scalability however resulting in outcomes that lack precision, or direct comparability of factors and distance which is correct however not scalable.

Databricks solves for each scalability and accuracy with a answer accelerator by leveraging numerous strengths throughout the Spark ecosystem. Enter framing matches the overall query of, given a “Y” radius, return the closest “X” areas, and knowledge enter requires latitude/longitude values and optionally accepts an identifier area that can be utilized to extra simply relate knowledge.

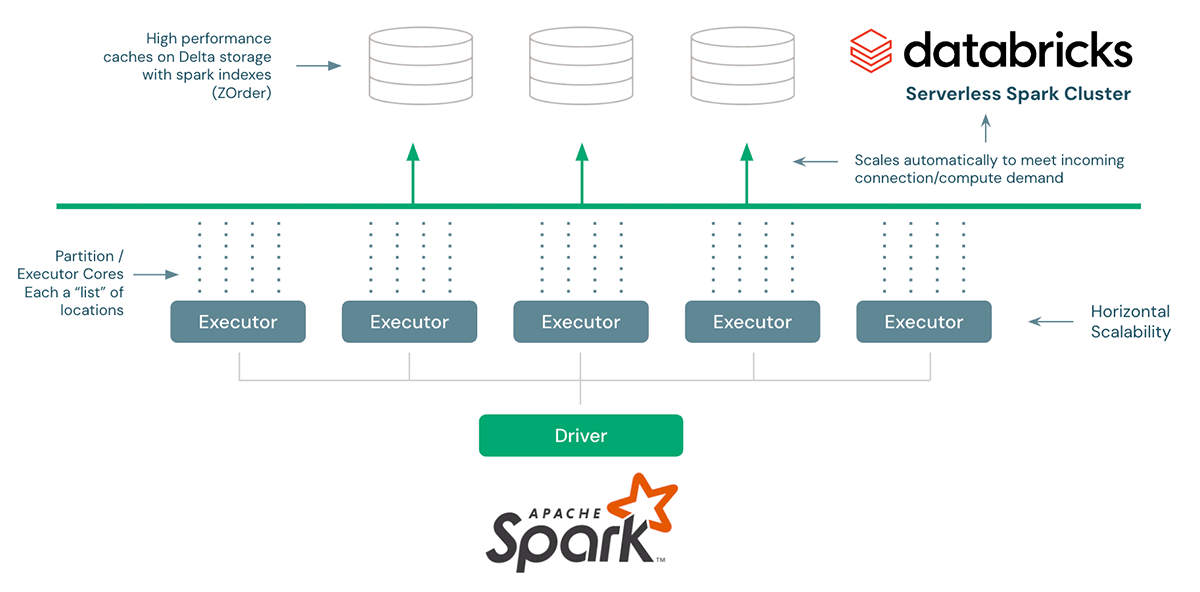

Configuration parameters within the accelerator embrace setting the diploma of parallelism to distribute compute for sooner runtimes,, a serverless connection string (serverless is a key element to the scalability and additional described under), and a brief working desk that’s used as a quick knowledge cache operation and optimized utilizing Spark Indexes (ZOrder) as a placeholder to your knowledge.

Output from this answer accelerator gives the origin location in addition to an array of all surrounding neighbors, their distances from the origin, and the search time for every document (to permit additional optimizations and tuning).

So how & why does this scalability work?

It is very important word that Spark is a horizontally scalable platform. Which means, it may scale related duties throughout an infinite variety of machines. Utilizing this sample, if we’re in a position to compute a extremely environment friendly calculation for one member and its nearest neighboring supplier areas, we will infinitely scale this answer utilizing Spark.

For the quick neighborhood search of a single member, we’d like a fairly environment friendly pruning approach in order that we don’t want to look the complete supplier dataset each single time and really quick knowledge retrieval (constantly sub-second response). The preliminary method to pruning makes use of a sort of geohash for this, however sooner or later will transfer to a extra environment friendly methodology with Databricks H3 representations. For very quick retrieval, we initially explored utilizing a cloud NoSQL, however we achieved drastically higher outcomes utilizing Databricks Serverless SQL and Spark indexes (the unique code for CosmosDB is included and could be applied on different NoSQLs). The structure for the Resolution Accelerator appears like this:

Spark historically has neither been environment friendly on small queries nor gives scalable JDBC connection administration to run numerous, massively parallel workloads. That is now not the case when utilizing the Databricks Lakehouse, which incorporates Serverless SQL and Delta Lake together with methods like ZOrder indexes. As well as, Databricks’ latest announcement of liquid clustering will provide an much more performant different to ZOrdering.

And at last, a fast word on scaling this accelerator. As a result of runtimes are depending on a mixture of non-trivial components just like the density of the areas, radius of search, and max outcomes returned, we due to this fact present sufficient visibility into efficiency to have the ability to tune this workload. The horizontal scale beforehand talked about happens by rising the variety of partitions within the configuration parameters. Some fast math with the overall variety of information, common lookup time, and variety of partitions tells you anticipated runtime. As a common rule, have 1 CPU aligned to every partition (this quantity can differ relying on circumstances).

Pattern Evaluation Use Circumstances

Evaluation at scale can present useful info like measuring equitable entry to care, offering price efficient suggestions on imaging or diagnostic testing areas, and with the ability to appropriately refer members to the very best performing suppliers reachable. Evaluating the appropriate website of look after a member, just like the aggressive dynamics seen in well being plan worth transparency, is a mixture of each worth and high quality.

These use instances end in tangible financial savings and higher outcomes for sufferers. As well as, nearest neighbor searches could be utilized past only for a well being plan community. Suppliers are in a position to establish affected person utilization patterns, provide chains can higher handle stock and re-routing, and pharmaceutical corporations can enhance detailing applications.

Extra Methods to Construct Smarter Networks with Higher High quality Information

We perceive not each healthcare group could also be ready the place they’re prepared to research supplier knowledge within the context of community optimization. Ribbon Well being, a company specializing in supplier listing administration and knowledge high quality, provides constructed on Databricks options to supply a foundational layer that may assist organizations extra rapidly and successfully handle their supplier knowledge.

Ribbon Well being is likely one of the early companions represented within the Databricks Market, an open market for exchanging knowledge merchandise comparable to datasets, notebooks, dashboards, and machine studying fashions. Now you can discover Ribbon Well being’s Supplier Listing & Location Listing on the Databricks Market so well being plans and care suppliers/navigators can begin utilizing this knowledge at the moment.

The info consists of NPIs, follow areas, contact info with confidence scores, specialties, location sorts, relative price and expertise, areas of focus, and accepted insurance coverage. The dataset additionally has broad protection, together with 99.9% of suppliers, 1.7M distinctive service areas, and insurance coverage protection for 90.1% of lives lined throughout all strains of enterprise and payers. The info is repeatedly checked and cross-checked to make sure probably the most up-to-date info is proven.

Supplier networks, given their function in price and high quality, are foundational to each the efficiency of the well being plan and member expertise. With these knowledge units, organizations can now extra effectively handle, customise, and preserve their very own supplier knowledge.