A long-standing problem at the intersection of computer vision and computer graphics, view synthesis is the task of creating new views of a scene from multiple pictures of that scene. It has received increased attention [1, 2, 3] since the introduction of neural radiance fields (NeRF). The problem is challenging because, to accurately synthesize new views of a scene, a model needs to capture many types of information (its detailed 3D structure, materials, and illumination) from a small set of reference images.

In this post, we present recently published deep learning models for view synthesis. In “Light Field Neural Rendering” (LFNR), presented at CVPR 2022, we address the challenge of accurately reproducing view-dependent effects by using transformers that learn to combine reference pixel colors. Then in “Generalizable Patch-Based Neural Rendering” (GPNR), to be presented at ECCV 2022, we address the challenge of generalizing to unseen scenes by using a sequence of transformers with canonicalized positional encoding that can be trained on a set of scenes to synthesize views of new scenes. These models have some unique features. They perform image-based rendering, combining colors and features from the reference images to render novel views. They are purely transformer-based, operating on sets of image patches, and they leverage a 4D light field representation for positional encoding, which helps to model view-dependent effects.
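
The classic 4D light field representation comes from the two-plane parameterization of Light Field Rendering: a ray is identified by the points where it crosses two parallel planes. The sketch below assumes that parameterization (with planes placed at z = 0 and z = 1) followed by a NeRF-style sinusoidal encoding. The function names and plane placement are illustrative assumptions, and the exact ray parameterization used by LFNR and GPNR may differ.

```python
import numpy as np

def two_plane_coords(origin, direction, z0=0.0, z1=1.0):
    """Map a ray to 4D light-field coordinates (u, v, s, t).

    (u, v) is where the ray crosses the plane z = z0 and (s, t) where it
    crosses z = z1. Rays parallel to the planes cannot be represented.
    """
    origin = np.asarray(origin, dtype=float)
    direction = np.asarray(direction, dtype=float)
    if np.isclose(direction[2], 0.0):
        raise ValueError("ray is parallel to the parameterization planes")
    # Solve origin.z + lam * direction.z = plane_z for each plane.
    lam0 = (z0 - origin[2]) / direction[2]
    lam1 = (z1 - origin[2]) / direction[2]
    p0 = origin + lam0 * direction
    p1 = origin + lam1 * direction
    return np.array([p0[0], p0[1], p1[0], p1[1]])

def fourier_features(x, num_freqs=4):
    """Sinusoidal encoding of each coordinate at num_freqs octave frequencies."""
    x = np.asarray(x, dtype=float)
    freqs = 2.0 ** np.arange(num_freqs) * np.pi        # (num_freqs,)
    angles = x[..., None] * freqs                       # (..., dims, num_freqs)
    enc = np.concatenate([np.sin(angles), np.cos(angles)], axis=-1)
    return enc.reshape(*x.shape[:-1], -1)               # (..., dims * 2 * num_freqs)

# A ray starting at (0, 0, -1) heading along (0.1, 0, 1) crosses z=0 at (0.1, 0)
# and z=1 at (0.2, 0).
coords = two_plane_coords([0.0, 0.0, -1.0], [0.1, 0.0, 1.0])
encoding = fourier_features(coords)
```

Because two rays with the same direction but different origins along that direction map to the same four numbers, this encoding describes the ray itself rather than a point in space, which is what makes it a natural input for modeling view-dependent color.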

We train deep learning models that are able to produce new views of a scene given a few images of it. These models are particularly effective when handling view-dependent effects like the refractions and translucency on the test tubes. This animation is compressed; see the original-quality renderings here. Source: Lab scene from the NeX/Shiny dataset.

Overview

The input to the models consists of a set of reference images and their camera parameters (focal length, position, and orientation in space), along with the coordinates of the target ray whose color we want to determine. To produce a new image, we start from the camera parameters of the input images, obtain the coordinates of the target rays (each corresponding to a pixel), and query the model for each.
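
Obtaining one target ray per pixel from camera parameters can be sketched as follows. This assumes a pinhole camera with the focal length in pixels, the principal point at the image center, and an OpenCV-style camera frame (x right, y down, z forward); `generate_rays` is a hypothetical helper, not code from the papers.

```python
import numpy as np

def generate_rays(height, width, focal, rotation, position):
    """Return per-pixel ray origins and unit directions in world space, each (H, W, 3).

    Assumes a pinhole camera with the principal point at the image center,
    `focal` in pixels, and an OpenCV-style camera frame (x right, y down,
    z forward). `rotation` is the 3x3 camera-to-world rotation and `position`
    the camera center in world coordinates.
    """
    rotation = np.asarray(rotation, dtype=float)
    position = np.asarray(position, dtype=float)
    # Pixel centers, shape (H, W) each; ys indexes rows, xs indexes columns.
    ys, xs = np.meshgrid(np.arange(height) + 0.5, np.arange(width) + 0.5, indexing="ij")
    dirs_cam = np.stack([(xs - width / 2) / focal,
                         (ys - height / 2) / focal,
                         np.ones_like(xs)], axis=-1)
    dirs_world = dirs_cam @ rotation.T  # rotate every direction into world space
    dirs_world /= np.linalg.norm(dirs_world, axis=-1, keepdims=True)
    origins = np.broadcast_to(position, dirs_world.shape)  # all rays start at the camera center
    return origins, dirs_world

# A 480x640 view with a 500-pixel focal length, placed at the world origin.
origins, directions = generate_rays(480, 640, 500.0, np.eye(3), np.zeros(3))
```

Each (origin, direction) pair is one query to the model, so rendering an H x W image means H x W independent queries, which can be batched freely.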

Instead of processing each reference image in full, we look only at the regions that are likely to influence the target pixel. These regions are determined via epipolar geometry, which maps each target pixel to a line on each reference frame. For robustness, we take small regions around a number of points on the epipolar line, resulting in the set of patches that will actually be processed by the model. The transformers then act on this set of patches to obtain the color of the target pixel.
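
The patch-gathering step can be sketched by sampling depths along the target ray, projecting those 3D points into a reference camera (every projection lands on the target pixel's epipolar line), and cutting a small window around each projection. This is a minimal sketch assuming a pinhole camera with the principal point at the image center and `rotation` given as camera-to-world; it clamps out-of-bounds points to the image border for simplicity, and `project` and `epipolar_patches` are hypothetical names.

```python
import numpy as np

def project(points, focal, rotation, position, height, width):
    """Project world points (N, 3) into a pinhole camera.

    `rotation` is the 3x3 camera-to-world rotation and `position` the camera
    center, so world-to-camera is rotation.T @ (X - position). Returns pixel
    coordinates (N, 2) as (x, y) and camera-space depth (N,).
    """
    cam = (np.asarray(points, float) - np.asarray(position, float)) @ np.asarray(rotation, float)
    z = cam[:, 2]
    xy = focal * cam[:, :2] / z[:, None] + np.array([width / 2, height / 2])
    return xy, z

def epipolar_patches(image, ray_origin, ray_dir, depths, focal, rotation, position, patch=3):
    """Cut `patch` x `patch` windows around the projections of depth samples
    taken along a target ray; the projections lie on its epipolar line."""
    h, w = image.shape[:2]
    pts = np.asarray(ray_origin, float) + np.asarray(depths, float)[:, None] * np.asarray(ray_dir, float)
    xy, z = project(pts, focal, rotation, position, h, w)
    r = patch // 2  # assumes an odd patch size
    padded = np.pad(image, ((r, r), (r, r), (0, 0)), mode="edge")
    # Clamp to the image so every sample yields a patch (a simplification).
    cols = np.clip(np.floor(xy[:, 0]).astype(int), 0, w - 1)
    rows = np.clip(np.floor(xy[:, 1]).astype(int), 0, h - 1)
    # Pixel (row, col) sits at (row + r, col + r) in `padded`, so its window starts at (row, col).
    patches = np.stack([padded[y:y + patch, x:x + patch] for y, x in zip(rows, cols)])
    return patches, xy, z > 0  # mask: sample lies in front of the reference camera
```

With a reference camera shifted one unit along x from a target ray that looks down the z-axis, depth samples at 1, 2, and 5 project to x = 6, 11, and 14 on the row y = 16 of a 32 x 32 image (focal length 10): the samples slide along a horizontal epipolar line as depth grows.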

Transformers are especially useful in this setting since their self-attention mechanism naturally takes sets as inputs, and the attention weights themselves can be used to combine reference view colors and features to predict the output pixel colors. These transformers follow the architecture introduced in ViT.
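
To illustrate how attention weights can double as blending weights, the sketch below computes scaled dot-product attention between a query for the target ray and keys for the reference samples, then applies the weights directly to the reference colors. The query and key features are assumed inputs (in the real models they come from learned transformer layers), so this shows the mechanism, not the papers' architecture.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def attention_blend(query, keys, colors):
    """Blend reference colors with scaled dot-product attention weights.

    query:  (d,)   feature for the target ray (assumed given)
    keys:   (n, d) features of the n reference samples (assumed given)
    colors: (n, 3) RGB colors of those samples
    Returns the blended RGB color and the attention weights.
    """
    scores = keys @ query / np.sqrt(query.shape[-1])
    weights = softmax(scores)
    return weights @ colors, weights
```

Because the weights are non-negative and sum to one, the predicted color is a convex combination of observed reference colors, which is the defining property of image-based rendering: the model selects and mixes what the reference views saw rather than generating color from scratch.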

To predict the color of one pixel, the models take a set of patches extracted around the epipolar line of each reference view. Image source: LLFF dataset.

Light Field Neural Rendering

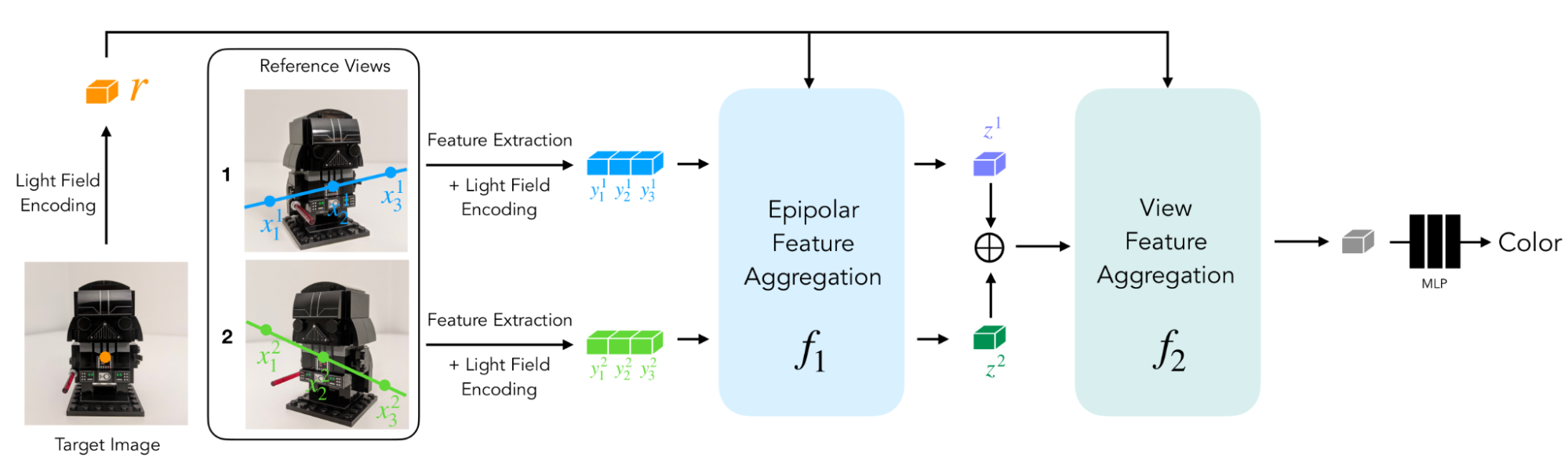

In Light Field Neural Rendering (LFNR), we use a sequence of two transformers to map the set of patches to the target pixel color. The first transformer aggregates information along each epipolar line, and the second along each reference image. We can interpret the first transformer as finding potential correspondences of the target pixel on each reference frame, and the second as reasoning about occlusion and view-dependent effects, which are common challenges of image-based rendering.
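
The two-stage structure can be sketched by collapsing the feature tensor one axis at a time: first along each epipolar line, then across reference views. In this simplified version, each transformer is replaced by a single attention-pooling step with one query vector (`q_line` and `q_view` are hypothetical stand-ins for learned parameters); the actual models use full ViT-style transformer layers.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def attention_pool(feats, query, axis):
    """Collapse non-negative `axis` of `feats` (..., d) using one query vector."""
    scores = (feats @ query) / np.sqrt(query.shape[0])  # drops the feature dim
    w = softmax(scores, axis=axis)
    return (w[..., None] * feats).sum(axis=axis)

def lfnr_style_aggregate(feats, q_line, q_view):
    """feats: (V, P, d) features of P patches along the epipolar line in each of V views."""
    per_view = attention_pool(feats, q_line, axis=1)  # (V, d): one candidate match per view
    return attention_pool(per_view, q_view, axis=0)   # (d,): fused across views
```

With all-zero queries the weights are uniform, so the result reduces to the mean over all patches; learned queries instead let the first stage concentrate on the likely correspondence along each epipolar line and the second stage weigh the views against each other.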

LFNR uses a sequence of two transformers to map a set of patches extracted along epipolar lines to the target pixel color.

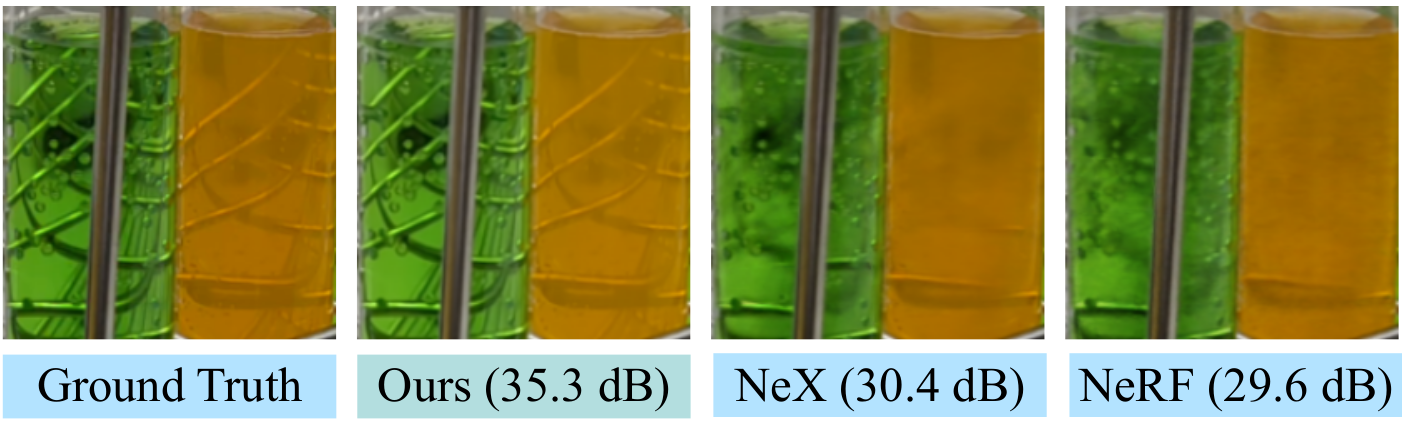

LFNR improved the state of the art on the most popular view synthesis benchmarks (Blender and Real Forward-Facing scenes from NeRF, and Shiny from NeX) with margins as large as 5 dB peak signal-to-noise ratio (PSNR). This corresponds to reducing the pixel-wise (root-mean-square) error by a factor of about 1.8. We show qualitative results on challenging scenes from the Shiny dataset below:
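
The factor of 1.8 follows from the definition PSNR = 20 · log10(MAX / RMSE): a gain of g dB divides the root-mean-square error by 10^(g/20), and 10^(5/20) ≈ 1.78. A small check, with hypothetical helper names:

```python
import math

def error_reduction_factor(psnr_gain_db):
    """Factor by which RMSE shrinks for a given PSNR improvement in dB.

    PSNR = 20 * log10(MAX / RMSE), so a gain of g dB divides RMSE by 10 ** (g / 20).
    """
    return 10 ** (psnr_gain_db / 20)

def psnr(mse, max_val=1.0):
    """Peak signal-to-noise ratio in dB for a given mean squared error."""
    return 10 * math.log10(max_val ** 2 / mse)

print(round(error_reduction_factor(5.0), 2))  # 1.78
```

By the same formula, the 0.5 to 1.0 dB average gains reported for GPNR below correspond to dividing the RMSE by roughly 1.06 to 1.12.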

LFNR reproduces challenging view-dependent effects like the rainbow and reflections on the CD, and the reflections, refractions, and translucency on the bottles. This animation is compressed; see the original-quality renderings here. Source: CD scene from the NeX/Shiny dataset.

Prior methods such as NeX and NeRF fail to reproduce view-dependent effects like the translucency and refractions in the test tubes on the Lab scene from the NeX/Shiny dataset. See also our video of this scene at the top of the post and the original-quality outputs here.

Generalizing to New Scenes

One limitation of LFNR is that the first transformer collapses the information along each epipolar line independently for each reference image. This means that it decides which information to preserve based only on the output ray coordinates and the patches from each reference image, which works well when training on a single scene (as most neural rendering methods do), but does not generalize across scenes. Generalizable methods are important because they can be applied to new scenes without retraining.

We overcome this limitation of LFNR in Generalizable Patch-Based Neural Rendering (GPNR). We add a transformer that runs before the other two and exchanges information between points at the same depth over all reference images. For example, this first transformer looks at the columns of the patches from the park bench shown above and can use cues like the flower that appears at corresponding depths in two views, which indicates a potential match. Another key idea of this work is to canonicalize the positional encoding based on the target ray, because to generalize across scenes, it is necessary to represent quantities in relative rather than absolute frames of reference. The animation below shows an overview of the model.
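
One way to make positional encoding relative to the target ray is to express every reference ray in a coordinate frame attached to the target ray, with its origin at the target ray's origin and its z-axis along the target direction. The sketch below is an assumption about how such a canonicalization could look, not the GPNR implementation. In particular, the roll of the frame around the target ray is fixed by an arbitrary helper vector, so only quantities like depth along the ray and distance from it are fully rotation-invariant here.

```python
import numpy as np

def target_ray_frame(origin, direction):
    """Orthonormal frame whose z-axis is the target ray direction.

    The roll around the ray is fixed by an arbitrary helper vector
    (an assumption of this sketch).
    """
    z = np.asarray(direction, float)
    z = z / np.linalg.norm(z)
    # Pick a helper that is not nearly parallel to z.
    helper = np.array([0.0, 1.0, 0.0]) if abs(z[1]) < 0.9 else np.array([1.0, 0.0, 0.0])
    x = np.cross(helper, z)
    x /= np.linalg.norm(x)
    y = np.cross(z, x)
    basis = np.stack([x, y, z], axis=1)  # columns are the frame axes
    return np.asarray(origin, float), basis

def canonicalize_ray(ray_origin, ray_dir, frame):
    """Express a reference ray's origin and direction in the target-ray frame."""
    o_t, basis = frame
    return (np.asarray(ray_origin, float) - o_t) @ basis, np.asarray(ray_dir, float) @ basis
```

Translating or rotating the whole scene leaves a point's depth along the target ray and its distance from that ray unchanged in this frame. That is the kind of invariance that can help a model trained on some scenes transfer to others, since absolute world coordinates differ arbitrarily between scenes.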

GPNR consists of a sequence of three transformers that map a set of patches extracted along epipolar lines to a pixel color. Image patches are mapped via the linear projection layer to initial features (shown as blue and green boxes). Then these features are successively refined and aggregated by the model, resulting in the final feature/color represented by the gray rectangle. Park bench image source: LLFF dataset.
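
The linear projection and the extra first stage in GPNR can be sketched as self-attention over the view axis, run independently at each depth sample. The weight matrices are hypothetical stand-ins for learned parameters, and a single attention head with a residual connection stands in for a full transformer layer; the refined features would then go through the two aggregation stages described for LFNR.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def project_patches(patches, w_proj):
    """Linear projection of flattened patches: (V, P, k, k, C) -> (V, P, d)."""
    v, p = patches.shape[:2]
    return patches.reshape(v, p, -1) @ w_proj

def cross_view_attention(feats, w_q, w_k, w_v):
    """Single-head self-attention over the view axis, separately per depth sample.

    feats: (V, P, d). Index p refers to the same depth along the target ray in
    every view, so attending across V lets the views compare candidate matches.
    """
    x = np.swapaxes(feats, 0, 1)                                   # (P, V, d): group by depth
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ np.swapaxes(k, -1, -2) / np.sqrt(q.shape[-1])     # (P, V, V)
    out = softmax(scores, axis=-1) @ v                             # (P, V, d)
    return feats + np.swapaxes(out, 0, 1)                          # residual, back to (V, P, d)
```

Since attention runs per depth index, changing the patches at one depth does not affect the refined features at other depths, and reordering the reference views simply reorders the output; the model therefore treats the reference images as an unordered set.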

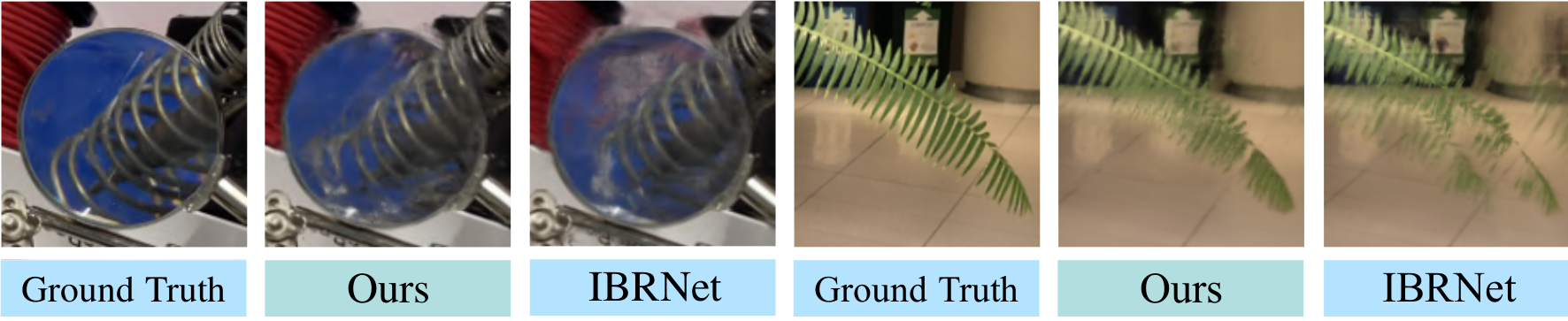

To evaluate generalization performance, we train GPNR on a set of scenes and test it on new scenes. GPNR improved the state of the art on several benchmarks (following the IBRNet and MVSNeRF protocols) by 0.5–1.0 dB on average. On the IBRNet benchmark, GPNR outperforms the baselines while using only 11% of the training scenes. The results below show new views of unseen scenes rendered with no fine-tuning.

GPNR-generated views of held-out scenes, without any fine-tuning. This animation is compressed; see the original-quality renderings here. Source: IBRNet collected dataset.

Details of GPNR-generated views on held-out scenes from NeX/Shiny (left) and LLFF (right), without any fine-tuning. GPNR reproduces the details on the leaf and the refractions through the lens more accurately than IBRNet.

Future Work

One limitation of most neural rendering methods, including ours, is that they require camera poses for each input image. Poses are not easy to obtain and typically come from offline optimization methods that can be slow, limiting possible applications, such as those on mobile devices. Research on jointly learning view synthesis and input poses is a promising future direction. Another limitation of our models is that they are computationally expensive to train. There is an active line of research on faster transformers, which might help improve our models' efficiency. For the papers, more results, and open-source code, you can check out the project pages for “Light Field Neural Rendering” and “Generalizable Patch-Based Neural Rendering”.

Potential Misuse

In our research, we aim to accurately reproduce an existing scene using images from that scene, so there is little room to generate fake or non-existent scenes. Our models assume static scenes, so synthesizing moving objects, such as people, will not work.

Acknowledgments

All the hard work was done by our amazing intern, Mohammed Suhail, a PhD student at UBC, in collaboration with Carlos Esteves and Ameesh Makadia from Google Research, and Leonid Sigal from UBC. We are grateful to Corinna Cortes for supporting and encouraging this project.

Our work is inspired by NeRF, which sparked the recent interest in view synthesis, and IBRNet, which first considered generalization to new scenes. Our light ray positional encoding is inspired by the seminal paper Light Field Rendering, and our use of transformers follows ViT.

Video results use scenes from the LLFF, Shiny, and IBRNet collected datasets.