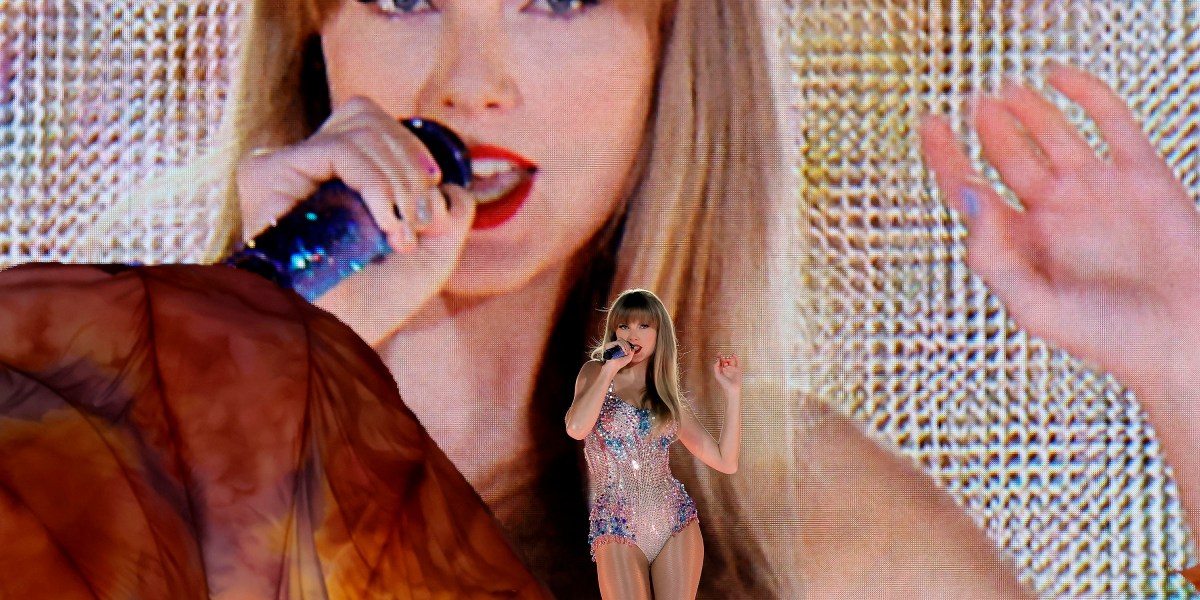

I can only imagine how you must be feeling after sexually explicit deepfake videos of you went viral on X. Disgusted. Distressed, perhaps. Humiliated, even.

I’m really sorry this is happening to you. Nobody deserves to have their image exploited like that. But if you aren’t already, I’m asking you to be livid.

Livid that this is happening to you and so many other women and marginalized people around the world. Livid that our current laws are woefully inept at protecting us from violations like this. Livid that men (because let’s face it, it’s mostly men doing this) can violate us in such an intimate way and walk away unscathed and unidentified. Livid that the companies that enable this material to be created and shared widely face no consequences either, and can profit off such a horrendous use of their technology.

Deepfake porn has been around for years, but its latest incarnation is its worst one yet. Generative AI has made it ridiculously easy and cheap to create realistic deepfakes. And nearly all deepfakes are made for porn. Just one image plucked off social media is enough to generate something passable. Anyone who has ever posted a photo online, or had one published of them, is a sitting duck.

First, the bad news. At the moment, we have no good ways to fight this. I just published a story on three ways we can combat nonconsensual deepfake porn, which include watermarks and data-poisoning tools. But the reality is that there is no neat technical fix for this problem. The fixes we do have are still experimental and haven’t been adopted widely by the tech sector, which limits their power.

The tech sector has so far been unwilling or unmotivated to make changes that would prevent such material from being created with its tools or shared on its platforms. That is why we need regulation.

People with power, like yourself, can fight with money and lawyers. But low-income women, women of color, women fleeing abusive partners, women journalists, and even children are all seeing their likeness stolen and pornified, with no way to seek justice or support. Any one of your fans could be hurt by this development.

The good news is that the fact that this happened to you means politicians in the US are listening. You have a rare opportunity, and momentum, to push through real, actionable change.

I know you fight for what is right and aren’t afraid to speak up when you see injustice. There will be intense lobbying against any rules that would affect tech companies. But you have a platform and the power to convince lawmakers across the board that rules to combat these sorts of deepfakes are a necessity. Tech companies and politicians need to know that the days of dithering are over. The people creating these deepfakes need to be held accountable.

You once caused an actual earthquake. Winning the fight against nonconsensual deepfakes would have an even more earth-shaking impact.