While model design and training data are key factors in a deep neural network’s (DNN’s) success, less often discussed is the specific optimization method used for updating the model parameters (weights). Training DNNs involves minimizing a loss function that measures the discrepancy between the ground truth labels and the model’s predictions. Training is carried out by backpropagation, which adjusts the model weights via gradient descent steps. Gradient descent, in turn, updates the weights by using the gradient (i.e., derivative) of the loss with respect to the weights.
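To make the update rule concrete, here is a minimal sketch of plain gradient descent on a one-parameter model with a mean squared error loss. The toy data, the learning rate, and the function names are illustrative assumptions and are not part of LocoProp.

```python
# Minimal sketch: gradient descent on a one-weight least-squares problem.
# Data, learning rate, and step count are illustrative assumptions.

def loss(w, xs, ys):
    """Mean squared error between predictions w * x and labels y."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def grad(w, xs, ys):
    """Derivative of the mean squared error with respect to w."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)

def gradient_descent(w, xs, ys, lr=0.1, steps=50):
    for _ in range(steps):
        w = w - lr * grad(w, xs, ys)  # move against the gradient
    return w

xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]  # generated by the "true" weight w = 2
w_final = gradient_descent(0.0, xs, ys)
print(round(w_final, 4))  # prints 2.0
```

Each step scales the gradient by the learning rate; here the error shrinks by a constant factor per step because the loss is a simple quadratic in `w`.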

The simplest weight update corresponds to stochastic gradient descent, which, in every step, moves the weights in the negative direction of the gradients (with an appropriate step size, a.k.a. the learning rate). More advanced optimization methods modify the direction of the negative gradient before updating the weights by using information from past steps and/or local properties of the loss function around the current weights (such as curvature information). For instance, a momentum optimizer encourages moving along the average direction of past updates, and the AdaGrad optimizer scales each coordinate based on the past gradients. These optimizers are commonly known as first-order methods since they generally modify the update direction using only information from the first-order derivative (i.e., the gradient). More importantly, the components of the weight parameters are treated independently from each other.
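The first-order rules above can be sketched in a few lines of Python. This is a simplified illustration, assuming a heavy-ball form of momentum and the textbook AdaGrad accumulator; the function names and default hyperparameters are hypothetical. Note that each update changes coordinate `i` using only `g[i]` and that coordinate's own history, which is exactly the independence between weight components described above.

```python
import math

def sgd_step(w, g, lr):
    """Plain SGD: move each coordinate against its own gradient."""
    return [wi - lr * gi for wi, gi in zip(w, g)]

def momentum_step(w, g, v, lr, beta=0.9):
    """Heavy-ball momentum: v accumulates a decaying sum of past gradients."""
    v = [beta * vi + gi for vi, gi in zip(v, g)]
    w = [wi - lr * vi for wi, vi in zip(w, v)]
    return w, v

def adagrad_step(w, g, s, lr, eps=1e-8):
    """AdaGrad: divide each coordinate by the root of its summed squared gradients."""
    s = [si + gi * gi for si, gi in zip(s, g)]
    w = [wi - lr * gi / (math.sqrt(si) + eps) for wi, gi, si in zip(w, g, s)]
    return w, s

# Usage: one step of each rule from the origin with the same gradient.
g = [1.0, -2.0]
print(sgd_step([0.0, 0.0], g, lr=0.1))  # prints [-0.1, 0.2]
w_mom, v = momentum_step([0.0, 0.0], g, [0.0, 0.0], lr=0.1)
w_ada, s = adagrad_step([0.0, 0.0], g, [0.0, 0.0], lr=0.1)
print(w_mom, w_ada)
```

On its first step, AdaGrad moves both coordinates by about `lr` in magnitude even though the raw gradients differ by a factor of two, because each coordinate is divided by its own accumulated gradient norm.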

More advanced optimization methods, such as Shampoo and K-FAC, capture the correlations between gradients of parameters and have been shown to improve convergence, reducing the number of iterations and improving the quality of the solution. These methods capture information about the local changes of the derivatives of the loss, i.e., changes in gradients. Using this additional information, higher-order optimizers can discover much more efficient update directions for training models by taking into account the correlations between different groups of parameters. On the downside, calculating higher-order update directions is computationally more expensive than first-order updates. The operation uses more memory for storing statistics and involves matrix inversion, thus hindering the applicability of higher-order optimizers in practice.
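To see why capturing correlations costs memory and compute, the sketch below contrasts a diagonal AdaGrad step with a full-matrix AdaGrad-style step that keeps a d x d gradient statistic and applies its inverse square root. This is not Shampoo or K-FAC; both of those approximate such full-matrix preconditioners with smaller Kronecker-factored blocks. The function names and the `eps` damping value are illustrative assumptions.

```python
import numpy as np

def inverse_root(mat, eps=1e-6):
    """Return (mat + eps*I)^(-1/2) for a symmetric PSD matrix via eigendecomposition."""
    vals, vecs = np.linalg.eigh(mat + eps * np.eye(mat.shape[0]))
    return (vecs * vals ** -0.5) @ vecs.T  # V diag(vals^-1/2) V^T

def full_matrix_step(w, g, stats, lr):
    """Full-matrix step: the d x d statistic couples all coordinates."""
    stats = stats + np.outer(g, g)  # O(d^2) memory
    precond = inverse_root(stats)   # O(d^3) compute per step
    return w - lr * precond @ g, stats

def diagonal_step(w, g, stats, lr, eps=1e-6):
    """Diagonal AdaGrad for comparison: only per-coordinate statistics are kept."""
    stats = stats + g * g           # O(d) memory
    return w - lr * g / np.sqrt(stats + eps), stats

# Usage: a gradient whose two coordinates move together.
g = np.array([1.0, 1.0])
w_full, _ = full_matrix_step(np.zeros(2), g, np.zeros((2, 2)), lr=0.1)
w_diag, _ = diagonal_step(np.zeros(2), g, np.zeros(2), lr=0.1)
print(w_full, w_diag)
```

Here the full-matrix step recognizes that both coordinates share one direction and takes a step of length about `lr` along it, whereas the diagonal step moves each coordinate by about `lr` separately. The price is storing the d x d matrix and decomposing it at every step.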

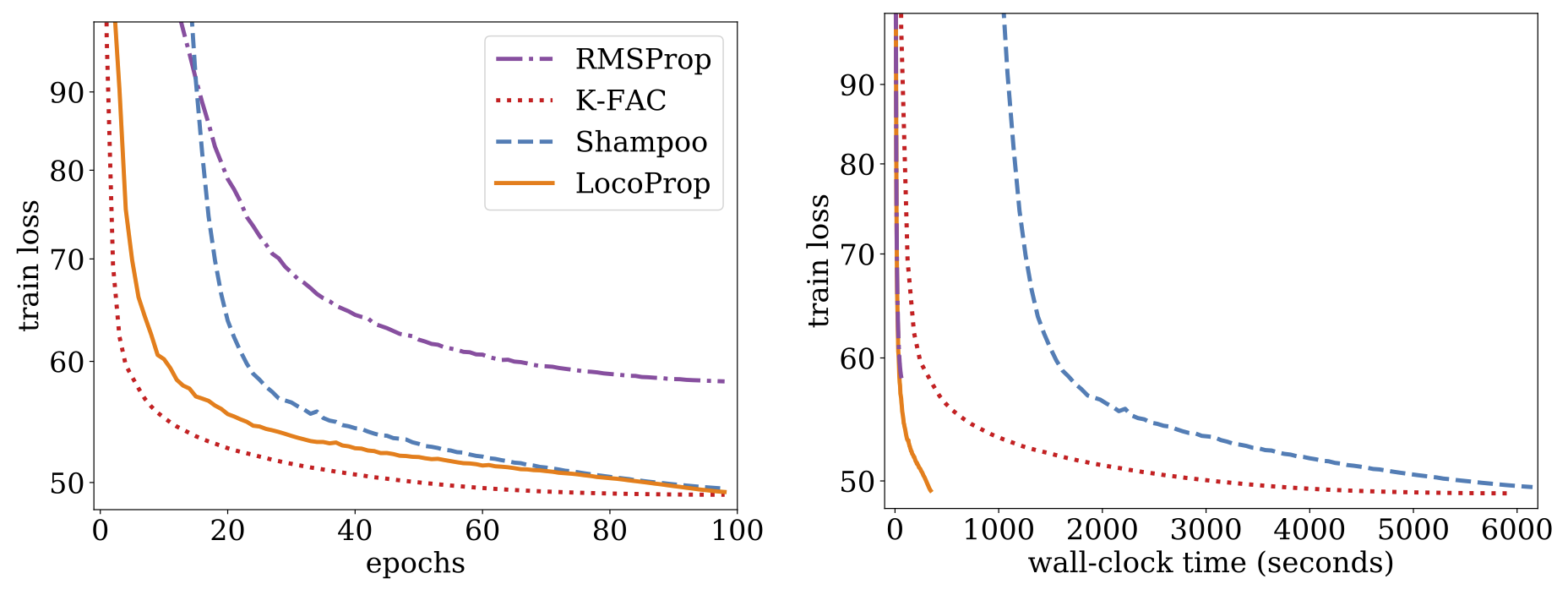

In “LocoProp: Enhancing BackProp via Local Loss Optimization”, we introduce a new framework for training DNN models. Our new framework, LocoProp, conceives of neural networks as a modular composition of layers. Generally, each layer in a neural network applies a linear transformation to its inputs, followed by a non-linear activation function. In the new construction, each layer is allotted its own weight regularizer, output target, and loss function. The loss function of each layer is designed to match the activation function of the layer. Using this formulation, training minimizes the local losses for a given mini-batch of examples, iteratively and in parallel across layers. Our method performs multiple local updates per batch of examples using a first-order optimizer (like RMSProp), which avoids computationally expensive operations such as the matrix inversions required for higher-order optimizers. However, we show that the combined local updates look rather like a higher-order update. Empirically, we show that LocoProp outperforms first-order methods on a deep autoencoder benchmark and performs comparably to higher-order optimizers, such as Shampoo and K-FAC, without their high memory and computation requirements.

Method

Neural networks are generally viewed as composite functions that transform model inputs into output representations, layer by layer. LocoProp adopts this view while decomposing the network into layers. In particular, instead of updating the weights of a layer to minimize the loss function on the output, LocoProp applies pre-defined local loss functions specific to each layer. For a given layer, the loss function is selected to match the activation function, e.g., a tanh loss would be selected for a layer with a tanh activation. Each layerwise loss measures the discrepancy between the layer’s output (for a given mini-batch of examples) and a notion of a target output for that layer. Additionally, a regularizer term ensures that the updated weights do not drift too far from the current values. The combined layerwise loss function (with a local target) plus regularizer is used as the new objective function for each layer.
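A layer’s local objective can be written down directly. The sketch below assumes a fully connected layer (`x @ W` followed by an activation), a squared-error local loss, and a squared-Euclidean proximity regularizer weighted by a hypothetical `reg_strength`. The hypothetical `make_target` helper, which nudges the current output one step against the gradient of the final loss, is one plausible way to obtain a per-layer target and is an assumption here, not necessarily the paper’s exact choice.

```python
import numpy as np

def make_target(output, grad_output, step_size):
    """Hypothetical target: move the layer's current output against the
    gradient of the network's final loss with respect to that output."""
    return output - step_size * grad_output

def local_objective(W, W_current, x, target, activation, reg_strength):
    """Local loss to a fixed target plus a regularizer keeping W near W_current.

    x (batch, d_in) and target (batch, d_out) stay fixed during the local update.
    """
    output = activation(x @ W)
    local_loss = 0.5 * np.sum((output - target) ** 2) / x.shape[0]
    regularizer = 0.5 * reg_strength * np.sum((W - W_current) ** 2)
    return local_loss + regularizer

# Usage with random stand-ins for the layer input and the backpropagated gradient.
rng = np.random.default_rng(0)
x = rng.normal(size=(8, 4))
W_current = rng.normal(size=(4, 3))
output = np.tanh(x @ W_current)
grad_output = rng.normal(size=output.shape)  # stand-in for dLoss/dOutput
target = make_target(output, grad_output, step_size=0.1)
print(local_objective(W_current, W_current, x, target, np.tanh, reg_strength=1.0))
```

At the current weights the regularizer is zero, so the objective only measures how far the layer’s output is from its target; any local update must trade reducing that gap against moving away from `W_current`.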

Perhaps the simplest loss function one can think of for a layer is the squared loss. While the squared loss is a valid choice, LocoProp takes into account the possible non-linearity of the activation functions of the layers and applies layerwise losses tailored to the activation function of each layer. This enables the model to emphasize regions of the input that are more important for the model prediction while de-emphasizing the regions that do not affect the output as much. Below we show examples of tailored losses for the tanh and ReLU activation functions.

Loss functions induced by the (left) tanh and (right) ReLU activation functions. Each loss is more sensitive to the regions affecting the output prediction. For instance, the ReLU loss is zero as long as both the prediction (â) and the target (a) are negative. This is because the ReLU function applied to any negative number equals zero.
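Tailored losses like these can be constructed from the activation itself. A standard construction, assumed here rather than taken verbatim from the paper, is the matching loss: take a convex potential F whose derivative is the activation f, and measure the Bregman divergence D_F(ẑ, z) = F(ẑ) − F(z) − f(z)(ẑ − z) between a predicted pre-activation ẑ and a target pre-activation z. For tanh, F(z) = log cosh z; for ReLU, F(z) = ½ max(z, 0)². Writing the losses in pre-activation space is an assumption, though it agrees with the caption’s observation that the ReLU loss vanishes when both arguments are negative. The function names are hypothetical.

```python
import math

def tanh_potential(z):
    """F(z) = log(cosh(z)), whose derivative is tanh(z); computed stably for large |z|."""
    a = abs(z)
    return a + math.log1p(math.exp(-2.0 * a)) - math.log(2.0)

def relu_potential(z):
    """F(z) = 0.5 * max(z, 0)**2, whose derivative is max(z, 0)."""
    return 0.5 * max(z, 0.0) ** 2

def matching_loss(potential, activation, z_pred, z_target):
    """Bregman divergence D_F(z_pred, z_target) induced by the activation's potential F."""
    return (potential(z_pred) - potential(z_target)
            - activation(z_target) * (z_pred - z_target))

def tanh_loss(z_pred, z_target):
    return matching_loss(tanh_potential, math.tanh, z_pred, z_target)

def relu_loss(z_pred, z_target):
    return matching_loss(relu_potential, lambda z: max(z, 0.0), z_pred, z_target)

print(relu_loss(-1.0, -3.0))  # prints 0.0: both arguments are negative
print(tanh_loss(10.0, 8.0))   # tiny: tanh is saturated in this region
```

For example, `relu_loss(-1.0, -3.0)` is exactly zero, and `tanh_loss(10.0, 8.0)` is below 1e-6 because tanh barely changes between those inputs, whereas a squared loss on the same pre-activations would charge 0.5 · (10 − 8)² = 2.0 for the gap.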

After forming the objective in each layer, LocoProp updates the layer weights by repeatedly applying gradient descent steps on its objective. The update typically uses a first-order optimizer (like RMSProp). However, we show that the overall behavior of the combined updates closely resembles higher-order updates (shown below). Thus, LocoProp provides training performance close to what higher-order optimizers achieve without the high memory or computation needed for higher-order methods, such as matrix inverse operations. We show that LocoProp is a flexible framework that allows the recovery of well-known algorithms and enables the construction of new algorithms via different choices of losses, targets, and regularizers. LocoProp’s layerwise view of neural networks also allows updating the weights in parallel across layers.
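The inner loop can be sketched for a single tanh layer. This sketch assumes a tanh matching loss (the Bregman divergence of F(z) = log cosh z, measured between predicted and target pre-activations) plus a squared-distance regularizer toward the starting weights `W0`. A convenient property of this loss is that its gradient with respect to the pre-activation is simply tanh(ẑ) − tanh(z), so no derivative of the activation is needed. Because each layer’s input `x` and target stay fixed during the local solve, the same routine could run on every layer independently. The learning rate, decay, regularization strength, step count, and function names are illustrative assumptions, not the paper’s settings, and this is not the released LocoProp code.

```python
import numpy as np

def log_cosh(z):
    """Numerically stable log(cosh(z)), the potential whose derivative is tanh(z)."""
    a = np.abs(z)
    return a + np.log1p(np.exp(-2.0 * a)) - np.log(2.0)

def local_objective(W, W0, x, z_target, reg):
    """Mean tanh matching loss to target pre-activations plus a proximity term."""
    z = x @ W
    bregman = log_cosh(z) - log_cosh(z_target) - np.tanh(z_target) * (z - z_target)
    return bregman.sum() / x.shape[0] + 0.5 * reg * np.sum((W - W0) ** 2)

def local_objective_grad(W, W0, x, z_target, reg):
    """Gradient of local_objective with respect to W."""
    residual = np.tanh(x @ W) - np.tanh(z_target)  # d(matching loss)/d(pre-activation)
    return x.T @ residual / x.shape[0] + reg * (W - W0)

def rmsprop_local_solve(W0, x, z_target, reg=0.1, lr=0.01, decay=0.9, eps=1e-8, steps=100):
    """Run several RMSProp steps on one layer's local objective, starting from W0."""
    W = W0.copy()
    v = np.zeros_like(W)  # running average of squared gradients
    for _ in range(steps):
        g = local_objective_grad(W, W0, x, z_target, reg)
        v = decay * v + (1.0 - decay) * g * g
        W = W - lr * g / (np.sqrt(v) + eps)
    return W

rng = np.random.default_rng(0)
x = rng.normal(size=(32, 4))
W0 = 0.5 * rng.normal(size=(4, 3))
z_target = x @ W0 + rng.normal(size=(32, 3))  # hypothetical targets for illustration
W_new = rmsprop_local_solve(W0, x, z_target)
print(local_objective(W0, W0, x, z_target, 0.1), local_objective(W_new, W0, x, z_target, 0.1))
```

Every step here is a cheap, per-coordinate RMSProp update; no matrix is ever inverted, which is the cost profile the paragraph above contrasts with higher-order methods.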

Experiments

In our paper, we describe experiments on the deep autoencoder model, which is a commonly used baseline for evaluating the performance of optimization algorithms. We perform extensive tuning on multiple commonly used first-order optimizers, including SGD, SGD with momentum, AdaGrad, RMSProp, and Adam, as well as the higher-order Shampoo and K-FAC optimizers, and compare the results with LocoProp. Our findings indicate that LocoProp performs significantly better than first-order optimizers and comparably to higher-order optimizers, while running significantly faster on a single GPU.

Summary and Future Directions

We introduced a new framework, called LocoProp, for optimizing deep neural networks more efficiently. LocoProp decomposes neural networks into separate layers with their own regularizer, output target, and loss function and applies local updates in parallel to minimize the local objectives. While it uses first-order updates for the local optimization problems, the combined updates closely resemble higher-order update directions, both theoretically and empirically.

LocoProp provides the flexibility to choose the layerwise regularizers, targets, and loss functions. Thus, it allows the development of new update rules based on these choices. Our code for LocoProp is available online on GitHub. We are currently working on scaling up ideas from LocoProp to much larger models; stay tuned!

Acknowledgments

We would like to thank our co-author, Manfred K. Warmuth, for his critical contributions and inspiring vision. We would like to thank Sameer Agarwal for discussions on this work from a composite functions perspective, Vineet Gupta for discussions and development of Shampoo, Zachary Nado on K-FAC, Tom Small for development of the animation used in this blog post and, finally, Yonghui Wu and Zoubin Ghahramani for providing us with a nurturing research environment in the Google Brain Team.