The role of diversity has been a topic of discussion in various fields, from biology to sociology. However, a recent study from North Carolina State University’s Nonlinear Artificial Intelligence Laboratory (NAIL) opens an intriguing dimension to this discourse: diversity within artificial intelligence (AI) neural networks.

The Power of Self-Reflection: Tuning Neural Networks Internally

William Ditto, professor of physics at NC State and director of NAIL, and his team built an AI system that can “look inward” and adjust its neural network. The process allows the AI to determine the number, shape, and connection strength between its neurons, offering the potential for sub-networks with different neuronal types and strengths.

“We created a test system with a non-human intelligence, an artificial intelligence, to see if the AI would choose diversity over the lack of diversity and if its choice would improve the performance of the AI,” says Ditto. “The key was giving the AI the ability to look inward and learn how it learns.”

Unlike conventional AI that uses static, identical neurons, Ditto’s AI has the “control knob for its own brain,” enabling it to engage in meta-learning, a process that enhances its learning capacity and problem-solving skills. “Our AI could also decide between diverse or homogenous neurons,” Ditto states, “and we found that in every instance the AI chose diversity as a way to strengthen its performance.”

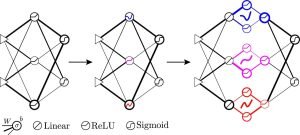

Progression from conventional artificial neural network to diverse neural network to learned diverse neural network. Line thicknesses represent weights.
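To make the idea of neurons that choose their own type more concrete, here is a minimal sketch of a single layer in which each neuron blends several candidate activation functions. It is an illustration under stated assumptions, not the NAIL team's implementation: the candidate functions, the softmax blending, and the names `ACTIVATIONS`, `diverse_layer`, and `type_logits` are all hypothetical. Only the forward pass is shown; the outer meta-learning loop that would tune the per-neuron preferences is left out.

```python
import numpy as np

# Hypothetical candidate neuron types (an assumption for illustration;
# the article does not list the activation functions the study used).
ACTIVATIONS = (np.tanh, np.sin, lambda z: np.maximum(z, 0.0))


def softmax(logits):
    """Row-wise softmax, shifted for numerical stability."""
    shifted = logits - logits.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)


def diverse_layer(x, weights, bias, type_logits):
    """Forward pass of one layer whose neurons each blend several activations.

    x:           (batch, n_in) inputs
    weights:     (n_in, n_out) connection strengths
    bias:        (n_out,) offsets
    type_logits: (n_out, n_types) per-neuron preferences over ACTIVATIONS;
                 these are the extra parameters an outer meta-learning loop
                 could adjust (that loop is not implemented here)
    """
    z = x @ weights + bias                                        # (batch, n_out)
    mix = softmax(type_logits)                                    # (n_out, n_types)
    candidates = np.stack([f(z) for f in ACTIVATIONS], axis=-1)   # (batch, n_out, n_types)
    return (candidates * mix).sum(axis=-1)                        # (batch, n_out)


rng = np.random.default_rng(0)
x = rng.normal(size=(4, 3))
weights = rng.normal(size=(3, 5))
bias = np.zeros(5)

# Homogeneous network: every neuron overwhelmingly prefers tanh.
homogeneous = np.tile([50.0, 0.0, 0.0], (5, 1))
# Diverse network: each neuron has its own mix of types.
diverse = rng.normal(size=(5, 3))

out_homogeneous = diverse_layer(x, weights, bias, homogeneous)
out_diverse = diverse_layer(x, weights, bias, diverse)
```

With the homogeneous preferences the layer behaves like an ordinary tanh layer, while the diverse preferences give each neuron a different response shape. A system that "looks inward" would treat `type_logits` as something to be learned alongside the weights.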

Performance Metrics: Diversity Trumps Uniformity

The research team measured the AI’s performance with a standard numerical classifying exercise and found remarkable results. Conventional AIs, with their static and homogenous neural networks, managed a 57% accuracy rate. In contrast, the meta-learning, diverse AI reached 70% accuracy.

According to Ditto, the diversity-based AI is up to 10 times more accurate at solving more complex tasks, such as predicting a pendulum’s swing or the motion of galaxies. “Indeed, we also observed that as the problems become more complex and chaotic, the performance improves even more dramatically over an AI that does not embrace diversity,” he elaborates.

The Implications: A Paradigm Shift in AI Development

The findings of this study have far-reaching implications for the development of AI technologies. They suggest a paradigm shift from the currently prevalent “one-size-fits-all” neural network models to dynamic, self-adjusting ones.

“We have shown that if you give an AI the ability to look inward and learn how it learns it will change its internal structure, the structure of its artificial neurons, to embrace diversity and improve its ability to learn and solve problems efficiently and more accurately,” Ditto concludes. This could be especially pertinent in applications that require high levels of adaptability and learning, from autonomous vehicles to medical diagnostics.

This research not only shines a spotlight on the intrinsic value of diversity but also opens up new avenues for AI research and development, underlining the need for dynamic and adaptable neural architectures. With ongoing support from the Office of Naval Research and other collaborators, the next phase of research is eagerly awaited.

By embracing the principles of diversity internally, AI systems stand to gain significantly in terms of performance and problem-solving abilities, potentially revolutionizing our approach to machine learning and AI development.