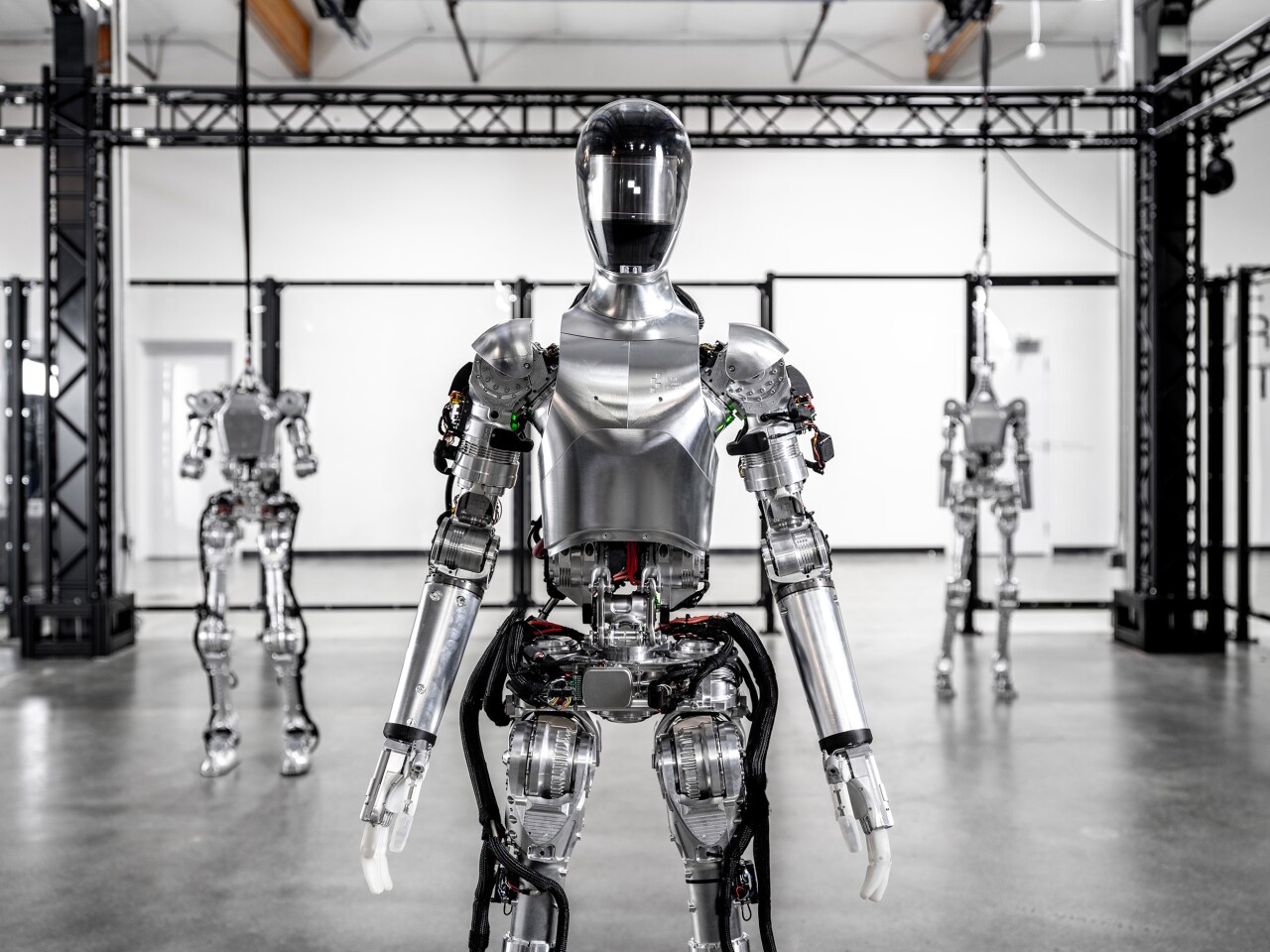

After just 12 months of development, Figure has released video footage of its humanoid robot walking, and it's looking pretty sprightly compared with its commercial competition. It's our first look at a prototype that should be doing useful work within months.

Figure is taking a bluntly pragmatic approach to humanoid robotics. It doesn't care about running, jumping, or doing backflips; its robot is designed to get to work and make itself useful as quickly as possible, starting with simple jobs involving moving things around in a warehouse-type environment, and then expanding its abilities to take over more and more tasks.

Staffed by a group of some 60-odd humanoid and AI industry veterans that founder Brett Adcock lured away from leading companies like Boston Dynamics, Google DeepMind, Tesla and Apple, Figure is hitting the general-purpose robot worker space with the same breakneck speed that Adcock's former company Archer did when it arrived late to the eVTOL party.

Check out the video below, showing "dynamic bipedal walking," which the team achieved in less than 12 months. Adcock believes that's a record for a brand new humanoid initiative.

Figure Status Update - Dynamic Walking

It's a short video, but the Figure prototype moves relatively quickly and smoothly, compared with the somewhat unsteadier-looking gait demonstrated by Tesla's Optimus prototype back in May.

And while Figure's not yet ready to release video, Adcock tells us it's doing plenty of other things too: picking things up, moving them around and navigating the world, all autonomously, but not all simultaneously yet.

The team's goal is to show this prototype doing useful work by the end of the year, and Adcock is confident that they'll get there. We caught up with him for a video chat, and what follows below is an edited transcript.

Figure

Loz: Can you explain a little more about torque-controlled walking versus position- and velocity-based walking?

Brett Adcock: There's two different styles that folks have used throughout the years. Position and velocity control is basically just dictating the angles of all the joints; it's pretty prescriptive about how you walk, tracking the center of mass and center of pressure. So you kind of get a very Honda ASIMO style of walking. They call it ZMP, zero moment point.

It's kind of slow, they're always centering the weight over one of the feet. It's not very dynamic, in the sense that you can put pressure on the world and try to really understand and react to what's happening. The real world's not perfect, it's always a little bit messy. So basically, torque control allows us to measure torque, or moments, in the joints themselves. Every joint has a little torque sensor in it. And that allows us to be more dynamic in the environment. So we can understand what forces we're putting on the world and react to those instantaneously. It's much more modern and we think it's the path toward human-level performance in a very complex world.
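To make the distinction concrete, here is a minimal single-joint sketch in Python. It is not Figure's controller: the `JointState` type, the spring-damper (impedance-style) law, the gains, and the contact threshold are all illustrative assumptions. The 200 N·m limit simply echoes the knee and hip figure Adcock quotes later in the interview. The position command ignores contact entirely, while the torque command reads the joint's torque sensor and yields when it detects an unexpected load.

```python
from dataclasses import dataclass


@dataclass
class JointState:
    angle: float            # rad
    velocity: float         # rad/s
    measured_torque: float  # N·m, as reported by a joint torque sensor


def position_command(target_angle: float) -> float:
    """Position control: command the desired angle, blind to contact forces."""
    return target_angle


def torque_command(
    state: JointState,
    target_angle: float,
    stiffness: float = 80.0,          # N·m/rad, hypothetical gain
    damping: float = 4.0,             # N·m·s/rad, hypothetical gain
    torque_limit: float = 200.0,      # N·m, illustrative actuator limit
    contact_threshold: float = 30.0,  # N·m, hypothetical contact trigger
) -> float:
    """Torque control: a spring-damper pull toward the target that softens
    when the torque sensor reports a load above the contact threshold."""
    tau = stiffness * (target_angle - state.angle) - damping * state.velocity
    if abs(state.measured_torque) > contact_threshold:
        tau *= 0.5  # yield to the environment instead of fighting it
    # Clamp to what the actuator can deliver.
    return max(-torque_limit, min(torque_limit, tau))


if __name__ == "__main__":
    free = JointState(angle=0.0, velocity=0.0, measured_torque=0.0)
    touching = JointState(angle=0.0, velocity=0.0, measured_torque=50.0)
    print(position_command(0.5))          # same command with or without contact
    print(torque_command(free, 0.5))      # full spring-damper torque
    print(torque_command(touching, 0.5))  # softened once contact is sensed
```

The point of the sketch is only that the torque law has a sensed quantity to react to; a real humanoid would run whole-body control across many joints at a high rate.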

Is it analogous to the way that humans perceive and balance?

We kind of sense torque and pressure on objects, like we can touch the ground and we understand that we're touching the ground, things like that. So when you work with positions and velocities, you don't really know if you're making an impact with the world.

We're not the only ones; torque-controlled walking's what probably all the newest groups have done. Boston Dynamics does that with Atlas. It's at the bleeding edge of what the best groups in the world have been demonstrating. So this isn't the first time it's been demonstrated, but doing it is really difficult on both the software and hardware side. I think what's interesting for us here is that there's very few groups going after the commercial market that have hands, and that are dynamically walking through the world.

That's the first big check for us: to be able to technically show that we're able to do it, and do it well. And the next big push for us is to integrate all the autonomous systems into the robot, which we're doing actively right now, so that the robot can do end-to-end applications and useful work autonomously.

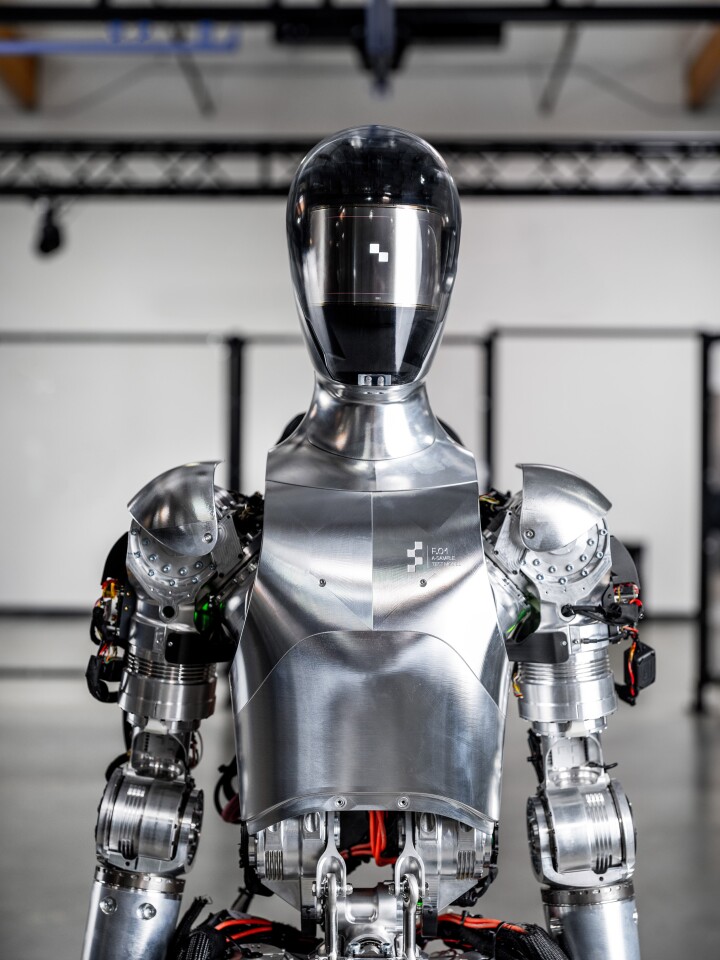

Alright, so I've got some pictures here of the robot prototype. Are these hands close to the ones that are already doing dynamic gripping and manipulation?

Yep. Those are our in-house designed hands, with silicone soft-grip fingertips. They're the hands that we'll use as we go into a production environment too.

Okay. And what's it able to do so far?

We've been able to do both single-handed and bimanual manipulation, grabbing objects with two hands and moving them around. We've been able to do manual manipulation of boxes and bins and other assets that we see in warehouse and manufacturing environments. We've also done single-handed grips of different consumer applications, so like, bags and chips and other types of things. And we've done these pretty successfully in our lab at this point.

Right. And this is via teleoperation, or have you got some autonomous actions running?

Both. Yeah, we've done teleoperation work, mostly from an AR training perspective, and then most of the work we've done is with fully end-to-end systems that are not teleoperated.

Okay, so it's picking things up by itself, and moving them around, and putting them down. And we're talking at the box scale here, or the smaller, more complex item level?

We've done some complex items and single-handed grabs, but we've done a bunch of work on being able to grab tote bins and other types of bins. So yeah, I'd say bins, boxes, carts, individual consumer items, we've been able to grab those successfully and move them around.

And the facial screen is integrated and up and running?

Yeah, based on what the robot's actually doing, we display a different type of application and design language on the screen. So we've done some early work on human-machine interaction, around how we're going to show the humans in the world what the robot's actively doing and what we'll be doing next. You want to know the robot's powered on, you wanna know, when it's actively in a task, what it plans to do after that task. We're looking at communicating intent via video and potentially audio.

Right, you might have it speaking?

Yep. It might want to know what to do next and might want instructions from you. You might want to ask it like, why are you doing this right now? And you might want a response back.

Right, so you're building that stuff in already?

We're trying to! We're doing early stuff there.

So what are the most powerful joints, and what kind of torque are those motors putting out?

The knee and hip have over 200 Newton meters.

Okay. And other than walking, can it squat down at this point?

We're not going to show it yet, but it can squat down, we've picked up bins and other things now, and moved them around. We have a whole shakeout routine with a lot of different movements, to test range of motion. Reaching up high and down low…

Morning yoga!

Tesla’s doing yoga, so perhaps we’ll be a yoga teacher.

Maybe the Pilates room is free! Very cool. So this is the fastest you're aware that any company has managed to get a robot up and walking by itself?

Yeah, I mean if you look at the time we spent toward engineering this robot, it's been like 12 months. I don't really know anybody that's gotten here better or faster. I don't really know. I happen to think it's probably one of the fastest in history to do it.

Okay. So what are you guys hoping to demonstrate next?

Next is for us to be able to do end-to-end commercial applications; real work. And to have all our autonomy and AI systems running on board. And then be able to move the kinds of items around that are central to what our customers need. Building more robots, and getting the autonomy working really well.

Okay. And how many robots have you got fully assembled at this point?

We have five units of that version in our facility, but they're at different maturity levels. Some are just the torso, some are fully built, some are walking, some are close to walking, things like that.

Gotcha. And have you started pushing them around with brooms yet?

Yeah, we've done a decent amount of push recovery testing… It always feels weird pushing the robot, but yeah, we've done a decent amount of that work.

We better push them while we can, right? They'll be pushing us soon enough.

Yeah, for sure!

Okay, so it's able to recover from a push while it's walking?

We haven't done that exact thing, but you can push it when it's standing. The focus really hasn't been making it robust to large disturbances while walking. It's mostly been to get the locomotion control right, then get this whole system doing end-to-end application work, and then we'll probably spend more time doing more robust push recovery and other things like that into early next year. We'll get the end-to-end applications running, and then we'll mature that, make it higher performance, make it faster. And then more robust, basically. We want you to see the robot doing real work this year, that's our goal.

Gotcha. Can it pick itself up from a fall at this stage?

We have designed it to do that, but we haven't demonstrated that.

It sounds like you've got most of the major building blocks in place, to get these early "pick things up and put them down" type of applications happening. Is it currently capable of walking while carrying a load?

Yeah, we've actually walked while carrying a load already.

Okay. So what are the key things you've still got to knock over before you can demonstrate that useful work?

It's really stitching everything together really well and making sure that perception systems can see the world, that we don't collide with the world, like, the knees don't hit when we go to grab things. Make sure the hands aren't colliding with the objects in the world. We have manipulation and perception policies on the AI side that we want to integrate into the system to do it fully end to end.

There's a lot of little things to look at. We want to do better motion planning, or do other types of control work to make it much more robust so it can do things over and over and recover from failures. So there's a whole host of smaller things that we're all trying to do well to stitch together. We've demonstrated the first basic end-to-end application in our lab, and we just need to make it much more robust.

What was that first application?

It's a warehouse and manufacturing-related objective… Basically, moving objects around our facility.

In terms of SLAM and navigation, perceiving the world, where's that stuff at?

We're localizing now in a map that we're building in real time. We have perception policies that are running in real time, including occupancy and object detection. Building a little 3D simulation of the world, and labeling objects to know what they are. This is what your Tesla does when it's driving down the road.

And then we have manipulation policies for grabbing objects we're going by, and then behaviors that are kind of helping to stitch that together. We have a big board about how to integrate all these streams and make them work reliably on the robot that we've developed in the last year.
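As a rough illustration of the "occupancy and object detection" idea Adcock describes, the Python sketch below drops labeled detections into a small 2D grid. This is a toy under stated assumptions, not Figure's perception stack: the function name `build_occupancy_grid`, the grid size, the cell size, and the `(x, y, label)` detection format are all hypothetical, and a real system would work in 3D with uncertainty estimates.

```python
from typing import List, Optional, Tuple

Detection = Tuple[float, float, str]  # (x in meters, y in meters, label); hypothetical format


def build_occupancy_grid(
    detections: List[Detection],
    size: int = 5,      # grid is size x size cells (illustrative)
    cell: float = 1.0,  # edge length of one cell in meters (illustrative)
) -> List[List[Optional[str]]]:
    """Return a grid where each occupied cell holds the label of the object
    detected there, and free or unknown cells hold None."""
    grid: List[List[Optional[str]]] = [[None] * size for _ in range(size)]
    for x, y, label in detections:
        col = int(x // cell)
        row = int(y // cell)
        # Ignore detections that fall outside the mapped area.
        if 0 <= row < size and 0 <= col < size:
            grid[row][col] = label
    return grid


if __name__ == "__main__":
    grid = build_occupancy_grid([(1.2, 3.7, "tote"), (9.0, 0.0, "cart")])
    for row in grid:
        print(row)
```

A planner could then treat any cell that is not `None` as an obstacle, or as a grasp target if its label matches the object it has been asked to move.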

Right. So it's all camera-based, is it? No time-of-flight kind of stuff?

As of right now, we're using seven RGB cameras. It's got 360-degree vision, it can see behind it, to the sides, it can look down.

So in terms of the walking video, is that one-to-one speed?

It's one to one, yeah.

It moves a bit!

It's awesome, right?

What sort of speed is it at this point?

Maybe a meter a second, maybe a little less.

Looks a bit quicker than a lot of the competition.

Yep. Looks pretty smooth too. So yeah, it's pretty good, right? It's probably some of the best walking I've seen out of some of the humanoids. Obviously Boston Dynamics has done a great job on the non-commercial research side, like PETMAN, that's probably the best humanoid walking gait of all time.

That was the military-looking thing, right?

Yeah, someone dressed him up in a gas mask.

He had some swagger, that guy! Are your leg motors and whatnot sufficient to start getting it running at some point, jumping, that sort of stuff? I know that's not really in your wheelhouse.

We don't want to do that stuff. We don't want to jump and do parkour and backflips and box jumps. Like, normal humans don't do that. We just want to do human work.

Thanks to Figure's Brett Adcock and Lee Randaccio for their assistance with this story.

Source: Figure