This is the second post in a series by Rockset’s CTO Dhruba Borthakur on Designing the Next Generation of Data Systems for Real-Time Analytics. We’ll be publishing more posts in the series in the near future, so subscribe to our blog so you don’t miss them!

Posts published so far in the series:

- Why Mutability Is Essential for Real-Time Data Analytics

- Handling Out-of-Order Data in Real-Time Analytics Applications

- Handling Bursty Traffic in Real-Time Analytics Applications

- SQL and Complex Queries Are Needed for Real-Time Analytics

- Why Real-Time Analytics Requires Both the Flexibility of NoSQL and Strict Schemas of SQL Systems

Companies everywhere have upgraded, or are currently upgrading, to a modern data stack, deploying a cloud native event-streaming platform to capture a variety of real-time data sources.

So why are their analytics still crawling through in batches instead of real time?

It’s probably because their analytics database lacks the features necessary to deliver data-driven decisions accurately in real time. Mutability is the most important capability, but close behind, and intertwined, is the ability to handle out-of-order data.

Out-of-order data are time-stamped events that, for a number of reasons, arrive after the initial data stream has been ingested by the receiving database or data warehouse.

In this blog post, I’ll explain why mutability is a must-have for handling out-of-order data, the three reasons why out-of-order data has become such an issue today, and how a modern mutable real-time analytics database handles out-of-order events efficiently, accurately and reliably.

The Challenge of Out-of-Order Data

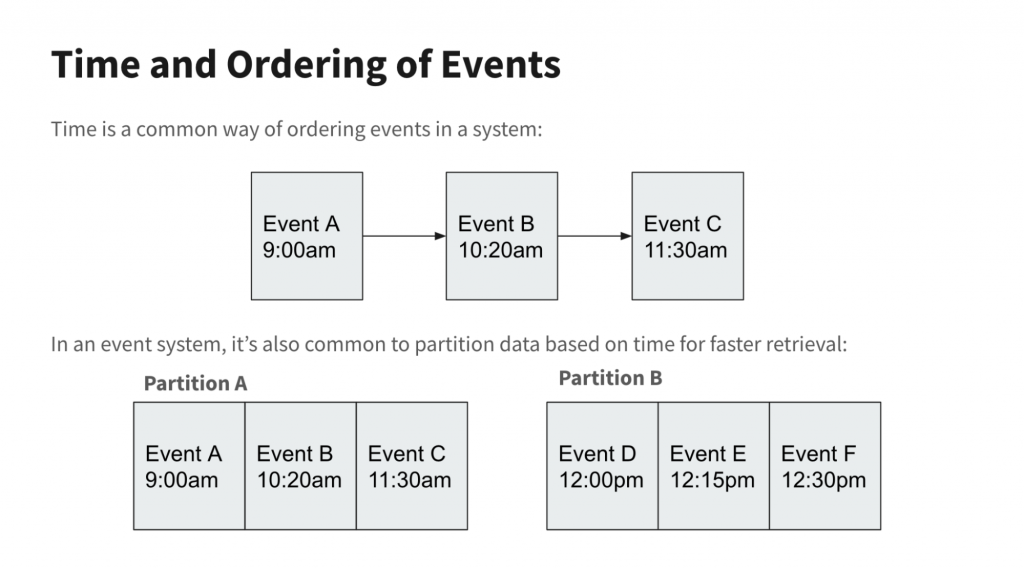

Streaming data has been around since the early 1990s under many names: event streaming, event processing, event stream processing (ESP), etc. Machine sensor readings, stock prices and other time-ordered data are gathered and transmitted to databases or data warehouses, which physically store them in time-series order for fast retrieval or analysis. In other words, events that are close in time are written to adjacent disk clusters or partitions.

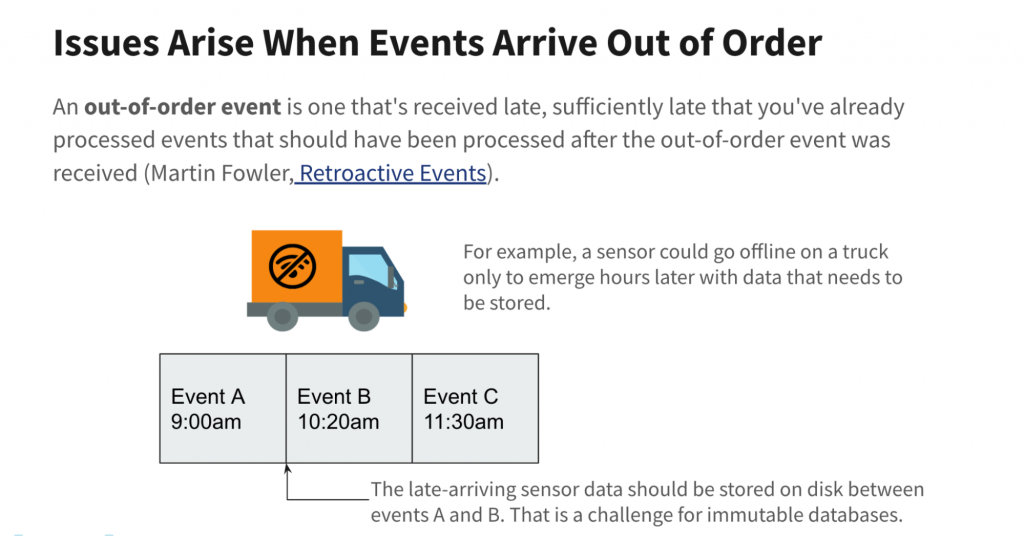

Ever since there has been streaming data, there has been out-of-order data. The sensor transmitting the real-time location of a delivery truck could go offline because of a dead battery or because the truck travels out of wireless network range. A web clickstream could be interrupted if the website or event publisher crashes or has internet problems. That clickstream data would need to be re-sent or backfilled, potentially after the ingesting database has already stored it.

Transmitting out-of-order data is not the issue. Most streaming platforms can resend data until they receive an acknowledgment from the receiving database that it has successfully written the data. This is called at-least-once semantics.
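The retry-until-acknowledged behavior can be sketched in a few lines. This is a minimal illustration rather than any specific streaming platform’s API: `FlakySink`, `send_at_least_once` and the `max_attempts` parameter are hypothetical names. Note that a lost acknowledgment leads to the same event being written more than once, which is one reason the receiving database has to cope with repeated and late data.

```python
class FlakySink:
    """Hypothetical receiver that writes every event but loses some acks."""

    def __init__(self, lost_acks):
        self.lost_acks = lost_acks  # number of acknowledgments to drop
        self.written = []           # everything the sink has stored

    def write(self, event):
        self.written.append(event)  # the write itself always succeeds
        if self.lost_acks > 0:
            self.lost_acks -= 1
            return False            # acknowledgment lost in transit
        return True                 # acknowledgment delivered


def send_at_least_once(sink, event, max_attempts=5):
    """Resend the event until the sink acknowledges it; return attempts used."""
    for attempt in range(1, max_attempts + 1):
        if sink.write(event):
            return attempt
    raise RuntimeError("no acknowledgment received")


sink = FlakySink(lost_acks=2)
attempts = send_at_least_once(sink, {"id": 7, "ts": 100})
```

In this toy run the producer stops only once an acknowledgment comes back, so the sink ends up holding duplicate copies of the event.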

The issue is how the downstream database stores updates and late-arriving data. Traditional transactional databases, such as Oracle or MySQL, were designed with the assumption that data would need to be continuously updated to maintain accuracy. Consequently, operational databases are almost always fully mutable so that individual records can be easily updated at any time.

Immutability and Updates: Costly and Risky for Data Accuracy

By contrast, most data warehouses, both on-premises and in the cloud, are designed with immutable data in mind, storing data to disk permanently as it arrives. All updates are appended rather than written over existing data records.

This has some benefits. It prevents accidental deletions, for one. For analytics, the key boon of immutability is that it enables data warehouses to accelerate queries by caching data in fast RAM or SSDs without worrying that the source data on disk has changed and become outdated.

(Martin Fowler: Retroactive Event)

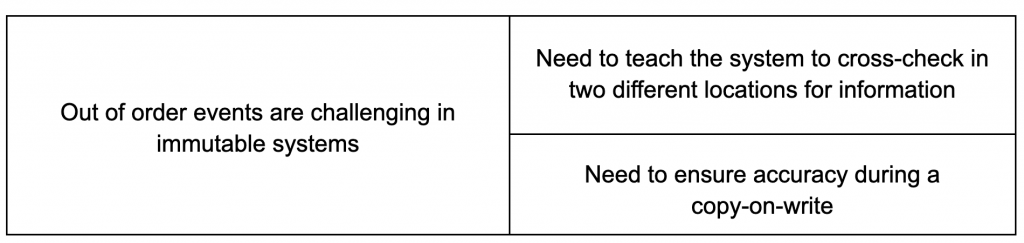

However, immutable data warehouses are challenged by out-of-order time-series data since no updates or changes can be inserted into the original data records.

In response, immutable data warehouse makers have been forced to create workarounds. One strategy used by Snowflake, Apache Druid and others is called copy-on-write. When events arrive late, the data warehouse writes the new data and rewrites already-written adjacent data in order to store everything correctly to disk in the right time order.

Another poor solution for dealing with updates in an immutable data system is to keep the original data in Partition A (see diagram above) and write late-arriving data to a different location, Partition B. The application, and not the data system, has to keep track of where all linked-but-scattered records are stored, as well as any resulting dependencies. This practice is called referential integrity, and it ensures that the relationships between the scattered rows of data are created and used as defined. Because the database does not provide referential integrity constraints, the onus is on the application developer(s) to understand and abide by these data dependencies.

Both workarounds have significant problems. Copy-on-write requires a significant amount of processing power and time, which is tolerable when updates are few but intolerably costly and slow as the amount of out-of-order data rises. For example, if 1,000 records are stored within an immutable blob and an update needs to be applied to a single record within that blob, the system would have to read all 1,000 records into a buffer, update the record, write all 1,000 records back to a new blob on disk, and then delete the old blob. This is hugely inefficient, expensive and time-wasting. It can rule out real-time analytics on data streams that occasionally receive data out of order.
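The 1,000-record example can be made concrete with a small sketch. It is only an in-memory stand-in under stated assumptions: a tuple plays the role of an immutable blob, a dict plays the role of a mutable store, and the function names `copy_on_write_update` and `in_place_update` are hypothetical.

```python
def copy_on_write_update(blob, key, new_value):
    """Return a new blob with one record changed, plus the records rewritten.

    `blob` is a tuple of (key, value) pairs standing in for an immutable file.
    """
    buffer = list(blob)                    # read every record into a buffer
    for i, (k, _) in enumerate(buffer):
        if k == key:
            buffer[i] = (k, new_value)     # apply the single update
    new_blob = tuple(buffer)               # write all records to a new blob
    return new_blob, len(new_blob)


def in_place_update(table, key, new_value):
    """Mutable store: touch only the one record; return records rewritten."""
    table[key] = new_value
    return 1


blob = tuple((i, "v0") for i in range(1000))
new_blob, cow_rewritten = copy_on_write_update(blob, 42, "v1")

table = dict(blob)
mutable_rewritten = in_place_update(table, 42, "v1")
```

Here the copy-on-write path rewrites all 1,000 records to change one of them, while the mutable path rewrites a single record. The old blob is left untouched and would still need to be deleted.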

Using referential integrity to keep track of scattered data has its own issues. Queries must be double-checked to confirm that they are pulling data from the right locations, or they run the risk of data errors. Just imagine the overhead and confusion for an application developer when accessing the latest version of a record. The developer must write code that inspects multiple partitions, then de-duplicates and merges the contents of the same record from multiple partitions before using it in the application. This significantly hinders developer productivity. Attempting any query optimizations such as data caching also becomes much more complicated and riskier when updates to the same record are scattered in multiple places on disk.
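The kind of code this pushes onto the application developer looks roughly like the sketch below. The record layout (`id`, `version`, `value`) and the helper `latest_records` are assumptions made for illustration; a real system would also have to deal with deletes, schema differences and partial updates.

```python
def latest_records(*partitions):
    """Merge partitions and keep only the newest version of each record.

    Each record is a dict with hypothetical fields `id`, `version`, `value`.
    """
    latest = {}
    for partition in partitions:
        for record in partition:
            current = latest.get(record["id"])
            if current is None or record["version"] > current["version"]:
                latest[record["id"]] = record
    return latest


partition_a = [
    {"id": "truck-1", "version": 1, "value": "depot"},
    {"id": "truck-2", "version": 1, "value": "highway"},
]
partition_b = [  # late-arriving corrections written to a separate location
    {"id": "truck-1", "version": 2, "value": "warehouse"},
]

merged = latest_records(partition_a, partition_b)
```

Every query path that needs the current state has to run this merge, or risk reading the stale copy in Partition A.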

The Problem with Immutability Today

All of the above problems were manageable when out-of-order updates were few and speed was less important. However, the environment has become much more demanding for three reasons:

1. Explosion in Streaming Data

Before Kafka, Spark and Flink, streaming came in two flavors: Business Event Processing (BEP) and Complex Event Processing (CEP). BEP provided simple monitoring and instant triggers for SOA-based systems management and early algorithmic stock trading. CEP was slower but deeper, combining disparate data streams to answer more holistic questions.

BEP and CEP shared three characteristics:

- They were offered by large enterprise software vendors.

- They were on-premises.

- They were unaffordable for most companies.

Then a new generation of event-streaming platforms emerged. Many (Kafka, Spark and Flink) were open source. Most were cloud native (Amazon Kinesis, Google Cloud Dataflow) or were commercially adapted for the cloud (Kafka ⇒ Confluent, Spark ⇒ Databricks). And they were cheaper and easier to start using.

This democratized stream processing and enabled many more companies to begin tapping into their pent-up supplies of real-time data. Companies that were previously locked out of BEP and CEP began to harvest website user clickstreams, IoT sensor data, cybersecurity and fraud data, and more.

Companies also began to embrace change data capture (CDC) in order to stream updates from operational databases (think Oracle, MongoDB or Amazon DynamoDB) into their data warehouses. Companies also started appending additional related time-stamped data to existing datasets, a process called data enrichment. Both CDC and data enrichment boosted the accuracy and reach of their analytics.

As all of this data is time-stamped, it can potentially arrive out of order. This influx of out-of-order events puts heavy pressure on immutable data warehouses, whose workarounds were not built with this volume in mind.

2. Evolution from Batch to Real-Time Analytics

When companies first deployed cloud native stream publishing platforms along with the rest of the modern data stack, they were fine if the data was ingested in batches and if query results took many minutes.

However, as my colleague Shruti Bhat points out, the world is going real time. To avoid disruption by cutting-edge rivals, companies are embracing e-commerce customer personalization, interactive data exploration, automated logistics and fleet management, and anomaly detection to prevent cybercrime and financial fraud.

These real-time and near-real-time use cases dramatically narrow the time windows for both data freshness and query speeds while amping up the risk of data errors. Supporting them requires an analytics database capable of ingesting both raw data streams and out-of-order data within a few seconds and returning accurate results in less than a second.

The workarounds employed by immutable data warehouses either ingest out-of-order data too slowly (copy-on-write) or in a complicated way (referential integrity) that slows query speeds and creates significant data accuracy risk. Besides creating delays that rule out real-time analytics, these workarounds also create extra cost.

3. Real-Time Analytics Is Mission Critical

Today’s disruptors are not only data-driven but are using real-time analytics to put competitors in the rear-view mirror. This can be an e-commerce website that boosts sales through personalized offers and discounts, an online e-sports platform that keeps players engaged through instant, data-optimized player matches, or a construction logistics service that ensures concrete and other materials reach builders on time.

The flip side, of course, is that complex real-time analytics is now absolutely vital to a company’s success. Data must be fresh, correct and up to date so that queries are error-free. As incoming data streams spike, ingesting that data must not slow down your ongoing queries. And databases must promote, not detract from, the productivity of your developers. That is a tall order, but it is especially difficult when your immutable database uses clumsy hacks to ingest out-of-order data.

How Mutable Analytics Databases Solve Out-of-Order Data

The solution is simple and elegant: a mutable cloud native real-time analytics database. Late-arriving events are simply written to the portions of the database where they would have been written if they had arrived on time in the first place.
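As a rough illustration of that idea, the sketch below keeps events sorted by timestamp and places a late arrival in the slot it would have occupied had it arrived on time. `TimeOrderedStore` is a hypothetical in-memory toy, not how Rockset or any other database is implemented. A real system would use an on-disk index rather than a Python list.

```python
import bisect


class TimeOrderedStore:
    """Toy mutable store that keeps events sorted by timestamp."""

    def __init__(self):
        self._timestamps = []
        self._events = []

    def insert(self, ts, payload):
        # Find the slot the event would have occupied had it arrived on time.
        pos = bisect.bisect_right(self._timestamps, ts)
        self._timestamps.insert(pos, ts)
        self._events.insert(pos, (ts, payload))

    def range(self, start, end):
        """Return events with start <= ts < end, in time order."""
        lo = bisect.bisect_left(self._timestamps, start)
        hi = bisect.bisect_left(self._timestamps, end)
        return self._events[lo:hi]


store = TimeOrderedStore()
for ts, payload in [(10, "a"), (20, "b"), (40, "d")]:
    store.insert(ts, payload)
store.insert(30, "c")  # late arrival lands between timestamps 20 and 40
```

Queries over a time range then see the late event in its proper position without any application-side merging.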

In the case of Rockset, a real-time analytics database that I helped create, individual fields in a data record can be natively updated, overwritten or deleted. There is no need for expensive and slow copy-on-writes, a la Apache Druid, or kludgy segregated dynamic partitions.

Rockset goes beyond other mutable real-time databases, though. Rockset not only continuously ingests data, but also can “rollup” the data as it is being generated. Using SQL to aggregate data as it is being ingested greatly reduces the amount of data stored (5-150x) as well as the amount of compute needed for queries (boosting performance 30-100x). This frees users from managing slow, expensive ETL pipelines for their streaming data.
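To show the general idea of aggregating at ingest time, here is a minimal Python sketch. Rockset expresses rollups in SQL, so this is not its API. `MinuteRollup`, the per-minute bucket size and the `count`/`total` fields are all assumptions chosen for illustration.

```python
from collections import defaultdict


class MinuteRollup:
    """Aggregate events into per-minute buckets as they are ingested."""

    def __init__(self):
        self.buckets = defaultdict(lambda: {"count": 0, "total": 0.0})

    def ingest(self, ts_seconds, amount):
        minute = ts_seconds // 60   # bucket key: index of the minute
        bucket = self.buckets[minute]
        bucket["count"] += 1        # mutable update, no rewrite of other data
        bucket["total"] += amount


rollup = MinuteRollup()
for ts, amount in [(5, 10.0), (30, 5.0), (65, 2.0), (130, 1.0)]:
    rollup.ingest(ts, amount)
rollup.ingest(70, 3.0)  # late event for minute 1 arrives after minute 2 data
```

Five raw events collapse into three stored buckets, and the late event simply updates the bucket it belongs to, which only works because the buckets are mutable.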

We also combined the underlying RocksDB storage engine with our Aggregator-Leaf-Tailer (ALT) architecture so that our indexes are instantly, fully mutable. That ensures all data, even freshly ingested out-of-order data, is available for accurate, ultra-fast (sub-second) queries.

Rockset’s ALT architecture also separates the tasks of storage and compute. This ensures smooth scalability if there are bursts of data traffic, including backfills and other out-of-order data, and prevents query performance from being impacted.

Finally, RocksDB’s compaction algorithms automatically merge old and updated data records. This ensures that queries access the latest, correct version of data. It also prevents data bloat that would hamper storage efficiency and query speeds.
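The merge step can be pictured with a heavily simplified sketch. It is not RocksDB’s actual compaction code, which works on sorted files on disk with its own policies. The function `compact`, the `(key, seq, value)` tuple layout and the use of `None` as a delete marker are assumptions for illustration.

```python
def compact(older, newer):
    """Merge two runs of (key, seq, value) records; the highest seq wins.

    A value of None is treated as a delete marker and dropped from the output.
    """
    latest = {}
    for key, seq, value in list(older) + list(newer):
        if key not in latest or seq > latest[key][0]:
            latest[key] = (seq, value)
    return [
        (key, seq, value)
        for key, (seq, value) in sorted(latest.items())
        if value is not None
    ]


older_run = [("a", 1, "x"), ("b", 2, "y"), ("c", 3, "z")]
newer_run = [("b", 4, "y2"), ("c", 5, None)]  # update b, delete c
compacted = compact(older_run, newer_run)
```

After the merge only one version of each live key remains, so superseded and deleted records stop taking up space or slowing down reads.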

In other words, a mutable real-time analytics database designed like Rockset provides high raw data ingestion speeds and the native ability to update and backfill records with out-of-order data, all without creating additional cost, data error risk, or work for developers and data engineers. This supports the mission-critical real-time analytics required by today’s data-driven disruptors.

In future blog posts, I’ll describe other must-have features of real-time analytics databases, such as handling bursty data traffic and complex queries. Or, you can skip ahead and watch my recent talk at the Hive on Designing the Next Generation of Data Systems for Real-Time Analytics, available below.

Embedded content: https://www.youtube.com/watch?v=NOuxW_SXj5M

Dhruba Borthakur is CTO and co-founder of Rockset and is responsible for the company’s technical direction. He was an engineer on the database team at Facebook, where he was the founding engineer of the RocksDB data store. Earlier at Yahoo, he was one of the founding engineers of the Hadoop Distributed File System. He was also a contributor to the open source Apache HBase project.

Rockset is the real-time analytics database in the cloud for modern data teams. Get faster analytics on fresher data, at lower costs, by exploiting indexing over brute-force scanning.