The Databricks Lakehouse Platform provides a unified set of tools for building, deploying, sharing, and maintaining enterprise-grade data solutions at scale. Databricks integrates with Google Cloud storage and security in your cloud account and manages and deploys cloud infrastructure on your behalf.

The overarching goal of this article is to mitigate the following risks:

- Data access from a browser on the internet or an unauthorized network using the Databricks web application.

- Data access from a client on the internet or an unauthorized network using the Databricks API.

- Data access from a client on the internet or an unauthorized network using the Cloud Storage (GCS) API.

- A compromised workload on the Databricks cluster writes data to an unauthorized storage resource on GCP or the internet.

Databricks supports several GCP-native tools and services that help protect data in transit and at rest. One such service is VPC Service Controls, which provides a way to define security perimeters around Google Cloud resources. Databricks also supports network security controls, such as firewall rules based on network or secure tags. Firewall rules allow you to control inbound and outbound traffic to your GCE virtual machines.
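To make the egress idea concrete, here is a minimal Python sketch of how an egress allow list behaves. A broad deny-all egress rule, combined with specific allow rules at higher priority, means traffic leaves the VPC only when the destination falls inside an allowed range. This is a simplified model for illustration, not the GCP firewall engine. It ignores rule priorities, protocols, ports, and network or secure tags, and the CIDR ranges are hypothetical placeholders.

```python
import ipaddress

# Hypothetical egress allow list; real ranges come from your own network design.
ALLOWED_EGRESS = [
    ipaddress.ip_network("10.0.0.0/16"),      # e.g. internal data sources
    ipaddress.ip_network("199.36.153.8/30"),  # e.g. private.googleapis.com range used with PGA
]

def egress_allowed(destination: str, allowed=ALLOWED_EGRESS) -> bool:
    """Return True if the destination IP is inside any allowed range (default deny)."""
    ip = ipaddress.ip_address(destination)
    return any(ip in network for network in allowed)

print(egress_allowed("10.0.12.5"))  # True
print(egress_allowed("8.8.8.8"))    # False
```

In a real deployment the equivalent decision is made by VPC firewall rules. The sketch only shows why a default-deny posture turns the allow rules into the complete list of permitted destinations.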

Encryption is another important component of data protection. Databricks supports several encryption options, including customer-managed encryption keys, key rotation, and encryption at rest and in transit. Databricks-managed encryption keys are used by default and are enabled out of the box. Customers can also bring their own encryption keys managed by Google Cloud Key Management Service (KMS).

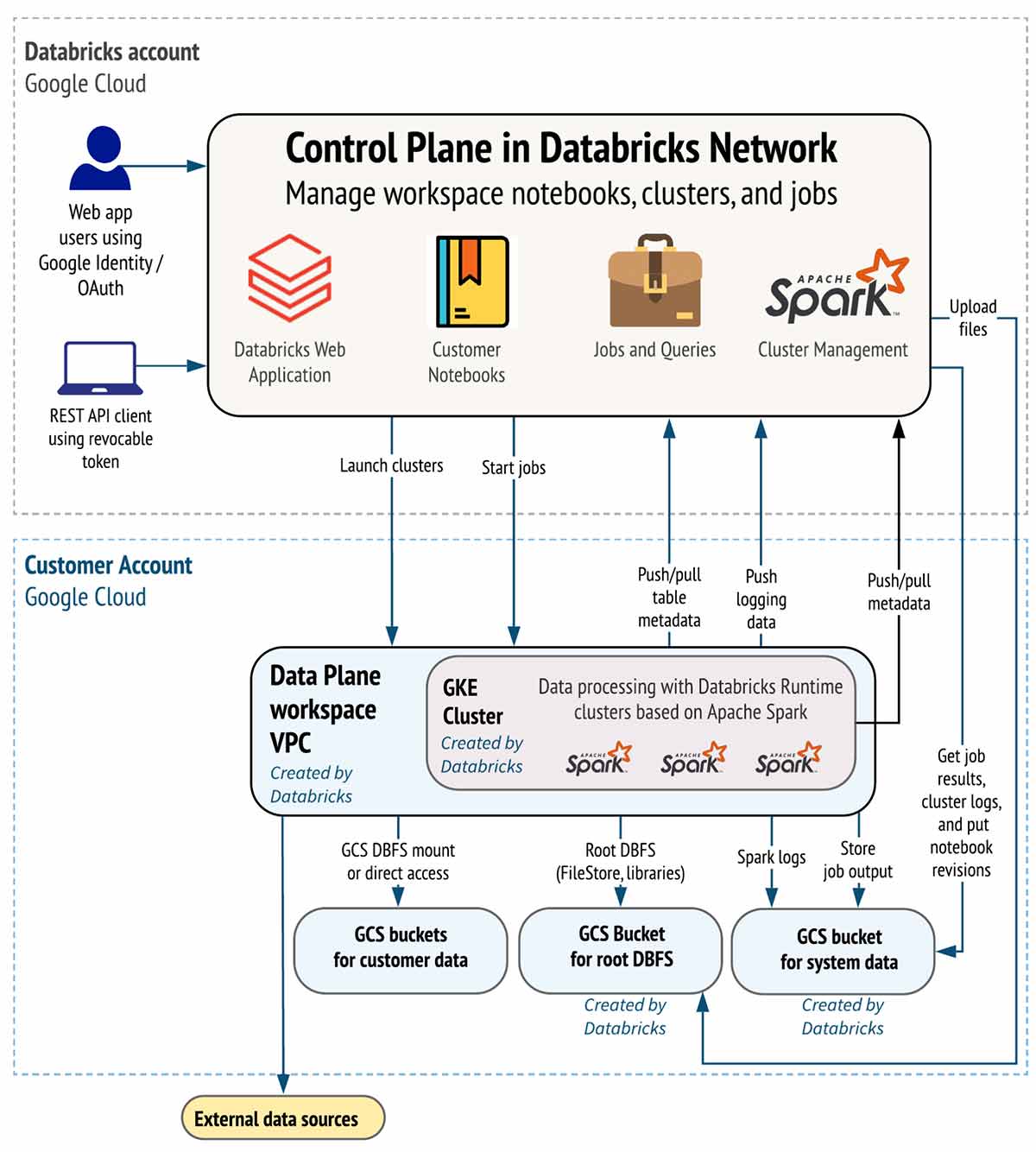

Before we begin, let's take a look at the Databricks deployment architecture here:

Databricks is structured to enable secure cross-functional team collaboration while keeping a significant amount of backend services managed by Databricks, so you can stay focused on your data science, data analytics, and data engineering tasks.

Databricks operates out of a control plane and a data plane.

- The control plane includes the backend services that Databricks manages in its own Google Cloud account. Notebook commands and other workspace configurations are stored in the control plane and encrypted at rest.

- Your Google Cloud account manages the data plane, which is where your data resides. This is also where data is processed. You can use built-in connectors so your clusters can connect to data sources to ingest data or for storage. You can also ingest data from external streaming data sources, such as events data, streaming data, IoT data, and more.

The following diagram represents the flow of data for Databricks on Google Cloud:

High-level Architecture

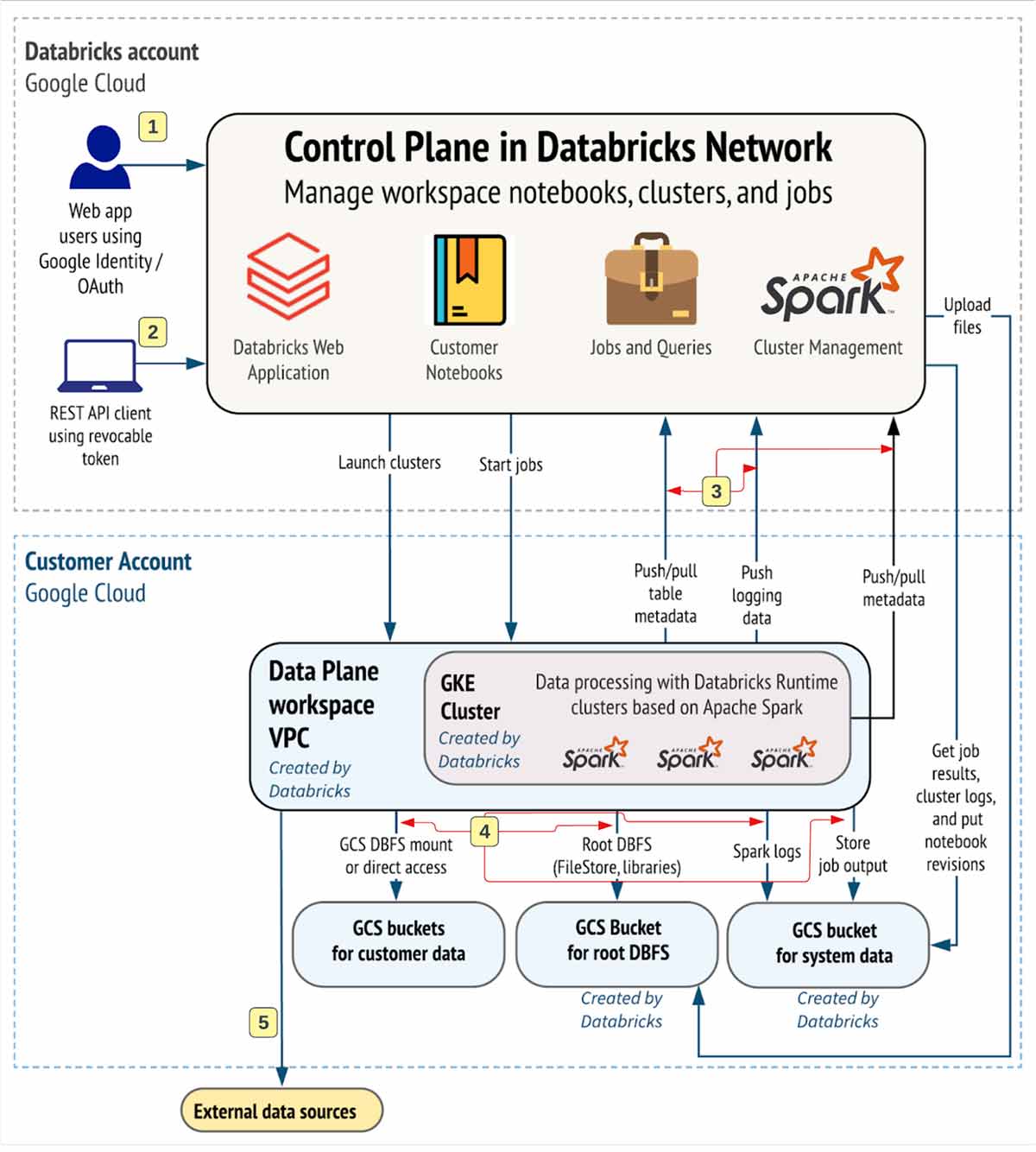

Network Communication Path

Let's look at the communication paths we want to secure. Databricks can be consumed by users and applications in numerous ways, as shown below:

A Databricks workspace deployment includes the following network paths to secure:

1. Users who access the Databricks web application, aka the workspace

2. Users or applications that access the Databricks REST APIs

3. Databricks data plane VPC network to the Databricks control plane service. This includes the secure cluster connectivity relay and the workspace connection for the REST API endpoints.

4. Data plane to your storage services

5. Data plane to external data sources, e.g. package repositories like PyPI or Maven

From an end-user perspective, paths 1 and 2 require ingress controls, and paths 3, 4, and 5 require egress controls.

In this article, our focus is to secure egress traffic from your Databricks workloads and to give the reader prescriptive guidance on the proposed deployment architecture. While we're at it, we'll also share best practices for securing ingress traffic (user/client into Databricks).

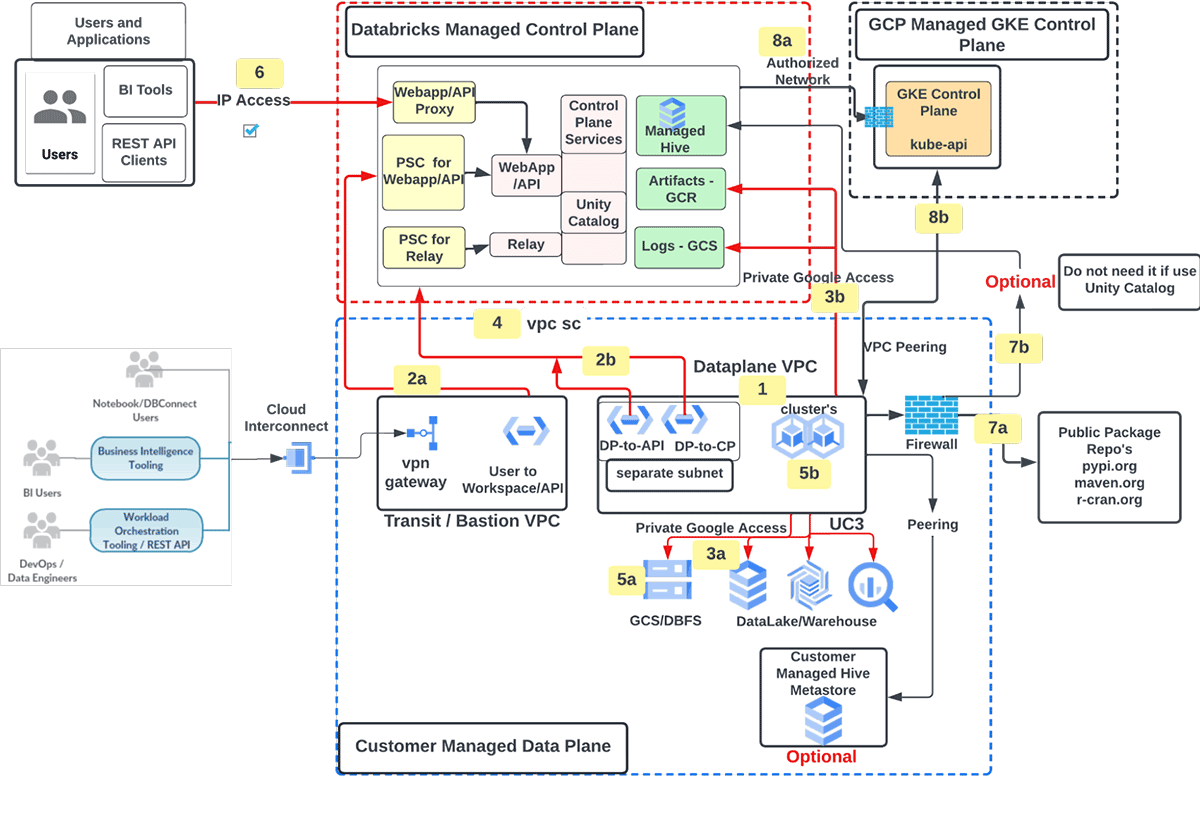

Proposed Deployment Architecture

Create a Databricks workspace on GCP with the following features:

- Customer-managed GCP VPC for workspace deployment

- Private Service Connect (PSC) for web application/APIs (frontend) and control plane (backend) traffic

  - User to web application / APIs

  - Data plane to control plane

- Traffic to Google services over Private Google Access

  - Customer-managed services (e.g. GCS, BQ)

  - Google Cloud Storage (GCS) for logs (health telemetry and audit) and Google Container Registry (GCR) for Databricks runtime images

- Databricks workspace (data plane) GCP project secured using VPC Service Controls (VPC SC)

- Customer-managed encryption keys

- Ingress control for the Databricks workspace/APIs using IP access lists

- Traffic to external data sources filtered via VPC firewall [optional]

  - Egress to public package repos

  - Egress to Databricks managed Hive

- Databricks to GCP managed GKE control plane

  - Databricks control plane to GKE control plane (kube-apiserver) traffic over an authorized network

  - Databricks data plane GKE cluster to GKE control plane over VPC peering

Essential Reading

Before you begin, please make sure that you are familiar with these topics:

Prerequisites

- A Google Cloud account.

- A Google Cloud project in the account.

- A GCP VPC with three precreated subnets; see the requirements here

- A GCP IP range for GKE master resources

- The Databricks Terraform provider, version 1.13.0 or higher. Always use the latest version of the provider.

- A Databricks on Google Cloud account in the project.

- A Google Account and a Google service account (GSA) with the required permissions.

  - The roles required to create a Databricks workspace are explained here. The GSA may provision additional resources beyond the Databricks workspace, for example a private DNS zone, A records, and PSC endpoints. For that reason, it is better to grant it the project owner role to avoid permission-related issues.

- On your local development machine, you must have:

  - The Terraform CLI: See Download Terraform on the website.

  - Terraform Google Cloud Provider: There are several options available here and here to configure authentication for the Google provider. Databricks doesn't have any preference for how Google provider authentication is configured.
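Subnet IP ranges can't be resized after workspace creation, so it's worth sanity-checking your planned ranges before you start. The Python sketch below uses hypothetical CIDR values. It checks that the node, pod, service, and GKE master ranges don't overlap and that the master range is a /28, as the variables table later requires. It doesn't replace the Databricks network requirements. It only catches obvious planning mistakes.

```python
import ipaddress
from itertools import combinations

# Hypothetical address plan; substitute the ranges you intend to create.
PLAN = {
    "node": "10.0.0.0/24",       # primary subnet
    "pod": "10.1.0.0/16",        # secondary range for pods
    "service": "10.2.0.0/20",    # secondary range for services
    "gke_master": "10.3.0.0/28", # GKE control plane range
}

def overlapping_pairs(plan: dict) -> list:
    """Return every pair of named ranges whose CIDR blocks overlap."""
    nets = {name: ipaddress.ip_network(cidr) for name, cidr in plan.items()}
    return [(a, b) for a, b in combinations(nets, 2) if nets[a].overlaps(nets[b])]

def master_range_ok(cidr: str) -> bool:
    """The GKE master range needs to be exactly a /28."""
    return ipaddress.ip_network(cidr).prefixlen == 28

print(overlapping_pairs(PLAN))              # []
print(master_range_ok(PLAN["gke_master"]))  # True
```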

Keep in Mind

- Both Shared VPC and standalone VPC are supported

- The Google Terraform provider supports OAuth2 access tokens for authenticating GCP API calls. That is what we use in this article to configure authentication for the Google Terraform provider.

  - The access tokens are short-lived (1 hour) and are not refreshed automatically

- The Databricks Terraform provider depends on the Google Terraform provider to provision GCP resources

- No changes are allowed after workspace creation, including resizing the subnet IP address space or changing the PSC endpoint configuration.

- If your Google Cloud organization policy has domain-restricted sharing enabled, make sure the policy's allowed list includes both the Google Cloud customer ID for Databricks (C01p0oudw) and your own organization's customer ID. See the Google article Setting the organization policy. If you need help, contact your Databricks representative before provisioning the workspace.

- Make sure that the service account used to create the Databricks workspace has the required roles and permissions.

- If you have VPC SC enabled on your GCP projects, please update it according to the ingress and egress rules listed here.

- Understand the IP address space requirements; a quick reference table is available here

- Here's a list of gcloud commands that you may find useful

- Databricks supports global access settings if you want the Databricks workspace (PSC endpoint) to be accessible from a resource running in a different region from the Databricks workspace.
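The OAuth2 access tokens last about one hour and are not refreshed automatically. A long Terraform run started with an old token can therefore fail partway through. The sketch below decides whether to fetch a fresh token before running Terraform, for example with `gcloud auth print-access-token`. The 10-minute safety margin is an arbitrary assumption, and the helper is illustrative rather than part of the deployment scripts.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

TOKEN_LIFETIME = timedelta(hours=1)    # access tokens are short-lived (1 hour)
SAFETY_MARGIN = timedelta(minutes=10)  # hypothetical buffer for long Terraform runs

def needs_new_token(issued_at: datetime, now: Optional[datetime] = None) -> bool:
    """Return True if a token issued at `issued_at` is expired or close to expiring."""
    now = now or datetime.now(timezone.utc)
    return now >= issued_at + TOKEN_LIFETIME - SAFETY_MARGIN

issued = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
print(needs_new_token(issued, now=issued + timedelta(minutes=30)))  # False
print(needs_new_token(issued, now=issued + timedelta(minutes=55)))  # True
```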

Deployment Information

There are a number of methods to implement the proposed deployment structure

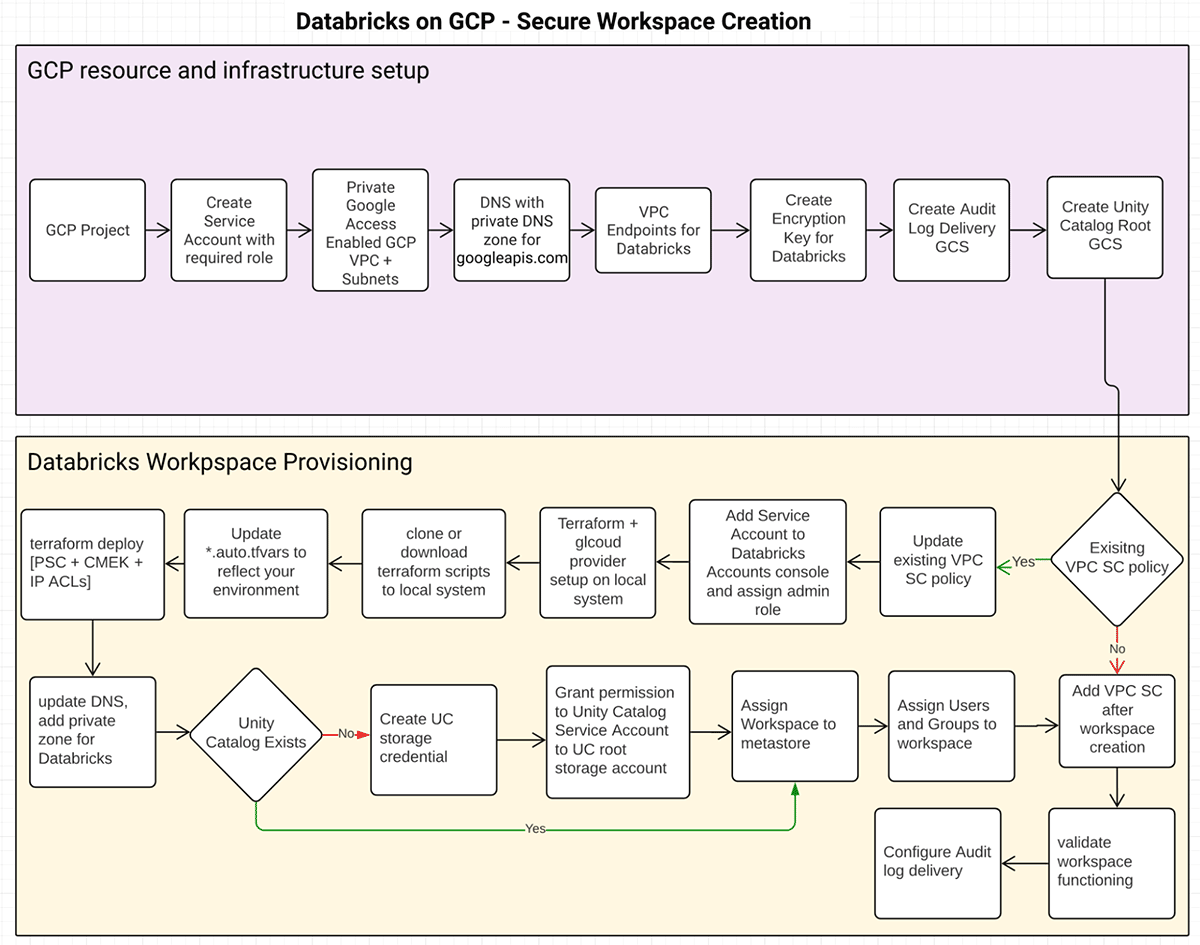

No matter the method you utilize, the useful resource creation circulate would seem like this:

GCP useful resource and infrastructure setup

This can be a prerequisite step. How the required infrastructure is provisioned, i.e. utilizing Terraform or Gcloud or GCP cloud console, is out of the scope of this text. This is a listing of GCP assets required:

| GCP Resource Type | Purpose | Details |
|---|---|---|
| Project | Create Databricks Workspace (ws) | Project requirements |
| Service Account | Used with Terraform to create ws | Databricks required roles and permissions. You may also need additional permissions, depending on the GCP resources you are provisioning. |
| VPC + Subnets | Three subnets per ws | Network requirements |
| Private Google Access (PGA) | Keeps traffic between the Databricks control plane VPC and the customer's VPC private | Configure PGA |
| DNS for PGA | Private DNS zone for private APIs | DNS Setup |
| Private Service Connect Endpoints | Makes Databricks control plane services available over private IP addresses. Private endpoints need to reside in their own, separate subnet. | Endpoint creation |
| Encryption Key | Customer-managed encryption key used with Databricks | Cloud KMS-based key that supports automatic key rotation. The key can be "software" or "HSM" (hardware-backed). |
| Google Cloud Storage Account for Audit Log Delivery | Storage for Databricks audit log delivery | Configure log delivery |
| Google Cloud Storage (GCS) Account for Unity Catalog | Root storage for Unity Catalog | Configure Unity Catalog storage account |
| Add or update VPC SC policy | Add Databricks-specific ingress and egress rules | Ingress and egress YAML, along with the gcloud command to create a perimeter. Databricks project numbers and PSC attachment URIs are available here. |
| Add/Update Access Level using Access Context Manager | Add the Databricks regional control plane NAT IP to your access policy so that ingress traffic is only allowed from an allow-listed IP | The list of Databricks regional control plane egress IPs is available here |

Create Workspace

- Clone the Terraform scripts from here

- To keep things simple, grant the project owner role to the GSA on the service and shared VPC projects

- Update the *.vars files to match your environment setup

| Variable | Details |
|---|---|
| google_service_account_email | [NAME]@[PROJECT].iam.gserviceaccount.com |
| google_project_name | PROJECT where the data plane will be created |
| google_region | E.g. us-central1; see supported regions |
| databricks_account_id | Locate your account ID |
| databricks_account_console_url | https://accounts.gcp.databricks.com |
| databricks_workspace_name | [ANY NAME] |
| databricks_admin_user | Provide at least one user email ID. This user will be made a workspace admin upon creation. This is a required field. |
| google_shared_vpc_project | PROJECT where the VPC used by the data plane is located. If you are not using Shared VPC, enter the same value as google_project_name |
| google_vpc_id | VPC ID |
| gke_node_subnet | NODE SUBNET name, aka PRIMARY subnet |
| gke_pod_subnet | POD SUBNET name, aka SECONDARY subnet |
| gke_service_subnet | SERVICE SUBNET name, aka SECONDARY subnet |
| gke_master_ip_range | GKE control plane IP address range. Needs to be /28 |
| cmek_resource_id | projects/[PROJECT]/locations/[LOCATION]/keyRings/[KEYRING]/cryptoKeys/[KEY] |
| google_pe_subnet | A dedicated subnet for private endpoints; recommended size /28. Please review the available network topology options before proceeding. This deployment uses the "Host Databricks users (clients) and the Databricks dataplane on the same network" option. |
| workspace_pe | Unique name, e.g. frontend-pe |
| relay_pe | Unique name, e.g. backend-pe |
| relay_service_attachment | List of regional service attachment URIs |
| workspace_service_attachment | List of regional service attachment URIs |
| private_zone_name | E.g. "databricks" |
| dns_name | gcp.databricks.com. (the trailing . is required) |
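Several of these variables have format rules that are easy to get wrong. The following Python sketch checks some of them before you run Terraform: the shape of the CMEK resource ID, the trailing dot on dns_name, the /28 GKE master range, a non-empty admin email, and unique private endpoint names. The dictionary keys mirror the variable names in the table above. The values are hypothetical, and the checks cover only the rules stated in the table, not everything Terraform or GCP validates.

```python
import ipaddress
import re

# projects/[PROJECT]/locations/[LOCATION]/keyRings/[KEYRING]/cryptoKeys/[KEY]
CMEK_PATTERN = re.compile(
    r"^projects/[^/]+/locations/[^/]+/keyRings/[^/]+/cryptoKeys/[^/]+$"
)

def validate_vars(v: dict) -> list:
    """Return a list of problems found in a dict of workspace variables."""
    problems = []
    if not CMEK_PATTERN.match(v.get("cmek_resource_id", "")):
        problems.append("cmek_resource_id must look like projects/.../cryptoKeys/...")
    if not v.get("dns_name", "").endswith("."):
        problems.append("dns_name needs a trailing '.'")
    try:
        if ipaddress.ip_network(v.get("gke_master_ip_range", "")).prefixlen != 28:
            problems.append("gke_master_ip_range needs to be a /28")
    except ValueError:
        problems.append("gke_master_ip_range is not a valid CIDR")
    if "@" not in v.get("databricks_admin_user", ""):
        problems.append("databricks_admin_user is required (a user email ID)")
    if v.get("workspace_pe") == v.get("relay_pe"):
        problems.append("workspace_pe and relay_pe need unique names")
    return problems

# Hypothetical example values.
example = {
    "cmek_resource_id": "projects/my-proj/locations/us-central1/keyRings/kr/cryptoKeys/key1",
    "dns_name": "gcp.databricks.com.",
    "gke_master_ip_range": "10.3.0.0/28",
    "databricks_admin_user": "admin@example.com",
    "workspace_pe": "frontend-pe",
    "relay_pe": "backend-pe",
}
print(validate_vars(example))  # []
```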

If you don't want to use the IP access list and would like to completely lock down workspace access (UI and APIs) from outside your corporate network, you would need to:

- Comment out the databricks_workspace_conf and databricks_ip_access_list resources in workspace.tf

- Change the public_access_enabled setting of the databricks_mws_private_access_settings resource from true to false in workspace.tf

  - Please note that the public_access_enabled setting cannot be changed after the workspace is created

- Make sure you have created Interconnect Attachments (aka vlanAttachments) so that traffic from on-premises networks can reach the GCP VPC where the private endpoints exist, over a dedicated interconnect connection.

Successful Deployment Check

Upon successful deployment, the Terraform output should look like this:

```
backend_end_psc_status = "Backend psc status: ACCEPTED"
front_end_psc_status = "Frontend psc status: ACCEPTED"
workspace_id = "workspace id: <UNIQUE-ID.N>"
ingress_firewall_enabled = "true"
ingress_firewall_ip_allowed = tolist([
  "xx.xx.xx.xx",
  "xx.xx.xx.xx/xx"
])
service_account = "Default SA attached to GKE nodes [NAME]@<PROJECT>.iam.gserviceaccount.com"
workspace_url = "https://<UNIQUE-ID.N>.gcp.databricks.com"
```
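If you want to automate this check, one option is to read the machine-readable form of the same outputs, produced by `terraform output -json`. That command prints each output as an object with `sensitive`, `type`, and `value` fields. The sketch below flattens that JSON and confirms that both PSC status strings end in `ACCEPTED`. The output names come from the listing above. The helper functions are hypothetical and not part of the deployment scripts.

```python
import json

def read_outputs(raw_json: str) -> dict:
    """Flatten `terraform output -json` into {output_name: value}."""
    return {name: meta["value"] for name, meta in json.loads(raw_json).items()}

def psc_accepted(outputs: dict) -> bool:
    """True if both the frontend and backend PSC status strings end with ACCEPTED."""
    keys = ("front_end_psc_status", "backend_end_psc_status")
    return all(str(outputs.get(k, "")).endswith("ACCEPTED") for k in keys)

# Example input in the shape produced by `terraform output -json` (abbreviated).
sample = json.dumps({
    "backend_end_psc_status": {"sensitive": False, "type": "string",
                               "value": "Backend psc status: ACCEPTED"},
    "front_end_psc_status": {"sensitive": False, "type": "string",
                             "value": "Frontend psc status: ACCEPTED"},
})
print(psc_accepted(read_outputs(sample)))  # True
```

In practice, you would pass in the stdout of `terraform output -json`, run in the Terraform working directory, instead of the sample string.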

Post Workspace Creation

- Validate that the DNS records are created; follow this doc to understand the required A records.

- Configure Unity Catalog (UC)

  - Assign the workspace to UC

  - Add users/groups to the workspace via UC Identity Federation

  - Auto-provision users/groups from your identity providers

- Configure Audit Log Delivery

- If you are not using UC and would like to use the Databricks managed Hive, add an egress firewall rule to your VPC as explained here
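To spot-check the DNS records from the first step, one option is to resolve the workspace hostname from a machine inside the VPC. It should resolve to private addresses (the PSC endpoint) rather than public ones. The sketch below uses only the Python standard library. The hostname is the placeholder from the Terraform output. Treating "every address is private" as success is a simplifying assumption. A strict check would compare the result against your actual frontend PSC endpoint IP.

```python
import ipaddress
import socket

def resolve_ipv4(hostname: str) -> list:
    """Return the sorted, distinct IPv4 addresses a hostname resolves to."""
    infos = socket.getaddrinfo(hostname, 443, family=socket.AF_INET, type=socket.SOCK_STREAM)
    return sorted({info[4][0] for info in infos})

def all_private(addresses: list) -> bool:
    """True if there is at least one address and every address is in a private range."""
    return bool(addresses) and all(ipaddress.ip_address(a).is_private for a in addresses)

# Usage, from a VM inside the VPC (replace the placeholder with your workspace host):
# ips = resolve_ipv4("<UNIQUE-ID.N>.gcp.databricks.com")
# print(ips, all_private(ips))
```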

Getting Started with Data Exfiltration Protection with Databricks on Google Cloud

We discussed using cloud-native security controls to implement data exfiltration protection for your Databricks on GCP deployments, all of which can be automated to enable data teams at scale. Some other things you may want to consider and implement as part of this project are: