In this four-part blog series, “Lessons learned from building Cybersecurity Lakehouses,” we’ll discuss a number of challenges organizations face with data engineering when building out a Lakehouse for cybersecurity data, and offer some solutions, tips, tricks, and best practices that we have used in the field to overcome them. If you want to build your own Cybersecurity Lakehouse, this series will educate you on the challenges and offer a way forward.

Databricks has built a practical low-code configuration solution for efficiently handling and standardizing cyber logs. Our Lakehouse platform simplifies data engineering, enabling a faster shift to search, analytics, and streamed threat detection. It complements your existing SIEM and SOAR systems, enhancing your cybersecurity operations without unnecessary complexity.

In part one, we begin with the most fundamental element of any cyber analytics engine: uniform event timestamp extraction. Accurate timestamps are among the most important elements in security operations and incident response. Without accuracy, reconstructing the sequence of actions taken by system users or bad actors is impossible. In this blog, we’ll look at some of the techniques available to identify, extract, and transform event timestamp information into a Delta Lake, so that the timestamps are usable within a cyber context.

Why is event time so important?

Machine-generated log data is messy at best. There are well-defined structures for specific file types (JSON, YAML, CSV, etc.), but the content and format of the data inside those files are largely left to the developers’ interpretation. While time format standards exist (ISO 8601), adherence to them is limited and subjective: perhaps the log formats predate these standards, or a geographic bias toward a particular format drives how the timestamps are written.

Despite the many time formats reported in logs, we are responsible for normalizing them to ensure interoperability across all log data received and analyzed in any cyber engine.

To emphasize the importance of interoperability between timestamps, consider some of the questions a typical security operations center (SOC) needs to answer daily.

- Which computer did the attacker compromise first?

- In what order did the attacker move from system to system?

- What activities occurred, and in what order, once the initial foothold had been established?

Without accurate and unified timestamps, it is impossible to build the timeline of activities needed to answer these questions effectively. Below, we examine some of the challenges and offer advice on how to approach them.

Timestamp Issues

Multiple or single column: Before considering how to parse an event timestamp, we must first isolate it. This may already happen automatically for some log formats or Spark read operations; for others, it is unlikely. For instance, Spark reads comma-separated values (CSV) files as individual columns. If one of those columns holds the timestamp, great! However, syslog data from a machine most likely lands as a single column, and the timestamp must be isolated using regular expressions.
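To make the contrast concrete, here is a minimal plain-Python sketch (not Spark) using two hypothetical sample records. The CSV reader hands back the timestamp as its own field, while the syslog-style line has to be matched with a regular expression; the pattern assumes a BSD-style (RFC 3164) timestamp at the start of the line.

```python
import csv
import io
import re

# Hypothetical sample records, for illustration only
CSV_RECORD = "2023-06-12T08:15:30Z,10.0.0.5,login_success\n"
SYSLOG_RECORD = "Jun 12 08:15:30 web01 sshd[4242]: Accepted password for alice"

# CSV: the reader splits the record into fields, so the timestamp is already isolated
csv_fields = next(csv.reader(io.StringIO(CSV_RECORD)))
csv_timestamp = csv_fields[0]

# Syslog-style text arrives as one string; a regex isolates the leading timestamp.
# Assumption: "Mmm dd HH:MM:SS" with a space-padded day, as in RFC 3164 headers.
SYSLOG_TS_REGEX = re.compile(r"^([A-Z][a-z]{2} [ \d]\d \d{2}:\d{2}:\d{2})")
match = SYSLOG_TS_REGEX.match(SYSLOG_RECORD)
syslog_timestamp = match.group(1) if match else None

print(csv_timestamp)     # 2023-06-12T08:15:30Z
print(syslog_timestamp)  # Jun 12 08:15:30
```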

Date and time formats: These cause a lot of confusion in log files. For instance, ‘12/06/12’ vs. ‘06/12/12’. Both formats are valid, but identifying the day, month, and year is challenging without knowing the local system’s log format.
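A quick way to see the ambiguity is to parse the same string under two conventions with Python’s standard `datetime.strptime`. Both calls succeed, yet they produce dates six months apart, so nothing in the string itself reveals which one is correct.

```python
from datetime import datetime

RAW_DATE = "12/06/12"

# The same string parses cleanly under two different conventions
day_first = datetime.strptime(RAW_DATE, "%d/%m/%y")    # day/month/year
month_first = datetime.strptime(RAW_DATE, "%m/%d/%y")  # month/day/year

print(day_first.date().isoformat())    # 2012-06-12
print(month_first.date().isoformat())  # 2012-12-06
```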

Timezone Identification: Similar to date and time formats, some systems report the timezone of the timestamp, while others assume local time and do not print the timezone at all. This may not be an issue if all data sources are reported and analyzed within the same time zone. However, in today’s connected and global world, organizations need to analyze tens or hundreds of log sources from multiple time zones.
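The sketch below shows why a timezone-less timestamp needs an assumed zone before it can be compared with anything else. The source names and offsets are hypothetical, and fixed UTC offsets are used for simplicity; they do not follow daylight-saving changes, so a real pipeline would more likely look up a named zone per source.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical: two sources log the same wall-clock time without a timezone
NAIVE_TEXT = "2023-06-12 09:00:00"
naive = datetime.strptime(NAIVE_TEXT, "%Y-%m-%d %H:%M:%S")

# Assumed per-source offsets (illustrative values, fixed offsets ignore DST)
SOURCE_OFFSETS = {
    "firewall_london": timezone(timedelta(hours=1)),   # UTC+01:00
    "proxy_new_york": timezone(timedelta(hours=-4)),   # UTC-04:00
}

def to_utc(naive_dt: datetime, source: str) -> datetime:
    """Attach the source's assumed offset, then convert the instant to UTC."""
    return naive_dt.replace(tzinfo=SOURCE_OFFSETS[source]).astimezone(timezone.utc)

london_utc = to_utc(naive, "firewall_london")
new_york_utc = to_utc(naive, "proxy_new_york")
print(london_utc.isoformat())    # 2023-06-12T08:00:00+00:00
print(new_york_utc.isoformat())  # 2023-06-12T13:00:00+00:00
```

Identical log text, five hours apart in reality: without the zone, a timeline would place these two events at the same moment.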

Identifying, extracting, and parsing event timestamps requires representing time consistently and effectively within our storage systems. Below is an example of how to extract and parse a timestamp from a syslog-style Apache web server log.

Extracting Timestamps Scenario

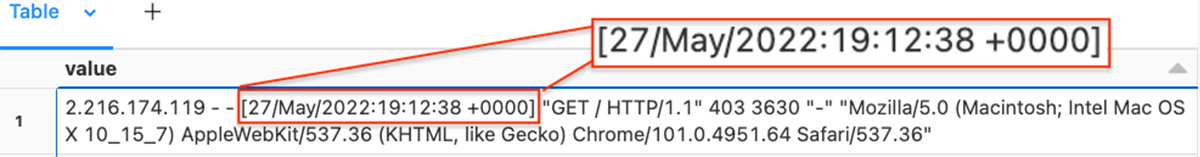

In the following example, we look at the standard Apache web server log format. The data is generated as a text record and is read as a single column (value) in Databricks. Therefore, we need to extract the event timestamp using a regular expression.

Example regex to extract the event timestamp from a single column of data:

from pyspark.sql.features import regexp_extract

TIMESTAMP_REGEX = '^([^ ]*) [^ ]* ([^ ]*) [([^]]*)]'

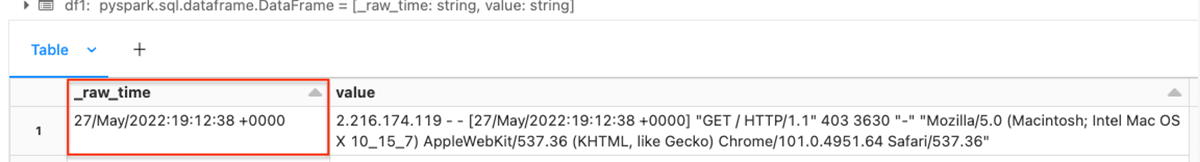

df1 = df.choose(regexp_extract("worth", TIMESTAMP_REGEX, 3).alias('_raw_time'), "*")

show(df1)We use the PySpark regexp_extract perform to extract the a part of the string that has the occasion timestamp, and create a column _raw_time with the matching characters.

Ensuing dataframe:
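The regular expression can be checked outside Spark with Python’s `re` module. This sketch uses the same capture-group layout against an illustrative Common Log Format line and, like Spark’s `regexp_extract`, falls back to an empty string when nothing matches.

```python
import re

# Same capture-group layout as the PySpark example; group 3 is the bracketed timestamp
TIMESTAMP_REGEX = re.compile(r'^([^ ]*) [^ ]* ([^ ]*) \[([^\]]*)\]')

# Sample line in Apache Common Log Format (values are illustrative)
LINE = '127.0.0.1 - frank [10/Oct/2000:13:55:36 -0700] "GET /apache_pb.gif HTTP/1.0" 200 2326'

match = TIMESTAMP_REGEX.match(LINE)
raw_time = match.group(3) if match else ""  # empty string on no match
print(raw_time)  # 10/Oct/2000:13:55:36 -0700
```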

Parsing Timestamps

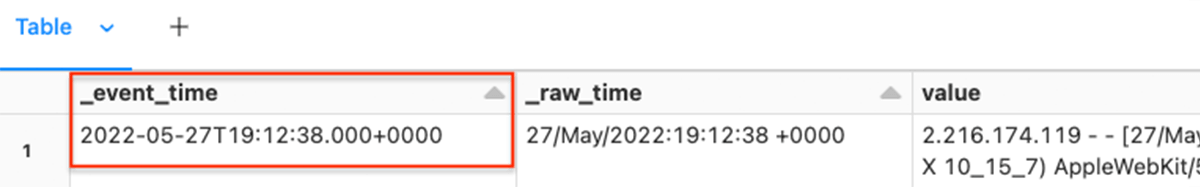

With the event timestamp extracted as a new column, we can now normalize it into an ISO 8601 standard timestamp.

To normalize the timestamp, we define the format using Spark’s datetime pattern letters and convert the string to a unix-style timestamp (seconds since the epoch) before transforming it into an ISO 8601 formatted timestamp.

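For this Apache log, the format is `dd/MMM/yyyy:HH:mm:ss Z`.

Example transformation to an ISO 8601 formatted event timestamp:

```python
from pyspark.sql.functions import col, unix_timestamp

# Spark datetime pattern for Apache timestamps such as 10/Oct/2000:13:55:36 -0700
TIMESTAMP_FORMAT = "dd/MMM/yyyy:HH:mm:ss Z"

df2 = df1.select(
    # unix_timestamp parses the string with the explicit pattern into epoch seconds;
    # casting those seconds to "timestamp" produces a TimestampType column
    unix_timestamp(col("_raw_time"), TIMESTAMP_FORMAT).cast("timestamp").alias("_event_time")
)

display(df2)
```

We use the PySpark function unix_timestamp to parse _raw_time with the explicit format, then cast the result to a timestamp to generate the new metadata column _event_time.

Resulting dataframe: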

The resulting column is cast to TimestampType to ensure consistency and data integrity.
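For readers who want to check the normalization logic without a cluster, here is a plain-Python analogue. The `strptime` directives are an assumed translation of the Spark pattern `dd/MMM/yyyy:HH:mm:ss Z`, and `%b` assumes an English (C) locale for month abbreviations. The function goes through the same steps the text describes: parse with an explicit format, convert to epoch seconds, and emit an ISO 8601 string in UTC.

```python
from datetime import datetime, timezone

# Python strptime directives assumed equivalent to Spark's "dd/MMM/yyyy:HH:mm:ss Z"
PY_TIMESTAMP_FORMAT = "%d/%b/%Y:%H:%M:%S %z"

def normalize(raw_time: str) -> str:
    """Parse an Apache timestamp and return it as an ISO 8601 string in UTC."""
    parsed = datetime.strptime(raw_time, PY_TIMESTAMP_FORMAT)  # offset-aware datetime
    epoch_seconds = int(parsed.timestamp())                     # unix-style timestamp
    return datetime.fromtimestamp(epoch_seconds, tz=timezone.utc).isoformat()

print(normalize("10/Oct/2000:13:55:36 -0700"))  # 2000-10-10T20:55:36+00:00
```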

Tips and best practices

In our journey helping many customers with cyber analytics, we have gathered some valuable advice and best practices that can significantly improve the ingest experience.

Explicit time format: When building parsers, explicitly setting the time format will significantly speed up parsing compared to passing a column to a generic library that must test many formats to find one that returns an accurate timestamp column.
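The sketch below contrasts the two approaches in plain Python. The candidate list and function names are hypothetical stand-ins for a generic "guessing" parser. Besides doing extra work per value, first-match guessing can also return a wrong but valid date for ambiguous input, which an explicit format avoids.

```python
from datetime import datetime
from typing import Optional

# Hypothetical candidate list a generic "guessing" parser might try, in order
CANDIDATE_FORMATS = ["%m/%d/%y", "%d/%m/%y", "%Y-%m-%d", "%d/%b/%Y:%H:%M:%S %z"]

def parse_explicit(raw: str, fmt: str) -> datetime:
    """One strptime call with the format the source is known to use."""
    return datetime.strptime(raw, fmt)

def parse_by_guessing(raw: str) -> Optional[datetime]:
    """Try each candidate until one succeeds; work grows with the list length."""
    for fmt in CANDIDATE_FORMATS:
        try:
            return datetime.strptime(raw, fmt)
        except ValueError:
            continue
    return None

# A day-first source: explicit parsing gives 12 June, guessing picks 6 December
explicit = parse_explicit("12/06/12", "%d/%m/%y")
guessed = parse_by_guessing("12/06/12")
print(explicit.date(), guessed.date())  # 2012-06-12 2012-12-06
```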

Column Naming: Prefix metadata columns with an underscore. This makes it easy to distinguish machine-generated data from metadata, with the added bonus of appearing left-justified by default in dataframes and tables.
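A small, hypothetical helper shows how the underscore convention lets code separate metadata from source columns by name alone, using the column names from the Apache example. It also shows that a plain sort places underscore-prefixed names ahead of lowercase ones, because `_` precedes lowercase letters in ASCII.

```python
from typing import List, Tuple

def split_columns(columns: List[str]) -> Tuple[List[str], List[str]]:
    """Separate underscore-prefixed metadata columns from source data columns."""
    metadata = [c for c in columns if c.startswith("_")]
    source = [c for c in columns if not c.startswith("_")]
    return metadata, source

# Column names from the Apache example: value is the raw record, the rest are metadata
cols = ["value", "_raw_time", "_event_time"]
meta, src = split_columns(cols)
print(meta, src)  # ['_raw_time', '_event_time'] ['value']

# "_" sorts before lowercase letters in ASCII, so metadata columns come first
print(sorted(cols))  # ['_event_time', '_raw_time', 'value']
```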

Event Time vs. Ingest Time: Delays occur in data transmission. Add a separate metadata column for ingest time, and build operational rigor to identify data sources that are currently behind or missing.
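One way to put ingest time to work is a per-source lag check, sketched below in plain Python. The 15-minute threshold, the source names, and the idea of an `_ingest_time` column are all illustrative assumptions; in Spark, such a column could be populated with `current_timestamp()` at write time. The check compares each source’s newest event time against the ingest time and flags sources that are late or absent.

```python
from datetime import datetime, timedelta, timezone
from typing import Dict, List

# Hypothetical threshold: a source is "behind" if its newest event is older than this
MAX_LAG = timedelta(minutes=15)

def sources_behind(latest_event_time: Dict[str, datetime],
                   ingest_time: datetime,
                   expected_sources: List[str]) -> Dict[str, str]:
    """Flag sources that are missing or whose newest event lags the ingest time."""
    status = {}
    for source in expected_sources:
        latest = latest_event_time.get(source)
        if latest is None:
            status[source] = "missing"
        elif ingest_time - latest > MAX_LAG:
            status[source] = "behind"
    return status

# Stands in for a hypothetical _ingest_time value
now = datetime(2023, 6, 12, 12, 0, tzinfo=timezone.utc)
latest = {
    "firewall": now - timedelta(minutes=2),
    "proxy": now - timedelta(hours=3),
}
print(sources_behind(latest, now, ["firewall", "proxy", "dns"]))
# {'proxy': 'behind', 'dns': 'missing'}
```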

Defaults: Plan for missing or undetermined timestamps. Things can and do go wrong, so make a deliberate decision about how to process records without a usable timestamp. Some of the tactics we have seen are:

- Set the date to zero (01/01/1970) and build operational rigor to identify and correct the data.

- Set the date to the current ingest time and build operational rigor to identify and correct the data.

- Fail the pipeline entirely.
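The three tactics can be expressed as a single fallback function, sketched here in plain Python. The strategy names and the exception class are hypothetical. In a Spark pipeline, the first two tactics map naturally onto `coalesce()` with a literal or an ingest-time column.

```python
from datetime import datetime, timezone
from typing import Optional

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)

class MissingTimestampError(ValueError):
    """Raised by the hypothetical 'fail' strategy for records without a timestamp."""

def resolve_event_time(event_time: Optional[datetime],
                       ingest_time: datetime,
                       strategy: str) -> datetime:
    """Apply one of three fallback tactics when the event timestamp is missing."""
    if event_time is not None:
        return event_time
    if strategy == "epoch":   # sentinel date; downstream checks can find and fix these
        return EPOCH
    if strategy == "ingest":  # best-effort substitute; also worth flagging downstream
        return ingest_time
    if strategy == "fail":    # stop processing entirely
        raise MissingTimestampError("record has no parseable event timestamp")
    raise ValueError(f"unknown strategy: {strategy}")

ingested = datetime(2023, 6, 12, 12, 0, tzinfo=timezone.utc)
print(resolve_event_time(None, ingested, "epoch").isoformat())   # 1970-01-01T00:00:00+00:00
print(resolve_event_time(None, ingested, "ingest").isoformat())  # 2023-06-12T12:00:00+00:00
```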

Conclusion

Well-formed and accurate event timestamps are critical for enterprise security operations and incident response, because they underpin the event sequences and timelines used to investigate cyber threats. Without interoperability across all data sources, it is impossible to maintain an effective security posture. Complexities such as regular expression extraction and parsing discrepancies between data sources make this harder. In helping many customers build out Cybersecurity Lakehouses, we have created practical solutions to speed up this process.

Get in Touch

In this blog, we worked through a single example of the many possible timestamp extraction issues encountered with semi-structured log files. If you want to learn more about how Databricks cyber solutions can empower your organization to identify and mitigate cyber threats, contact us and check out our new Lakehouse for Cybersecurity Applications webpage.