Coroutines are a wonderful way of writing asynchronous, non-blocking code in Kotlin. Think of them as lightweight threads, because that's exactly what they are. Lightweight threads aim to reduce context switching, a relatively expensive operation. Moreover, you can easily suspend and cancel them at any time. Sounds great, right?

After realizing all the benefits of coroutines, you decided to give them a try. You wrote your first coroutine and called it from a non-suspendible, regular function… only to find out that your code doesn't compile! You are now searching for a way to call your coroutine, but there are no clear explanations of how to do that. It seems you are not alone in this quest: This developer got so frustrated that he's given up on Kotlin altogether!

Does this sound familiar to you? Or are you still looking for the best ways to link coroutines to your non-coroutine code? If so, then this blog post is for you. In this article, we'll share the most fundamental coroutine gotcha that all of us stumbled upon during our coroutines journey: How do you call coroutines from regular, blocking code?

We'll show three different ways of bridging the gap between the coroutine and non-coroutine world:

- GlobalScope (better not)

- runBlocking (be careful)

- Suspend all the way (go ahead)

Before we dive into these methods, we'll introduce some concepts that will help you understand the different ways.

Suspending, blocking and non-blocking

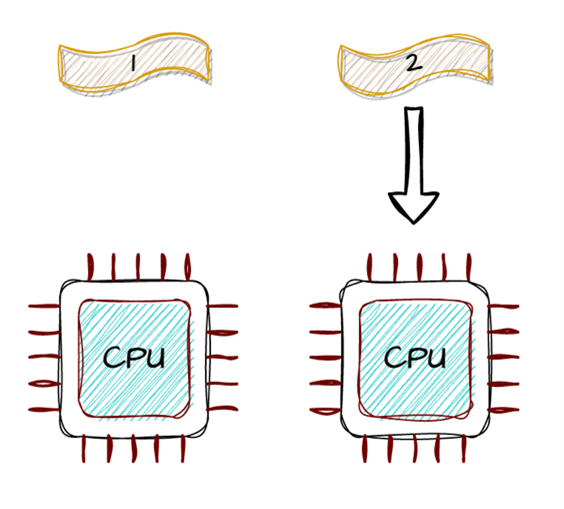

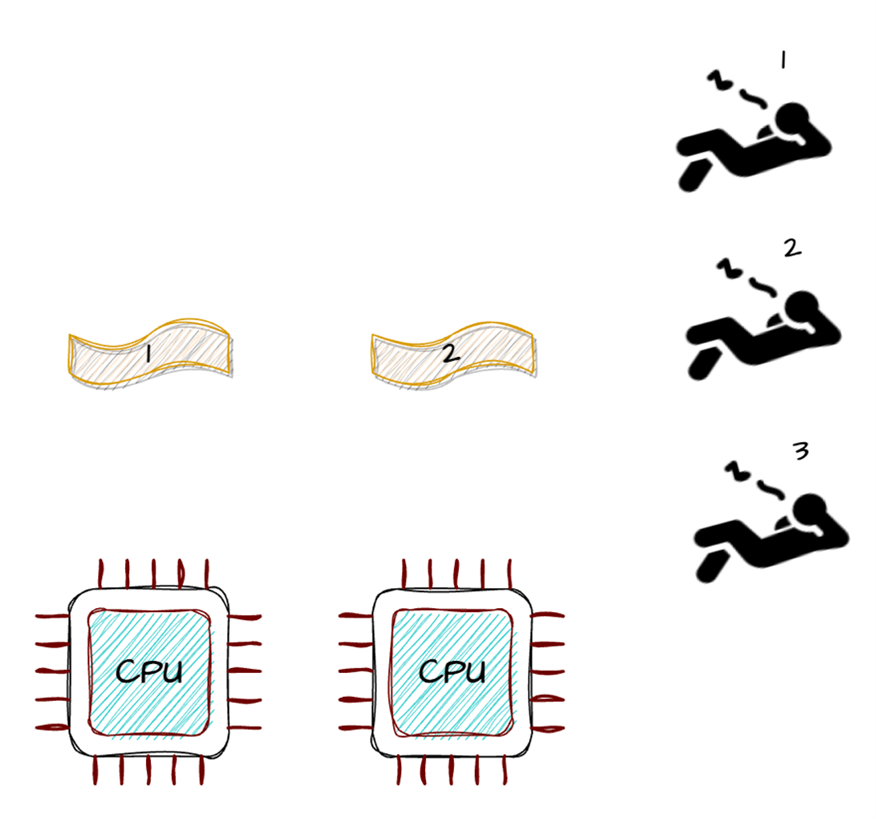

Coroutines run on threads and threads run on a CPU. To better understand our examples, it's helpful to visualize which coroutine runs on which thread and which CPU that thread runs on. So, we'll share our mental picture with you in the hope that it will help you understand the examples better.

As we mentioned before, a thread runs on a CPU. Let's start by visualizing that relationship. In the following picture, we can see that thread 2 runs on CPU 2, while thread 1 is idle (and so is the first CPU):

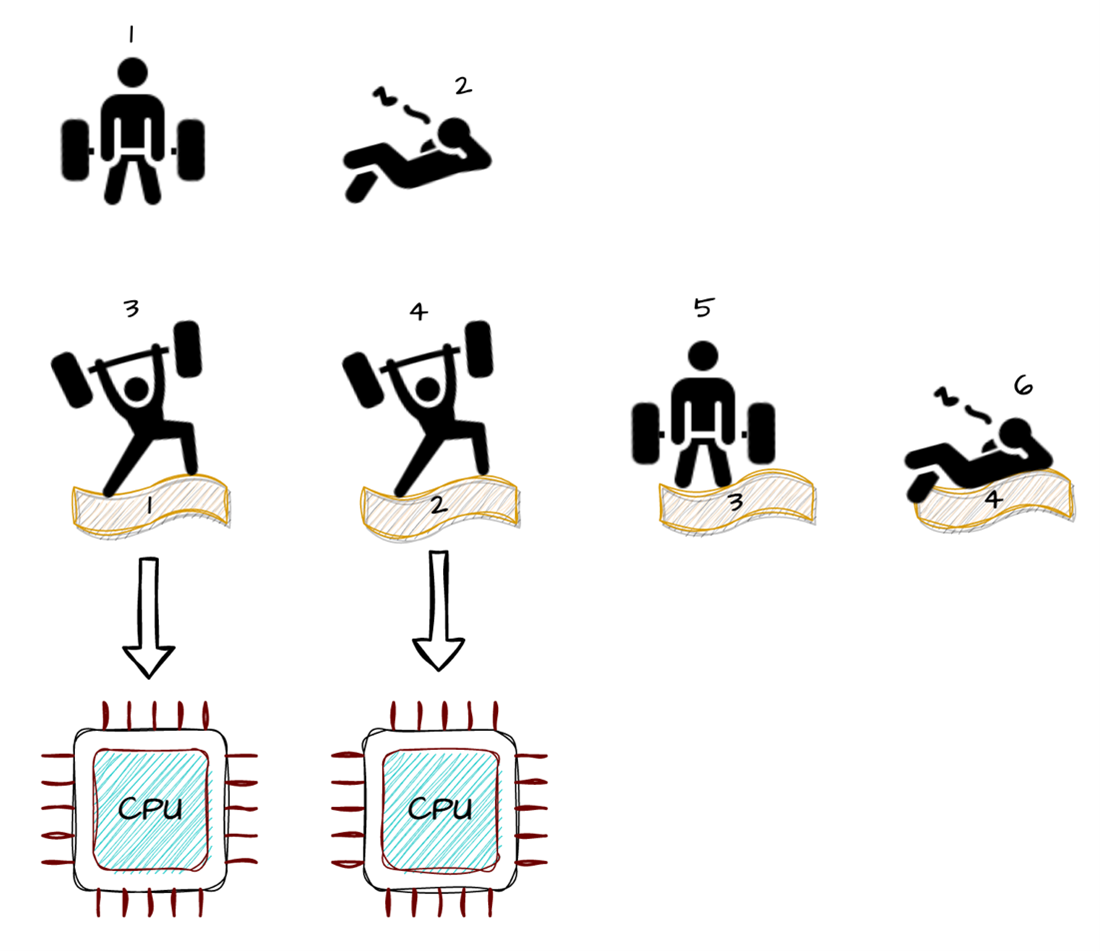

Put simply, a coroutine can be in one of three states. It can be:

1. Doing some work on a CPU (i.e., executing some code)

2. Waiting for a thread or CPU to do some work on

3. Waiting for some IO operation (e.g., a network call)

These three states are depicted below:

Recall that a coroutine runs on a thread. One important thing to note is that we can have more threads than CPUs and more coroutines than threads. This is completely normal because switching between coroutines is more lightweight than switching between threads. So, let's consider a scenario where we have two CPUs, four threads, and six coroutines. In this case, the following picture shows the possible scenarios that are relevant to this blog post.

Firstly, coroutines 1 and 5 are waiting to get some work done. Coroutine 1 is waiting because it doesn't have a thread to run on, whereas coroutine 5 does have a thread but is waiting for a CPU. Secondly, coroutines 3 and 4 are working, as they are running on a thread that is burning CPU cycles. Lastly, coroutines 2 and 6 are waiting for some IO operation to finish. However, unlike coroutine 2, coroutine 6 is occupying a thread while waiting.

With this information we can finally explain the last two concepts you need to know about: 1) coroutine suspension and 2) blocking versus non-blocking (or asynchronous) IO.

Suspending a coroutine means that the coroutine gives up its thread, allowing another coroutine to use it. For example, coroutine 4 could hand back its thread so that another coroutine, like coroutine 5, can use it. The coroutine scheduler ultimately decides which coroutine can go next.

We say an IO operation is blocking when a coroutine sits on its thread, waiting for the operation to finish. This is precisely what coroutine 6 is doing. Coroutine 6 didn't suspend, and no other coroutine can use its thread because it's blocking.

In this blog post, we'll use the following simple function that uses sleep to imitate both a blocking and a CPU-intensive task. This works because sleep has the peculiar feature of blocking the thread it runs on, keeping the underlying thread busy.

    import java.lang.Thread.sleep

    // Blocks the calling thread for `duration` milliseconds.
    private fun blockingTask(task: String, duration: Long) {
        println("Started $task task on ${Thread.currentThread().name}")
        sleep(duration)
        println("Ended $task task on ${Thread.currentThread().name}")
    }

Coroutine 2, however, is more courteous: it suspended and lets another coroutine use its thread while it's waiting for the IO operation to finish. It's performing asynchronous IO.

In what follows, we'll use a function asyncTask to simulate a non-blocking task. It looks just like our blockingTask; the only difference is that instead of sleep we use delay. As opposed to sleep, delay is a suspending function: it hands back its thread while waiting.

    import kotlinx.coroutines.delay

    // Suspends the coroutine for `duration` milliseconds without blocking its thread.
    private suspend fun asyncTask(task: String, duration: Long) {
        println("Started $task call on ${Thread.currentThread().name}")
        delay(duration)
        println("Ended $task call on ${Thread.currentThread().name}")
    }

Now that we have all the concepts in place, it's time to look at three different ways to call your coroutines.

Option 1: GlobalScope (better not)

Suppose we have a suspendible function that needs to call our blockingTask three times. We can launch a coroutine for each call, and each coroutine can run on any available thread:

    import kotlinx.coroutines.coroutineScope
    import kotlinx.coroutines.launch

    private suspend fun blockingWork() {
        // coroutineScope returns only after all three children have finished.
        coroutineScope {
            launch {
                blockingTask("heavy", 1000)
            }
            launch {
                blockingTask("medium", 500)
            }
            launch {
                blockingTask("light", 100)
            }
        }
    }

Think about this program for a while: How much time do you expect it will need to finish, given that we have enough CPUs to run three threads at the same time? And then there is the big question: How will you call the suspendible blockingWork function from your regular, non-suspendible code?

One possible way is to call your coroutine in GlobalScope, which is not bound to any job. However, using GlobalScope should be avoided, as it is clearly documented as not safe to use (other than in limited use cases). It can cause memory leaks, it is not bound to the principle of structured concurrency, and it is marked as @DelicateCoroutinesApi. But why? Well, run it like this and see what happens.

    import kotlinx.coroutines.GlobalScope
    import kotlinx.coroutines.launch
    import kotlin.system.measureTimeMillis

    private fun runBlockingOnGlobalScope() {
        // Fire and forget: launch returns immediately.
        GlobalScope.launch {
            blockingWork()
        }
    }

    fun main() {
        val durationMillis = measureTimeMillis {
            runBlockingOnGlobalScope()
        }
        println("Took: ${durationMillis}ms")
    }

Output:

    Took: 83ms

Wow, that was quick! But where did those print statements inside our blockingTask go? We only see how long it took to launch the coroutine, which is far too short for the work itself: blockingWork should take at least a second to finish, don't you agree? The coroutine was started in the background on a daemon thread, so when main returned, the JVM exited before the tasks could print anything. This is one of the obvious problems with GlobalScope: it's fire and forget. This also means that when you cancel your main calling function, all the coroutines that were triggered by it will continue running somewhere in the background. Say hello to memory leaks!

We could, of course, use job.join() to wait for the coroutine to finish. However, join is a suspending function, so it can only be called from a coroutine or from another suspending function. Below, you can see an example of that. As you can see, the whole function is still a suspendible function. So, we're back to square one.

    private suspend fun runBlockingOnGlobalScope() {
        val job = GlobalScope.launch {
            blockingWork()
        }
        job.join() // can only be called from a coroutine or another suspending function
    }

Another way to see the output would be to wait after calling GlobalScope.launch. Let's wait for two seconds and see if we can get the correct output:

    private fun runBlockingOnGlobalScope() {
        GlobalScope.launch {
            blockingWork()
        }
        sleep(2000) // blocks the calling thread and hopes the work is done by then
    }

    fun main() {
        val durationMillis = measureTimeMillis {
            runBlockingOnGlobalScope()
        }
        println("Took: ${durationMillis}ms")
    }

Output:

    Started light task on DefaultDispatcher-worker-4
    Started heavy task on DefaultDispatcher-worker-2
    Started medium task on DefaultDispatcher-worker-3
    Ended light task on DefaultDispatcher-worker-4
    Ended medium task on DefaultDispatcher-worker-3
    Ended heavy task on DefaultDispatcher-worker-2
    Took: 2092ms

The output seems to be correct now, but we blocked our main function for two seconds to ensure the work is done. But what if the work takes longer than that? What if we don't know how long the work will take? Not a very practical solution, do you agree?

Conclusion: Better not use GlobalScope to bridge the gap between your coroutine and non-coroutine code. To see its results you end up blocking the main thread for a guessed amount of time, and the coroutines it starts may cause memory leaks.

Option 2a: runBlocking for blocking work (be careful)

The second way to bridge the gap between the coroutine and non-coroutine world is to use the runBlocking coroutine builder. In fact, we see this being used all over the place. However, the documentation warns us about two things that can easily be overlooked. runBlocking:

- blocks the thread that it is called from

- should not be called from a coroutine

It's explicit enough that we should be careful with this runBlocking thing. To be honest, when we read the documentation, we struggled to understand how to use runBlocking properly. If you feel the same, it may be helpful to review the following examples that illustrate how easy it is to unintentionally degrade your coroutine performance or even block your program completely.

Clogging your program with runBlocking

Let's start with this example where we use runBlocking at the top level of our program:

    import kotlinx.coroutines.runBlocking

    private fun runBlocking() {
        // No dispatcher given: everything runs on the calling thread.
        runBlocking {
            println("Started runBlocking on ${Thread.currentThread().name}")
            blockingWork()
        }
    }

    fun main() {
        val durationMillis = measureTimeMillis {
            runBlocking()
        }
        println("Took: ${durationMillis}ms")
    }

Output:

    Started runBlocking on main
    Started heavy task on main
    Ended heavy task on main
    Started medium task on main
    Ended medium task on main
    Started light task on main
    Ended light task on main
    Took: 1807ms

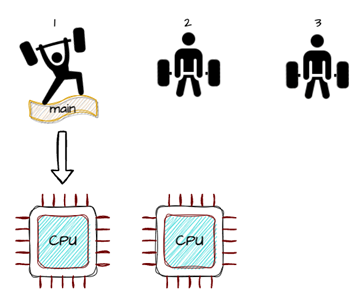

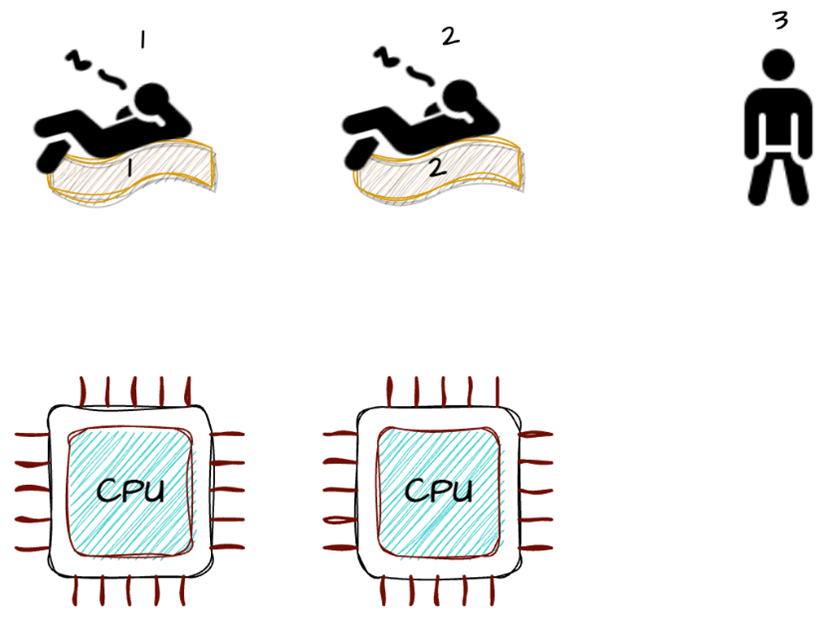

As you can see, the whole program took about 1800ms to complete. That's longer than the one second we expected it to take. This is because all our coroutines ran on the main thread and blocked the main thread the whole time! In a picture, this situation would look like this:

If you only have one thread, only one coroutine can do its work on this thread and all the other coroutines simply have to wait. So, all jobs wait for each other to finish, because they are all blocking calls waiting for this one thread to become free. See that CPU being unused there? Such a waste.

Unclogging runBlocking with a dispatcher

To offload the work to different threads, you need to make use of Dispatchers. You could call runBlocking with Dispatchers.Default to get the help of parallelism. This dispatcher uses a thread pool that has as many threads as your machine has CPU cores (with a minimum of two). We used Dispatchers.Default for the sake of the example; for blocking operations, Dispatchers.IO is the suggested choice.

    import kotlinx.coroutines.Dispatchers

    private fun runBlockingOnDispatchersDefault() {
        // The calling thread still blocks, but the coroutines run on the Default pool.
        runBlocking(Dispatchers.Default) {
            println("Started runBlocking on ${Thread.currentThread().name}")
            blockingWork()
        }
    }

    fun main() {
        val durationMillis = measureTimeMillis {
            runBlockingOnDispatchersDefault()
        }
        println("Took: ${durationMillis}ms")
    }

Output:

    Started runBlocking on DefaultDispatcher-worker-1
    Started heavy task on DefaultDispatcher-worker-2
    Started medium task on DefaultDispatcher-worker-3
    Started light task on DefaultDispatcher-worker-4
    Ended light task on DefaultDispatcher-worker-4
    Ended medium task on DefaultDispatcher-worker-3
    Ended heavy task on DefaultDispatcher-worker-2
    Took: 1151ms

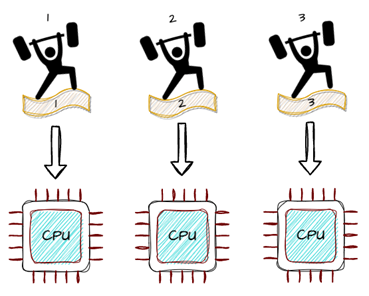

You can see that our blocking calls are now dispatched to different threads and run in parallel. If we have three CPUs (which our machine has), this situation will look as follows:

Recall that the tasks here are CPU intensive, meaning that they keep the thread they run on busy. So, we managed to perform a blocking operation in a coroutine and called that coroutine from our regular function. We used dispatchers to get the advantage of parallelism. All good.
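The remark about Dispatchers.IO can be sketched as follows. This is a minimal sketch rather than the code used for the measurements above: the function name runBlockingOnDispatchersIO is hypothetical, and blockingTask is repeated only to keep the snippet self-contained. We would expect the three tasks to overlap and the whole call to take a bit over one second, but the exact timing and thread names depend on your machine.

```kotlin
import kotlinx.coroutines.Dispatchers
import kotlinx.coroutines.launch
import kotlinx.coroutines.runBlocking
import kotlin.system.measureTimeMillis

// Same shape as blockingTask in the article: Thread.sleep blocks the thread it runs on.
private fun blockingTask(task: String, duration: Long) {
    println("Started $task task on ${Thread.currentThread().name}")
    Thread.sleep(duration)
    println("Ended $task task on ${Thread.currentThread().name}")
}

// Hypothetical helper: runs the three blocking tasks on Dispatchers.IO
// and returns the elapsed wall-clock time in milliseconds.
fun runBlockingOnDispatchersIO(): Long = measureTimeMillis {
    // runBlocking returns only after all launched children have finished.
    runBlocking(Dispatchers.IO) {
        launch { blockingTask("heavy", 1000) }
        launch { blockingTask("medium", 500) }
        launch { blockingTask("light", 100) }
    }
}

fun main() {
    println("Took: ${runBlockingOnDispatchersIO()}ms")
}
```

Dispatchers.IO is designed for code that parks its thread, so blocking tasks placed there do not compete with CPU-bound coroutines for the smaller Dispatchers.Default pool.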

But what about the non-blocking, suspendible calls that we mentioned in the beginning? What can we do about them? Read on to find out.

Option 2b: runBlocking for non-blocking work (be very careful)

Remember that we used sleep to mimic blocking tasks. In this section we use the suspending delay function to simulate non-blocking work. It doesn't block the thread it runs on, and while it is idly waiting, it releases the thread. It can continue running on a different thread when it is done waiting and ready to work. Below is a simple asynchronous call that is done by calling delay:

    private suspend fun asyncTask(task: String, duration: Long) {
        println("Started $task call on ${Thread.currentThread().name}")
        delay(duration)
        println("Ended $task call on ${Thread.currentThread().name}")
    }

The output of the examples that follow may vary depending on how many underlying threads and CPUs are available for the coroutines to run on. To ensure this code behaves the same on each machine, we'll create our own context with a dispatcher that has only two threads. This way we simulate running our code on two CPUs even if your machine has more than that:

    import kotlinx.coroutines.asCoroutineDispatcher
    import java.util.concurrent.Executors

    // A dispatcher backed by a fixed pool of exactly two threads.
    private val context = Executors.newFixedThreadPool(2).asCoroutineDispatcher()

Let's launch a couple of coroutines calling this task. We expect that every time the task waits, it releases the underlying thread, and another task can take the available thread to do some work. Therefore, even though the example below delays for a total of three seconds, we expect it to take only a bit longer than one second.

    private suspend fun asyncWork() {
        coroutineScope {
            launch {
                asyncTask("slow", 1000)
            }
            launch {
                asyncTask("another slow", 1000)
            }
            launch {
                asyncTask("yet another slow", 1000)
            }
        }
    }

To call asyncWork from our non-coroutine code, we use runBlocking again, but this time we use the context that we created above to take advantage of multi-threading:

    fun main() {
        val durationMillis = measureTimeMillis {
            runBlocking(context) {
                asyncWork()
            }
        }
        println("Took: ${durationMillis}ms")
    }

Output:

    Started slow call on pool-1-thread-2
    Started another slow call on pool-1-thread-1
    Started yet another slow call on pool-1-thread-1
    Ended another slow call on pool-1-thread-1
    Ended slow call on pool-1-thread-2
    Ended yet another slow call on pool-1-thread-1
    Took: 1132ms

Wow, finally a nice result! We have called our asyncTask from non-coroutine code, made economical use of the threads by using a dispatcher, and blocked the main thread for the least amount of time. If we take a picture exactly at the time all three coroutines are waiting for the asynchronous call to complete, we see this:

Note that both threads are now free for other coroutines to use, while our three async coroutines are waiting.
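One practical detail when you create your own dispatcher: a pool from Executors.newFixedThreadPool uses non-daemon threads, so the JVM keeps running until the pool is shut down. The sketch below shows one way to handle that, under the assumption that the pool is only needed for a single call. The function name runOnTwoThreadsAndClose is hypothetical and not part of the examples above.

```kotlin
import kotlinx.coroutines.asCoroutineDispatcher
import kotlinx.coroutines.delay
import kotlinx.coroutines.launch
import kotlinx.coroutines.runBlocking
import java.util.concurrent.Executors
import kotlin.system.measureTimeMillis

// Hypothetical helper: runs three suspending tasks on a two-thread pool and
// closes the pool afterwards. Returns the elapsed time in milliseconds.
fun runOnTwoThreadsAndClose(): Long =
    // ExecutorCoroutineDispatcher is Closeable, so `use` shuts the pool down
    // when the block finishes, even if it throws.
    Executors.newFixedThreadPool(2).asCoroutineDispatcher().use { context ->
        measureTimeMillis {
            runBlocking(context) {
                repeat(3) { index ->
                    launch {
                        println("Started call $index on ${Thread.currentThread().name}")
                        delay(1000) // suspends and hands the thread back to the pool
                        println("Ended call $index on ${Thread.currentThread().name}")
                    }
                }
            }
        }
    }

fun main() {
    println("Took: ${runOnTwoThreadsAndClose()}ms")
}
```

For a dispatcher that lives as long as the application, calling close() once during shutdown would serve the same purpose.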

However, it should be noted that the thread calling the coroutine is still blocked. So, you need to be careful where you use it. It's good practice to call runBlocking only at the top level of your application: from the main function or in your tests. What could happen if you don't do that? Read on to find out.

Turning non-blocking calls into blocking calls with runBlocking

Assume you have written some coroutines and you call them in your regular code by using runBlocking, just like we did before. After a while your colleagues decide to add a new coroutine call somewhere in your code base. They invoke their asyncTask using runBlocking, making the async call inside a non-coroutine function notSoAsyncTask. Assume your existing asyncWork function needs to call this notSoAsyncTask:

    // A regular function: runBlocking blocks the calling thread until asyncTask is done.
    private fun notSoAsyncTask(task: String, duration: Long) = runBlocking {
        asyncTask(task, duration)
    }

    private suspend fun asyncWork() {
        coroutineScope {
            launch {
                notSoAsyncTask("slow", 1000)
            }
            launch {
                notSoAsyncTask("another slow", 1000)
            }
            launch {
                notSoAsyncTask("yet another slow", 1000)
            }
        }
    }

The main function still runs on the same context you created before. If we now call the asyncWork function, we'll see different results than in our first example:

    fun main() {
        val durationMillis = measureTimeMillis {
            runBlocking(context) {
                asyncWork()
            }
        }
        println("Took: ${durationMillis}ms")
    }

Output:

    Started another slow call on pool-1-thread-1
    Started slow call on pool-1-thread-2
    Ended another slow call on pool-1-thread-1
    Ended slow call on pool-1-thread-2
    Started yet another slow call on pool-1-thread-1
    Ended yet another slow call on pool-1-thread-1
    Took: 2080ms

You may not even realize the problem immediately because instead of working for three seconds, the code works for two seconds, and this might even seem like a win at first glance. As you can see, our coroutines didn't do much async work: they didn't make use of their suspension points and just worked in parallel as much as they could. Since there are only two threads, one of our three coroutines waited for the first two coroutines, which were hanging on their threads doing nothing, as illustrated by this figure:

This is a significant issue because our code lost the suspension functionality by calling runBlocking in runBlocking.

If you experiment with the code we provided above, you'll discover that you lose all the structured concurrency benefits of coroutines. Cancellations and exceptions from child coroutines will not propagate as you would expect and won't be handled correctly.
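The loss of cancellation can be illustrated with a small experiment. This is a sketch under our own assumptions, not code from the examples above: the helper name finishedDespiteCancel and the 100 ms and 500 ms timings are hypothetical. It starts a coroutine, cancels it after 100 ms, and reports whether the work behind a 500 ms delay still ran.

```kotlin
import kotlinx.coroutines.Dispatchers
import kotlinx.coroutines.cancelAndJoin
import kotlinx.coroutines.delay
import kotlinx.coroutines.launch
import kotlinx.coroutines.runBlocking
import java.util.concurrent.atomic.AtomicBoolean

// Hypothetical helper: returns true if the work after the 500 ms delay
// completed even though the surrounding coroutine was cancelled after 100 ms.
fun finishedDespiteCancel(nestRunBlocking: Boolean): Boolean = runBlocking {
    val finished = AtomicBoolean(false)
    val job = launch(Dispatchers.Default) {
        if (nestRunBlocking) {
            // The nested runBlocking starts its own job, which is not a child
            // of the launched coroutine, so cancelling `job` does not reach it.
            runBlocking {
                delay(500)
                finished.set(true)
            }
        } else {
            delay(500) // a regular suspension point, which is cancellable
            finished.set(true)
        }
    }
    delay(100)
    job.cancelAndJoin() // cancel, then wait until the coroutine body has returned
    finished.get()
}

fun main() {
    println("Nested runBlocking finished despite cancel: ${finishedDespiteCancel(true)}")
    println("Plain suspension finished despite cancel: ${finishedDespiteCancel(false)}")
}
```

With the nested runBlocking we would expect the work to finish regardless of the cancellation, while the plain suspending version stops at its delay. That difference is the structured concurrency guarantee being lost.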

Blocking your application with runBlocking

Can we do even worse? We sure can! In fact, it's easy to break your whole application without realizing it. Assume your colleague learned that it is good practice to use a dispatcher and decided to use the same context you created before. That doesn't sound so bad, does it? But take a closer look:

    // Blocks the calling pool thread while asking the same pool to run asyncTask.
    private fun blockingAsyncTask(task: String, duration: Long) = runBlocking(context) {
        asyncTask(task, duration)
    }

    private suspend fun asyncWork() {
        coroutineScope {
            launch {
                blockingAsyncTask("slow", 1000)
            }
            launch {
                blockingAsyncTask("another slow", 1000)
            }
            launch {
                blockingAsyncTask("yet another slow", 1000)
            }
        }
    }

This performs the same operation as the previous example, but uses the context you created before. It looks harmless enough, so why not give it a try?

    fun main() {
        val durationMillis = measureTimeMillis {
            runBlocking(context) {
                asyncWork()
            }
        }
        println("Took: ${durationMillis}ms")
    }

Output:

    Started slow call on pool-1-thread-1

Aha, gotcha! It seems your colleagues created a deadlock without even realizing it. Both pool threads are blocked inside runBlocking, waiting for work that can only run on those same two threads. Your main thread is blocked as well, waiting for any of the coroutines to finish, yet none of them can get a thread to work on.

Conclusion: Be careful when using runBlocking. If you use it wrongly, it can block your whole application. If you still decide to use it, then be sure to call it from your main function (or in your tests) and always provide a dispatcher to run on.
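If the colleague's goal was only to run asyncTask on a particular dispatcher, a suspending alternative exists: withContext switches the dispatcher without blocking the calling thread. The sketch below is one possible rewrite, not the fix prescribed by this article. The names suspendingAsyncTask and measureAsyncWork are hypothetical, and the pool is passed as a parameter (instead of the shared context value) so that it can be closed at the end.

```kotlin
import kotlinx.coroutines.CoroutineDispatcher
import kotlinx.coroutines.asCoroutineDispatcher
import kotlinx.coroutines.coroutineScope
import kotlinx.coroutines.delay
import kotlinx.coroutines.launch
import kotlinx.coroutines.runBlocking
import kotlinx.coroutines.withContext
import java.util.concurrent.Executors
import kotlin.system.measureTimeMillis

private suspend fun asyncTask(task: String, duration: Long) {
    println("Started $task call on ${Thread.currentThread().name}")
    delay(duration)
    println("Ended $task call on ${Thread.currentThread().name}")
}

// Hypothetical replacement for blockingAsyncTask: withContext suspends the
// caller instead of blocking its thread while the work runs on `pool`.
private suspend fun suspendingAsyncTask(pool: CoroutineDispatcher, task: String, duration: Long) =
    withContext(pool) {
        asyncTask(task, duration)
    }

private suspend fun asyncWork(pool: CoroutineDispatcher) {
    coroutineScope {
        launch { suspendingAsyncTask(pool, "slow", 1000) }
        launch { suspendingAsyncTask(pool, "another slow", 1000) }
        launch { suspendingAsyncTask(pool, "yet another slow", 1000) }
    }
}

// Runs asyncWork on a two-thread pool and returns the elapsed milliseconds.
fun measureAsyncWork(): Long =
    Executors.newFixedThreadPool(2).asCoroutineDispatcher().use { pool ->
        measureTimeMillis {
            // The only runBlocking call sits at the top level.
            runBlocking(pool) { asyncWork(pool) }
        }
    }

fun main() {
    println("Took: ${measureAsyncWork()}ms")
}
```

Because no pool thread is ever parked, all three calls can share the two threads, and we would expect the run to finish in roughly one second instead of deadlocking.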

Option 3: Suspend all the way (go ahead)

You're still here, so you haven't turned your back on Kotlin coroutines yet? Good. We have arrived at the last option, and the best one in our view: suspending your code all the way up to your highest calling function. If that is your application's main function, you can suspend your main function. Is your highest calling function an endpoint (for example in a Spring controller)? No problem, Spring integrates seamlessly with coroutines; just be sure to use Spring WebFlux to fully benefit from the non-blocking runtime provided by Netty and Reactor.

Below we are calling our suspendible asyncWork from a suspendible main function:

    private suspend fun asyncWork() {
        coroutineScope {
            launch {
                asyncTask("slow", 1000)
            }
            launch {
                asyncTask("another slow", 1000)
            }
            launch {
                asyncTask("yet another slow", 1000)
            }
        }
    }

    // main itself is a suspending function, so no bridge is needed.
    suspend fun main() {
        val durationMillis = measureTimeMillis {
            asyncWork()
        }
        println("Took: ${durationMillis}ms")
    }

Output:

    Started another slow call on DefaultDispatcher-worker-2
    Started slow call on DefaultDispatcher-worker-1
    Started yet another slow call on DefaultDispatcher-worker-3
    Ended yet another slow call on DefaultDispatcher-worker-1
    Ended another slow call on DefaultDispatcher-worker-3
    Ended slow call on DefaultDispatcher-worker-2
    Took: 1193ms

As you can see, it works asynchronously, and it respects all the aspects of structured concurrency. That is to say, if any child coroutine of the parent throws an exception or is cancelled, it will be handled as expected.
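To make "handled as expected" a bit more concrete, here is a small sketch of what structured concurrency does with a failing child. It is an illustration under our own assumptions: the function failingWork, the "boom" message and the timings are hypothetical and not part of the examples above.

```kotlin
import kotlinx.coroutines.CancellationException
import kotlinx.coroutines.coroutineScope
import kotlinx.coroutines.delay
import kotlinx.coroutines.launch
import java.util.concurrent.atomic.AtomicBoolean

// Hypothetical helper: one child fails after 100 ms while its sibling is
// still suspended in a long delay. Returns a short report of what happened.
suspend fun failingWork(): String {
    val siblingCancelled = AtomicBoolean(false)
    return try {
        coroutineScope {
            launch {
                try {
                    delay(10_000) // would take ten seconds if left alone
                } catch (e: CancellationException) {
                    siblingCancelled.set(true)
                    throw e // rethrow so the cancellation completes normally
                }
            }
            launch {
                delay(100)
                throw IllegalStateException("boom")
            }
        }
        "completed normally"
    } catch (e: IllegalStateException) {
        // coroutineScope rethrows the child's failure to its caller.
        "caught ${e.message}, sibling cancelled: ${siblingCancelled.get()}"
    }
}

suspend fun main() {
    println(failingWork())
}
```

The failure of one child cancels its sibling and surfaces in the caller as an ordinary exception, so a regular try/catch around the suspending call is enough to deal with it.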

Conclusion: Go ahead and suspend all the functions that call your coroutine, all the way up to your top-level function. This is the best option for calling coroutines.

The safest way of bridging coroutines

We have explored the three flavours of bridging coroutines to the non-coroutine world, and we believe that suspending your calling function is the safest approach. However, if you prefer to avoid suspending the calling function, you can use runBlocking, but be aware that it requires more caution. With this knowledge, you now have a good understanding of how to call your coroutines safely. Stay tuned for more coroutine gotchas!