Have you ever wondered how big enterprise and consumer apps handle this kind of scale with concurrent users? To deploy high-performance applications at scale, a robust operational database is essential. Cloudera Operational Database (COD) is a high-performance and highly scalable operational database designed for powering the largest data applications on the planet at any scale. Powered by Apache HBase and Apache Phoenix, COD ships out of the box with Cloudera Data Platform (CDP) in the public cloud. It's also multi-cloud ready to meet your business where it is today, whether AWS, Microsoft Azure, or GCP.

Support for cloud storage is an important capability of COD that, in addition to the pre-existing support for HDFS on local storage, offers customers a choice of price-performance characteristics.

To understand how COD delivers cost-efficient performance for your applications, let's dive into benchmarking results comparing COD using cloud storage vs. COD using HDFS.

Test Environment:

The performance comparison was done to measure the performance differences between COD using storage on Hadoop Distributed File System (HDFS) and COD using cloud storage. We tested two cloud storage services, AWS S3 and Azure ABFS. These performance measurements were done on the COD 7.2.15 runtime version.

The performance benchmark was done to measure the following aspects:

- Read-write workloads

- Read-only workloads

The following configuration was used to set up a sidecar cluster:

- Runtime version: 7.2.15

- Number of worker nodes: 10

The cluster running HBase on cloud storage was configured with a combined bucket cache size of 32TB across the cluster, with the L2 bucket cache configured to use file-based cache storage on ephemeral storage volumes of 1.6TB capacity each. We ensured that this bucket cache was warmed up almost completely, i.e. all the regions on all the region servers were read into the bucket cache. This is done automatically each time the region servers are started.
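The arithmetic and settings behind this cache can be sketched as follows. This is a minimal sketch, assuming one 1.6TB ephemeral volume per region server and decimal units: it checks that 20 servers add up to the 32TB combined cache and prints per-server hbase-site.xml entries. `hbase.bucketcache.ioengine`, `hbase.bucketcache.size`, and `hbase.rs.prefetchblocksonopen` are standard HBase properties; that COD sets exactly these values, and the mount path `/mnt/ephemeral0/bucketcache`, are assumptions for illustration.

```python
from xml.sax.saxutils import escape

# Figures from the cache description above.
REGION_SERVERS = 20
CACHE_PER_SERVER_MB = 1_600_000  # 1.6 TB ephemeral volume per server, decimal units

# Hypothetical mount point of the ephemeral volume on each worker.
CACHE_FILE = "/mnt/ephemeral0/bucketcache"


def combined_cache_tb(servers: int, per_server_mb: int) -> float:
    """Total L2 bucket cache across the cluster, in decimal terabytes."""
    return servers * per_server_mb / 1_000_000


def bucket_cache_properties(cache_file: str, size_mb: int) -> str:
    """Render hbase-site.xml <property> entries for a file-backed BucketCache."""
    props = {
        # The 'file:' engine stores cached blocks in a file on local disk.
        "hbase.bucketcache.ioengine": f"file:{cache_file}",
        # A value greater than 1.0 is read as a capacity in megabytes.
        "hbase.bucketcache.size": str(size_mb),
        # Prefetch blocks into the cache when regions open (assumed warm-up path).
        "hbase.rs.prefetchblocksonopen": "true",
    }
    return "\n".join(
        f"<property><name>{escape(k)}</name><value>{escape(v)}</value></property>"
        for k, v in props.items()
    )


print(combined_cache_tb(REGION_SERVERS, CACHE_PER_SERVER_MB))  # 32.0
print(bucket_cache_properties(CACHE_FILE, CACHE_PER_SERVER_MB))
```

Prefetching on region open is one HBase mechanism that would reload the cache every time a region server starts, which matches the behavior described above, but the source does not name the mechanism COD uses.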

All the tests were run using the YCSB benchmarking tool on COD with the following configurations:

- Amazon AWS
  - COD Version: 1.22
  - CDH: 7.2.14.2
  - Apache HBase on HDFS
    - No. of master nodes: 2 (m5.8xlarge)
    - No. of leader nodes: 1 (m5.2xlarge)
    - No. of gateway nodes: 1 (m5.2xlarge)
    - No. of worker nodes: 20 (m5.2xlarge) (Storage as HDFS with HDD)
  - Apache HBase on S3
    - No. of master nodes: 2 (m5.2xlarge)
    - No. of leader nodes: 1 (m5.2xlarge)
    - No. of gateway nodes: 1 (m5.2xlarge)
    - No. of worker nodes: 20 (i3.2xlarge) (Storage as S3)
- Microsoft Azure
  - Apache HBase on HDFS
    - No. of master nodes: 2 (Standard_D32_v3)
    - No. of leader nodes: 1 (Standard_D8_v3)
    - No. of gateway nodes: 1 (Standard_D8_v3)
    - No. of worker nodes: 20 (Standard_D8_v3)
  - Apache HBase on ABFS
    - No. of master nodes: 2 (Standard_D8a_v4)
    - No. of leader nodes: 1 (Standard_D8a_v4)
    - No. of gateway nodes: 1 (Standard_D8a_v4)
    - No. of worker nodes: 20 (Standard_L8s_v2)

Here is some important information about the test methodology:

- Data size
  - The table was loaded from 10 sidecar worker nodes (2 billion rows per sidecar node) onto 20 COD DB cluster worker nodes
- Performance benchmarking was done using the following YCSB workloads:
  - YCSB Workload C
    - Read-only workload
    - 100% read
  - YCSB Workload A
    - Update-heavy workload
    - 50% read, 50% write
  - YCSB Workload F
    - Read-modify-write workload
    - 50% read, 25% update, 25% read-modify-write
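The workload mixes above map onto YCSB's CoreWorkload proportion properties. The sketch below encodes them and validates that each mix sums to 1; it writes every proportion explicitly, because CoreWorkload falls back to its own defaults for any proportion left out of a workload file. The dictionary follows the article's description of Workload F; note that the stock `workloadf` file shipped with YCSB instead splits operations 50/50 between reads and read-modify-writes, so the exact file used in these tests is an assumption.

```python
# Operation-proportion properties understood by YCSB's CoreWorkload.
ALL_OPS = (
    "readproportion",
    "updateproportion",
    "insertproportion",
    "scanproportion",
    "readmodifywriteproportion",
)

# Mixes as described in the list above.
WORKLOADS = {
    "C": {"readproportion": 1.0},
    "A": {"readproportion": 0.5, "updateproportion": 0.5},
    "F": {
        "readproportion": 0.5,
        "updateproportion": 0.25,
        "readmodifywriteproportion": 0.25,
    },
}


def check_mix(mix: dict) -> None:
    """Raise ValueError unless the mix uses known keys and sums to 1."""
    unknown = set(mix) - set(ALL_OPS)
    if unknown:
        raise ValueError(f"unknown properties: {sorted(unknown)}")
    if any(p < 0 for p in mix.values()):
        raise ValueError("proportions must be non-negative")
    if abs(sum(mix.values()) - 1.0) > 1e-9:
        raise ValueError(f"proportions sum to {sum(mix.values())}, expected 1.0")


def to_properties(mix: dict) -> str:
    """Render a mix as workload-file lines, writing unused operations as 0."""
    check_mix(mix)
    return "\n".join(f"{op}={mix.get(op, 0.0)}" for op in ALL_OPS)


for name, mix in WORKLOADS.items():
    print(f"# workload {name}")
    print(to_properties(mix))
```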

The following parameters were used to run the workloads with YCSB:

- Each workload was run for 15 min (900 secs)
- Sample set for running the workloads
  - 1 billion rows
  - 100 million batch
- The following factors were considered while carrying out the performance runs:
  - Overall CPU activity was below 5% before starting each run, to ensure no major activities were ongoing on the cluster
  - The region server cache was warmed up to full capacity (with S3 on Amazon AWS and with ABFS on Microsoft Azure), ensuring the maximum amount of data was in cache
  - No other activities, such as major compaction, were happening when the workloads were started
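A minimal sketch of how one run could be launched under the parameters above. It assumes the YCSB HBase 2.x binding is named `hbase2`, that the table is the YCSB default `usertable` with a column family called `cf`, that 64 client threads are used, and that "100 million batch" means `operationcount`; none of these are stated in the source. `recordcount`, `operationcount`, `maxexecutiontime` (in seconds), `-P`, `-p`, and `-threads` are standard YCSB options, and the preflight function mirrors the CPU and compaction conditions listed above.

```python
import shlex

# Run parameters from the list above.
RUN_SECONDS = 900               # 15 minutes per workload
RECORD_COUNT = 1_000_000_000    # 1 billion rows
OPERATION_COUNT = 100_000_000   # assumed reading of "100 million batch"
MAX_IDLE_CPU_PCT = 5.0          # cluster CPU must be below this before a run


def ready_to_run(cpu_busy_pct: float, major_compaction_running: bool) -> bool:
    """Preflight check mirroring the run conditions listed above."""
    return cpu_busy_pct < MAX_IDLE_CPU_PCT and not major_compaction_running


def ycsb_run_command(workload_file: str, binding: str = "hbase2",
                     threads: int = 64) -> list[str]:
    """Build a YCSB 'run' command; binding, table, family and threads are assumptions."""
    return [
        "bin/ycsb", "run", binding,
        "-P", workload_file,
        "-p", "table=usertable",
        "-p", "columnfamily=cf",
        "-p", f"recordcount={RECORD_COUNT}",
        "-p", f"operationcount={OPERATION_COUNT}",
        # Stop after RUN_SECONDS even if operationcount has not been reached.
        "-p", f"maxexecutiontime={RUN_SECONDS}",
        "-threads", str(threads),
    ]


if ready_to_run(cpu_busy_pct=3.2, major_compaction_running=False):
    print(shlex.join(ycsb_run_command("workloads/workloada")))
```

Capping the run with `maxexecutiontime` is what makes every workload last the same 15 minutes, so throughput numbers stay comparable even if one configuration finishes its operation budget faster.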

Essential findings

The test started by loading 20TB of data into a COD cluster running HBase on HDFS. This load was performed using the 10-node sidecar cluster on the 20-node COD cluster running HBase on HDFS. Subsequently, a snapshot of the loaded data was taken and restored to the other COD clusters running HBase on Amazon S3 and Microsoft Azure ABFS. The following observations were made during this activity:

- Loading time = 52 hrs
- Snapshot time
  - Cluster to cluster = ~70 min
  - Cluster to cloud storage = ~70 min
  - Cloud storage to cluster = ~3 hrs
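The load and snapshot figures above can be put in perspective with a little arithmetic. The sketch below derives the average row size and ingest rate from the stated 20 billion rows, 20TB, and 52 hours (decimal units); the roughly 1KB per row is consistent with YCSB's default record of 10 fields of 100 bytes. The source does not say which tool copied the snapshots. HBase's `ExportSnapshot` MapReduce job, shown here with its `-snapshot`, `-copy-to`, and `-mappers` options, is one common way to do it, and the snapshot name, bucket, and mapper count are hypothetical.

```python
import shlex

# Figures from the load description above (decimal units).
SIDECAR_NODES = 10
ROWS_PER_SIDECAR = 2_000_000_000
DATA_BYTES = 20 * 10**12        # 20 TB
LOAD_SECONDS = 52 * 3600        # 52 hours

total_rows = SIDECAR_NODES * ROWS_PER_SIDECAR
bytes_per_row = DATA_BYTES / total_rows
rows_per_sec = total_rows / LOAD_SECONDS
mb_per_sec = DATA_BYTES / LOAD_SECONDS / 10**6

print(f"{total_rows:,} rows, ~{bytes_per_row:.0f} bytes per row")  # 20,000,000,000 rows, ~1000 bytes per row
print(f"~{rows_per_sec:,.0f} rows/s, ~{mb_per_sec:.1f} MB/s")       # ~106,838 rows/s, ~106.8 MB/s


def export_snapshot_cmd(snapshot: str, target: str, mappers: int = 16) -> list[str]:
    """Build an HBase ExportSnapshot invocation; names and mapper count are hypothetical."""
    return [
        "hbase", "org.apache.hadoop.hbase.snapshot.ExportSnapshot",
        "-snapshot", snapshot,
        "-copy-to", target,
        "-mappers", str(mappers),
    ]


print(shlex.join(export_snapshot_cmd("usertable_snap", "s3a://example-bucket/hbase")))
```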

Key takeaways

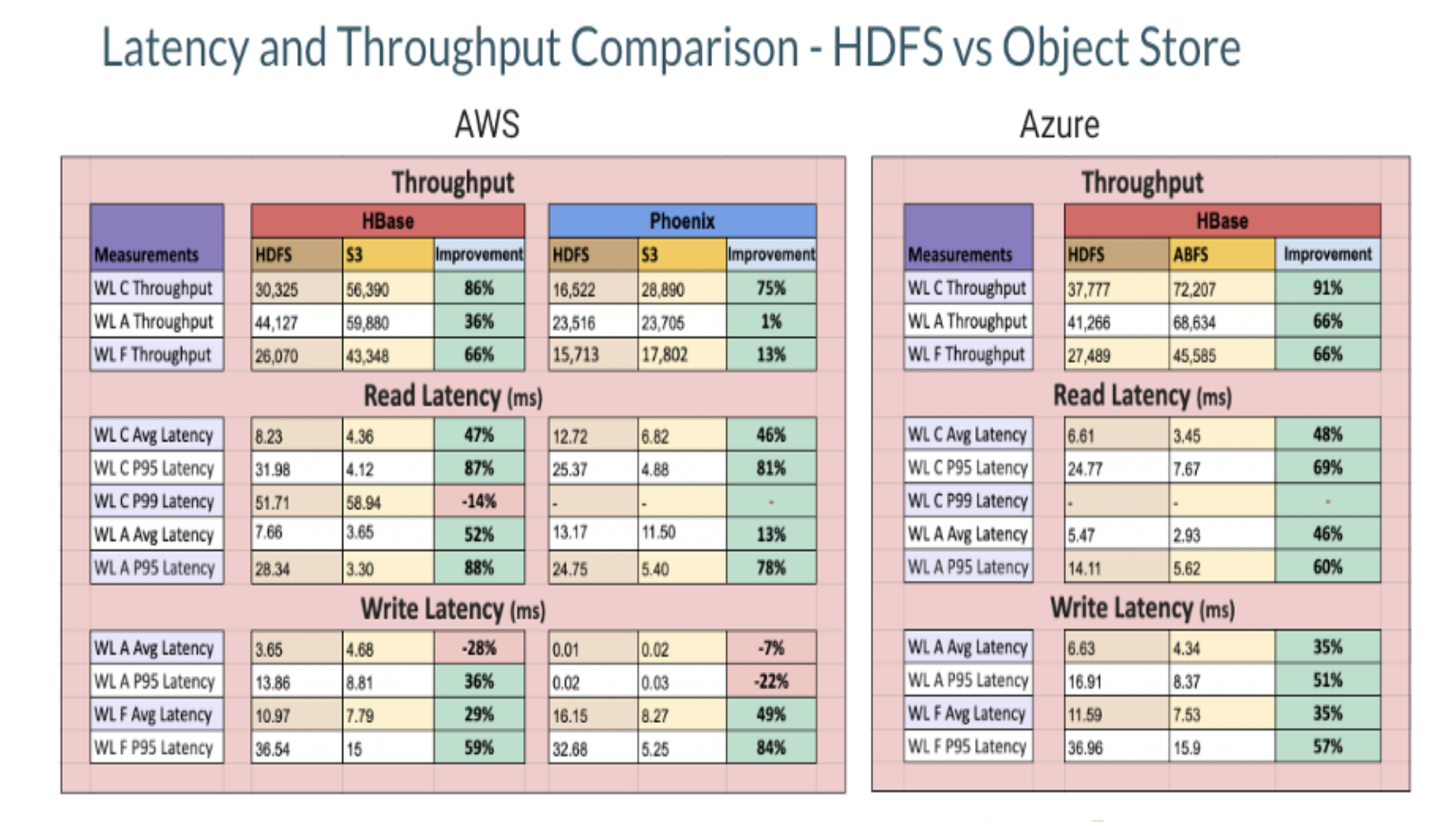

The following table shows the throughput observed when running the performance benchmarks:

Based on the data shown above, we made the following observations:

- Overall, the average performance of the S3-based cluster with ephemeral cache was better by a factor of 1.7x compared to HBase running on HDFS on HDD.
- Read throughput for the S3-based cluster is better by around 1.8x for both HBase and Phoenix compared to the HDFS-based cluster.
- Some factors that affect the performance of S3 are:
  - Cache warming on S3: The cache needs to be warmed up to its full capacity to get the best performance.
  - AWS S3 throttling: As the number of region servers grows, and with it the number of network requests to S3, AWS may throttle some requests for a few seconds, which can affect overall performance. These limits are set on AWS resources for each account.
  - Non-atomic operations: Some operations, such as move, perform a lot of data copying instead of a simple rename, and HBase relies heavily on these operations.
  - Slow bulk delete operations: For each such operation, the driver has to perform several operations, such as listing, creating, and deleting, which results in slower performance.
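To see why non-atomic renames hurt, here is a toy model, not HBase or S3 code. On an object store a directory "rename" becomes one copy and one delete per object, so committing N files by renaming a temporary directory costs about 2N extra requests and is visible half-done if interrupted, whereas recording the finished files in a single manifest object needs one extra write. This is the idea behind HBase's store file tracking, which recent HBase releases expose through `hbase.store.file-tracker.impl` (for example `FILE`); the class, functions, and request counts below are purely illustrative.

```python
from dataclasses import dataclass, field


@dataclass
class ToyObjectStore:
    """In-memory stand-in for an object store: flat keys, no real directories."""
    objects: dict = field(default_factory=dict)
    requests: int = 0  # simulated API calls

    def put(self, key: str, data: bytes) -> None:
        self.requests += 1
        self.objects[key] = data

    def list_prefix(self, prefix: str) -> list:
        self.requests += 1
        return sorted(k for k in self.objects if k.startswith(prefix))

    def rename_prefix(self, src: str, dst: str) -> None:
        """'Rename' a directory as copy + delete per object; not atomic."""
        for key in self.list_prefix(src):
            self.objects[dst + key[len(src):]] = self.objects[key]  # copy
            self.requests += 1
            del self.objects[key]  # delete
            self.requests += 1


def commit_by_rename(store: ToyObjectStore, files: dict) -> None:
    """Write under a temporary prefix, then move everything into place."""
    for name, data in files.items():
        store.put(f"tmp/{name}", data)
    store.rename_prefix("tmp/", "data/")


def commit_by_manifest(store: ToyObjectStore, files: dict) -> None:
    """Write files in place, then publish them with one manifest object."""
    for name, data in files.items():
        store.put(f"data/{name}", data)
    store.put("data/.manifest", "\n".join(sorted(files)).encode())


files = {"a": b"1", "b": b"2", "c": b"3"}
for commit in (commit_by_rename, commit_by_manifest):
    store = ToyObjectStore()
    commit(store, files)
    print(commit.__name__, store.requests)  # commit_by_rename 10, then commit_by_manifest 4
```

Readers that trust only the manifest never see a partially written set of files, which is what removes rename from the critical path.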

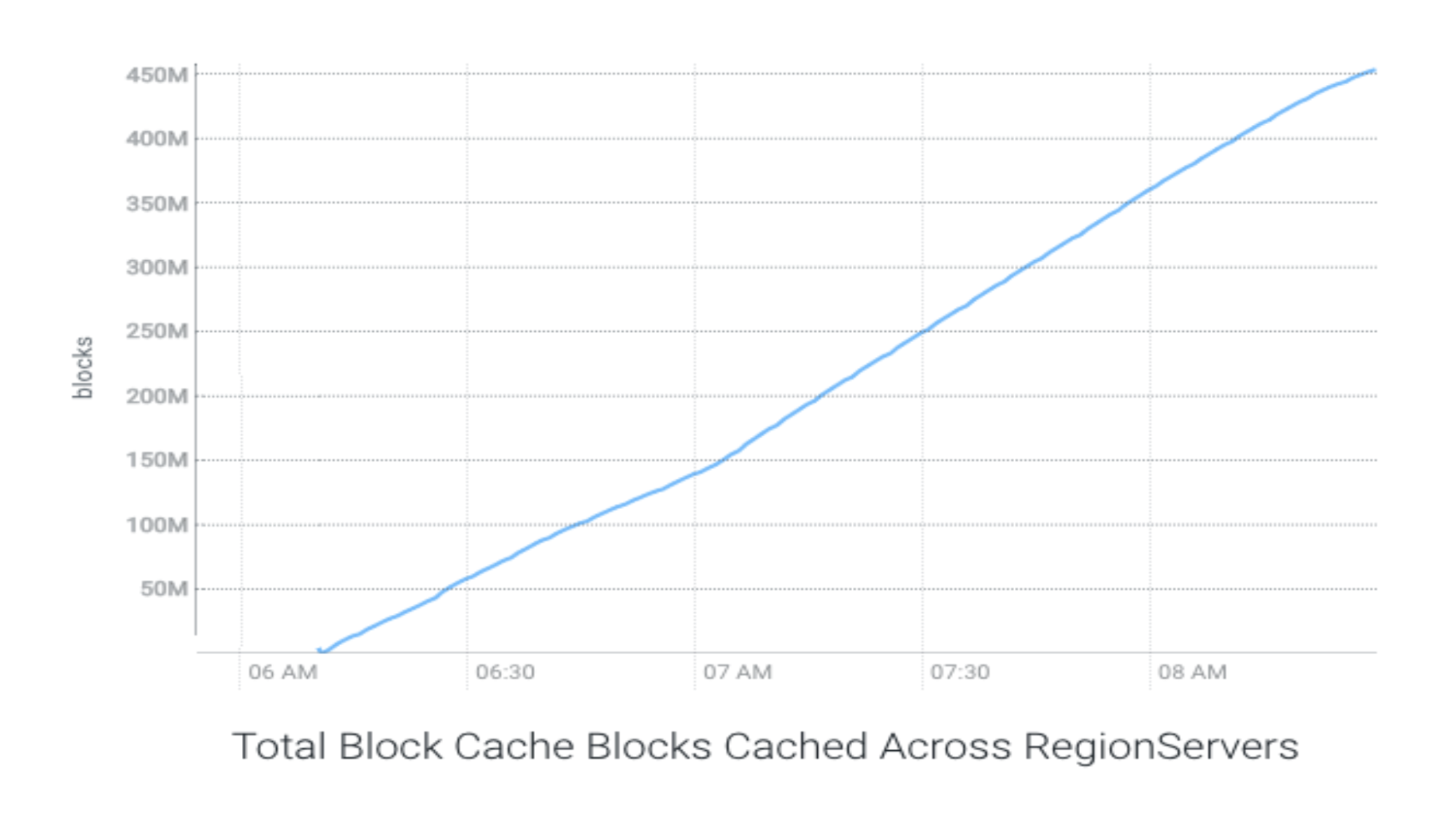

As mentioned above, the cache was warmed to its full capacity in the case of the S3-based cluster. This cache warming took around 130 minutes with an average throughput of 2.62 GB/s.
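The warm-up figures above can be sanity-checked with a few lines of arithmetic, assuming decimal units and that the 2.62 GB/s aggregate is spread evenly over the 20 region servers (an assumption). About 20.4TB loaded matches the roughly 20TB data set and fits within the 32TB combined cache. The helper also converts the later estimate of three to five hours for 1.5TB of cache per worker into a per-worker rate, which brackets the per-server rate seen here.

```python
# Cache warm-up figures from the paragraph above (decimal units).
WARMUP_SECONDS = 130 * 60
WARMUP_BYTES_PER_SEC = 2.62e9
REGION_SERVERS = 20  # assumed to share the aggregate rate evenly

total_tb = WARMUP_SECONDS * WARMUP_BYTES_PER_SEC / 1e12
per_server_mb_s = WARMUP_BYTES_PER_SEC / REGION_SERVERS / 1e6
print(f"~{total_tb:.1f} TB loaded, ~{per_server_mb_s:.0f} MB/s per region server")
# ~20.4 TB loaded, ~131 MB/s per region server


def per_worker_fill_rate_mb_s(cache_tb: float, hours: float) -> float:
    """Average rate needed to fill a worker's cache in the given time."""
    return cache_tb * 1e12 / (hours * 3600) / 1e6


# 1.5 TB per worker in the 3-5 hour range quoted for cache warming.
for hours in (3, 5):
    print(f"{hours} h -> ~{per_worker_fill_rate_mb_s(1.5, hours):.0f} MB/s")
# 3 h -> ~139 MB/s, then 5 h -> ~83 MB/s
```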

The following chart shows the cache warming throughput with S3:

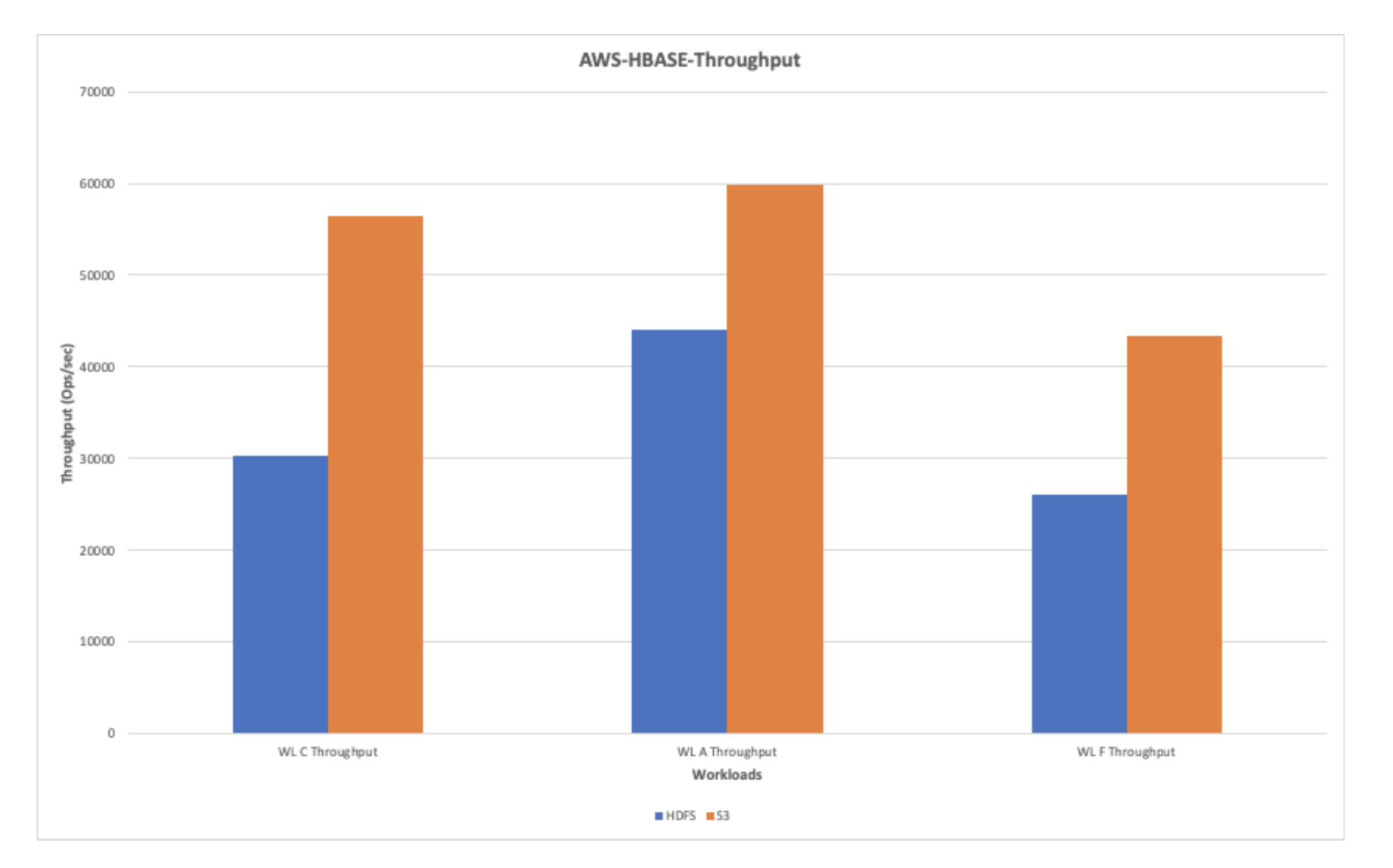

The following charts show the throughput and latencies observed in the various run configurations, comparing HBase running on HDFS with HBase running on S3.

AWS-HBase-Throughput (Ops/sec)

The following chart shows the throughput observed while running the workloads on HDFS and on AWS S3. Overall, S3 delivers better throughput than HDFS.

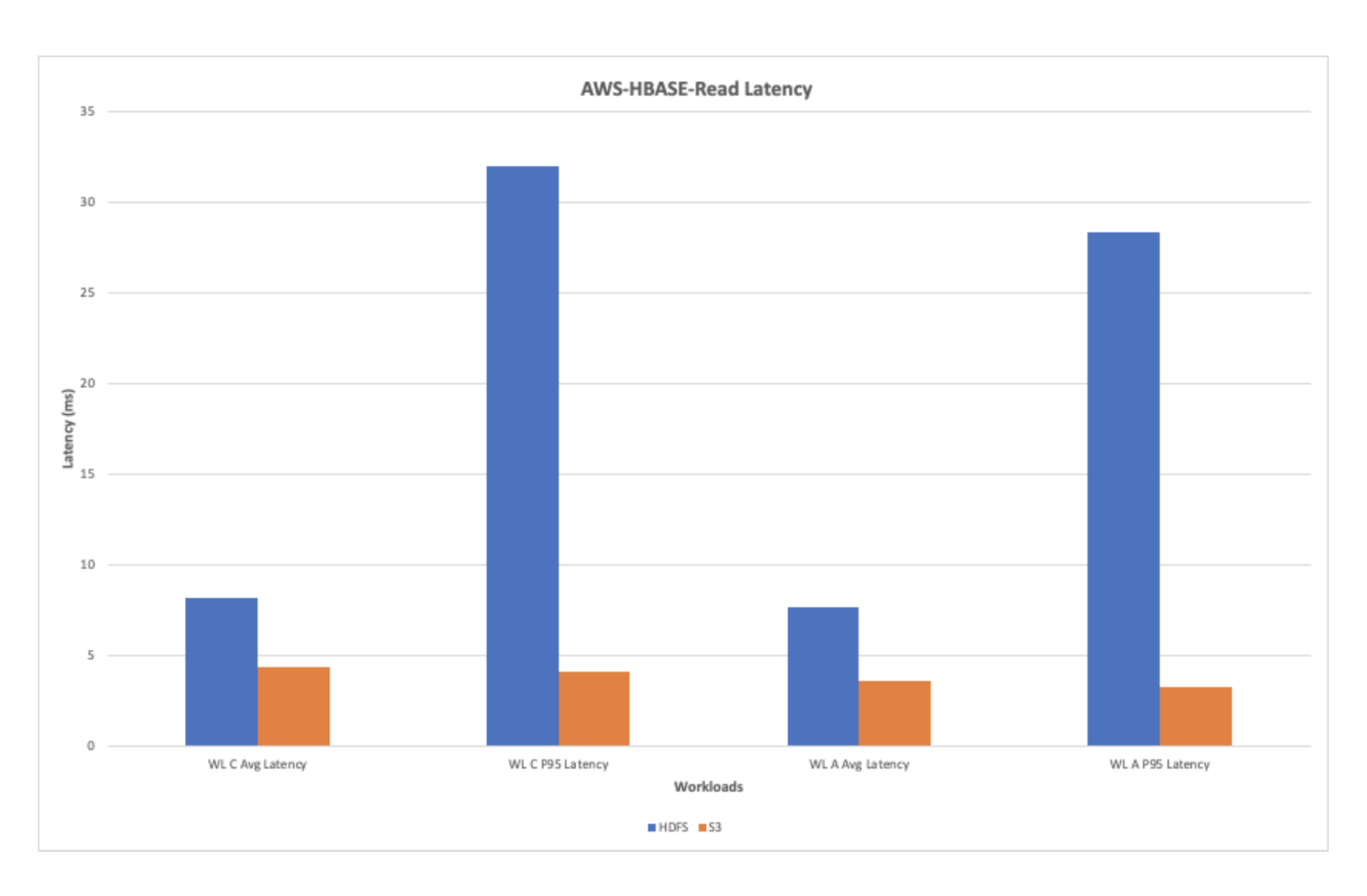

AWS-HBase-Read Latency

The chart below shows the read latency observed while running the read workloads. Overall, read latency improves on AWS with S3 and the ephemeral-storage cache compared to HDFS.

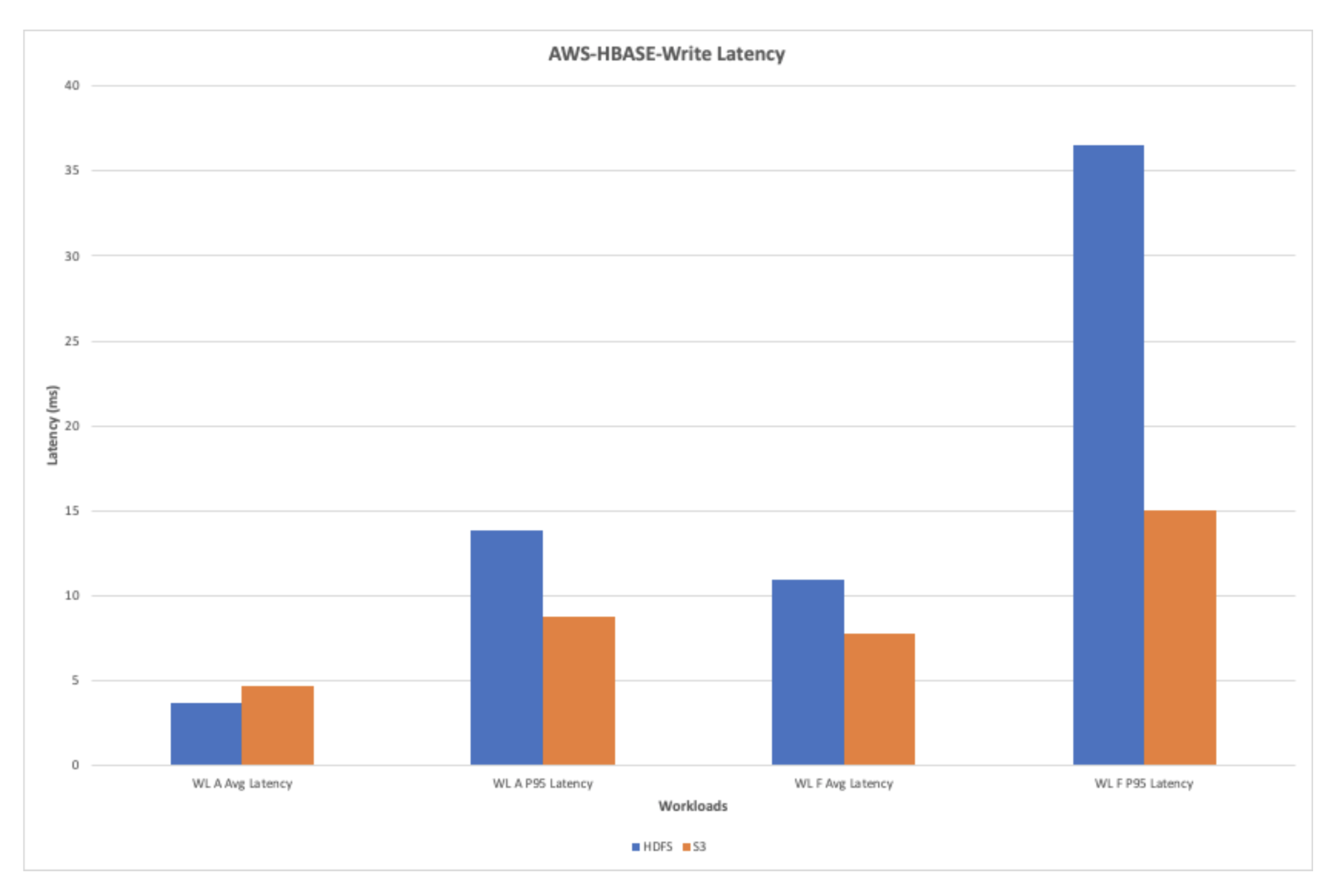

AWS-HBase-Write Latency

The chart below shows the write latency observed while running workloads A and F. S3 shows an overall improvement in write latency during the write-heavy workloads.

Tests were also run to compare the performance of Phoenix with HBase running on HDFS versus HBase running on S3. The following charts compare some key indicators.

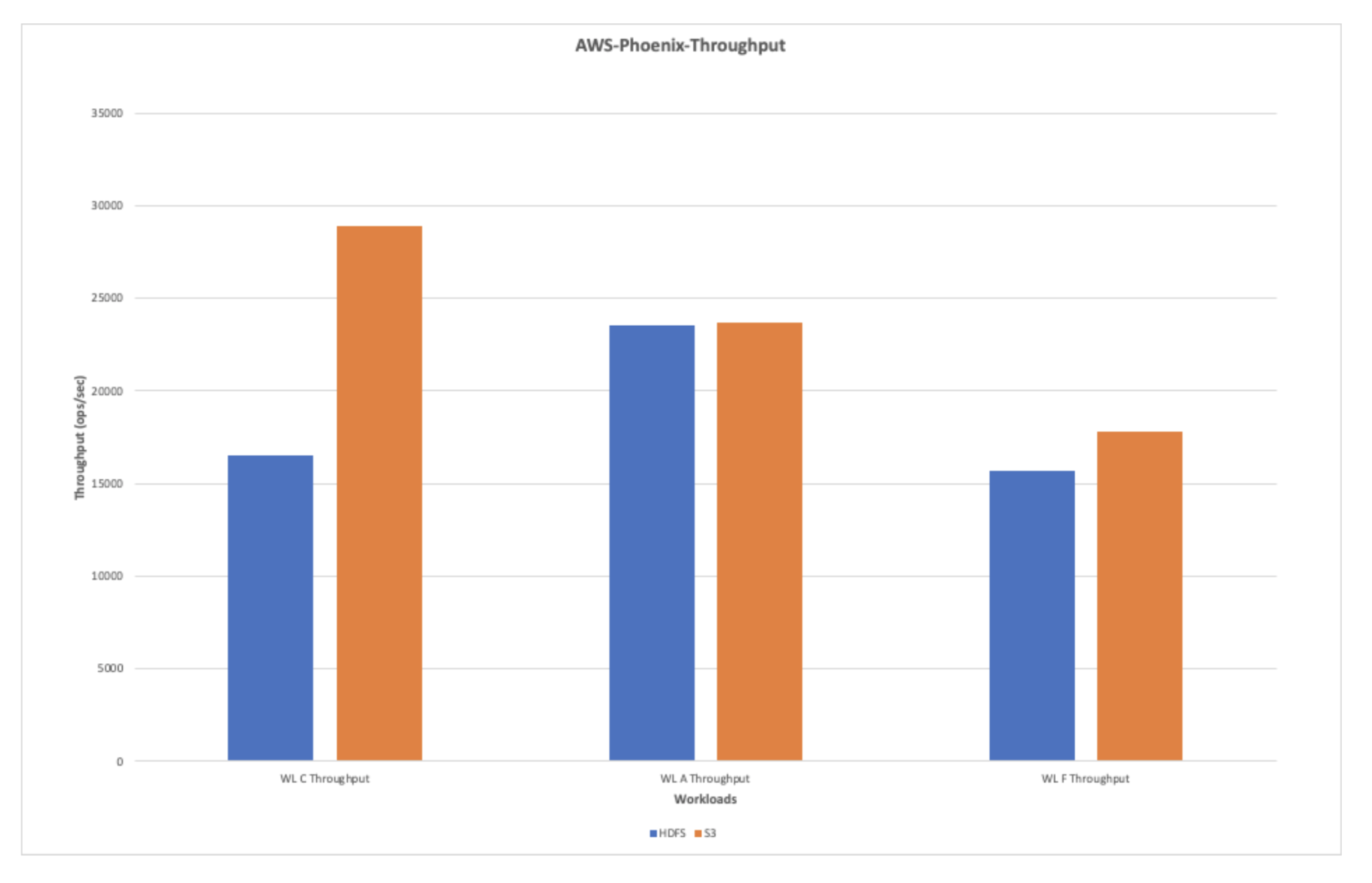

AWS-Phoenix-Throughput (ops/sec)

The chart below shows the average throughput when the workloads were run with Phoenix against HDFS and S3. The overall read throughput was found to be better than the write throughput during the tests.

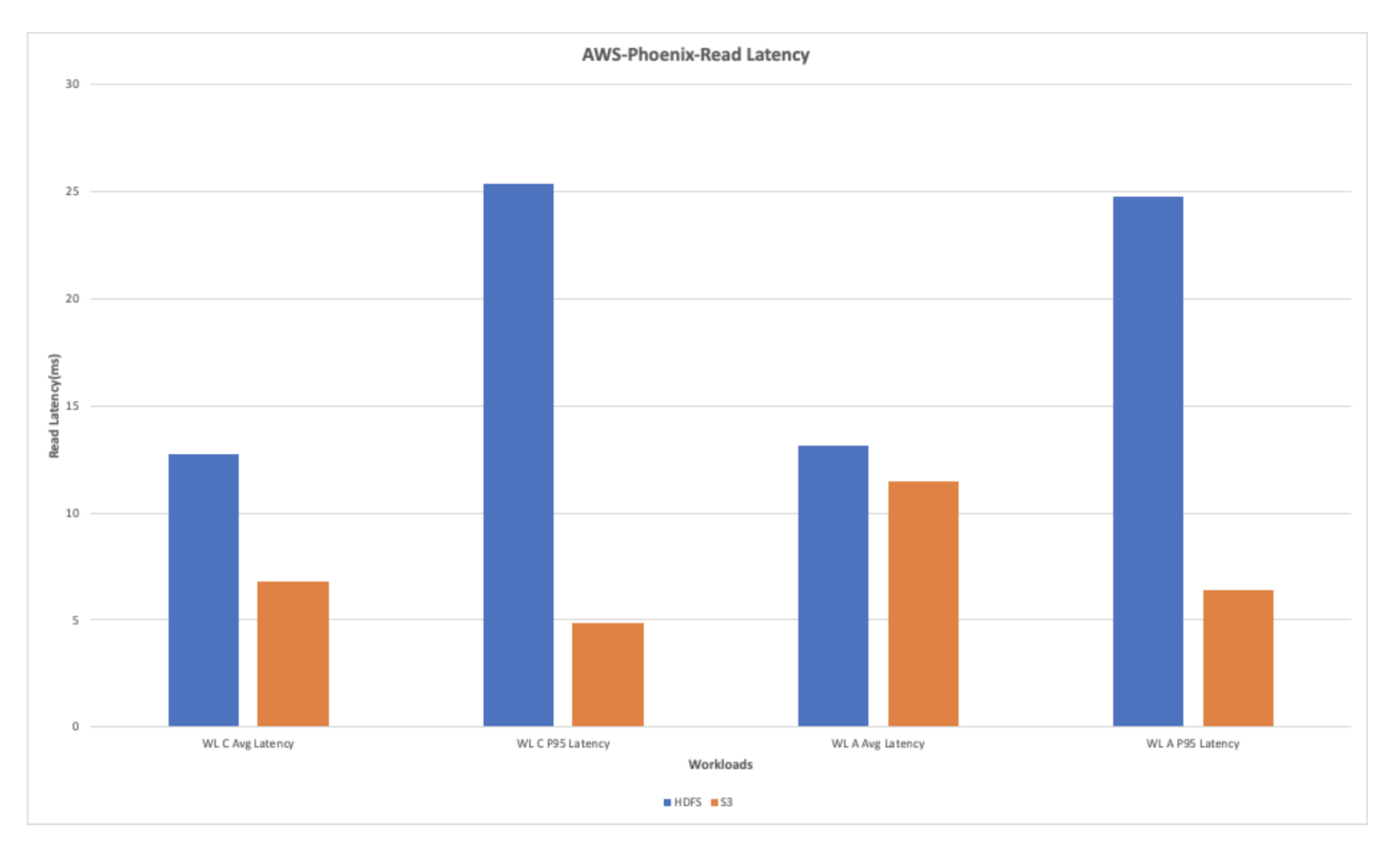

AWS-Phoenix-Read Latency

The overall read latency for the read-heavy workloads improves when using S3. The chart below shows that the read latency observed with S3 is several times lower than the latency observed while running the workloads on HDFS.

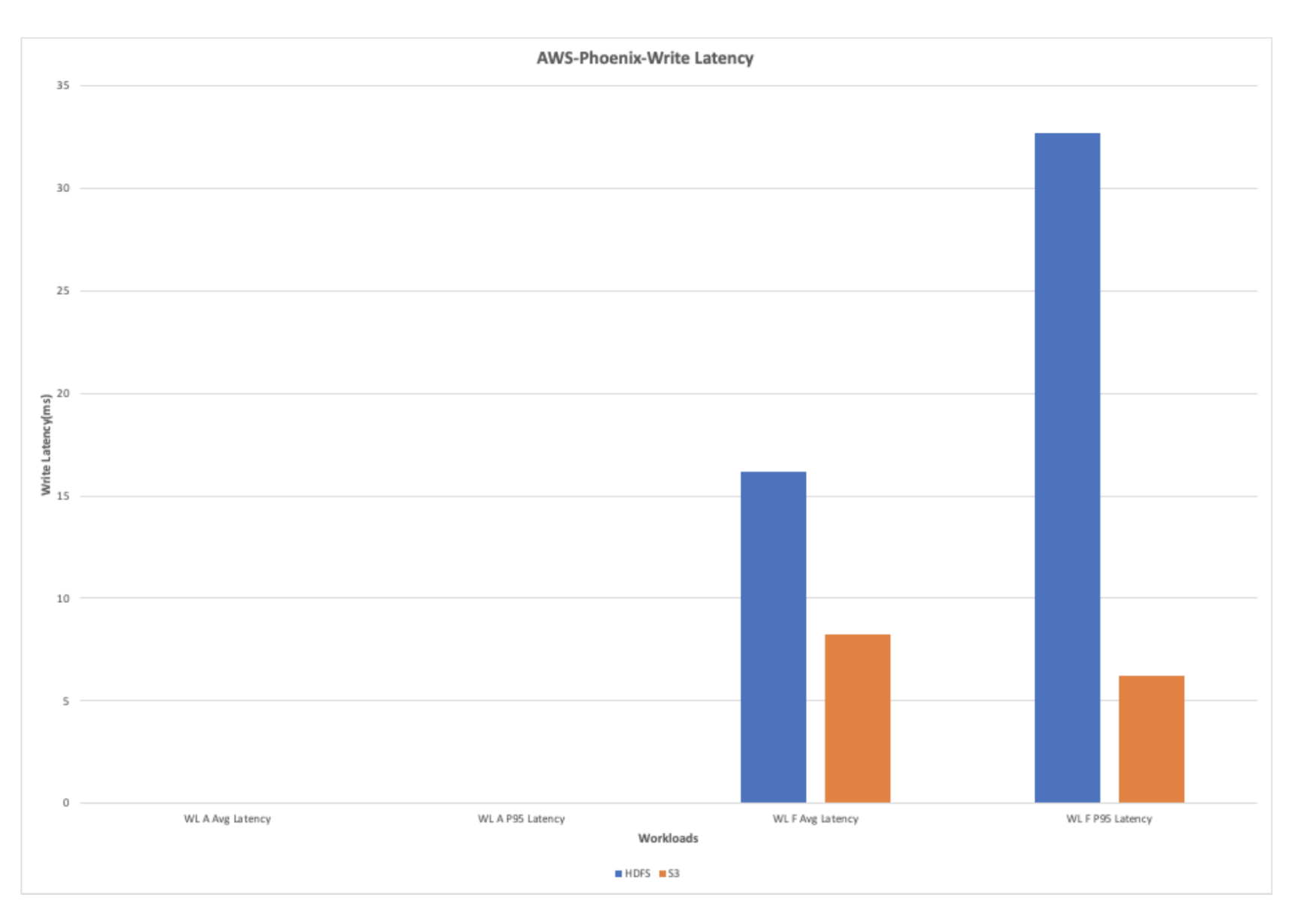

AWS-Phoenix-Write Latency

The write-heavy workload shows a large improvement in performance because of the reduced write latency on S3 compared to HDFS.

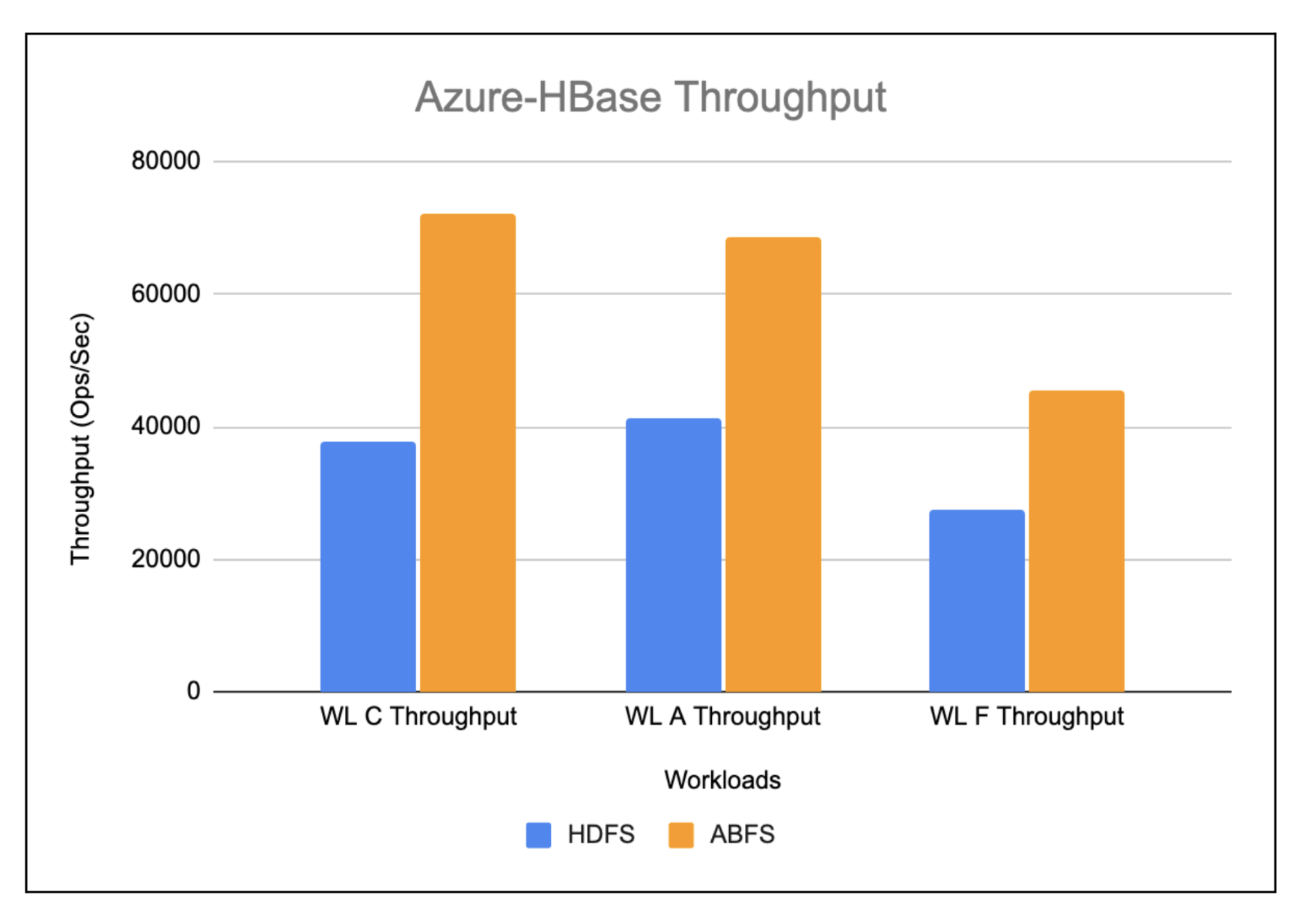

Azure

Performance measurements were also conducted on HBase running on Azure ABFS storage, and the results were compared with HBase running on HDFS. The next couple of charts compare key performance metrics for HBase running on HDFS vs. HBase running on ABFS.

Azure-HBase-Throughput (ops/sec)

The workloads running on HBase on ABFS show almost a 2x improvement compared to HBase running on HDFS, as depicted in the chart below.

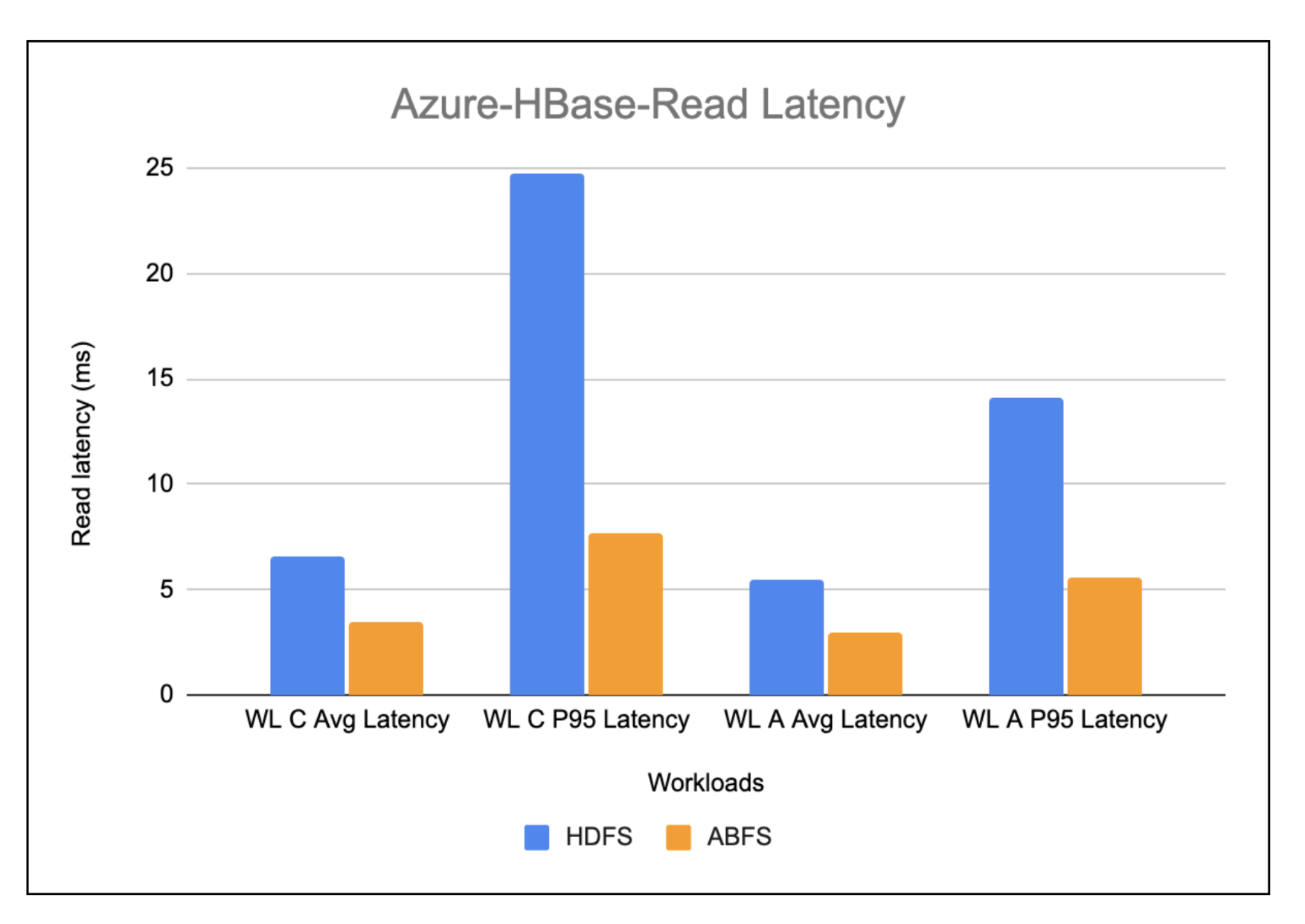

Azure-HBase-Read Latency

The chart below shows the read latency observed while running the read-heavy workloads on HBase running on HDFS vs. HBase running on ABFS. Overall, read latency for HBase on ABFS was found to be more than 2x better than for HBase on HDFS.

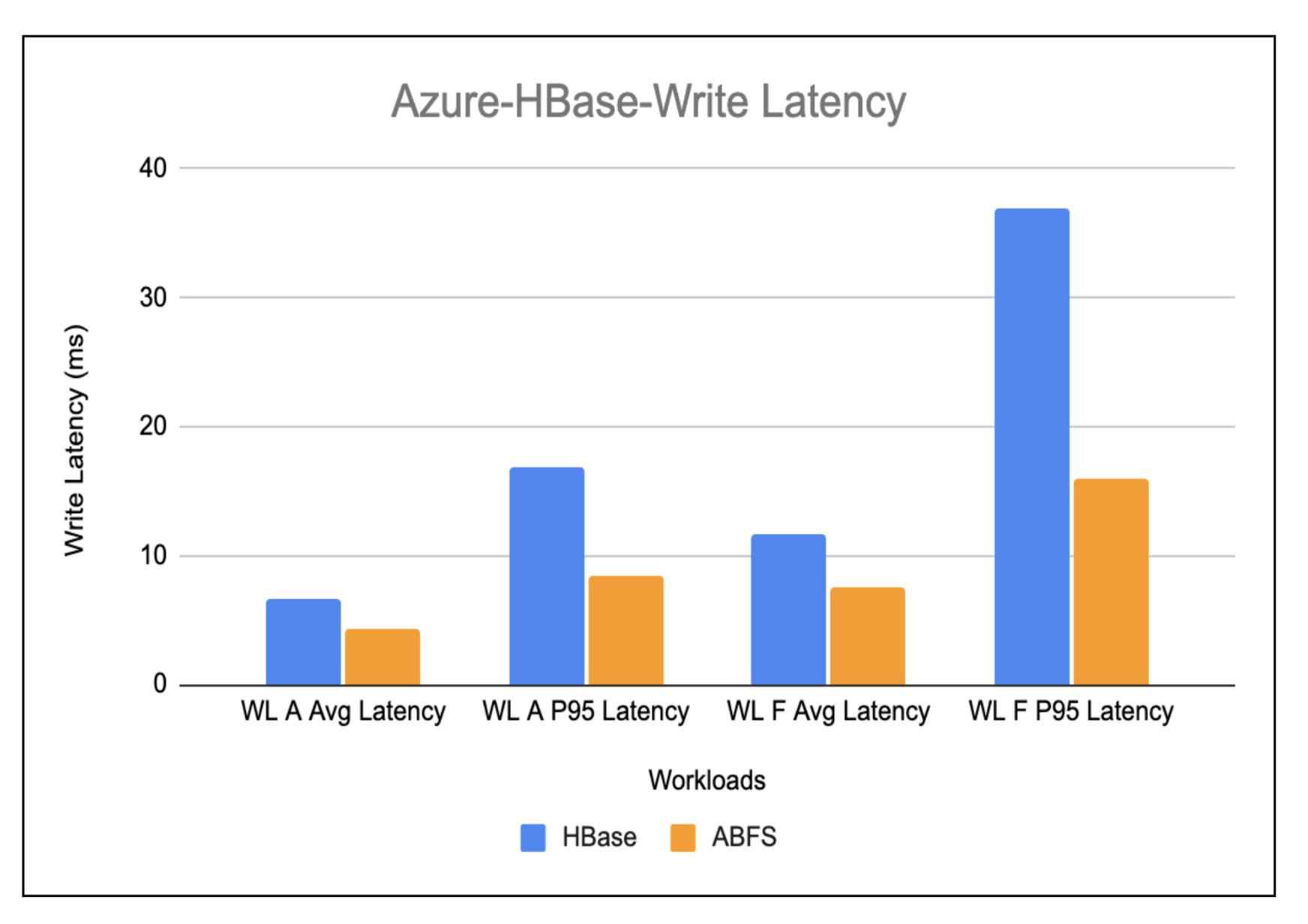

Azure-HBase-Write Latency

The write-heavy workload results in the chart below show an improvement of almost 1.5x in write latency for HBase running on ABFS compared to HBase running on HDFS.

Things to consider when choosing the right COD deployment environment for you

- Cache warming while using cloud storage
  - After the cluster is first created, a warm-up process is started for the cache. This process fetches data from cloud storage to gradually populate the cache. As a result, the cluster's responsiveness to queries may temporarily slow down during this period, mainly because queries must read uncached blocks directly from cloud storage while competing with cache population for CPU resources.

    The duration of this warm-up phase typically falls in the range of three to five hours for a cluster configured with 1.5TB of cache per worker. Once the cache is fully populated, the cluster delivers optimized performance and operates at peak efficiency.

    The inherent latency of such storage solutions is expected to slow down data retrieval from cloud storage, and each access also incurs a cost. Beyond that, the cloud storage's built-in throttling mechanism is another significant factor affecting performance and resilience. This mechanism limits the number of calls allowed per second per prefix. Exceeding this limit causes requests to be rejected, which can potentially halt cluster operations.

    In this scenario, cache warming plays a pivotal role in avoiding such situations. By proactively populating the cache with the data, the cluster avoids relying on frequent and potentially throttled storage requests.
- Non-atomic operations
  - Operations in cloud storage lack atomicity, as seen with renames in S3. To address this limitation, HBase has implemented a store file tracking mechanism that minimizes the need for such operations in the critical path, effectively eliminating the dependency on them.
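Cache warming reduces how often the cluster hits the object store, but the requests that remain can still be throttled. A common client-side mitigation is capped exponential backoff with full jitter, sketched below under stated assumptions: `ThrottledError` is a hypothetical stand-in for a "slow down" reply (S3 signals throttling with HTTP 503 Slow Down), and the delays are arbitrary. The S3A and ABFS connectors ship their own retry logic, so this only illustrates the technique rather than anything COD configures.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class ThrottledError(Exception):
    """Hypothetical stand-in for a 'slow down' (HTTP 503) reply from an object store."""


def call_with_backoff(op: Callable[[], T], max_attempts: int = 6,
                      base_delay: float = 0.1, max_delay: float = 5.0,
                      sleep: Callable[[float], None] = time.sleep) -> T:
    """Retry op on ThrottledError using capped exponential backoff with full jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return op()
        except ThrottledError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the throttling error
            # Window doubles each attempt up to max_delay; sleep a random slice of it.
            window = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, window))
    raise AssertionError("unreachable")


# Usage: a read that is throttled twice before succeeding.
calls = {"n": 0}


def flaky_read() -> bytes:
    calls["n"] += 1
    if calls["n"] <= 2:
        raise ThrottledError("slow down")
    return b"block"


delays: list = []
result = call_with_backoff(flaky_read, sleep=delays.append)
print(result, calls["n"], len(delays))  # b'block' 3 2
```

Randomizing the delay spreads retries from many region servers over time, so they do not all hit the same throttled prefix again at once.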

Conclusion

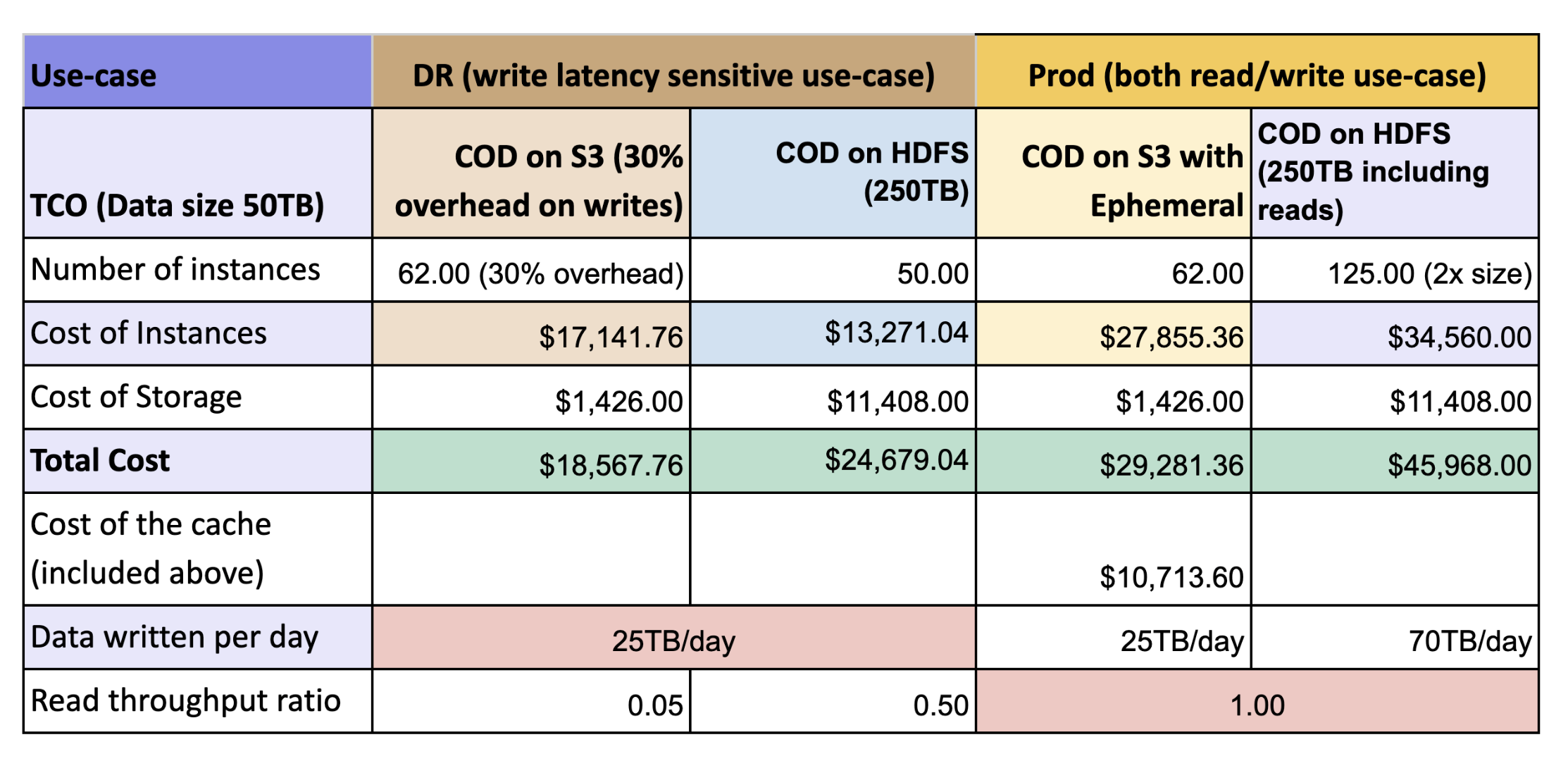

The table below shows the total cost of ownership (TCO) for a cluster running COD on S3 without ephemeral cache (DR scenario) and with ephemeral cache (production scenario), compared with a cluster running COD on HDFS.

We observed that the overall throughput of HBase on cloud storage with bucket cache is better than that of HBase running on HDFS with HDD. Here are some highlights:

- With the cache warmed up, cloud storage with cache yields 2x better performance at a lower TCO compared to HDFS. The performance of cloud storage is attributed to the local SSD-based cache, whereas HDFS uses more expensive EBS HDD volumes and requires three times the storage to account for replication.
- Write performance is expected to be the same, since both form factors use HDFS as the base for the WAL; however, because data is flushed and cached at the same time, an impact of about 30% was observed.

DR Cluster: This cluster is dedicated to disaster recovery efforts and typically handles write operations from less critical applications. By leveraging cloud storage without local storage to support the cache, users can expect roughly 25% cost savings compared to an HDFS-based cluster.

Prod Cluster: Serving as the primary cluster, this environment functions as the definitive source of truth for all read and write activity generated by applications. By using a cloud storage solution with local storage to support the cache, users can realize a substantial 40% reduction in costs.
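The savings figures above reduce to simple arithmetic. This minimal sketch normalizes the HDFS cluster's cost to an arbitrary 100 units and applies the quoted 25% and 40% reductions; it also illustrates the replication overhead mentioned in the highlights, assuming HDFS's default of three replicas and the 20TB data set used in these tests. The helper names are illustrative, not part of any COD tooling.

```python
# Normalised cost of the HDFS-based cluster; only the ratios matter.
HDFS_COST = 100.0

# Savings quoted above, relative to an HDFS-based cluster.
SAVINGS = {
    "DR cluster (cloud storage, no local cache)": 0.25,
    "Prod cluster (cloud storage + local SSD cache)": 0.40,
}


def relative_cost(baseline: float, savings: float) -> float:
    """Cost after applying a fractional saving to the baseline."""
    return baseline * (1.0 - savings)


def raw_storage_tb(logical_tb: float, replicas: int) -> float:
    """Raw capacity needed to hold logical_tb with the given replica count."""
    return logical_tb * replicas


for name, saving in SAVINGS.items():
    print(f"{name}: {relative_cost(HDFS_COST, saving):.0f} vs {HDFS_COST:.0f}")

# HDFS keeps 3 replicas by default; the object store handles durability itself.
print(f"20 TB on HDFS needs ~{raw_storage_tb(20, 3):.0f} TB of raw disk")
```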

Visit the product page to learn more about Cloudera Operational Database, or reach out to your account team.