As developers continue to build greater autonomy into cyber-physical systems (CPSs), such as unmanned aerial vehicles (UAVs) and automobiles, these systems aggregate data from an increasing number of sensors. The systems use this data for control and for otherwise acting in their operational environments. However, more sensors not only create more data and more precise data, but they also require a complex architecture to correctly transfer and process multiple data streams. This increase in complexity comes with additional challenges for functional verification and validation (V&V), a greater potential for faults (errors and failures), and a larger attack surface. What’s more, CPSs often cannot distinguish faults from attacks.

To address these challenges, researchers from the SEI and Georgia Tech collaborated on an effort to map the problem space and develop proposals for solving the challenges of increasing sensor data in CPSs. This SEI Blog post provides a summary of our work, which comprised research threads addressing four subcomponents of the problem:

- addressing error propagation induced by learning components

- mapping fault and attack scenarios to the corresponding detection mechanisms

- defining a security index of the ability to detect tampering based on the monitoring of specific physical parameters

- determining the impact of clock offset on the precision of reinforcement learning (RL)

Later I’ll describe these research threads, which are part of a larger body of research we call Safety Analysis and Fault Detection Isolation and Recovery (SAFIR) Synthesis for Time-Sensitive Cyber-Physical Systems. First, let’s take a closer look at the problem space and the challenges we’re working to overcome.

More Data, More Problems

CPS developers want more and better data so their systems can make better decisions and more precise evaluations of their operational environments. To achieve these goals, developers add more sensors to their systems and improve the ability of these sensors to gather more data. However, feeding the system more data has several implications: more data means the system must execute more, and more complex, computations. Consequently, these data-enhanced systems need more powerful central processing units (CPUs).

More powerful CPUs introduce various concerns, such as energy management and system reliability. Larger CPUs also raise questions about electrical demand and electromagnetic compatibility (i.e., the ability of the system to withstand electromagnetic disturbances, such as storms or adversarial interference).

The addition of new sensors means systems need to aggregate more data streams. This need drives greater architectural complexity. Moreover, the data streams must be synchronized. For instance, the information obtained from the left side of an autonomous automobile must arrive at the same time as information coming from the right side.

More sensors, more data, and a more complex architecture also raise challenges concerning the safety, security, and performance of these systems, whose interaction with the physical world raises the stakes. CPS developers face heightened pressure to ensure that the data on which their systems rely is accurate, that it arrives on schedule, and that an external actor has not tampered with it.

A Question of Trust

As developers strive to imbue CPSs with greater autonomy, one of the biggest hurdles is gaining the trust of users who depend on these systems to operate safely and securely. For example, consider something as simple as the air pressure sensor in your car’s tires. In the past, we had to check the tires physically, with an air pressure gauge, often miles after we’d been driving on dangerously underinflated tires. The sensors we have today let us know in real time when we need to add air. Over time, we have come to depend on these sensors. However, the moment we get a false alert telling us our front driver’s side tire is underinflated, we lose trust in the ability of the sensors to do their job.

Now, consider a similar system in which the sensors pass their information wirelessly, and a flat-tire warning triggers a safety operation that prevents the car from starting. A malicious actor learns how to generate a false alert from a spot across the parking lot or merely jams your system. Your tires are fine, your car is fine, but your car’s sensors, either detecting a simulated problem or entirely incapacitated, will not let you start the car. Extend this scenario to autonomous systems operating in airplanes, public transportation systems, or large manufacturing facilities, and trust in autonomous CPSs becomes even more critical.

As these examples demonstrate, CPSs are susceptible to both internal faults and external attacks from malicious adversaries. Examples of the latter include the Maroochy Shire incident involving sewage services in Australia in 2000, the Stuxnet attacks targeting power plants in 2010, and the Keylogger virus against a U.S. drone fleet in 2011.

Trust is critical, and it lies at the heart of the work we have been doing with Georgia Tech. It is a multidisciplinary problem. Ultimately, what developers seek to deliver is not just a piece of hardware or software, but a cyber-physical system comprising both hardware and software. Developers need an assurance case, a convincing argument that can be understood by an external party. The assurance case must demonstrate that the way the system was engineered and tested is consistent with the underlying theories used to gather evidence supporting the safety and security of the system. Making such an assurance case possible was a key part of the work described in the following sections.

Addressing Error Propagation Induced by Learning Components

As I noted above, autonomous CPSs are complex platforms that operate in both the physical and cyber domains. They employ a mix of different learning components that inform specific artificial intelligence (AI) functions. Learning components gather data about the environment and the system to help the system make corrections and improve its performance.

To achieve the level of autonomy needed by CPSs when operating in uncertain or adversarial environments, CPSs employ learning algorithms. These algorithms use data collected by the system, before or during runtime, to enable decision making without a human in the loop. The learning process itself, however, is not without problems, and errors can be introduced by stochastic faults, malicious activity, or human error.

Many groups are working on the problem of verifying learning components. Typically, they are interested in the correctness of the learning component itself. This line of research aims to produce an integration-ready component that has been verified with some stochastic properties, such as a probabilistic property. However, the work we conducted in this research thread examines the problem of integrating a learning-enabled component within a system.

For example, we ask, How can we define the architecture of the system so that we can fence off any learning-enabled component and assess that the data it is receiving is correct and arriving at the right time? Furthermore, Can we assess that the system outputs can be controlled for some notion of correctness? For instance, Is the acceleration of my car within the speed limit? This kind of fencing is necessary to determine whether we can trust that the system itself is correct (or, at least, not that wrong), as opposed to verifying a running component, which today is not possible.
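A minimal sketch of what such a fence might look like at runtime, assuming a single scalar sensor reading and a single scalar command. Every name and threshold here (`Sample`, `input_ok`, `fence_output`, `fenced_step`, the 0.1 s freshness limit, the value ranges) is hypothetical and chosen only for illustration; this is not the AADL-based framework described in this post.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    value: float      # sensor reading handed to the learning component
    timestamp: float  # time the reading was taken, in seconds


def input_ok(sample, now, max_age_s, lo, hi):
    """Inbound fence: the data must be fresh and within a plausible range."""
    fresh = 0.0 <= now - sample.timestamp <= max_age_s
    plausible = lo <= sample.value <= hi
    return fresh and plausible


def fence_output(command, lo, hi):
    """Outbound fence: clamp the command to an allowed envelope."""
    return max(lo, min(hi, command))


def fenced_step(component, sample, now, fallback):
    """Run a learning-enabled component only behind both fences.

    `component` is any callable mapping a reading to a command.
    The limits below are illustrative assumptions, not real vehicle limits.
    """
    if not input_ok(sample, now, max_age_s=0.1, lo=0.0, hi=60.0):
        return fallback  # stale or implausible input: do not trust the component
    return fence_output(component(sample.value), lo=-3.0, hi=2.0)
```

The component itself stays a black box. Only its inputs (correct and on time) and its outputs (within some notion of correctness) are checked, which is the point of fencing it off rather than verifying it directly.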

To address these questions, we described the various errors that can appear in CPS components and affect the learning process. We also provided theoretical tools that can be used to verify the presence of such errors. Our aim was to create a framework that operators of CPSs can use to assess their operation when using data-driven learning components. To do so, we adopted a divide-and-conquer approach that used the Architecture Analysis & Design Language (AADL) to create a representation of the system’s components, and their interconnections, to construct a modular environment that allowed for the inclusion of different detection and learning mechanisms. This approach supports full model-based development, including system specification, analysis, system tuning, integration, and upgrade over the lifecycle.

We used a UAV system to illustrate how errors propagate through system components when adversaries attack the learning processes and to obtain safety tolerance thresholds. We focused only on specific learning algorithms and detection mechanisms. We then investigated their properties of convergence, as well as the errors that can disrupt these properties.

The results of this investigation provide the starting point for a CPS designer’s guide to using AADL for system-level analysis, tuning, and upgrade in a modular fashion. This guide could comprehensively describe the different errors in the learning processes during system operation. With these descriptions, the designer can automatically verify the proper operation of the CPS by quantifying marginal errors and integrating the system into AADL to evaluate critical properties in all lifecycle phases. To learn more about our approach, I encourage you to read the paper A Modular Approach to Verification of Learning Components in Cyber-Physical Systems.

Mapping Fault and Attack Scenarios to Corresponding Detection Mechanisms

UAVs have become more susceptible to both stochastic faults (stemming from faults occurring on the different components comprising the system) and malicious attacks that compromise either the physical components (sensors, actuators, airframe, etc.) or the software coordinating their operation. Different research communities, using an assortment of tools that are often incompatible with each other, have been investigating the causes and effects of faults that occur in UAVs. In this research thread, we sought to identify the core properties and elements of these approaches to decompose them and thereby enable designers of UAV systems to consider all the different results on faults and the associated detection techniques through an integrated algorithmic approach. In other words, if your system is under attack, how do you select the best mechanism for detecting that attack?

The issue of faults and attacks on UAVs has been widely studied, and a number of taxonomies have been proposed to help engineers design mitigation strategies for various attacks. In our view, however, these taxonomies were insufficient. We proposed a decision process made of two elements: first, a mapping from fault or attack scenarios to abstract error types, and second, a survey of detection mechanisms based on the abstract error types they help detect. Using this approach, designers could use both elements to select a detection mechanism to protect the system.

To classify the attacks on UAVs, we created a list of component compromises, focusing on those that reside at the intersection of the physical and the digital realms. The list is far from comprehensive, but it is sufficient for representing the major qualities that describe the effects of these attacks on the system. We contextualized the list in terms of attacks and faults on sensing, actuating, and communication components, and more complex attacks targeting multiple elements to cause system-wide errors.

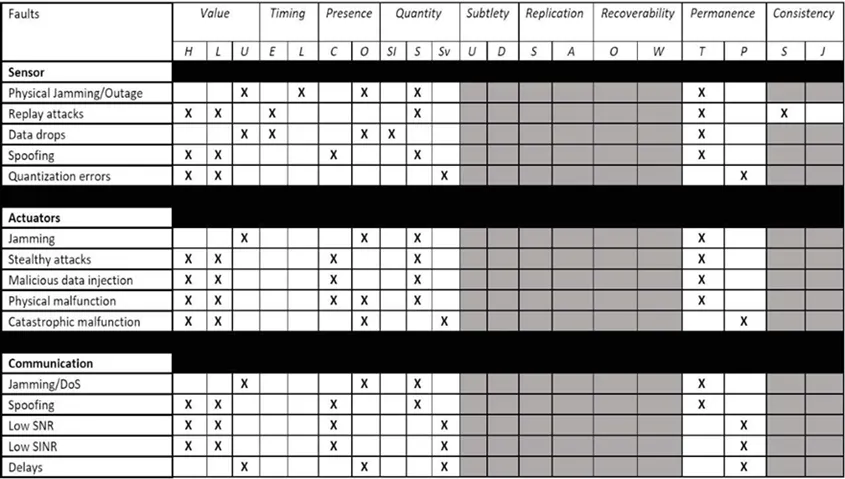

Using this list of attacks on UAVs and those on UAV platforms, we next identified their properties in terms of the taxonomy criteria introduced by the SEI’s Sam Procter and Peter Feiler in The AADL Error Library: An Operationalized Taxonomy of System Errors. Their taxonomy provides a set of terms to describe errors based on their class: value, timing, quantity, etc. Table 1 presents a subset of those classes as they apply to UAV faults and attacks.

Figure 1: Classification of Attacks and Faults on UAVs Based on the EMV2 Error Taxonomy

We then created a taxonomy of detection mechanisms that included statistics-based, sample-based, and Bellman-based intrusion detection systems. We related these mechanisms to the attacks and faults taxonomy. Using these examples, we developed a decision-making process and illustrated it with a scenario involving a UAV system. In this scenario, the vehicle undertook a mission in which it faced a high probability of being subject to an acoustic injection attack.

In such an attack, an analyst would refer to the table containing the properties of the attack and choose the abstract attack class of the acoustic injection from the attack taxonomy. Given the nature of the attack, the appropriate choice would be the spoofing sensor attack. Based on the properties given by the attack taxonomy table, the analyst would be able to identify the key characteristics of the attack. Cross-referencing the properties of the attack with the span of detectable characteristics of the different intrusion detection mechanisms will determine the subset of mechanisms that will be effective in environments with those types of attacks.
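The cross-referencing step can be sketched as two table lookups followed by a set comparison. Only the pairing of acoustic injection with sensor spoofing and the three detector family names come from the text above. Every other table entry, including which error types each detector covers, is a placeholder assumption made up for this example and should not be read as the taxonomy's actual content.

```python
# Scenario -> abstract attack class.
SCENARIO_TO_CLASS = {
    "acoustic injection": "sensor spoofing",   # pairing described in the text
    "radio jamming": "communication denial",   # hypothetical entry
}

# Abstract attack class -> error types it produces (placeholder values).
CLASS_TO_ERROR_TYPES = {
    "sensor spoofing": {"value"},
    "communication denial": {"timing", "quantity"},
}

# Detector family -> error types it can detect (placeholder values).
DETECTOR_COVERAGE = {
    "statistics-based": {"value"},
    "sample-based": {"timing", "quantity"},
    "Bellman-based": {"value", "timing"},
}


def select_detectors(scenario):
    """Return the detector families whose coverage includes every error
    type associated with the given fault or attack scenario."""
    attack_class = SCENARIO_TO_CLASS[scenario]
    needed = CLASS_TO_ERROR_TYPES[attack_class]
    return sorted(
        name
        for name, covered in DETECTOR_COVERAGE.items()
        if needed <= covered  # subset test: detector covers all needed types
    )
```

With these placeholder tables, `select_detectors("acoustic injection")` returns `['Bellman-based', 'statistics-based']`. Keeping the two mappings separate mirrors the two-element decision process: the scenario table and the detector survey can be revised independently.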

In this research thread, we created a tool that can help UAV operators select the appropriate detection mechanisms for their system. Future work will focus on implementing the proposed taxonomy on a specific UAV platform, where the exact sources of the attacks and faults can be explicitly identified at a low architectural level. To learn more about our work on this research thread, I encourage you to read the paper Towards Intelligent Security for Unmanned Aerial Vehicles: A Taxonomy of Attacks, Faults, and Detection Mechanisms.

Defining a Security Index of the Ability to Detect Tampering by Monitoring Specific Physical Parameters

CPSs have gradually become large scale and decentralized in recent years, and they rely more and more on communication networks. This high-dimensional and decentralized structure increases the exposure to malicious attacks that can cause faults, failures, and even significant damage. Research efforts have been made on the cost-efficient placement or allocation of actuators and sensors. However, most of these developed methods mainly consider controllability or observability properties and do not take into account the security aspect.

Motivated by this gap, we considered in this research thread the dependence of CPS security on the potentially compromised actuators and sensors, in particular, on deriving a security measure under both actuator and sensor attacks. The topic of CPS security has received increasing attention recently, and different security indices have been developed. The first kind of security measure is based on reachability analysis, which quantifies the size of reachable sets (i.e., the sets of all states reachable by dynamical systems with admissible inputs). To date, however, little work has quantified reachable sets under malicious attacks and used the developed security metrics to guide actuator and sensor selection. The second kind of security index is defined as the minimal number of actuators and/or sensors that attackers need to compromise without being detected.

In this research thread, we developed a generic actuator security index. We also proposed graph-theoretic conditions for computing the index with the help of maximum linking and the generic normal rank of the corresponding structured transfer function matrix. Our contribution here was twofold. We provided conditions for the existence of dynamical and perfect undetectability. In terms of perfect undetectability, we proposed a security index for discrete-time linear time-invariant (LTI) systems under actuator and sensor attacks. Then, we developed a graph-theoretic approach for structured systems that is used to compute the security index by solving a min-cut/max-flow problem. For a detailed presentation of this work, I encourage you to read the paper A Graph-Theoretic Security Index Based on Undetectability for Cyber-Physical Systems.
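For readers unfamiliar with min-cut/max-flow, here is a generic sketch using the standard Edmonds-Karp algorithm. It is not the paper's construction: how a structured system is turned into a flow network is not described in this post, so the graph below (`example_graph`, with an `attacker` source, actuator nodes `a1` to `a3`, state nodes `x1` and `x2`, a `monitor` sink, and unit capacities) is entirely hypothetical. The sketch only shows the computational step, relying on the max-flow min-cut theorem: the maximum flow equals the capacity of the smallest cut separating source from sink.

```python
from collections import deque


def max_flow(capacity, source, sink):
    """Edmonds-Karp: push flow along shortest augmenting paths until none remain."""
    # residual[u][v] is the capacity still available on edge u -> v.
    residual = {u: dict(nbrs) for u, nbrs in capacity.items()}
    for u, nbrs in capacity.items():
        for v in nbrs:
            residual.setdefault(v, {}).setdefault(u, 0)  # add reverse edges

    flow = 0
    while True:
        # Breadth-first search for a path with spare capacity.
        parent = {source: None}
        queue = deque([source])
        while queue and sink not in parent:
            u = queue.popleft()
            for v, cap in residual[u].items():
                if cap > 0 and v not in parent:
                    parent[v] = u
                    queue.append(v)
        if sink not in parent:
            return flow  # no augmenting path left: flow is maximal

        # Find the bottleneck capacity along the path found.
        bottleneck = float("inf")
        v = sink
        while parent[v] is not None:
            bottleneck = min(bottleneck, residual[parent[v]][v])
            v = parent[v]

        # Update residual capacities along the path.
        v = sink
        while parent[v] is not None:
            u = parent[v]
            residual[u][v] -= bottleneck
            residual[v][u] += bottleneck
            v = u
        flow += bottleneck


# Hypothetical network with unit capacities (illustration only).
example_graph = {
    "attacker": {"a1": 1, "a2": 1, "a3": 1},
    "a1": {"x1": 1},
    "a2": {"x1": 1, "x2": 1},
    "a3": {"x2": 1},
    "x1": {"monitor": 1},
    "x2": {"monitor": 1},
}
```

For this toy graph, `max_flow(example_graph, "attacker", "monitor")` evaluates to 2, because the two edges into `monitor` form the smallest cut.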

Determining the Impact of Clock Offset on the Precision of Reinforcement Learning

A major challenge in autonomous CPSs is integrating more sensors and data without reducing the speed of performance. CPSs, such as cars, ships, and planes, all have timing constraints that can be catastrophic if missed. Complicating matters, timing acts in two directions: timing to react to external events and timing to engage with humans to ensure their safety. These conditions raise a number of challenges because timing, accuracy, and precision are characteristics key to ensuring trust in a system.

Methods for the development of safe-by-design systems have largely focused on the quality of the information in the network (i.e., on the mitigation of corrupted signals due either to stochastic faults or to malicious manipulation by adversaries). However, the decentralized nature of a CPS requires the development of methods that address timing discrepancies among its components. Issues of timing have been addressed in control systems to assess their robustness against such faults, yet the effects of timing issues on learning mechanisms are rarely considered.

Motivated by this fact, our work on this research thread investigated the behavior of a system with reinforcement learning (RL) capabilities under clock offsets. We focused on the derivation of guarantees of convergence for the corresponding learning algorithm, given that the CPS suffers from discrepancies in the control and measurement timestamps. In particular, we investigated the effect of sensor-actuator clock offsets on RL-enabled CPSs. We considered an off-policy RL algorithm that receives data from the system’s sensors and actuators and uses them to approximate a desired optimal control policy.

Nevertheless, owing to timing mismatches, the control-state data obtained from these system components were inconsistent and raised questions about RL robustness. After an extensive analysis, we showed that RL does retain its robustness in an epsilon-delta sense. Given that the sensor-actuator clock offsets are not arbitrarily large and that the behavioral control input satisfies a Lipschitz continuity condition, RL converges epsilon-close to the desired optimal control policy. A two-link manipulator example clarified and verified our theoretical findings. For a complete discussion of this work, I encourage you to read the paper Impact of Sensor and Actuator Clock Offsets on Reinforcement Learning.
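One way to read the two conditions, using notation that is assumed here and does not come from the paper: let $u$ be the behavioral control input with Lipschitz constant $L$, and let $\delta$ be the sensor-actuator clock offset, bounded in magnitude by $\bar{\delta}$. A state sample stamped $t$ is then paired with the control value from time $t+\delta$ instead of $t$, and the mismatch between the two is bounded:

```latex
\[
\| u(t+\delta) - u(t) \| \;\le\; L\,|\delta| \;\le\; L\,\bar{\delta}
\qquad \text{whenever } |\delta| \le \bar{\delta}.
\]
```

Under these assumptions, the error in the control-state data shrinks with the offset bound. A bounded input perturbation of this kind is the sort of premise an epsilon-delta robustness statement starts from. The convergence argument itself is in the paper and is not reproduced here.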

Building a Chain of Trust in CPS Architecture

In conducting this research, the SEI has made some contributions in the field of CPS architecture. First, we extended AADL to make a formal semantics we can use not only to simulate a model in a very precise way, but also to verify properties on AADL models. That work enables us to reason about the architecture of CPSs. One outcome of this reasoning relevant to assuring autonomous CPSs was the idea of establishing a “fence” around vulnerable components. However, we still needed to perform fault detection to make sure inputs are not incorrect or tampered with and outputs are not invalid.

Fault detection is where our collaborators from Georgia Tech made key contributions. They have done great work on statistics-based techniques for detecting faults and developed techniques that use reinforcement learning to build fault detection mechanisms. These mechanisms look for specific patterns that represent either a cyber attack or a fault in the system. They have also addressed the question of recursion in situations in which a learning component learns from another learning component (which may itself be wrong). Kyriakos Vamvoudakis of Georgia Tech’s Daniel Guggenheim School of Aerospace Engineering worked out how to use architecture patterns to address these questions by expanding the fence around these components. This work helped us implement and test fault detection, isolation, and recording mechanisms on use-case missions that we implemented on a UAV platform.

We have learned that if you do not have a good CPS architecture (one that is modular, meets desired properties, and isolates fault tolerance), you must have a big fence. You have to do more processing to verify the system and gain trust. On the other hand, if you have an architecture that you can verify is amenable to these fault tolerance techniques, then you can add in the fault isolation tolerances without degrading performance. It is a tradeoff.

One of the things we have been working on in this project is a collection of design patterns that are known in the safety community for detecting and mitigating faults by using a simplex architecture to switch from one version of a component to another. We need to define the aforementioned tradeoff for each of those patterns. For instance, patterns will differ in the number of redundant components, and, as we know, more redundancy is more costly because we need more CPU, more wires, more energy. Some patterns will take more time to make a decision or switch from nominal mode to degraded mode. We are evaluating all these patterns, taking into consideration the cost to implement them in terms of resources (mostly hardware resources) and the timing aspect (the time between detecting an event and reconfiguring the system). These practical considerations are what we want to address: not just a formal semantics of AADL, which is nice for computer scientists, but also this tradeoff analysis made possible by providing a careful evaluation of every pattern that has been documented in the literature.
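To make the simplex idea concrete, here is a minimal sketch of the switching logic, assuming one advanced controller, one trusted baseline controller, and a safety check supplied by the designer. The function name `simplex_step`, its parameters, and the rule that the system stays degraded once it has switched are assumptions made for this illustration; documented simplex patterns differ in exactly these details.

```python
def simplex_step(state, advanced, baseline, is_safe, mode):
    """One control step of a simplex-style switch.

    advanced, baseline: callables mapping a state to a command.
    is_safe: callable (state, command) -> bool, the decision logic.
    mode: "nominal" or "degraded".
    Returns (command, new_mode).
    """
    if mode == "nominal":
        candidate = advanced(state)
        if is_safe(state, candidate):
            return candidate, "nominal"
    # Either already degraded or the advanced command was rejected:
    # fall back to the trusted baseline and stay in degraded mode
    # (a simplifying assumption; real patterns may allow recovery).
    return baseline(state), "degraded"
```

Even this small sketch exposes the tradeoff: the baseline controller is a redundant component that costs resources, and the time spent in `is_safe` plus the switch itself is the delay between detecting an event and reconfiguring the system.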

In future work, we want to address these larger questions:

- What do we do with models when we do model-based software engineering?

- How far can we go to build a toolbox so that designing a system can be supported by evidence during every phase?

- You want to build the architecture of a system, but can you make sense of a diagram?

- What can you say about the safety of the timing of the system?

The work is grounded on the vision of rigorous model-based systems engineering progressing from requirements to a model. Developers also need supporting evidence they can use to build a trust package for an external auditor, to demonstrate that the system they designed works. Ultimately, our goal is to build a chain of trust across all of a CPS’s engineering artifacts.