Cloudera is launching and expanding partnerships to create a new enterprise artificial intelligence (AI) ecosystem. Businesses increasingly recognize AI solutions as critical differentiators in competitive markets and are ready to invest heavily to streamline their operations, improve customer experiences, and boost top-line growth. That's why we're building an ecosystem of technology providers to make it easier, more economical, and safer for our customers to maximize the value they get from AI.

At our recent Evolve Conference in New York we were extremely excited to announce our founding AI ecosystem partners: Amazon Web Services (“AWS”), NVIDIA, and Pinecone.

In addition to these founding partners we're also building tight integrations with our ecosystem accelerators: Hugging Face, the leading AI community and model hub, and Ray, the best-in-class compute framework for AI workloads.

In this post we'll give you an overview of these new and expanded partnerships and how we see them fitting into the emerging AI technology stack that supports the AI application lifecycle.

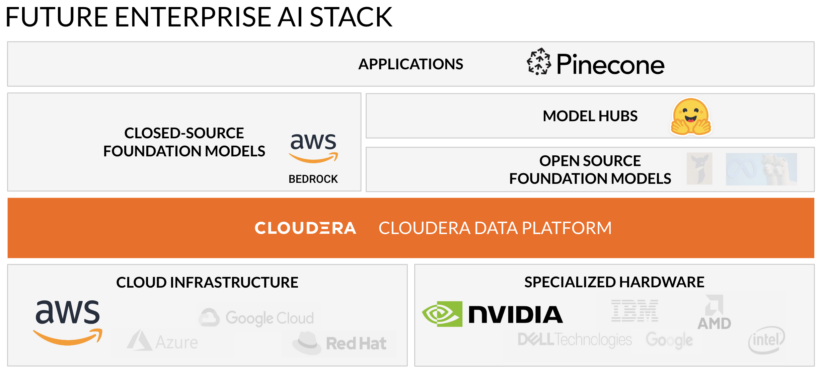

We'll start with the enterprise AI stack. We see AI applications like chatbots being built on top of closed-source or open-source foundational models. Those models are trained or augmented with data from a data management platform. The data management platform, models, and end applications are powered by cloud infrastructure and/or specialized hardware. In a stack that includes Cloudera Data Platform, the applications and underlying models can also be deployed from the data management platform via Cloudera Machine Learning.

Here's the future enterprise AI stack with our founding ecosystem partners and accelerators highlighted:

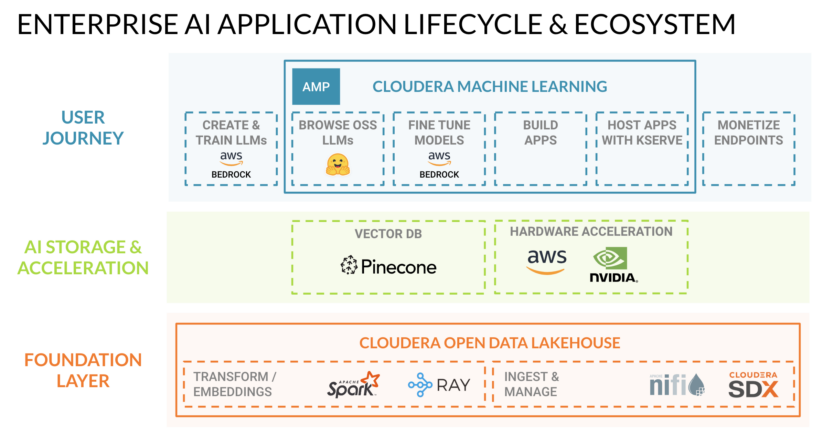

This is how we view that same stack supporting the enterprise AI application lifecycle:

Let's use a simple example to explain how this ecosystem enables the AI application lifecycle:

- A company wants to deploy a support chatbot to decrease operational costs and improve customer experiences.

- They can select the best foundational LLM for the job from Amazon Bedrock (accessed via API call) or Hugging Face (accessed via download) using Cloudera Machine Learning (“CML”).

- Then they can build the application on CML using frameworks like Flask.

- They can improve the accuracy of the chatbot's responses by checking each question against embeddings stored in Pinecone's vector database and then enhancing the question with data from Cloudera Open Data Lakehouse (more on how this works below).

- Finally they can deploy the application using CML's containerized compute sessions powered by NVIDIA GPUs or AWS Inferentia, specialized hardware that improves inference performance while reducing costs.
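The retrieval step in this example (matching a question against stored embeddings, then enriching the prompt with the matching data) can be sketched in a few lines. This is only an illustration under loud assumptions: the bag-of-words `embed` function stands in for a real embedding model, the in-memory `INDEX` list stands in for a vector database such as Pinecone, and the knowledge-base sentences and all function names are hypothetical. None of it is Pinecone's, Amazon Bedrock's, or CML's API.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy embedding: a bag-of-words count vector (assumed stand-in for a real model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question, index, top_k=1):
    """Return the top_k stored passages most similar to the question."""
    q = embed(question)
    ranked = sorted(index, key=lambda item: cosine(q, item[0]), reverse=True)
    return [text for _, text in ranked[:top_k]]


def build_prompt(question, passages):
    """Prepend the retrieved passages to the question before it goes to the LLM."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


# Hypothetical knowledge base; in the scenario above this data would come
# from the lakehouse and be indexed in a vector database.
KNOWLEDGE_BASE = [
    "Password resets are done from the account settings page.",
    "Refunds are issued within five business days of approval.",
    "Support is available by chat on weekdays.",
]
INDEX = [(embed(doc), doc) for doc in KNOWLEDGE_BASE]

question = "How long do refunds take?"
passages = retrieve(question, INDEX, top_k=1)
prompt = build_prompt(question, passages)
print(prompt)
```

In a real deployment the augmented prompt would then be sent to the selected foundational model; the sketch stops before that call because the model endpoint depends on which provider is chosen.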

Read on to learn more about how each of our founding partners and accelerators is collaborating with Cloudera to make it easier, more economical, and safer for our customers to maximize the value they get from AI.

Founding AI ecosystem partners | NVIDIA, AWS, Pinecone

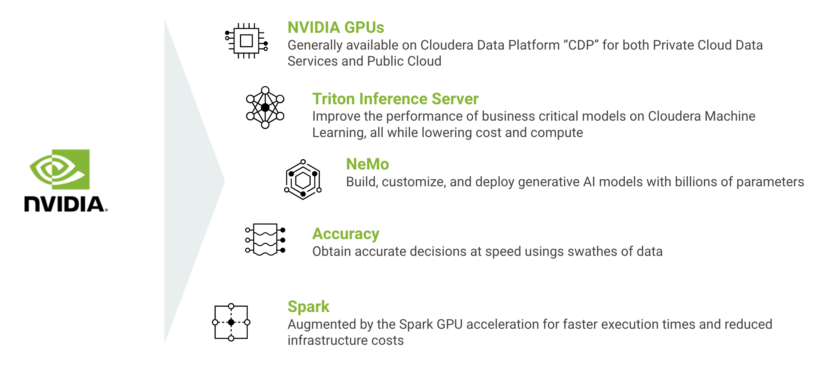

NVIDIA | Specialized Hardware

Highlights:

NVIDIA GPUs are already available in Cloudera Data Platform (CDP), allowing Cloudera customers to get eight times the performance on data engineering workloads at less than 50 percent incremental cost relative to modern CPU-only alternatives. This new phase of technology collaboration builds on that success by adding key capabilities across the AI application lifecycle in these areas:

- Accelerate AI and machine learning workloads in Cloudera on Public Cloud and on-premises using NVIDIA GPUs

- Accelerate data pipelines with GPUs in Cloudera Private Cloud

- Deploy AI models in CML using NVIDIA Triton Inference Server

- Accelerate generative AI models in CML using NVIDIA NeMo

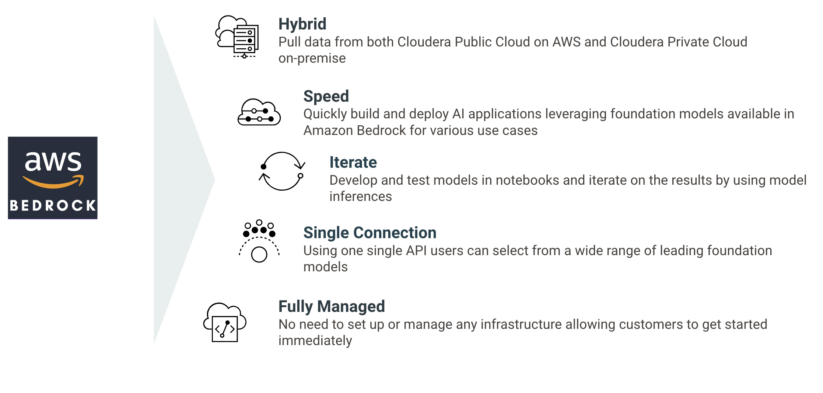

Amazon Bedrock | Closed-Source Foundational Models

Highlights:

We are building generative AI capabilities in Cloudera using the power of Amazon Bedrock, a fully managed serverless service. Customers can quickly and easily build generative AI applications using these new features available in Cloudera.

With the general availability of Amazon Bedrock, Cloudera is releasing its latest Applied ML Prototype (AMP) built in Cloudera Machine Learning: the CML Text Summarization AMP built using Amazon Bedrock. Using this AMP, customers can use foundation models available in Amazon Bedrock for text summarization of data managed both in Cloudera Public Cloud on AWS and Cloudera Private Cloud on-premises. More information can be found in our blog post here.

Cloudera is working on integrations of AWS Inferentia and AWS Trainium-powered Amazon EC2 instances into the Cloudera Machine Learning service (“CML”). This will give CML customers the ability to spin up isolated compute sessions using these powerful and efficient accelerators purpose-built for AI workloads. More information can be found in our blog post here.

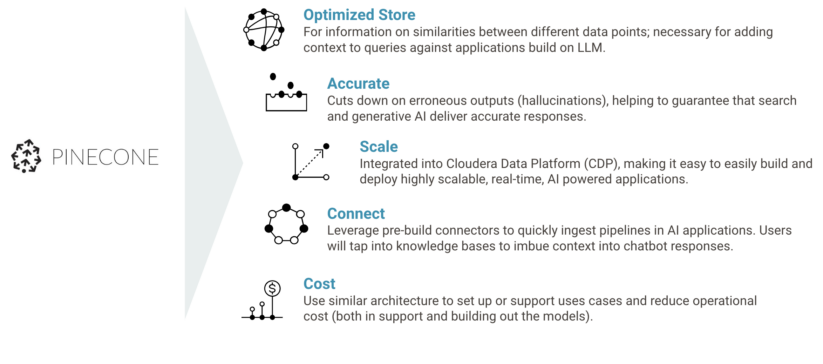

Pinecone | Vector Database

Highlights:

The partnership will see Cloudera integrate Pinecone's best-in-class vector database into Cloudera Data Platform (CDP), enabling organizations to easily build and deploy highly scalable, real-time, AI-powered applications on Cloudera.

This includes the release of a new Applied ML Prototype (AMP) that will allow developers to quickly create and augment new knowledge bases from data on their own website, as well as pre-built connectors that will enable customers to quickly set up ingest pipelines in AI applications.

In the AMP, Pinecone's vector database uses these knowledge bases to imbue context into chatbot responses, ensuring useful outputs. More information on this AMP and how vector databases add context to AI applications can be found in our blog post here.

AI ecosystem accelerators | Hugging Face, Ray

Highlights:

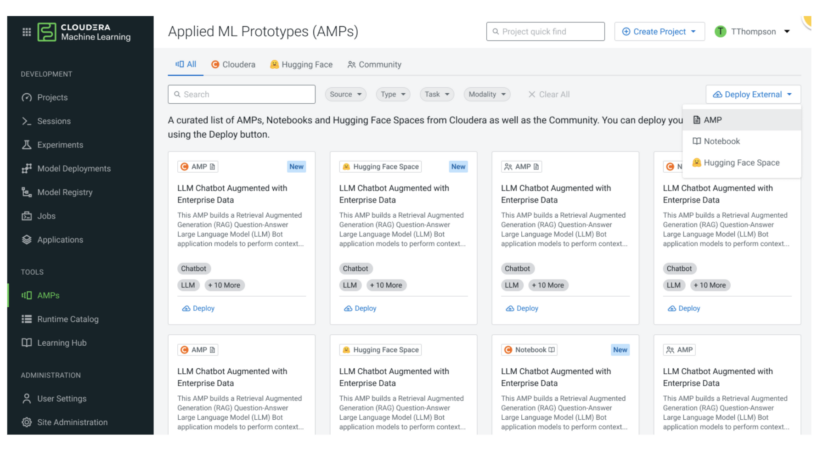

Cloudera is integrating Hugging Face's market-leading range of LLMs, generative AI, and traditional pre-trained machine learning models and datasets into Cloudera Data Platform so customers can significantly reduce time-to-value in deploying AI applications. Cloudera and Hugging Face plan to do this with three key integrations:

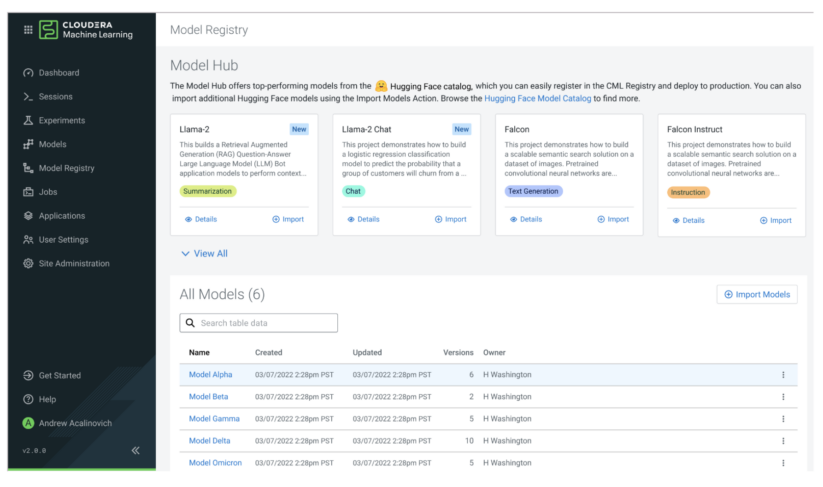

Hugging Face Models Integration: Import and deploy any of Hugging Face's models from Cloudera Machine Learning (CML) with a single click.

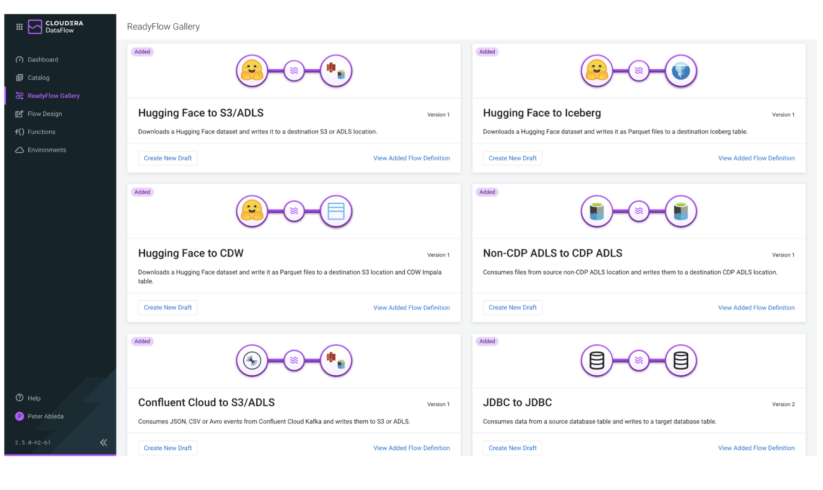

Hugging Face Datasets Integration: Import any of Hugging Face's datasets via pre-built Cloudera DataFlow ReadyFlows into Iceberg tables in Cloudera Data Warehouse (CDW) with a single click.

Hugging Face Spaces Integration: Import and deploy any of Hugging Face's Spaces (pre-built web applications for small-scale ML demos) via Cloudera Machine Learning with a single click. These will complement CML's already robust catalog of Applied Machine Learning Prototypes (AMPs), which allow developers to quickly launch pre-built AI applications, including an LLM Chatbot developed using an LLM from Hugging Face.

Ray | Distributed Compute Framework

Lost in the talk about OpenAI is the tremendous amount of compute needed to train and fine-tune LLMs, like GPT, and generative AI, like ChatGPT. Each iteration requires more compute, and the limitation imposed by Moore's Law quickly moves that task from single compute instances to distributed compute. To accomplish this, OpenAI has employed Ray to power the distributed compute platform used to train each release of the GPT models. Ray has emerged as a popular framework because of its superior performance over Apache Spark for distributed AI compute workloads.

Ray can be used in Cloudera Machine Learning's open-by-design architecture to bring fast distributed AI compute to CDP. This is enabled by a Ray module in the cmlextensions Python package published by our team. More information about Ray and how to deploy it in Cloudera Machine Learning can be found in our blog post here.