As the months of 2024 unfold, we are all part of an extraordinary year for the history of both democracy and technology. More countries and people will vote for their elected leaders than in any year in human history. At the same time, the development of AI is racing ever faster ahead, offering extraordinary benefits but also enabling bad actors to deceive voters by creating realistic “deepfakes” of candidates and other individuals. The contrast between the promise and peril of new technology has seldom been more striking.

This has quickly become a year that requires all of us who care about democracy to work together to meet the moment.

Today, the tech sector came together at the Munich Security Conference to take a vital step forward. Standing together, 20 companies [1] announced a new Tech Accord to Combat Deceptive Use of AI in 2024 Elections. Its goal is straightforward but critical: to combat video, audio, and images that fake or alter the appearance, voice, or actions of political candidates, election officials, and other key stakeholders. It is not a partisan initiative, nor is it designed to discourage free expression. It aims instead to ensure that voters retain the right to choose who governs them, free of this new type of AI-based manipulation.

The challenges are formidable, and our expectations must be realistic. But the accord represents a rare and decisive step, unifying the tech sector with concrete voluntary commitments at a vital time to help protect the elections that will take place in more than 65 nations between the beginning of March and the end of the year.

While many more steps will be needed, today marks the launch of a genuinely global initiative to take immediate practical steps and generate more and broader momentum.

What is the problem we are trying to solve?

It’s worth starting with the problem we need to solve. New generative AI tools make it possible to create realistic and convincing audio, video, and images that fake or alter the appearance, voice, or actions of people. They’re often called “deepfakes.” The costs of creation are low, and the results are stunning. The AI for Good Lab at Microsoft first demonstrated this for me last year when they took off-the-shelf products, spent less than $20 on computing time, and created realistic videos that not only put new words in my mouth, but had me using them in speeches in Spanish and Mandarin that matched the sound of my voice and the movement of my lips.

In reality, I struggle with French and sometimes stumble even in English. I can’t speak more than a few words in any other language. But to someone who doesn’t know me, the videos appeared genuine.

AI is bringing a new and potentially more dangerous form to the manipulation we’ve been working to address for more than a decade, from fake websites to bots on social media. In recent months, the broader public has quickly witnessed this expanding problem and the risks it creates for our elections. In advance of the New Hampshire primary, voters received robocalls that used AI to fake the voice and words of President Biden. This followed the documented release, beginning in December, of multiple deepfake videos of UK Prime Minister Rishi Sunak. These are similar to deepfake videos the Microsoft Threat Analysis Center (MTAC) has traced to nation-state actors, including a Russian state-sponsored effort to splice fake audio segments into excerpts of genuine news videos.

This all adds up to a growing risk of bad actors using AI and deepfakes to deceive the public in an election. And this goes to a cornerstone of every democratic society in the world: the ability of an accurately informed public to choose the leaders who will govern them.

This deepfake challenge connects two parts of the tech sector. The first is companies that create AI models, applications, and services that can be used to create realistic video, audio, and image-based content. The second is companies that run consumer services where individuals can distribute deepfakes to the public. Microsoft works in both spaces. We develop and host AI models and services on Azure in our datacenters, create synthetic voice technology, offer image creation tools in Copilot and Bing, and provide applications like Microsoft Designer, a graphic design app that enables people to easily create high-quality images. And we operate hosted consumer services including LinkedIn and our Gaming network, among others.

This has given us visibility into the full evolution of the problem and the potential for new solutions. As we’ve seen the problem grow, the data scientists and engineers in our AI for Good Lab and the analysts in MTAC have directed more of their focus, including with the use of AI, to identifying deepfakes, tracking bad actors, and analyzing their tactics, techniques, and procedures. In some respects, we’ve seen practices we’ve long combated in other contexts through the work of our Digital Crimes Unit, including activities that reach into the dark web. While the deepfake challenge will be difficult to defeat, this has persuaded us that we have many tools we can put to work quickly.

Like many other technology issues, our most basic challenge is not technical but altogether human. As the months of 2023 drew to a close, deepfakes had become a growing topic of conversation in capitals around the world. But while everyone seemed to agree that something needed to be done, too few people were doing enough, especially on a collaborative basis. And with elections looming, it felt like time was running out. That need for a new sense of urgency, as much as anything, sparked the collaborative work that has led to the accord launched today in Munich.

What is the tech sector announcing today, and will it make a difference?

I believe this is an important day, culminating hard work by good people in many companies across the tech sector. The new accord brings together companies from both relevant parts of our industry: those that create AI services that can be used to create deepfakes and those that run hosted consumer services where deepfakes can spread. While the challenge is formidable, this is a vital step that will help better protect the elections that will take place this year.

It’s helpful to walk through what this accord does, and how we’ll move immediately to implement it at Microsoft.

The accord focuses explicitly on a concretely defined set of deepfake abuses. It addresses “Deceptive AI Election Content,” which is defined as “convincing AI-generated audio, video, and images that deceptively fake or alter the appearance, voice, or actions of political candidates, election officials, and other key stakeholders in a democratic election, or that provide false information to voters about when, where, and how they can lawfully vote.”

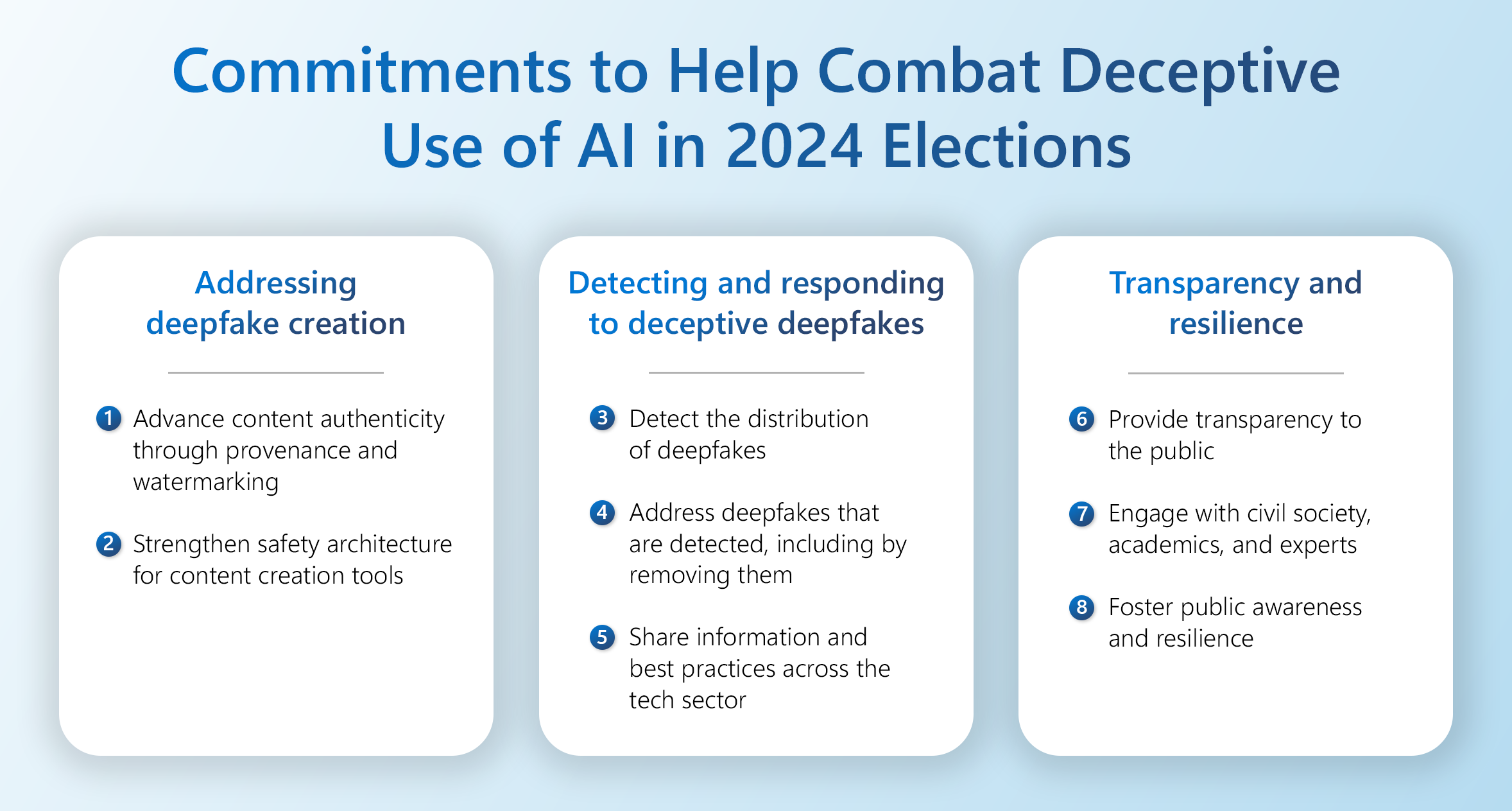

The accord addresses this content abuse through eight specific commitments, and they’re all worth reading. To me, they fall into three critical buckets worth thinking more about:

First, the accord’s commitments will make it more difficult for bad actors to use legitimate tools to create deepfakes. The first two commitments in the accord advance this goal. In part, this focuses on the work of companies that create content generation tools and calls on them to strengthen the safety architecture in AI services by assessing risks and strengthening controls to help prevent abuse. This includes measures such as ongoing red team analysis, preemptive classifiers, the blocking of abusive prompts, automated testing, and rapid bans of users who abuse the system. All of this needs to be based on strong and broad-based data analysis. Think of this as safety by design.

This also focuses on the authenticity of content by advancing what the tech sector refers to as content provenance and watermarking. Video, audio, and image design products can incorporate content provenance features that attach metadata or embed signals in the content they produce, with information about who created it, when it was created, and the product that was used, including the involvement of AI. This can help media organizations and even consumers better separate authentic from inauthentic content. And the good news is that the industry is moving quickly to rally around a common approach, the C2PA standard, to help advance this.
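The accord describes provenance at the level of what gets recorded, not how it is bound to the content. To make the underlying idea concrete, here is a minimal Python sketch, under stated assumptions, of tying such claims (creator, creation time, tool, AI involvement) to a file with a SHA-256 hash and a signature, so that editing either the claims or the content is detectable. It is not the C2PA manifest format: C2PA uses certificate-based digital signatures, whereas this sketch swaps in a shared-secret HMAC for brevity, and `SIGNING_KEY`, `make_manifest`, `verify_manifest`, and the field names are all hypothetical.

```python
import hashlib
import hmac
import json

# Hypothetical demo key. Real C2PA manifests are signed with X.509
# certificate-based signatures, not a shared HMAC secret like this one.
SIGNING_KEY = b"demo-key-not-for-production"


def _sign(claims: dict) -> str:
    """Serialize the claims deterministically and compute an HMAC-SHA256 tag."""
    payload = json.dumps(claims, sort_keys=True).encode()
    return hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()


def make_manifest(content: bytes, creator: str, created: str,
                  tool: str, ai_generated: bool) -> dict:
    """Bind provenance claims to the exact content bytes via a SHA-256 hash."""
    claims = {
        "creator": creator,
        "created": created,
        "tool": tool,
        "ai_generated": ai_generated,
        "content_sha256": hashlib.sha256(content).hexdigest(),
    }
    return {"claims": claims, "signature": _sign(claims)}


def verify_manifest(content: bytes, manifest: dict) -> bool:
    """True only if the claims are unmodified and still match the content."""
    claims = manifest["claims"]
    if not hmac.compare_digest(_sign(claims), manifest["signature"]):
        return False  # the claims were edited after signing
    return claims["content_sha256"] == hashlib.sha256(content).hexdigest()


if __name__ == "__main__":
    image = b"example image bytes"
    m = make_manifest(image, "Hypothetical campaign office",
                      "2024-02-16T09:00:00Z", "HypotheticalImageTool 1.0",
                      ai_generated=True)
    print(verify_manifest(image, m))          # True
    print(verify_manifest(image + b"x", m))   # False: content was altered
```

Because the hash covers the exact bytes, even a one-byte change breaks verification; the flip side is that stripping the manifest entirely leaves nothing to verify.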

But provenance is not sufficient by itself, because bad actors can use other tools to strip this information from content. As a result, it is important to add other methods, like embedding an invisible watermark alongside C2PA signed metadata, and to explore ways to detect content even after these signals are removed or degraded, such as by fingerprinting an image with a unique hash that might allow people to match it with a provenance record in a secure database.
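Matching a stripped or re-encoded image back to a provenance record needs a fingerprint that survives small changes, which a cryptographic hash such as SHA-256 does not. One common textbook technique is a perceptual “average hash,” sketched below as an illustration of the general idea rather than any system the accord’s signatories actually use. It assumes the image has already been decoded into a 2D list of grayscale values; the in-memory `registry` dict stands in for the secure database, and the function names, the 5-bit distance threshold, and the record text are hypothetical.

```python
from typing import Dict, List, Optional

Image = List[List[int]]  # grayscale pixels 0-255, rows of equal length


def average_hash(pixels: Image, size: int = 8) -> int:
    """Downscale to size x size by block averaging, then emit one bit per
    cell: 1 if the cell is brighter than the mean of all cells, else 0."""
    h, w = len(pixels), len(pixels[0])
    if h < size or w < size:
        raise ValueError("image must be at least size x size pixels")
    cells = []
    for by in range(size):
        for bx in range(size):
            y0, y1 = by * h // size, (by + 1) * h // size
            x0, x1 = bx * w // size, (bx + 1) * w // size
            block = [pixels[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            cells.append(sum(block) / len(block))
    mean = sum(cells) / len(cells)
    bits = 0
    for value in cells:  # row-major; the first cell becomes the top bit
        bits = (bits << 1) | (1 if value > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two fingerprints."""
    return bin(a ^ b).count("1")


def lookup(fingerprint: int, registry: Dict[int, str],
           max_distance: int = 5) -> Optional[str]:
    """Return the closest registered record, or None if none is close enough."""
    best, best_dist = None, max_distance + 1
    for known, record in registry.items():
        d = hamming(fingerprint, known)
        if d < best_dist:
            best, best_dist = record, d
    return best


if __name__ == "__main__":
    # A synthetic 32x32 horizontal gradient stands in for a registered image.
    original = [[(x * 8) % 256 for x in range(32)] for _ in range(32)]
    registry = {average_hash(original): "record-0001 (hypothetical)"}

    # Simulate mild degradation: brighten every pixel and add small noise.
    degraded = [[min(255, p + 3 + (x + y) % 3) for x, p in enumerate(row)]
                for y, row in enumerate(original)]
    print(lookup(average_hash(degraded), registry))  # record-0001 (hypothetical)
```

Matching by Hamming distance rather than exact equality is what lets the lookup tolerate mild brightness changes and noise; production systems typically use more robust fingerprints, and the threshold trades false matches against missed ones.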

Today’s accord helps move the tech sector farther and faster in committing to, innovating in, and adopting these technological approaches. It builds on the voluntary White House commitments first embraced by several companies in the United States this past July and on the European Union Digital Services Act’s focus on the integrity of electoral processes. At Microsoft, we are working to accelerate our work in these areas across our products and services. And next month we are launching new Content Credentials as a Service to help support political candidates around the world, backed by a dedicated Microsoft team.

I’m encouraged by the fact that, in many ways, all these new technologies represent the latest chapter of work we’ve been pursuing at Microsoft for more than 25 years. When CD-ROMs and then DVDs became popular in the early 1990s, counterfeiters sought to deceive the public and defraud consumers by creating realistic-looking fake versions of popular Microsoft products.

We responded with an evolving array of increasingly sophisticated anti-counterfeiting features, including invisible physical watermarking, that are the forerunners of the digital protection we’re advancing today. Our Digital Crimes Unit developed approaches that put it at the global forefront in using these features to protect against one generation of technology fakes. While it’s always impossible to eradicate any form of crime completely, we can again call on these teams and this spirit of determination and collaboration to put today’s advances to effective use.

Second, the accord brings the tech sector together to detect and respond to deepfakes in elections. This is an essential second category, because the harsh reality is that determined bad actors, perhaps especially well-resourced nation-states, will invest in their own innovations and tools to create deepfakes and use these to try to disrupt elections. As a result, we must assume that we’ll need to invest in collective action to detect and respond to this activity.

The third and fourth commitments in today’s accord will advance the industry’s detection and response capabilities. At Microsoft, we are moving immediately in both areas. On the detection front, we are harnessing the data science and technical capabilities of our AI for Good Lab and MTAC team to better detect deepfakes on the internet. We will call on the expertise of our Digital Crimes Unit to invest in new threat intelligence work to pursue the early detection of AI-powered criminal activity.

We are also launching, effective immediately, a new web page, Microsoft-2024 Elections, where a political candidate can report to us a concern about a deepfake of themselves. In essence, this empowers political candidates around the world to help with the global detection of deepfakes.

We are combining this work with the launch of an expanded Digital Safety Unit. This will extend the work of our existing digital safety team, which has long addressed abusive online content and conduct that impacts children or that promotes extremist violence, among other categories. This team has particular expertise in responding, on a 24/7 basis, to weaponized content from mass shootings, which we act immediately to remove from our services.

We are deeply committed to the importance of free expression, but we do not believe this should protect deepfakes or other deceptive AI election content covered by today’s accord. We therefore will act quickly to remove and ban this type of content from LinkedIn, our Gaming network, and other relevant Microsoft services consistent with our policies and practices. At the same time, we will promptly publish a policy that makes clear our standards and approach, and we will create an appeals process that will move quickly if a user believes their content was removed in error.

Equally important, as addressed in the accord’s fifth commitment, we are dedicated to sharing with the rest of the tech sector and appropriate NGOs information about the deepfakes we detect and the best practices and tools we help develop. We are committed to advancing stronger collective action, which has proven indispensable in protecting children and addressing extremist violence on the internet. We deeply respect and appreciate the work that other tech companies and NGOs have long advanced in these areas, including through the Global Internet Forum to Counter Terrorism, or GIFCT, and with governments and civil society under the Christchurch Call.

Third, the accord will help advance transparency and build societal resilience to deepfakes in elections. The final three commitments in the accord address the need for transparency and the broad resilience we must foster across the world’s democracies.

As reflected in the accord’s sixth commitment, we support the need for public transparency about our corporate and broader collective work. This commitment to transparency will be part of the approach our Digital Safety Unit takes as it addresses deepfakes of political candidates and the other categories covered by today’s accord. It will also include a new annual transparency report, which we will publish, covering our policies and data about how we are applying them.

The accord’s seventh commitment obliges the tech sector to continue to engage with a diverse set of global civil society organizations, academics, and other subject matter experts. These groups and individuals play an indispensable role in the promotion and protection of the world’s democracies. For more than two centuries, they have been fundamental to the advance of democratic rights and principles, including their critical work to advance the abolition of slavery and the expansion of the right to vote in the United States.

We look forward, as a company, to continued engagement with these groups. When diverse groups come together, we do not always start with the same perspective, and there are days when the conversations can be challenging. But we appreciate from longstanding experience that one of the hallmarks of democracy is that people do not always agree with one another. Yet when people truly listen to differing views, they almost always learn something new. And from this learning comes a foundation for better ideas and greater progress. Perhaps more than ever, the issues that connect democracy and technology require a broad tent with room to listen to many different ideas.

This also provides a basis for the accord’s final commitment, which is support for work to foster public awareness and resilience regarding deceptive AI election content. As we’ve learned first-hand in recent elections in places as distant from each other as Finland and Taiwan, a savvy and informed public may provide the best defense of all against the risk of deepfakes in elections. One of our broad content provenance goals is to equip people with the ability to look easily for C2PA indicators that will denote whether content is authentic. But this will require public awareness efforts to help people learn where and how to look for them.

We’ll act quickly to implement this final commitment, including by partnering with other tech companies and supporting civil society organizations to help equip the public with the information needed. Stay tuned for new steps and announcements in the coming weeks.

Does today’s tech accord do everything that needs to be done?

This is the final question we should all ask as we consider the important step taken today. And, despite my enormous enthusiasm, I would be the first to say that this accord represents only one of the many vital steps we will need to take to protect elections.

In part this is because the challenge is formidable. The initiative requires new steps from a wide array of companies. Bad actors likely will innovate themselves, and the underlying technology is continuing to change quickly. We need to be massively ambitious but also realistic. We will need to continue to learn, innovate, and adapt. As a company and an industry, Microsoft and the tech sector will need to build upon today’s step and continue to invest in getting better.

But even more importantly, there is no way the tech sector can protect elections by itself from this new type of electoral abuse. And even if it could, it wouldn’t be proper. After all, we’re talking about the election of leaders in a democracy. And no one elected any tech executive or company to lead any country.

Once one reflects for even a moment on this most basic of propositions, it’s abundantly clear that the protection of elections requires that we all work together.

In many ways, this starts with our elected leaders and the democratic institutions they lead. The ultimate protection of any democratic society is the rule of law itself. And, as we’ve noted elsewhere, it’s critical that we implement existing laws and support the development of new laws to address this evolving problem. This means the world will need new initiatives by elected leaders to advance these measures.

Among other areas, this will be essential to address the use of AI deepfakes by well-resourced nation-states. As we’ve seen across the cybersecurity and cyber-influence landscapes, a small number of sophisticated governments are putting substantial resources and expertise into new types of attacks on individuals, organizations, and even countries. Arguably, on some days, cyberspace is the space where the rule of law is most under threat. And we’ll need more collective inter-governmental leadership to address this.

As we look to the future, it seems to those of us who work at Microsoft that we’ll also need new forms of multistakeholder action. We believe that initiatives like the Paris Call and the Christchurch Call have had a positive impact on the world precisely because they have brought people together from governments, the tech sector, and civil society to work on an international basis. As we address not only deepfakes but almost every other technology issue in the world today, we find it hard to believe that any one part of society can solve a big problem by acting alone.

This is why it’s so important that today’s accord recognizes explicitly that “the protection of electoral integrity and public trust is a shared responsibility and a common good that transcends partisan interests and national borders.”

Perhaps more than anything, this needs to be our North Star.

Only by working together can we preserve timeless values and democratic principles in a time of enormous technological change.

[1] Adobe, Amazon, Anthropic, ARM, ElevenLabs, Google, IBM, Inflection AI, LinkedIn, McAfee, Meta, Microsoft, Nota, OpenAI, Snap, Stability AI, TikTok, TrendMicro, TruePic, and X.