(sakkmesterke/Shutterstock)

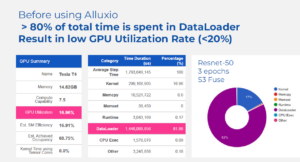

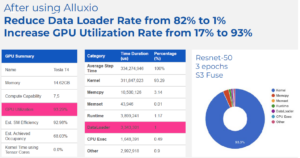

Customers that use the high-speed cache in the new Alluxio Enterprise AI platform can squeeze up to four times as much work out of their GPU setups as they could without it, Alluxio announced today. Alluxio also says the overall model training pipeline can be sped up by as much as 20x thanks to the data virtualization platform and its new DORA architecture.

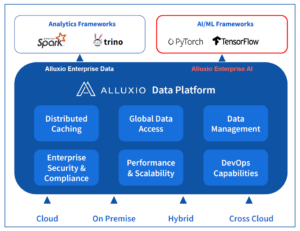

Alluxio is better known in the advanced analytics market than in the AI and machine learning (ML) market, which is a result of where it debuted in the data stack. Originally developed as a sister project to Apache Spark by Haoyuan “HY” Li, who was also a co-creator of Spark at UC Berkeley’s AMPLab, Alluxio gained traction by serving as a distributed file system in modern hybrid cloud environments. The product’s real forte was providing an abstraction layer to accelerate I/O for data moving between storage repositories like HDFS, S3, MinIO, and ADLS and processing engines like Spark, Trino, Presto, and Hive.

By providing a single global namespace across hybrid cloud environments built around the precepts of separation of compute and storage, Alluxio reduces complexity, bolsters efficiency, and simplifies data management for enterprise clients with sprawling data estates measuring in the hundreds of petabytes.

As deep learning matured, the folks at Alluxio realized that an opportunity existed to apply its core IP to help optimize the flow of data and compute for AI workloads too, predominantly computer vision use cases but also some natural language processing (NLP).

The company saw that some of its existing analytics customers had adjacent AI teams that were struggling to expand their deep learning environments beyond a single GPU. To deal with the challenge of coordinating data in multi-GPU environments, customers either bought high-performance storage appliances to accelerate the flow of data into GPUs from their primary data lakes, or paid data engineers to write scripts for moving data in a more low-level and manual fashion.

Alluxio saw an opportunity to use its technology to do essentially the same thing (accelerate the flow of training data into the GPU) but with more orchestration and automation around it. This gave rise to the creation of Alluxio Enterprise AI, which Alluxio unveiled today.

The new product shares some technology with the company’s existing product, which was previously called Alluxio Enterprise and has now been rebranded as Alluxio Enterprise Data, but there are significant differences too, says Adit Madan, the director of product management at Alluxio.

“With Alluxio Enterprise AI, even though some of the functionality sounds and is very familiar to what was there in the Alluxio, this is a brand new systems architecture,” Madan tells Datanami. “This is a completely decentralized architecture that we’re naming DORA.”

With DORA, which is short for Decentralized Object Repository Architecture, Alluxio is, for the first time, tapping into the underlying hardware, including GPUs and NVMe drives, instead of residing purely at the software level.

“We’re saying use Alluxio on your accelerated compute itself,” i.e. the GPU nodes, Madan says. “We’ll make use of NVMe, the pool of NVMe that you have available, and give you the I/O demand by simply pointing to your data lake without the need for another high-performance storage solution underneath.”

Additionally, Alluxio Enterprise AI leverages techniques that Alluxio has used on the analytics side for some time to “make the I/O more intelligent,” the company says. That includes optimizing the cache for data access patterns commonly found in AI environments, which are characterized by large-file sequential access, large-file random access, and massive small-file access. “Think of it like we’re extracting all of the I/O capabilities on the GPU cluster itself, instead of you having to buy more hardware for I/O,” Madan says.
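The caching idea described above can be illustrated with a minimal read-through cache. This is only a sketch under stated assumptions, not Alluxio’s implementation or API: the `ReadThroughCache` class, the `fetch_from_lake` function, the in-memory `lake` dictionary, and the least-recently-used eviction policy are all hypothetical stand-ins chosen for illustration. It shows why a local cache helps when each training epoch re-reads the same many small files.

```python
from collections import OrderedDict
from typing import Callable


class ReadThroughCache:
    """Toy read-through LRU cache; NOT Alluxio's implementation or API."""

    def __init__(self, fetch: Callable[[str], bytes], capacity_bytes: int) -> None:
        self._fetch = fetch              # hypothetical slow read from the data lake
        self._capacity = capacity_bytes  # stands in for the local NVMe budget
        self._used = 0
        self._items: "OrderedDict[str, bytes]" = OrderedDict()
        self.hits = 0
        self.misses = 0

    def read(self, key: str) -> bytes:
        if key in self._items:
            self._items.move_to_end(key)  # mark as most recently used
            self.hits += 1
            return self._items[key]
        self.misses += 1
        data = self._fetch(key)           # fall through to the data lake
        self._admit(key, data)
        return data

    def _admit(self, key: str, data: bytes) -> None:
        if len(data) > self._capacity:
            return                        # too large to cache; serve it uncached
        while self._used + len(data) > self._capacity:
            _, evicted = self._items.popitem(last=False)  # evict least recently used
            self._used -= len(evicted)
        self._items[key] = data
        self._used += len(data)


# Hypothetical "data lake": many small files, as in a computer vision dataset.
lake = {f"images/{i}.jpg": bytes(100) for i in range(50)}
lake_reads = []


def fetch_from_lake(key: str) -> bytes:
    lake_reads.append(key)                # record every trip to remote storage
    return lake[key]


cache = ReadThroughCache(fetch_from_lake, capacity_bytes=10_000)
for epoch in range(3):                    # each training epoch re-reads every file
    for key in lake:
        cache.read(key)

print(f"lake reads: {len(lake_reads)}, cache hits: {cache.hits}")
```

In this toy setup the whole dataset fits in the cache, so only the first epoch touches the data lake. A real system would also need to handle the sequential and random large-file patterns the article mentions, which this sketch does not attempt.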

Conceptually, Alluxio Enterprise AI works similarly to the company’s existing data analytics product: it accelerates the I/O and allows users to get more work done without worrying much about how the data gets from point A (the data lake) to point B (the compute cluster). But at a technology level, there was quite a bit of innovation required, Madan says.

“To co-locate on the GPUs, we had to optimize on how much resources that we use,” he says. “We have to be really resource efficient. Instead of let’s say consuming 10 CPUs, we have to bring it down to only consuming two CPUs on the GPU node to serve the I/O. So from a technical standpoint, there’s a very significant difference there.”

Alluxio Enterprise AI can scale to more than 10 billion objects, which will make it useful for the many small files used in computer vision use cases. It’s designed to integrate with the PyTorch and TensorFlow frameworks. It’s primarily intended to be used for training AI models, but it can be used for model deployment too.

The results that Alluxio claims from the optimization are impressive. On the GPU side, Alluxio Enterprise AI delivers 2x to 4x more capacity, the company says. Customers can use that freed-up capacity to either get more computer vision training work done or to cut their GPU costs, Madan says.
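The trade-off between doing more work and cutting GPU costs can be made concrete with a little arithmetic. The sketch below assumes that “2x to 4x more capacity” means the same hardware completes two to four times as much work. That reading is an interpretation, and both the `servers_needed` helper and the 200-server baseline are hypothetical figures used only for illustration.

```python
import math


def servers_needed(baseline_servers: int, capacity_gain: float) -> int:
    """Servers required to do the baseline amount of work after a capacity gain.

    Assumes work scales linearly with server count, which is a simplification.
    """
    if capacity_gain <= 0:
        raise ValueError("capacity_gain must be positive")
    return math.ceil(baseline_servers / capacity_gain)


baseline = 200  # hypothetical fleet size, for illustration only
for gain in (2.0, 4.0):
    remaining = servers_needed(baseline, gain)
    print(f"{gain:.0f}x capacity: same work on {remaining} servers, "
          f"or {gain:.0f}x the work on all {baseline}")
```

Under these assumptions, a customer can either keep the fleet and run two to four times the training work, or hold the workload constant and run it on a half to a quarter of the servers.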

Some of Alluxio’s early testers used the new product in production settings that included 200 GPU servers. “It’s not a small investment,” Madan says. “We have some active engagement with smaller [customers running] a few dozen. And we have some engagements which are much larger with several hundred servers, each of which cost several hundred grand.”

One early tester is Zhihu, which runs a popular question-and-answer website from its headquarters in Beijing. “Using Alluxio as the data access layer, we’ve significantly enhanced model training performance by 3x and deployment by 10x with GPU utilization doubled,” Mengyu Hu, a software engineer in Zhihu’s data platform team, said in a press release. “We are excited about Alluxio’s Enterprise AI and its new DORA architecture supporting access to massive small files. This offering gives us confidence in supporting AI applications facing the upcoming artificial intelligence wave.”

This solution is not for everyone, Madan says. Customers that are using off-the-shelf AI models, perhaps with a vector database for LLM use cases, don’t need this. “If you’re adapting your model [i.e. fine-tuning it] that’s where the need is,” he says.
