“Don’t ask what computers can do, ask what they should do.”

That is the title of the chapter on AI and ethics in a book I coauthored with Carol Ann Browne in 2019. At the time, we wrote that “this may be one of the defining questions of our generation.” Four years later, the question has seized center stage not just in the world’s capitals, but around many dinner tables.

As people use or hear about the power of OpenAI’s GPT-4 foundation model, they are often surprised or even astounded. Many are enthused or even excited. Some are concerned or even frightened. What has become clear to almost everyone is something we noted four years ago: we are the first generation in the history of humanity to create machines that can make decisions that previously could only be made by people.

Countries around the world are asking common questions. How can we use this new technology to solve our problems? How do we avoid or manage new problems it might create? How do we control technology that is so powerful? These questions call not only for broad and thoughtful conversation, but for decisive and effective action.

All these questions and many more will be critical in Japan. Few countries have been more resilient and innovative than Japan over the past half century. Yet the remainder of this decade and beyond will bring new opportunities and challenges that will put technology at the forefront of public needs and discussion.

In Japan, one of the questions being asked is how to manage and support a shrinking and aging workforce. Japan will need to harness the power of AI to simultaneously address population shifts and other societal changes while driving its economic growth. This paper offers some of our ideas and suggestions as a company, placed in the Japanese context.

To develop AI solutions that serve people globally and warrant their trust, we’ve defined, published, and implemented ethical principles to guide our work. And we are continually improving engineering and governance systems to put these principles into practice. Today we have nearly 350 people working on responsible AI at Microsoft, helping us implement best practices for building safe, secure, and transparent AI systems designed to benefit society.

New opportunities to improve the human condition

The resulting advances in our approach to responsible AI have given us the capability and confidence to see ever-expanding ways for AI to improve people’s lives. Acting as a copilot in people’s lives, foundation models like GPT-4 are turning search into a more powerful tool for research and improving productivity for people at work. And for any parent who has struggled to remember how to help their 13-year-old child through an algebra homework assignment, AI-based assistance is a helpful tutor.

While this technology will benefit us in everyday tasks by helping us do things faster, easier, and better, AI’s real potential is in its promise to unlock some of the world’s most elusive problems. We’ve seen AI help save individuals’ eyesight, make progress on new cures for cancer, generate new insights about proteins, and provide predictions to protect people from hazardous weather. Other innovations are fending off cyberattacks and helping to protect fundamental human rights, even in nations afflicted by foreign invasion or civil war.

We are optimistic about the innovative solutions from Japan that are included in Part 3 of this paper. These solutions demonstrate how Japan’s creativity and innovation can address some of the most pressing challenges in various domains such as education, aging, health, environment, and public services.

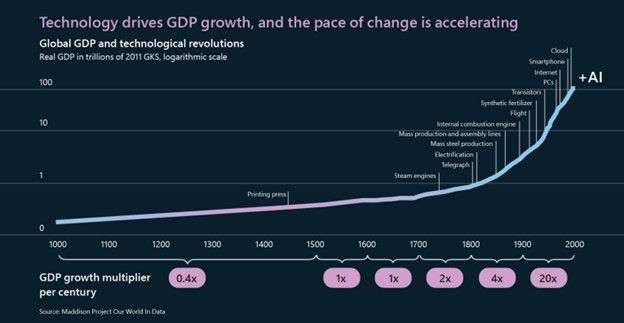

In so many ways, AI offers perhaps even more potential for the good of humanity than any invention that has preceded it. Since the invention of the printing press with movable type in the 1400s, human prosperity has been growing at an accelerating rate. Inventions like the steam engine, electricity, the automobile, the airplane, computing, and the internet have provided many of the building blocks for modern civilization. And like the printing press itself, AI offers a new tool to genuinely help advance human learning and thought.

Guardrails for the future

Another conclusion is equally important: it’s not enough to focus only on the many opportunities to use AI to improve people’s lives. This is perhaps one of the most important lessons from the role of social media. Little more than a decade ago, technologists and political commentators alike gushed about the role of social media in spreading democracy during the Arab Spring. Yet five years after that, we learned that social media, like so many other technologies before it, would become both a weapon and a tool, in this case aimed at democracy itself.

Today, we are 10 years older and wiser, and we need to put that wisdom to work. We need to think early on and in a clear-eyed way about the problems that could lie ahead.

We also believe that it is just as important to ensure proper control over AI as it is to pursue its benefits. We are committed and determined as a company to develop and deploy AI in a safe and responsible way. The guardrails needed for AI require a broadly shared sense of responsibility and should not be left to technology companies alone. Our AI products and governance processes must be informed by diverse multistakeholder perspectives that help us develop and deploy our AI technologies in cultural and socioeconomic contexts that may be different from our own.

When we at Microsoft adopted our six ethical principles for AI in 2018, we noted that one principle was the bedrock for everything else: accountability. This is the fundamental need: to ensure that machines remain subject to effective oversight by people, and that the people who design and operate machines remain accountable to everyone else. In short, we must always ensure that AI remains under human control. This must be a first-order priority for technology companies and governments alike.

This connects directly with another essential concept. In a democratic society, one of our foundational principles is that no person is above the law. No government is above the law. No company is above the law, and no product or technology should be above the law. This leads to a critical conclusion: people who design and operate AI systems cannot be accountable unless their decisions and actions are subject to the rule of law.

In many ways, this is at the heart of the unfolding AI policy and regulatory debate. How do governments best ensure that AI is subject to the rule of law? In short, what form should new law, regulation, and policy take?

A five-point blueprint for the public governance of AI

Building on what we have learned from our responsible AI program at Microsoft, we released a blueprint in May that detailed our five-point approach to help advance AI governance. In this version, we present those policy ideas and suggestions in the context of Japan. We do so with the humble recognition that every part of this blueprint will benefit from broader discussion and require deeper development. But we hope this blueprint can contribute constructively to the work ahead. We offer specific steps to:

- Implement and build upon new government-led AI safety frameworks.

- Require effective safety brakes for AI systems that control critical infrastructure.

- Develop a broader legal and regulatory framework based on the technology architecture for AI.

- Promote transparency and ensure academic and public access to AI.

- Pursue new public-private partnerships to use AI as an effective tool to address the inevitable societal challenges that come with new technology.

More broadly, to make the many different aspects of AI governance work on an international level, we will need a multilateral framework that connects various national rules and ensures that an AI system certified as safe in one jurisdiction can also qualify as safe in another. There are many effective precedents for this, such as the common safety standards set by the International Civil Aviation Organization, which mean an airplane does not need to be refitted midflight from Tokyo to New York.

As the current holder of the G7 Presidency, Japan has demonstrated impressive leadership in launching and driving the Hiroshima AI Process (HAP) and is well positioned to help advance global discussions on AI issues and a multilateral framework. Through the HAP, G7 leaders and multistakeholder participants are strengthening coordinated approaches to AI governance and promoting the development of trustworthy AI systems that champion human rights and democratic values. Efforts to develop global principles are also being extended beyond G7 countries, including through organizations like the Organisation for Economic Co-operation and Development (OECD) and the Global Partnership on AI.

The G7 Digital and Technology Ministerial Statement released in September 2023 recognized the need to develop international guiding principles for all AI actors, including developers and deployers of AI systems. It also endorsed a code of conduct for organizations developing advanced AI systems. Given Japan’s commitment to this work and its strategic position in these dialogues, many countries will look to Japan’s leadership and example on AI regulation.

Working towards an internationally interoperable and agile approach to responsible AI, as demonstrated by Japan, is critical to maximizing the benefits of AI globally. Recognizing that AI governance is a journey, not a destination, we look forward to supporting these efforts in the months and years to come.

Governing AI within Microsoft

Ultimately, every organization that creates or uses advanced AI systems will need to develop and implement its own governance systems. Part 2 of this paper describes the AI governance system within Microsoft: where we began, where we are today, and how we are moving into the future.

As this section recognizes, the development of a new governance system for new technology is a journey in and of itself. A decade ago, this field barely existed. Today Microsoft has almost 350 employees specializing in it, and we are investing in our next fiscal year to grow this further.

As described in this section, over the past six years we have built out a more comprehensive AI governance structure and system across Microsoft. We didn’t start from scratch, borrowing instead from best practices for the protection of cybersecurity, privacy, and digital safety. This is all part of the company’s comprehensive Enterprise Risk Management (ERM) system, which has become a critical part of the management of corporations and many other organizations in the world today.

When it comes to AI, we first developed ethical principles and then had to translate these into more specific corporate policies. We’re now on version 2 of the corporate standard that embodies these principles and defines more precise practices for our engineering teams to follow. We’ve implemented the standard through training, tooling, and testing systems that continue to mature rapidly. This is supported by additional governance processes that include monitoring, auditing, and compliance measures.

As with everything in life, one learns from experience. When it comes to AI governance, some of our most important learning has come from the detailed work required to review specific sensitive AI use cases. In 2019, we founded a sensitive use review program to subject our most sensitive and novel AI use cases to rigorous, specialized review that results in tailored guidance. Since that time, we have completed roughly 600 sensitive use case reviews. The pace of this activity has quickened to match the pace of AI advances, with almost 150 such reviews taking place in the last 11 months.

All of this builds on the work we have done and will continue to do to advance responsible AI through company culture. That means hiring new and diverse talent to grow our responsible AI ecosystem and investing in the talent we already have at Microsoft to develop skills and empower them to think broadly about the potential impact of AI systems on individuals and society. It also means that much more than in the past, the frontier of technology requires a multidisciplinary approach that combines great engineers with talented professionals from across the liberal arts.

At Microsoft, we engage stakeholders from around the world as we develop our responsible AI program. It is important to us that our program is informed by the best thinking from people working on these issues globally, and that we advance a representative discussion on AI governance. It is for this reason that we are excited to participate in upcoming multistakeholder convenings in Japan.

This October, the Japanese government will host the Internet Governance Forum 2023 (IGF), focused on the theme “The Internet We Want – Empowering All People.” The IGF will include critical multistakeholder meetings to advance international guiding principles and other AI governance topics. We are looking forward to these and other meetings in Japan to learn from others and offer our experiences developing and deploying advanced AI systems, so that we can make progress toward shared rules of the road.

As another example of our multistakeholder engagement, earlier in 2023, Microsoft’s Office of Responsible AI partnered with the Stimson Center’s Strategic Foresight Hub to launch our Global Perspectives Responsible AI Fellowship. The purpose of the fellowship is to convene diverse stakeholders from civil society, academia, and the private sector in Global South countries for substantive discussions on AI, its impact on society, and ways that we can all better incorporate the nuanced social, economic, and environmental contexts in which these systems are deployed. A comprehensive global search led us to select fellows from Africa (Nigeria, Egypt, and Kenya), Latin America (Mexico, Chile, Dominican Republic, and Peru), Asia (Indonesia, Sri Lanka, India, Kyrgyzstan, and Tajikistan), and Eastern Europe (Turkey). Later this year, we will share outputs of our conversations and video contributions to shine light on the issues at hand, present proposals to harness the benefits of AI applications, and share key insights about the responsible development and use of AI in the Global South.

All this is offered in this paper in the spirit that we’re on a collective journey to forge a responsible future for artificial intelligence. We can all learn from each other. And no matter how good we may think something is today, we will all need to keep getting better.

As technological change accelerates, the work to govern AI responsibly must keep pace with it. With the right commitments and investments that keep people at the center of AI systems globally, we believe it can.