Clinical ink is a suite of software used in over a thousand clinical trials to streamline the data collection and management process, with the goal of improving the efficiency and accuracy of trials. Its cloud-based electronic data capture system enables clinical trial data from more than 2 million patients across 110 countries to be collected electronically in real time from a variety of sources, including electronic health records and wearable devices.

With the COVID-19 pandemic forcing many clinical trials to go virtual, Clinical ink has become an increasingly valuable solution because of its ability to support remote monitoring and virtual clinical trials. Rather than requiring trial participants to come onsite to report patient outcomes, trials can shift this monitoring to the home. As a result, trials take less time to design, develop and deploy, and patient enrollment and retention increase.

To effectively analyze data from clinical trials in the new remote-first environment, clinical trial sponsors came to Clinical ink with a requirement for a real-time 360-degree view of patients and their outcomes across the entire global study. With a centralized real-time analytics dashboard equipped with filtering capabilities, clinical teams can take immediate action on patient questions and reviews to ensure the success of the trial. The 360-degree view was designed to be the data epicenter for clinical teams, providing a bird's-eye view and robust drill-down capabilities so that clinical teams could keep trials on track across all geographies.

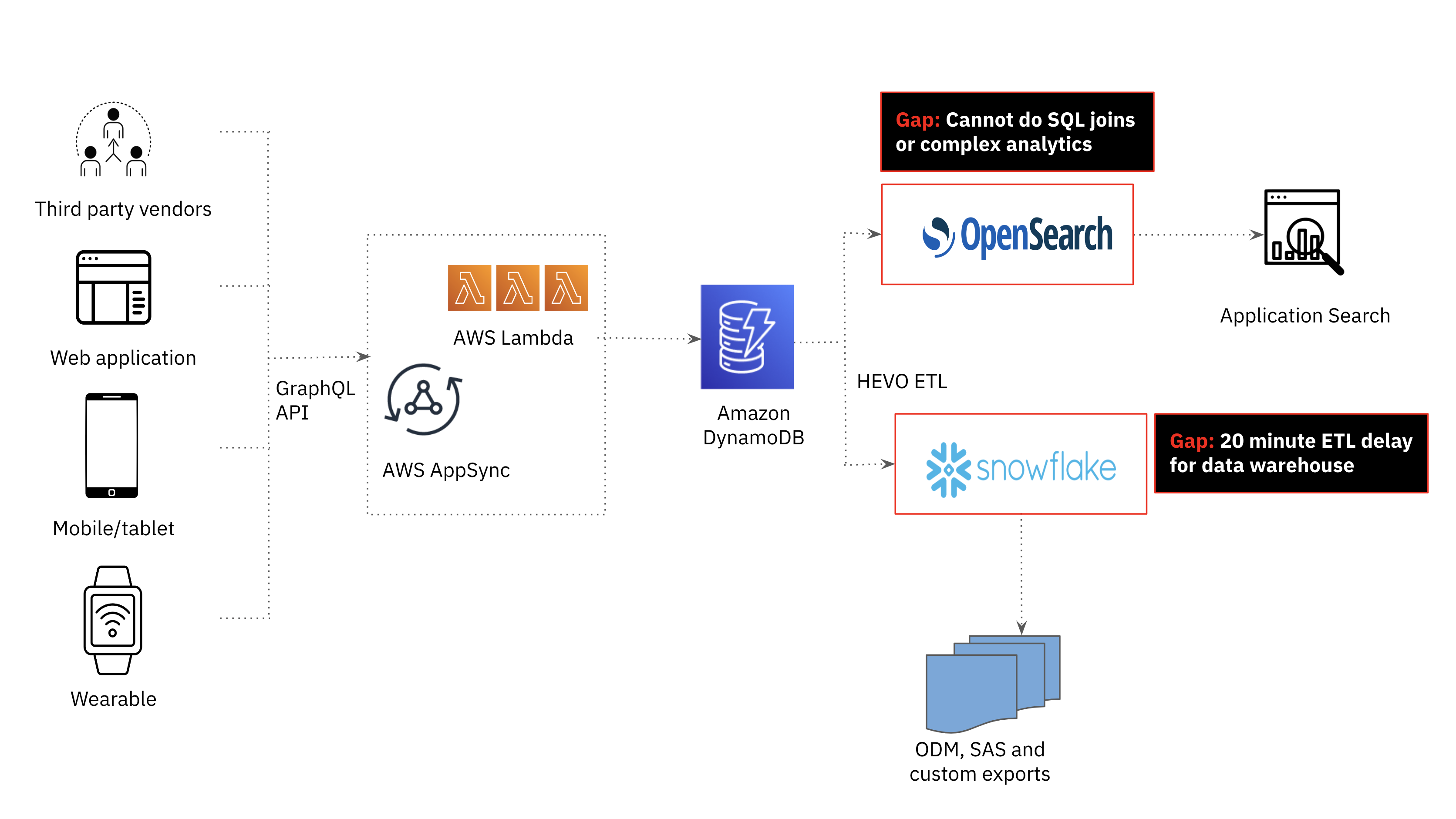

When the requirements for the new real-time study participant monitoring came to the engineering team, I knew that the current technical stack could not support millisecond-latency complex analytics on real-time data. Amazon OpenSearch, a fork of Elasticsearch used for our application search, was fast but not purpose-built for complex analytics, including joins. Snowflake, the robust cloud data warehouse used by our analyst team for performant business intelligence workloads, had significant data delays and could not meet the performance requirements of the application. This sent us back to the drawing board to come up with a new architecture: one that supports real-time ingest and complex analytics while being resilient.

The Before Architecture

Amazon DynamoDB for Operational Workloads

In the Clinical ink platform, data from third-party vendors, web applications, mobile devices and wearable devices is stored in Amazon DynamoDB. DynamoDB's flexible schema makes it easy to store and retrieve data in a variety of formats, which is particularly useful for Clinical ink's application because it must handle dynamic, semi-structured data. DynamoDB is a serverless database, so the team did not have to worry about the underlying infrastructure or scaling of the database, as these are managed by AWS.

Amazon OpenSearch for Search Workloads

While DynamoDB is a great choice for fast, scalable and highly available transactional workloads, it is not the best fit for search and analytics use cases. In the first-generation Clinical ink platform, search and analytics were offloaded from DynamoDB to Amazon OpenSearch. As the volume and variety of data grew, we realized we needed joins to support more advanced analytics and to provide real-time monitoring of study patients. Joins are not a first-class citizen in OpenSearch, so they require operationally complex and costly workarounds, including data denormalization, parent-child relationships, nested objects and application-side joins, all of which are challenging to scale.
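To make the cost of an application-side join concrete, here is a minimal Python sketch, assuming the application has already fetched both sides from two separate search requests (a hypothetical patients index and a hypothetical observations index; the field names `patient_id`, `site` and `score` are illustrative only). The join itself then runs in application memory as a hash join.

```python
def application_side_join(patients, observations):
    """Inner-join observations to patients in application memory (a hash join).

    Both inputs must already be fully fetched from the search cluster, so the
    application pays for two round trips plus holding every row in memory.
    """
    # Build phase: index one side by its join key.
    by_patient = {p["patient_id"]: p for p in patients}

    # Probe phase: look up the patient for each observation.
    joined = []
    for obs in observations:
        patient = by_patient.get(obs["patient_id"])
        if patient is not None:  # inner join: drop observations with no patient
            joined.append({**patient, **obs})
    return joined
```

For example, joining two patients with three observations (one of them for an unknown patient) yields two rows. Every new analytic view needs its own hand-written join logic like this, which is part of what makes the workaround hard to scale.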

We also encountered data and infrastructure operational challenges when scaling OpenSearch. One data challenge centered on dynamic mapping in OpenSearch, the process of automatically detecting and mapping the data types of fields in a document. Dynamic mapping was useful because we had a large number of fields with varying data types and were indexing data from multiple sources with different schemas. However, dynamic mapping sometimes led to unexpected results, such as incorrect data types or mapping conflicts that forced us to reindex the data.
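The failure mode can be illustrated with a toy model, assuming a simplified rule set in which the first document to use a field fixes that field's type. This is not OpenSearch's actual algorithm (OpenSearch has richer inference and will coerce some values, such as numeric strings), and `ToyDynamicIndex` and `MappingConflict` are hypothetical names. It shows how two sources sending the same field with different types collide.

```python
class MappingConflict(Exception):
    """Raised when a value's inferred type disagrees with the existing mapping."""


def infer_type(value):
    # Simplified rules; bool is checked before int because bool subclasses int.
    if isinstance(value, bool):
        return "boolean"
    if isinstance(value, int):
        return "long"
    if isinstance(value, float):
        return "float"
    if isinstance(value, str):
        return "text"
    return "object"


class ToyDynamicIndex:
    """Toy index whose field types are fixed by the first document using each field."""

    def __init__(self):
        self.mapping = {}  # field name -> type inferred on first sight
        self.docs = []

    def index(self, doc):
        # Check every field before changing any state, so a rejected
        # document leaves both the mapping and the stored docs untouched.
        new_fields = {}
        for field, value in doc.items():
            inferred = infer_type(value)
            mapped = self.mapping.get(field)
            if mapped is None:
                new_fields[field] = inferred
            elif mapped != inferred:
                raise MappingConflict(f"{field!r} is mapped as {mapped}, got {inferred}")
        self.mapping.update(new_fields)
        self.docs.append(doc)
```

If one source sends `{"heart_rate": 72}` first, the field is mapped as `long`; a later document from another source carrying `{"heart_rate": "72 bpm"}` is rejected. Fixing that in a real index generally means changing the mapping and reindexing.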

On the infrastructure side, even though we used managed Amazon OpenSearch, we were still responsible for cluster operations, including managing nodes, shards and indexes. We found that as the size of the documents increased, we needed to scale up the cluster, which is a manual, time-consuming process. Furthermore, because OpenSearch has a tightly coupled architecture in which compute and storage scale together, we had to overprovision compute resources to support the growing number of documents. This led to wasted compute, higher costs and reduced efficiency. Even if we could have made complex analytics work on OpenSearch, we would have evaluated other databases, because the data engineering and operational management burden was significant.
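The overprovisioning follows from simple arithmetic when compute and storage come in fixed per-node bundles: the node count is set by whichever resource runs out first. The sketch below models that; the per-node capacities and workload numbers in the example are hypothetical and are not figures from our cluster.

```python
import math


def coupled_nodes(vcpu_needed, storage_gb_needed, vcpu_per_node, storage_gb_per_node):
    """Nodes required when compute and storage scale together: the larger need wins."""
    return max(
        math.ceil(vcpu_needed / vcpu_per_node),
        math.ceil(storage_gb_needed / storage_gb_per_node),
    )


def idle_vcpu(vcpu_needed, storage_gb_needed, vcpu_per_node, storage_gb_per_node):
    """vCPU provisioned beyond what the workload actually needs."""
    nodes = coupled_nodes(vcpu_needed, storage_gb_needed, vcpu_per_node, storage_gb_per_node)
    return nodes * vcpu_per_node - vcpu_needed
```

With hypothetical nodes of 8 vCPU and 1,000 GB each, a workload needing 16 vCPU and 6,000 GB requires 6 nodes, so 32 of the 48 provisioned vCPU sit idle purely because of storage growth. With compute and storage scaled independently, only the 16 vCPU would be provisioned.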

Snowflake for Data Warehousing Workloads

We also investigated whether our cloud data warehouse, Snowflake, could be the serving layer for analytics in our application. Snowflake was used to provide weekly consolidated reports to clinical trial sponsors and supported SQL analytics, meeting the complex analytics requirements of the application. That said, offloading DynamoDB data to Snowflake was too slow: at best, we could achieve a data latency of 20 minutes, which fell well outside the time window required for this use case.

Requirements

Given the gaps in the current architecture, we came up with the following requirements for a replacement for OpenSearch as the serving layer:

- Real-time streaming ingest: Data changes from DynamoDB must be visible and queryable in the downstream database within seconds.

- Millisecond-latency complex analytics (including joins): The database must be able to consolidate global trial data on patients into a 360-degree view. This includes supporting complex sorting and filtering of the data and aggregations across thousands of different entities.

- Highly resilient: The database must maintain availability and minimize data loss in the face of various types of failures and disruptions.

- Scalable: The database must be cloud-native and able to scale at the click of a button or with an API call, with no downtime. We had invested in a serverless architecture with Amazon DynamoDB and did not want the engineering team to manage cluster-level operations going forward.

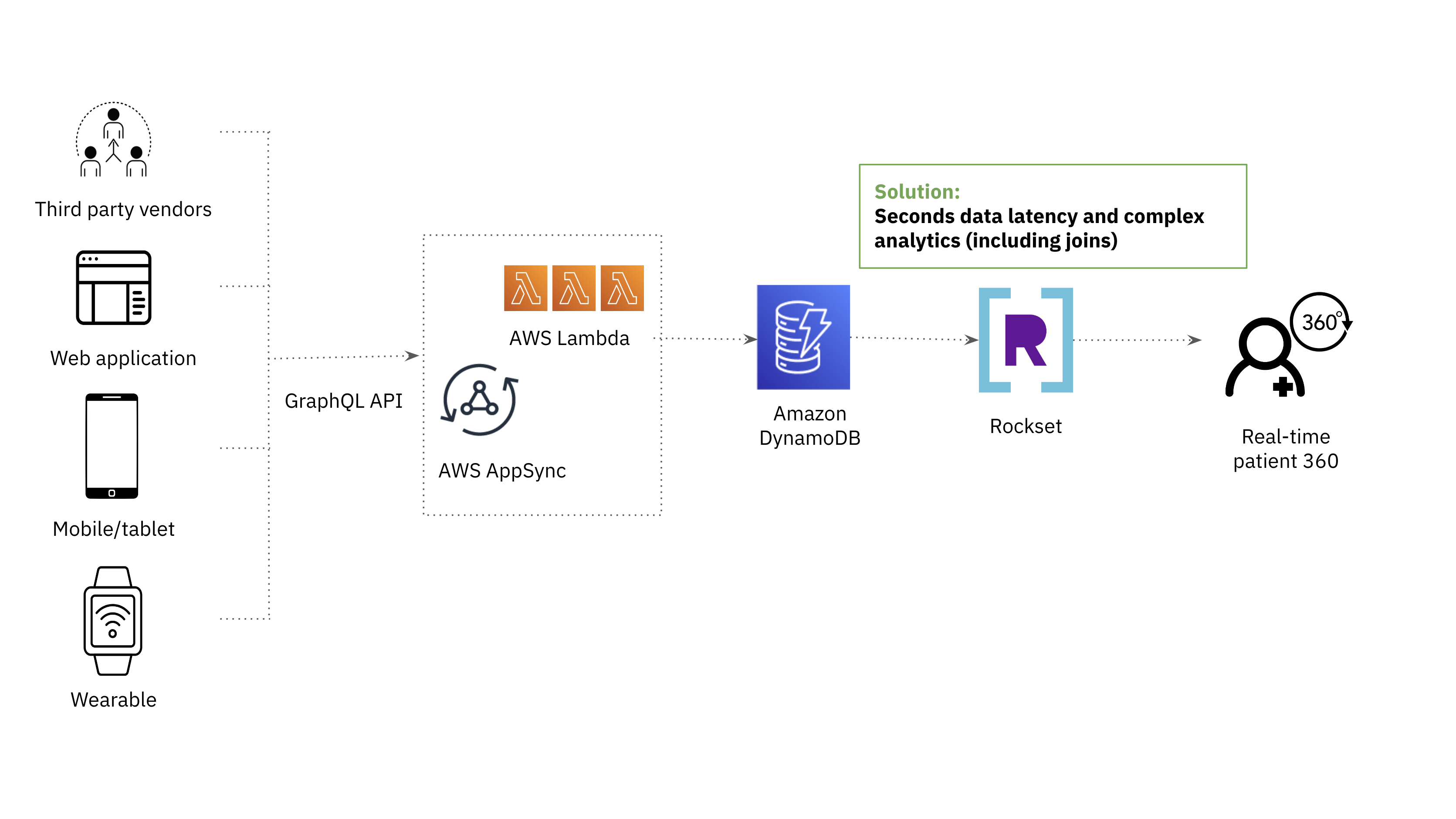

The After Architecture

Rockset initially came onto our radar as a replacement for OpenSearch because of its support for complex analytics on low-latency data.

Both OpenSearch and Rockset use indexing to enable fast querying over large amounts of data. The difference is that Rockset employs a Converged Index, which combines a search index, a columnar store and a row store for optimal query performance. The Converged Index supports a SQL-based query language, which allows us to meet the requirement for complex analytics.
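As a rough mental model of "one document, three indexes", the toy class below stores every document in a row store, a column store and an inverted (search) index, and uses each for the access pattern it suits. It is a conceptual sketch under simplifying assumptions (scalar, hashable field values; no updates or deletes), not how Rockset actually stores data or plans queries; `ToyConvergedIndex` and its methods are hypothetical.

```python
from collections import defaultdict


class ToyConvergedIndex:
    """Conceptual sketch: every document is written to three structures.

    - rows:     doc id -> full document (retrieve whole records)
    - columns:  field -> {doc id: value} (scan or aggregate a single field)
    - inverted: (field, value) -> set of doc ids (selective equality filters)
    Assumes scalar, hashable values and insert-only workloads.
    """

    def __init__(self):
        self.rows = {}
        self.columns = defaultdict(dict)
        self.inverted = defaultdict(set)

    def insert(self, doc_id, doc):
        self.rows[doc_id] = doc
        for field, value in doc.items():
            self.columns[field][doc_id] = value
            self.inverted[(field, value)].add(doc_id)

    def filter_ids(self, field, value):
        # Search index: find matching docs without scanning every row.
        return set(self.inverted.get((field, value), set()))

    def avg(self, field, doc_ids):
        # Column store: read only the one field being aggregated.
        column = self.columns.get(field, {})
        values = [column[i] for i in doc_ids if i in column]
        return sum(values) / len(values) if values else None

    def fetch(self, doc_ids):
        # Row store: return complete documents for the final result set.
        return [self.rows[i] for i in sorted(doc_ids)]
```

A query such as "average score for site US-01, then show those records" would use the inverted index for the filter, the column store for the aggregate and the row store for the final rows.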

In addition to the Converged Index, other features piqued our interest and made it easy to start performance testing Rockset on our own data and queries:

- Built-in connector to DynamoDB: New data from our DynamoDB tables is mirrored and made queryable in Rockset with only a few seconds of delay. This made it easy for Rockset to fit into our existing data stack.

- Ability to store multiple data types in the same field: This addressed the data engineering challenges we faced with dynamic mapping in OpenSearch, ensuring that there were no breakdowns in our ETL process and that queries continued to return results even when schemas changed.

- Cloud-native architecture: We have also invested in a serverless data stack for resource efficiency and reduced operational overhead. With Rockset, we were able to scale ingest compute, query compute and storage independently, so we no longer need to overprovision resources.
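The connector is managed by Rockset, so we did not write this logic ourselves. To illustrate what "mirroring" DynamoDB changes involves, the sketch below applies DynamoDB Streams change records (the `eventName`, `dynamodb.Keys` and `dynamodb.NewImage` fields of the Streams record format) to an in-memory copy keyed by primary key. It assumes the stream view type includes new images, handles only a subset of attribute types, and is not a description of how Rockset's connector is implemented.

```python
from decimal import Decimal


def from_attribute_value(av):
    """Convert one DynamoDB AttributeValue (low-level JSON form) to a Python value.

    Only S, N, BOOL, NULL, M and L are handled; sets and binary are omitted.
    """
    [(type_tag, value)] = av.items()
    if type_tag == "S":
        return value
    if type_tag == "N":
        return Decimal(value)  # numbers arrive as strings; Decimal avoids float rounding
    if type_tag in ("BOOL",):
        return value
    if type_tag == "NULL":
        return None
    if type_tag == "M":
        return {k: from_attribute_value(v) for k, v in value.items()}
    if type_tag == "L":
        return [from_attribute_value(v) for v in value]
    raise ValueError(f"unsupported attribute type {type_tag!r}")


def item_from_image(image):
    return {k: from_attribute_value(v) for k, v in image.items()}


def apply_stream_records(mirror, records):
    """Apply inserts, updates and deletes from stream records to a dict mirror."""
    for record in records:
        keys = item_from_image(record["dynamodb"]["Keys"])
        key = tuple(sorted(keys.items()))  # hashable primary key
        if record["eventName"] in ("INSERT", "MODIFY"):
            mirror[key] = item_from_image(record["dynamodb"]["NewImage"])
        elif record["eventName"] == "REMOVE":
            mirror.pop(key, None)
    return mirror
```

Replaying an INSERT, a MODIFY of the same item and an INSERT/REMOVE pair for a second item leaves only the updated first item in the mirror, which is the behavior a downstream query layer needs in order to reflect updates and deletes as well as new rows.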

Performance Results

Once we determined that Rockset met the needs of our application, we proceeded to assess the database's ingestion and query performance. We ran the following tests on Rockset using a Lambda function written in Node.js:

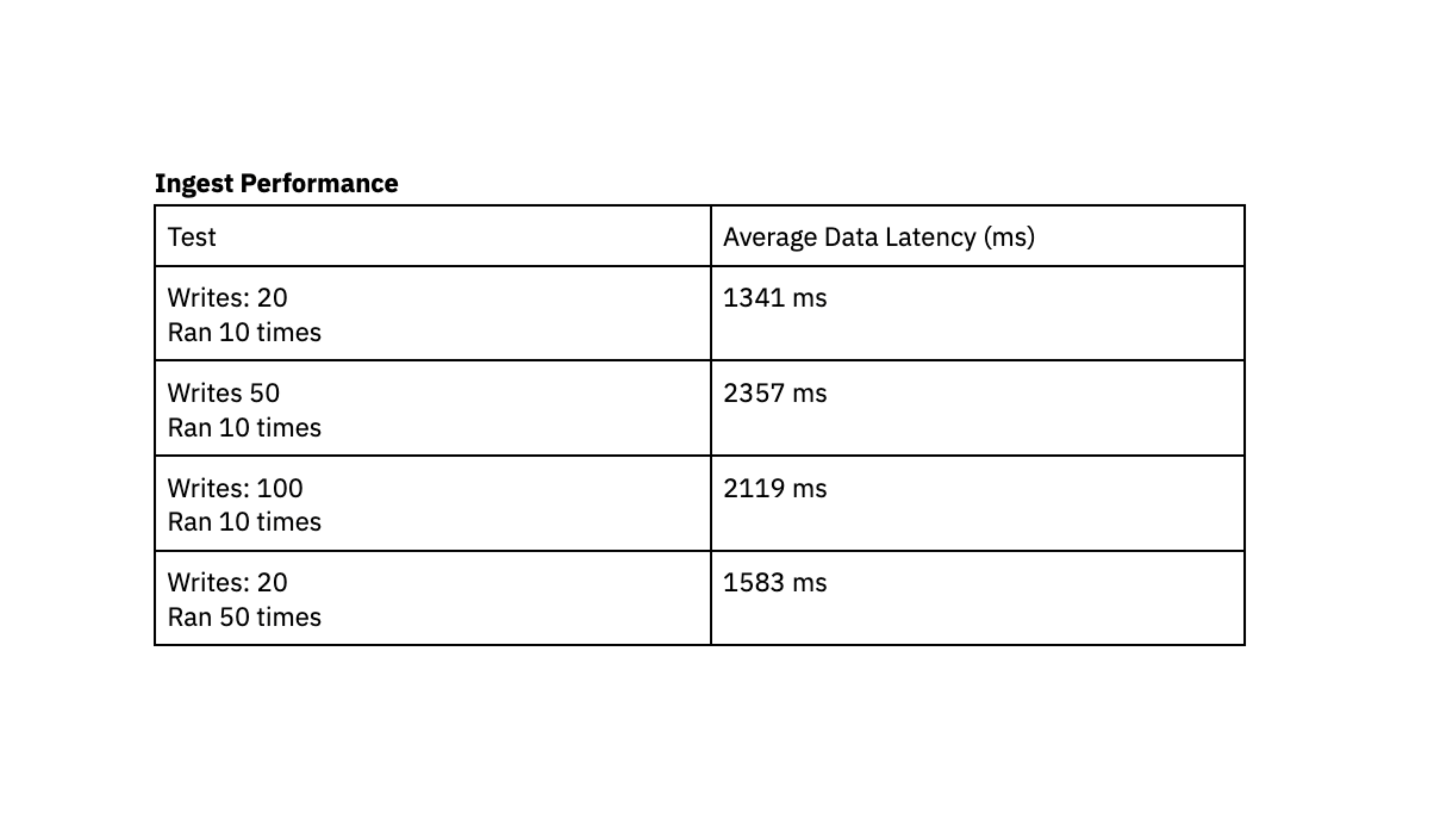

Ingest Performance

The common pattern we see is many small writes, ranging in size from 400 bytes to 2 kilobytes, grouped together and written to the database frequently. We evaluated ingest performance by generating X writes into DynamoDB in quick succession and recording the average time, in milliseconds, that it took for Rockset to sync that data and make it queryable, also known as data latency.
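Our actual harness was the Node.js Lambda function mentioned above and is not reproduced here. As a language-neutral illustration of the measurement, the Python sketch below issues writes in quick succession, then polls until each one is queryable and averages the elapsed times. The `write` and `is_queryable` callbacks are hypothetical hooks that would wrap a DynamoDB write and a downstream query; measurement resolution is bounded by the polling interval.

```python
import statistics
import time


def measure_data_latency(write, is_queryable, n_writes, poll_interval_s=0.05, timeout_s=30.0):
    """Return the average write-to-queryable latency in milliseconds.

    write(i) performs the i-th write against the source table, and
    is_queryable(i) returns True once that record can be read downstream.
    """
    written_at = {}
    for i in range(n_writes):  # issue all writes in quick succession
        write(i)
        written_at[i] = time.monotonic()

    latencies_ms = {}
    deadline = time.monotonic() + timeout_s
    while True:
        now = time.monotonic()
        for i in range(n_writes):
            if i not in latencies_ms and is_queryable(i):
                # Accurate only to within poll_interval_s.
                latencies_ms[i] = (now - written_at[i]) * 1000
        if len(latencies_ms) == n_writes:
            return statistics.mean(list(latencies_ms.values()))
        if now > deadline:
            raise TimeoutError(f"{n_writes - len(latencies_ms)} writes never became queryable")
        time.sleep(poll_interval_s)
```

In a real test, `is_queryable(i)` would run a query for the i-th record's key; here a fake store that makes each write visible 50 ms later is enough to exercise the logic.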

To run this performance test, we used a Rockset medium virtual instance with 8 vCPU of compute and 64 GiB of memory.

The performance tests indicate that Rockset can achieve a data latency under 2.4 seconds, measured as the time between when data is generated in DynamoDB and when it becomes available for querying in Rockset. This load testing gave us confidence that we could consistently access data roughly 2 seconds after writing it to DynamoDB, giving users up-to-date data in their dashboards. In the past, we struggled to achieve predictable latency with Elasticsearch, so we were excited by the consistency we saw with Rockset during load testing.

Query Performance

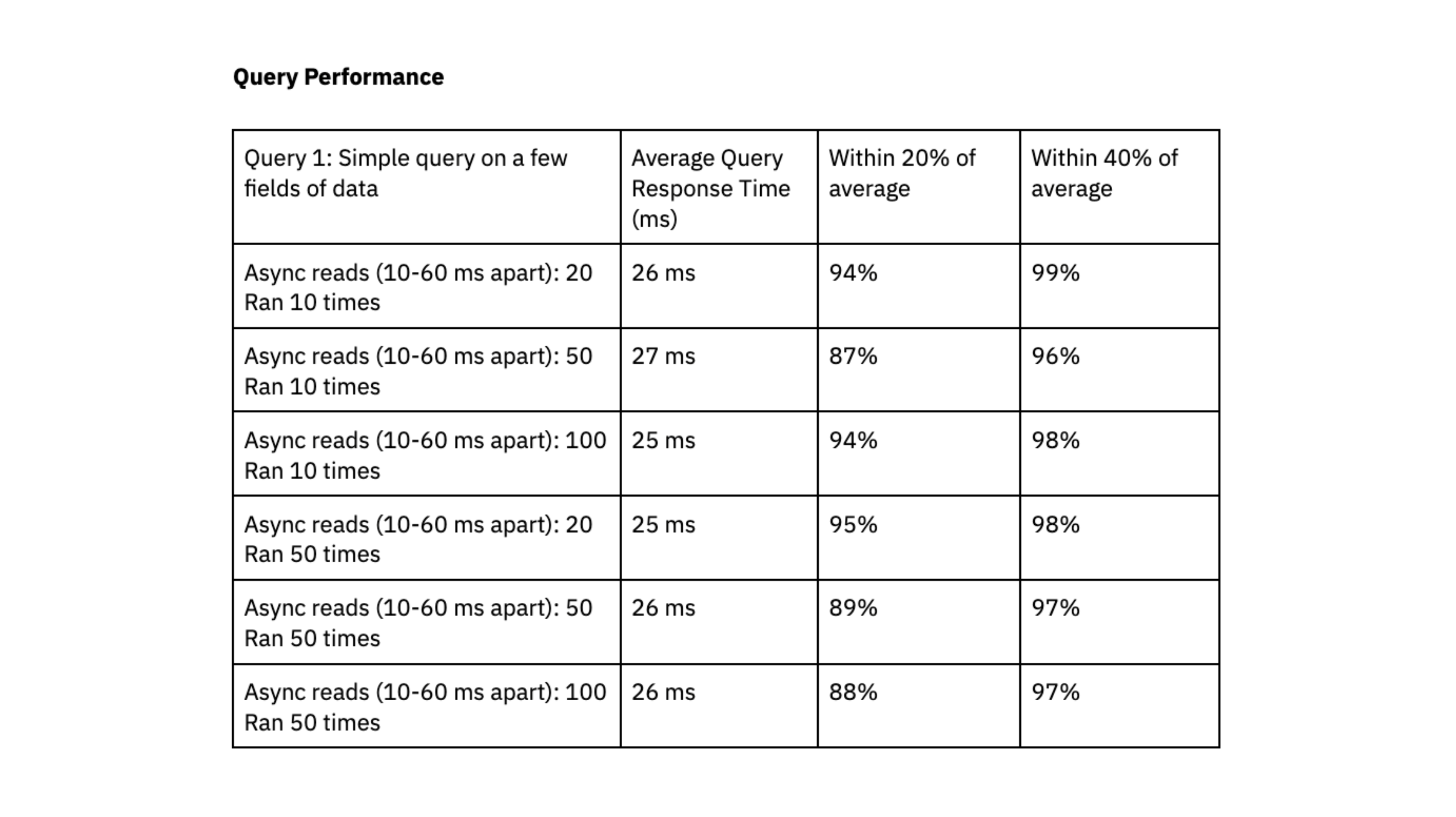

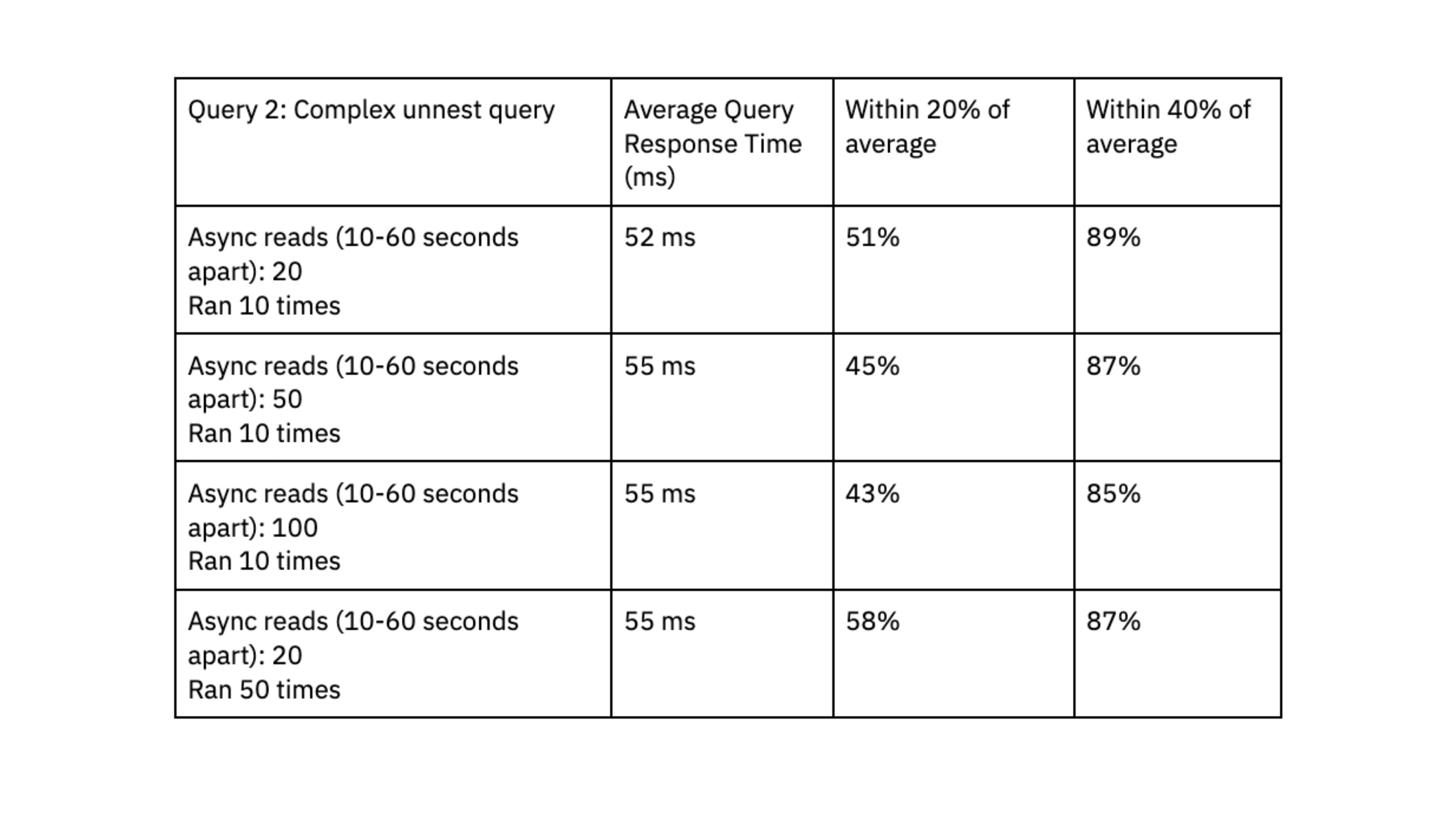

For query performance, we executed X queries at random intervals of 10-60 milliseconds. We ran two tests using queries with different levels of complexity:

- Query 1: A simple query on a few fields of data. Dataset size of ~700K records and 2.5 GB.

- Query 2: A complex query that expands arrays into multiple rows using an unnest function. Data is filtered on the unnested fields. Two datasets were joined together: one dataset had 700K rows and 2.5 GB, and the other had 650K rows and 3 GB.
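The actual Query 2 is not reproduced here, but its shape (unnest an array, filter on the unnested values, join to a second dataset) can be sketched in plain Python. The datasets and field names below (`visits`, `responses`, `question`, `score`, `site`) are hypothetical stand-ins; in SQL the same logic would be an UNNEST in the FROM clause, a WHERE on the unnested column and a JOIN.

```python
def unnest(rows, array_field, as_field):
    """Yield one output row per element of row[array_field], like SQL UNNEST."""
    for row in rows:
        for element in row.get(array_field, []):
            flat = {k: v for k, v in row.items() if k != array_field}
            flat[as_field] = element
            yield flat


def query2_sketch(visits, patients, min_score):
    """Unnest visit responses, filter on the unnested score, then join to patients."""
    site_by_patient = {p["patient_id"]: p["site"] for p in patients}
    results = []
    for row in unnest(visits, "responses", "response"):
        # Filter on a field that only exists after unnesting.
        if row["response"]["score"] < min_score:
            continue
        # Inner join on patient_id: skip rows with no matching patient.
        if row["patient_id"] not in site_by_patient:
            continue
        results.append({
            "patient_id": row["patient_id"],
            "visit": row["visit"],
            "question": row["response"]["question"],
            "score": row["response"]["score"],
            "site": site_by_patient[row["patient_id"]],
        })
    return results
```

Unnesting multiplies the row count before the filter and join run, which is one reason this query is much heavier than Query 1 on datasets of similar size.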

We again ran the tests on a Rockset medium virtual instance with 8 vCPU of compute and 64 GiB of memory.
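The load pattern described above (queries launched at random 10-60 ms intervals) can be sketched as follows. This is an illustrative Python version, not our Node.js Lambda; `run_query` is a hypothetical callback that would send one query to the database. Because each query is launched without waiting for the previous one to finish, queries overlap whenever one takes longer than the next gap, which is what creates concurrent load.

```python
import random
import time
from concurrent.futures import ThreadPoolExecutor


def run_query_load(run_query, n_queries, min_gap_ms=10, max_gap_ms=60, max_workers=32, seed=None):
    """Launch n_queries at random intervals and return per-query latencies in ms.

    Latency is measured from when a worker starts the query, so time spent
    queued behind a saturated pool is not included.
    """
    rng = random.Random(seed)

    def timed_query(i):
        start = time.monotonic()
        run_query(i)
        return (time.monotonic() - start) * 1000

    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = []
        for i in range(n_queries):
            futures.append(pool.submit(timed_query, i))
            # Random inter-arrival gap, independent of query completion.
            time.sleep(rng.uniform(min_gap_ms, max_gap_ms) / 1000)
        return [f.result() for f in futures]
```

Passing a fixed `seed` makes the arrival pattern reproducible across runs, which helps when comparing instance sizes under the same load.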

Rockset delivered query response times in the double-digit milliseconds, even when handling workloads with high levels of concurrency.

To determine whether Rockset scales linearly, we compared query performance on a small virtual instance, which had 4 vCPU of compute and 32 GiB of memory, against the medium virtual instance. The results showed that the medium virtual instance reduced query latency by a factor of 1.6 for the first query and 4.5 for the second query, suggesting that Rockset can scale efficiently for our workload.
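Because the medium instance has exactly twice the vCPU and memory of the small instance, perfectly linear scaling would correspond to a speedup of 2. Treating the reported factors as ratios of average query latency $\bar{t}$ (an assumption about how they were computed), the speedup $S$ and scaling efficiency $E$ work out as:

$$
\begin{aligned}
R &= \frac{8\ \text{vCPU}}{4\ \text{vCPU}} = 2, \qquad S = \frac{\bar{t}_{\text{small}}}{\bar{t}_{\text{medium}}}, \qquad E = \frac{S}{R} \\
\text{Query 1:}\quad S &= 1.6 \;\Rightarrow\; E = \frac{1.6}{2} = 0.8 \\
\text{Query 2:}\quad S &= 4.5 \;\Rightarrow\; E = \frac{4.5}{2} = 2.25
\end{aligned}
$$

$E = 1$ is linear scaling, $E < 1$ is sublinear and $E > 1$ is superlinear. By this measure, Query 1 scaled at 80% efficiency and Query 2 scaled superlinearly.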

We liked that Rockset delivered predictable query performance, with latencies clustered within 40% and 20% of the average, and that queries consistently returned in double-digit milliseconds; this fast response time is essential to our user experience.
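One way to read "clustered within N% of the average" is as the largest relative deviation of any latency sample from the mean. The helper below computes that figure; the function name and this interpretation are illustrative assumptions, not a definition taken from our test tooling.

```python
import statistics


def max_relative_deviation_pct(latencies_ms):
    """Largest |latency - mean| as a percentage of the mean latency."""
    mean = statistics.mean(latencies_ms)
    return max(abs(x - mean) for x in latencies_ms) / mean * 100
```

For latencies of 10, 20 and 30 ms, the mean is 20 ms and the farthest samples are 10 ms away, so every sample lies within 50% of the average.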

Conclusion

We're currently phasing real-time clinical trial monitoring into production as the new operational data hub for clinical teams. We have been blown away by the speed of Rockset and its ability to support complex filters, joins and aggregations. Rockset achieves double-digit millisecond query latency and can scale ingest to support real-time updates, inserts and deletes from DynamoDB.

Unlike OpenSearch, which required manual intervention to achieve optimal performance, Rockset has proven to require minimal operational effort on our part. Scaling up to larger virtual instances to accommodate more clinical sponsors happens at the push of a button.

Over the next year, we're excited to roll out real-time study participant monitoring to all customers and to continue our leadership in the digital transformation of clinical trials.