In the movie “Top Gun: Maverick,” Maverick, played by Tom Cruise, is charged with training young pilots to complete a seemingly impossible mission: fly their jets deep into a rocky canyon, staying so low to the ground they cannot be detected by radar, then rapidly climb out of the canyon at an extreme angle, avoiding the rock walls. Spoiler alert: With Maverick’s help, these human pilots accomplish their mission.

A machine, on the other hand, would struggle to complete the same pulse-pounding task. To an autonomous aircraft, for instance, the most straightforward path toward the target conflicts with what the machine needs to do to avoid colliding with the canyon walls and to stay undetected. Many existing AI methods aren’t able to overcome this conflict, known as the stabilize-avoid problem, and would be unable to reach their goal safely.

MIT researchers have developed a new technique that can solve complex stabilize-avoid problems better than other methods. Their machine-learning approach matches or exceeds the safety of existing methods while providing a tenfold increase in stability, meaning the agent reaches and remains stable within its goal region.

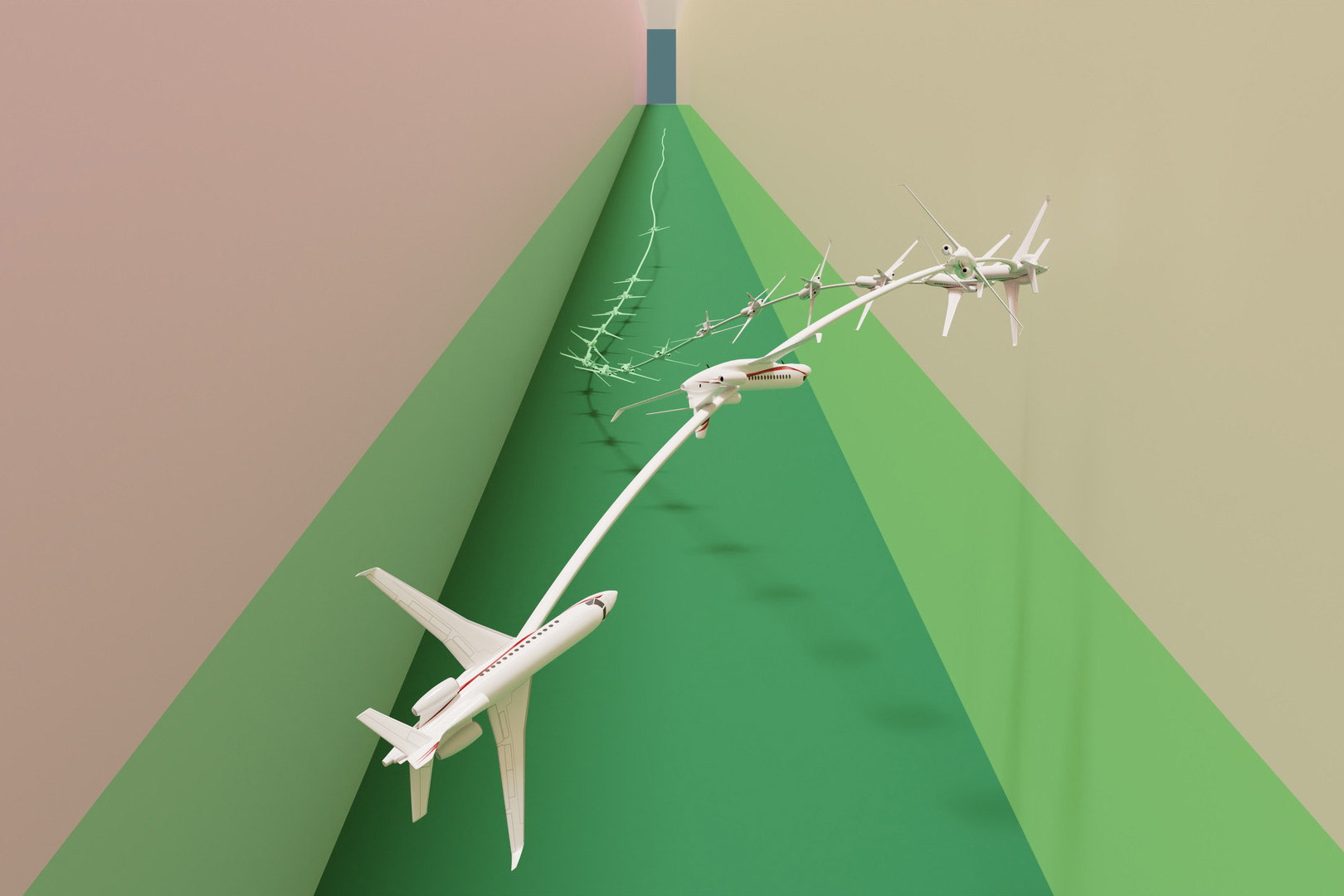

In an experiment that would make Maverick proud, their technique effectively piloted a simulated jet aircraft through a narrow corridor without crashing into the ground.

“This has been a longstanding, challenging problem. A lot of people have looked at it but didn’t know how to handle such high-dimensional and complex dynamics,” says Chuchu Fan, the Wilson Assistant Professor of Aeronautics and Astronautics, a member of the Laboratory for Information and Decision Systems (LIDS), and senior author of a new paper on this technique.

Fan is joined by lead author Oswin So, a graduate student. The paper will be presented at the Robotics: Science and Systems conference.

The stabilize-avoid problem

Many approaches tackle complex stabilize-avoid problems by simplifying the system so they can solve it with straightforward math, but the simplified results often don’t hold up to real-world dynamics.

More effective techniques use reinforcement learning, a machine-learning method in which an agent learns by trial and error, with a reward for behavior that gets it closer to a goal. But there are really two goals here, remaining stable and avoiding obstacles, and finding the right balance between them is tedious.

The MIT researchers broke the problem down into two steps. First, they reframe the stabilize-avoid problem as a constrained optimization problem. In this setup, solving the optimization enables the agent to reach and stabilize to its goal, meaning it stays within a certain region. By applying constraints, they ensure the agent avoids obstacles, So explains.
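One generic way to write such a problem is sketched below. The symbols are assumptions chosen for illustration, not notation taken from the paper: a control policy $\pi$, the state $x_t$ at time step $t$, a per-step cost $l(x_t)$ that is zero inside the goal region and positive outside it, and an obstacle function $h(x_t)$ that is positive only when the agent is inside an obstacle.

```latex
\min_{\pi} \; \sum_{t=0}^{\infty} l(x_t)
\quad \text{subject to} \quad
h(x_t) \le 0 \quad \text{for all } t \ge 0
```

In this reading, driving the objective down corresponds to reaching the goal region and staying there, while the constraint encodes obstacle avoidance.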

Then, for the second step, they reformulate that constrained optimization problem into a mathematical representation known as the epigraph form and solve it using a deep reinforcement learning algorithm. The epigraph form lets them bypass the difficulties other methods face when using reinforcement learning.
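To give a feel for the epigraph form, here is a minimal sketch of the textbook transformation on a one-dimensional toy problem. It is not the researchers' algorithm: there is no reinforcement learning, the functions `cost` and `obstacle` are made up for illustration, and a plain grid search stands in for the learned controller. The idea is to introduce an auxiliary cost budget `z`, ask whether some candidate keeps both `cost(x) - z` and `obstacle(x)` at or below zero, and then search for the smallest budget for which the answer is yes.

```python
# Toy problem (hypothetical): minimize cost(x) subject to obstacle(x) <= 0.
def cost(x):
    return (x - 2.0) ** 2      # unconstrained minimum sits at x = 2


def obstacle(x):
    return x - 1.0             # positive (unsafe) whenever x > 1


def worst_violation(x, z):
    # Epigraph form: x is acceptable for budget z when this value is <= 0,
    # i.e. the cost fits within the budget AND the constraint holds.
    return max(cost(x) - z, obstacle(x))


def inner_min(z, candidates):
    # Best achievable worst-case violation for a fixed budget z.
    return min(worst_violation(x, z) for x in candidates)


def solve_epigraph(candidates, z_lo=0.0, z_hi=100.0, iters=60):
    # inner_min never increases as z grows, so bisection finds the
    # smallest budget z for which some candidate is acceptable.
    for _ in range(iters):
        z_mid = 0.5 * (z_lo + z_hi)
        if inner_min(z_mid, candidates) <= 0.0:
            z_hi = z_mid
        else:
            z_lo = z_mid
    best_x = min(candidates, key=lambda x: worst_violation(x, z_hi))
    return z_hi, best_x


# Grid of candidate decisions on [-5, 5] with spacing 0.001.
candidates = [-5.0 + 0.001 * i for i in range(10001)]
z_star, x_star = solve_epigraph(candidates)
print("smallest feasible cost budget:", z_star)
print("decision achieving it:", x_star)
```

The search settles near `x = 1`, the safe point closest to the unconstrained minimum, with a cost budget near 1. The structure to notice is the split into an outer one-dimensional search over `z` and an inner problem that folds cost and constraint into a single quantity.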

“But deep reinforcement learning isn’t designed to solve the epigraph form of an optimization problem, so we couldn’t just plug it into our problem. We had to derive the mathematical expressions that work for our system. Once we had those new derivations, we combined them with some existing engineering tricks used by other methods,” So says.

No points for second place

To test their approach, they designed a number of control experiments with different initial conditions. For instance, in some simulations, the autonomous agent needs to reach and stay inside a goal region while making drastic maneuvers to avoid obstacles that are on a collision course with it.

Compared with several baselines, their approach was the only one that could stabilize all trajectories while maintaining safety. To push their method even further, they used it to fly a simulated jet aircraft in a scenario one might see in a “Top Gun” movie. The jet had to stabilize to a target near the ground while maintaining a very low altitude and staying within a narrow flight corridor.

This simulated jet model was open-sourced in 2018 and had been designed by flight control experts as a testing challenge: Could researchers create a scenario that their controller could not fly? But the model was so complicated that it was difficult to work with, and it still couldn’t handle complex scenarios, Fan says.

The MIT researchers’ controller was able to prevent the jet from crashing or stalling while stabilizing to the goal far better than any of the baselines.

In the future, this technique could be a starting point for designing controllers for highly dynamic robots that must meet safety and stability requirements, like autonomous delivery drones. Or it could be implemented as part of a larger system. Perhaps the algorithm is activated only when a car skids on a snowy road, to help the driver safely navigate back to a stable trajectory.

Navigating extreme scenarios that a human wouldn’t be able to handle is where their approach really shines, So adds.

“We believe that a goal we should strive for as a field is to give reinforcement learning the safety and stability guarantees that we will need to provide us with assurance when we deploy these controllers on mission-critical systems. We think this is a promising first step toward achieving that goal,” he says.

Moving forward, the researchers want to enhance their technique so it is better able to take uncertainty into account when solving the optimization. They also want to investigate how well the algorithm works when deployed on hardware, since there will be mismatches between the dynamics of the model and those in the real world.

“Professor Fan’s team has improved reinforcement learning performance for dynamical systems where safety matters. Instead of just hitting a goal, they create controllers that ensure the system can reach its target safely and stay there indefinitely,” says Stanley Bak, an assistant professor in the Department of Computer Science at Stony Brook University, who was not involved with this research. “Their improved formulation allows the successful generation of safe controllers for complex scenarios, including a 17-state nonlinear jet aircraft model designed in part by researchers from the Air Force Research Lab (AFRL), which incorporates nonlinear differential equations with lift and drag tables.”

The work is funded, in part, by MIT Lincoln Laboratory under the Safety in Aerobatic Flight Regimes program.