Vision-language foundational models are built on the premise of a single pre-training followed by subsequent adaptation to multiple downstream tasks. Two main and disjoint training scenarios are popular: CLIP-style contrastive learning and next-token prediction. Contrastive learning trains the model to predict whether image-text pairs correctly match, effectively building visual and text representations for the corresponding image and text inputs. Next-token prediction predicts the most likely next text token in a sequence, thus learning to generate text according to the required task. Contrastive learning enables image-text and text-image retrieval tasks, such as finding the image that best matches a certain description, and next-token learning enables text-generative tasks, such as Image Captioning and Visual Question Answering (VQA). While both approaches have demonstrated powerful results, a model pre-trained contrastively typically does not fare well on text-generative tasks, and vice versa. Furthermore, adaptation to other tasks is often done with complex or inefficient methods. For example, in order to extend a vision-language model to videos, some models need to run inference on each video frame separately. This limits the videos that can be processed to only a few frames and does not fully take advantage of the motion information available across frames.
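
To make the contrastive objective concrete, here is a minimal sketch of a CLIP-style symmetric contrastive (InfoNCE) loss in plain Python. It assumes a batch in which image i and text i form the matching pair and every other pairing is a negative. The function names and the default temperature of 0.07 are illustrative assumptions, not MaMMUT's actual code or hyperparameters.

```python
import math

def l2_normalize(v):
    # Scale a vector to unit length so dot products become cosine similarities.
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def log_softmax(row):
    # Numerically stable log-softmax over a list of logits.
    m = max(row)
    log_z = m + math.log(sum(math.exp(x - m) for x in row))
    return [x - log_z for x in row]

def contrastive_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric InfoNCE loss; image i and text i are the matching pair."""
    imgs = [l2_normalize(v) for v in image_embs]
    txts = [l2_normalize(v) for v in text_embs]
    n = len(imgs)
    # logits[i][j]: similarity of image i and text j, divided by the temperature.
    logits = [[sum(a * b for a, b in zip(imgs[i], txts[j])) / temperature
               for j in range(n)] for i in range(n)]
    # Image-to-text: each row should put its probability mass on the diagonal.
    i2t = -sum(log_softmax(logits[i])[i] for i in range(n)) / n
    # Text-to-image: the same requirement along the columns.
    cols = [[logits[i][j] for i in range(n)] for j in range(n)]
    t2i = -sum(log_softmax(cols[j])[j] for j in range(n)) / n
    return (i2t + t2i) / 2

images = [[1.0, 0.0], [0.0, 1.0]]
matched = [[0.9, 0.1], [0.1, 0.9]]
swapped = [[0.1, 0.9], [0.9, 0.1]]
print(contrastive_loss(images, matched))  # close to 0
print(contrastive_loss(images, swapped))  # large, roughly 12.6
```

Correctly matched captions give a loss near zero, while swapping them gives a large loss. Averaging the image-to-text and text-to-image directions is what lets one objective serve both retrieval directions.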

Motivated by this, we present “A Simple Architecture for Joint Learning for MultiModal Tasks”, called MaMMUT, which is able to train jointly for these competing objectives and which provides a foundation for many vision-language tasks, either directly or via simple adaptation. MaMMUT is a compact, 2B-parameter multimodal model that trains across contrastive, text-generative, and localization-aware objectives. It consists of a single image encoder and a text decoder, which allows for direct reuse of both components. Furthermore, a straightforward adaptation to video-text tasks requires using the image encoder only once and can handle many more frames than prior work. In line with recent language models (e.g., PaLM, GLaM, GPT3), our architecture uses a decoder-only text model and can be thought of as a simple extension of language models. While modest in size, our model outperforms the state of the art or achieves competitive performance on image-text and text-image retrieval, video question answering (VideoQA), video captioning, open-vocabulary detection, and VQA.

Decoder-only model architecture

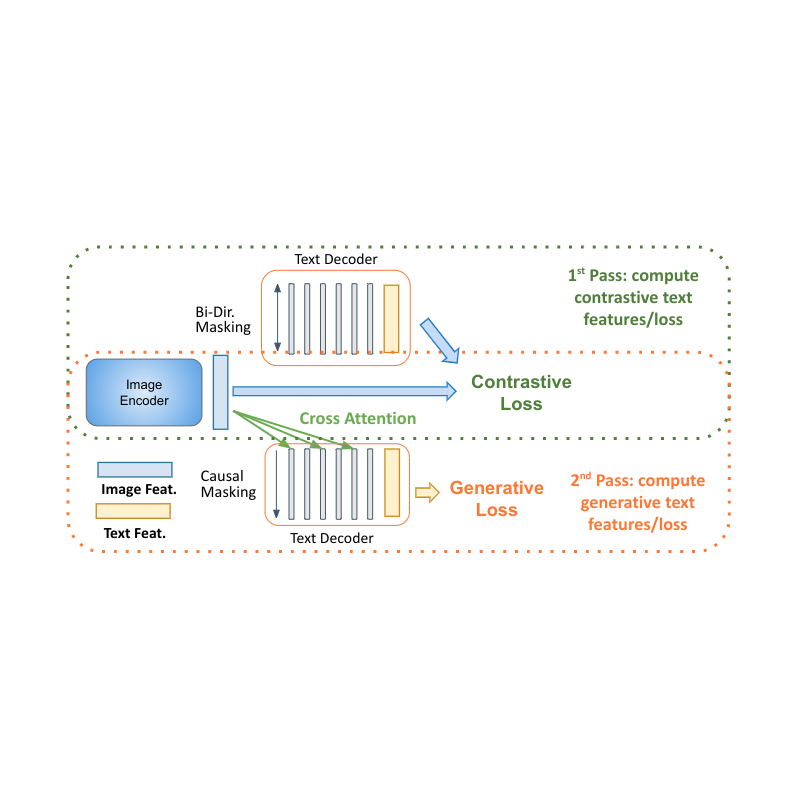

One surprising finding is that a single language decoder is sufficient for all these tasks, which obviates the need for both the complex constructs and the training procedures presented in prior work. For example, our model (shown on the left of the architecture figure) consists of a single visual encoder and a single text decoder, connected via cross attention, and trains simultaneously on both contrastive and text-generative losses. By comparison, prior work either cannot handle image-text retrieval tasks or applies only some losses to only some parts of the model. To enable multimodal tasks and take full advantage of the decoder-only model, we need to jointly train with both contrastive losses and text-generative, captioning-like losses.

MaMMUT architecture (left) is a simple construct consisting of a single vision encoder and a single text decoder. Compared to other popular vision-language models, e.g., PaLI (middle) and ALBEF and CoCa (right), it trains jointly and efficiently for multiple vision-language tasks, with both contrastive and text-generative losses, fully sharing the weights between the tasks.
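
The joint objective described above can be sketched as a weighted sum of a contrastive term and a next-token (captioning) cross-entropy term. This is a minimal plain-Python illustration under stated assumptions: `next_token_loss` and `joint_loss` are hypothetical helpers, the logits stand in for the decoder's per-position vocabulary scores under teacher forcing, and the equal default weights are placeholders rather than MaMMUT's settings.

```python
import math

def next_token_loss(logits, targets):
    """Mean cross-entropy for predicting targets[t] from logits[t].

    Under teacher forcing, logits[t] is the decoder's list of vocabulary
    scores after it has seen the ground-truth tokens up to position t.
    """
    total = 0.0
    for row, target in zip(logits, targets):
        m = max(row)
        log_z = m + math.log(sum(math.exp(x - m) for x in row))
        total += log_z - row[target]  # equals -log p(target | prefix)
    return total / len(targets)

def joint_loss(contrastive, captioning, w_contrastive=1.0, w_captioning=1.0):
    # Weighted sum of the two objectives; the default weights are placeholders.
    return w_contrastive * contrastive + w_captioning * captioning

uniform = [[0.0, 0.0, 0.0, 0.0]] * 3
print(next_token_loss(uniform, [0, 1, 2]))  # about 1.386, i.e. ln 4
```

Uniform logits over a four-token vocabulary give a captioning loss of ln 4, the value for a model that has learned nothing; training drives this term down while the contrastive term is optimized alongside it.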

Decoder two-pass learning

Decoder-only models for language learning show clear advantages in performance with smaller model size (almost half the parameters). The main challenge in applying them to multimodal settings is to unify contrastive learning (which uses an unconditional, sequence-level representation) with captioning (which optimizes the likelihood of a token conditioned on the previous tokens). We propose a two-pass approach to jointly learn these two conflicting types of text representations within the decoder. During the first pass, we use cross attention and causal masking to learn the caption generation task: the text features can attend to the image features and predict the tokens in sequence. On the second pass, we disable the cross attention and causal masking to learn the contrastive task. The text features do not see the image features but can attend bidirectionally to all text tokens at once to produce the final text-based representation. Completing this two-pass approach within the same decoder accommodates both types of tasks, which were previously hard to reconcile. While simple, we show that this model architecture is able to provide a foundation for multiple multimodal tasks.
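
The two passes differ only in which attention paths are active. The toy decoder layer below illustrates this under simplifying assumptions: single-head attention with no learned projections, layer norm, or MLP, and hypothetical helper names (`attend`, `add`, `decoder_layer`). It is a sketch of the masking logic, not the MaMMUT implementation. In the generative pass each text token sees only itself and earlier tokens and cross-attends to image features; in the contrastive pass the image is ignored and every token sees every other token.

```python
import math

def attend(queries, keys, values, mask=None):
    """Single-head scaled dot-product attention over lists of vectors.

    mask[i][j] is True when query i may attend to key j; None allows all.
    """
    dim = len(queries[0])
    outputs = []
    for i, q in enumerate(queries):
        # Scores only for the keys this query is allowed to see.
        scores = {j: sum(a * b for a, b in zip(q, k)) / math.sqrt(dim)
                  for j, k in enumerate(keys) if mask is None or mask[i][j]}
        m = max(scores.values())
        weights = {j: math.exp(s - m) for j, s in scores.items()}
        z = sum(weights.values())
        outputs.append([sum(w * values[j][d] for j, w in weights.items()) / z
                        for d in range(len(values[0]))])
    return outputs

def add(xs, ys):
    # Residual connection: element-wise sum of two lists of vectors.
    return [[a + b for a, b in zip(x, y)] for x, y in zip(xs, ys)]

def decoder_layer(text, image, pass_name):
    """One toy decoder layer, run in either of the two passes."""
    n = len(text)
    if pass_name == "generative":
        causal = [[j <= i for j in range(n)] for i in range(n)]
        h = add(text, attend(text, text, text, causal))  # causal self-attention
        return add(h, attend(h, image, image))           # cross-attention to image
    if pass_name == "contrastive":
        return add(text, attend(text, text, text))       # bidirectional, no image
    raise ValueError(f"unknown pass: {pass_name}")
```

Two properties follow directly and are easy to check: the contrastive-pass output does not change when the image changes, and in the generative pass the first token's output does not change when a later token changes. In a real model both passes would reuse the same projection weights, which is what lets one decoder serve both objectives.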

MaMMUT decoder-only two-pass learning enables both contrastive and generative learning paths through the same model.

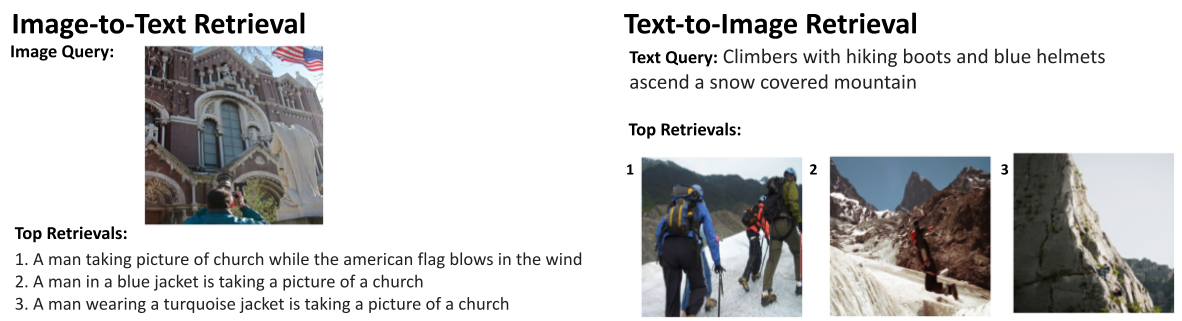

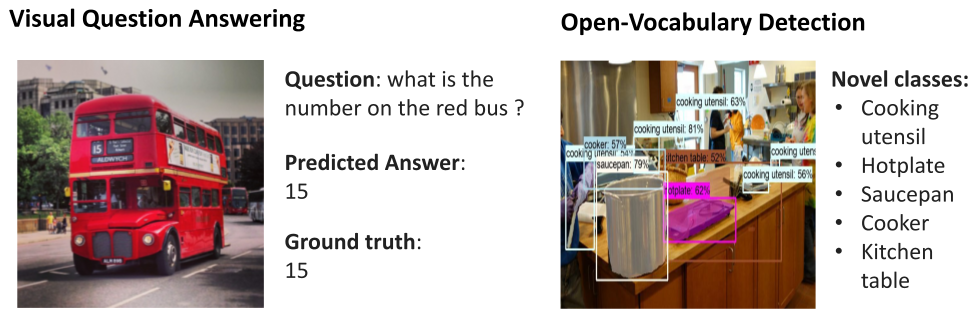

Another advantage of our architecture is that, since it is trained for these disjoint tasks, it can be seamlessly applied to multiple applications, such as image-text and text-image retrieval, VQA, and captioning.

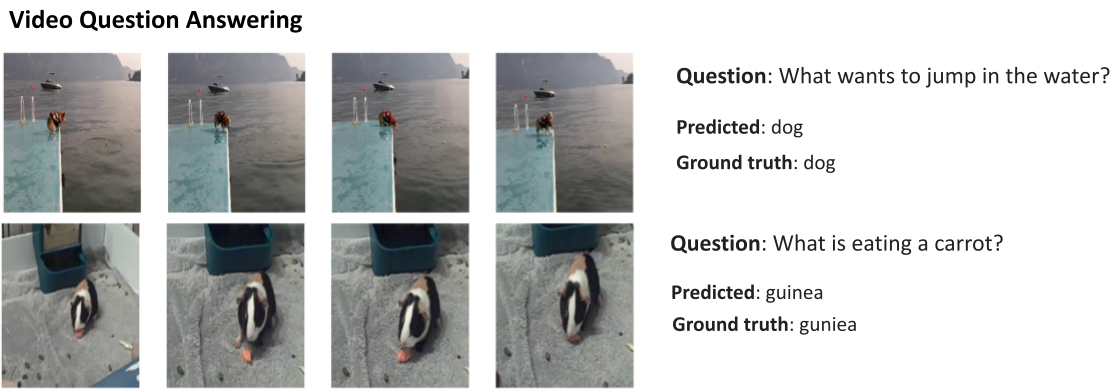

Moreover, MaMMUT easily adapts to video-language tasks. Previous approaches used a vision encoder to process each frame individually, which required applying it multiple times. This is slow and restricts the number of frames the model can handle, typically to only 6–8. With MaMMUT, we use sparse video tubes for lightweight adaptation directly via the spatio-temporal information from the video. Furthermore, adapting the model to Open-Vocabulary Detection is done by simply training it to detect bounding boxes via an object-detection head.
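
Sparse video tubes replace per-frame encoding with a single pass over a small set of spatio-temporal tokens. Below is a minimal sketch of the tokenization step only, assuming a grayscale video stored as nested `[T][H][W]` lists and a single tube shape with strides: each tube spans several frames and is flattened into one token vector. The helper names and shapes are hypothetical; the learned projection of each tube, the use of several tube shapes, and MaMMUT's actual tube configuration are not shown.

```python
def tube_starts(length, size, stride):
    # Start offsets along one axis for tubes of `size`, placed every `stride`.
    return list(range(0, length - size + 1, stride))

def extract_tubes(video, tube_shape, strides):
    """Flatten each (t, h, w) tube of a [T][H][W] video into one token vector."""
    num_frames, height, width = len(video), len(video[0]), len(video[0][0])
    (tt, th, tw), (st, sh, sw) = tube_shape, strides
    tokens = []
    for t0 in tube_starts(num_frames, tt, st):
        for y0 in tube_starts(height, th, sh):
            for x0 in tube_starts(width, tw, sw):
                tokens.append([video[t][y][x]
                               for t in range(t0, t0 + tt)
                               for y in range(y0, y0 + th)
                               for x in range(x0, x0 + tw)])
    return tokens

# Example: a 16-frame, 8x8 video whose pixel value encodes its (t, y, x) position.
video = [[[100 * t + 10 * y + x for x in range(8)] for y in range(8)]
         for t in range(16)]
tokens = extract_tubes(video, tube_shape=(4, 4, 4), strides=(8, 4, 4))
```

For this 16-frame, 8×8 clip, 4×4×4 tubes with a temporal stride of 8 yield 8 tokens of 64 values each; the stride skips frames 4 to 7 and 12 to 15 entirely, which is what makes the sampling sparse. The whole clip is covered by one small token set that the encoder can process in a single pass instead of once per frame.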

Results

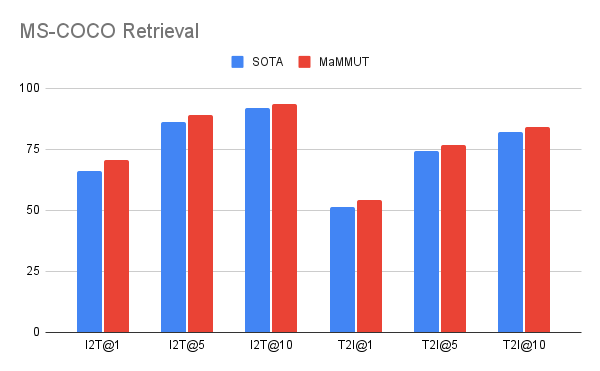

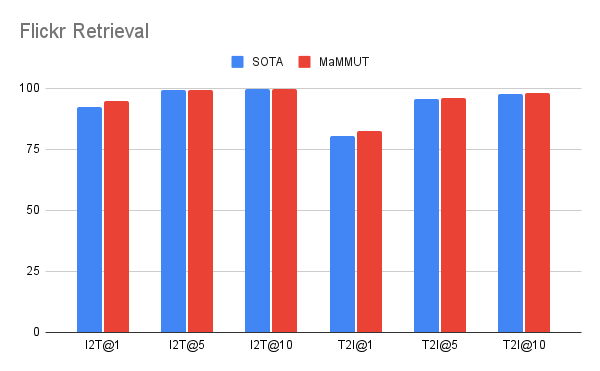

Our model achieves excellent zero-shot results on image-text and text-image retrieval without any adaptation, outperforming all previous state-of-the-art models. The results on VQA are competitive with the state-of-the-art results, which are achieved by much larger models. The PaLI model (17B parameters) and the Flamingo model (80B) have the best performance on the VQA2.0 dataset, but MaMMUT (2B) has the same accuracy as the 15B PaLI.

MaMMUT outperforms the state of the art (SOTA) on Zero-Shot Image-Text (I2T) and Text-Image (T2I) retrieval on both the MS-COCO (top) and Flickr (bottom) benchmarks.

Performance on the VQA2.0 dataset is competitive but does not surpass large models such as Flamingo-80B and PaLI-17B. Performance is evaluated in the more challenging open-ended text generation setting.

MaMMUT also outperforms the state of the art on VideoQA, on the MSRVTT-QA and MSVD-QA datasets. Note that we outperform much bigger models such as Flamingo, which is specifically designed for image+video pre-training and is pre-trained with both image-text and video-text data.

Our results also outperform the state of the art on open-vocabulary detection fine-tuning.

Key ingredients

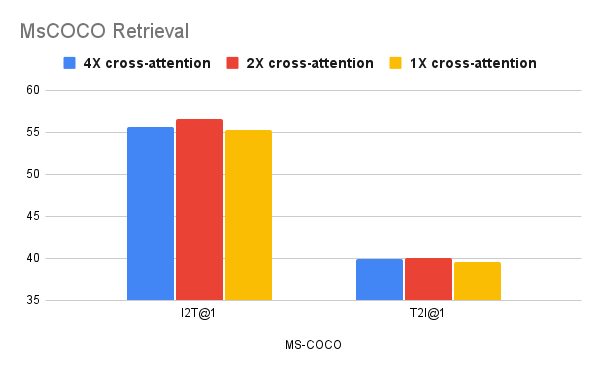

We show that joint training of contrastive and text-generative objectives is not an easy task, and in our ablations we find that these tasks are better served by different design choices. We see that fewer cross-attention connections are better for retrieval tasks, while more are preferred by VQA tasks. Yet, although this shows that our model's design choices might be suboptimal for individual tasks, our model is more effective than more complex or larger models.

Ablation studies showing that fewer cross-attention connections (1-2) are better for retrieval tasks (top), while more connections favor text-generative tasks such as VQA (bottom).
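
The number of cross-attention connections ablated above is a single architectural knob. Below is a minimal sketch of how such a knob might be expressed, assuming a hypothetical helper that spreads k cross-attention blocks evenly across the decoder layers and always includes the last layer. This placement rule and the 12-layer example are illustrative assumptions, not the configuration used in the ablations.

```python
def cross_attention_layers(num_layers, num_connections):
    """Indices of decoder layers that receive a cross-attention block.

    Connections are spread evenly and the last layer is always included;
    this placement rule is an illustrative assumption.
    """
    if not 1 <= num_connections <= num_layers:
        raise ValueError("need 1 <= num_connections <= num_layers")
    # Integer arithmetic keeps the indices distinct and avoids rounding issues.
    return [(i + 1) * num_layers // num_connections - 1
            for i in range(num_connections)]

def layer_specs(num_layers, num_connections):
    # Per-layer flags that a model builder could consume.
    chosen = set(cross_attention_layers(num_layers, num_connections))
    return [{"layer": i, "cross_attention": i in chosen}
            for i in range(num_layers)]
```

For a hypothetical 12-layer decoder, `cross_attention_layers(12, 2)` gives `[5, 11]`, in the spirit of the 1-2 connections that favor retrieval, while `cross_attention_layers(12, 6)` gives `[1, 3, 5, 7, 9, 11]`, leaning toward the denser connectivity that VQA prefers.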

Conclusion

We presented MaMMUT, a simple and compact vision-encoder language-decoder model that jointly trains a number of conflicting objectives to reconcile contrastive-like and text-generative tasks. Our model also serves as a foundation for many more vision-language tasks, achieving state-of-the-art or competitive performance on image-text and text-image retrieval, VideoQA, video captioning, open-vocabulary detection, and VQA. We hope it can be further used for more multimodal applications.

Acknowledgements

The work described is co-authored by: Weicheng Kuo, AJ Piergiovanni, Dahun Kim, Xiyang Luo, Ben Caine, Wei Li, Abhijit Ogale, Luowei Zhou, Andrew Dai, Zhifeng Chen, Claire Cui, and Anelia Angelova. We would like to thank Mojtaba Seyedhosseini, Vijay Vasudevan, Priya Goyal, Jiahui Yu, Zirui Wang, Yonghui Wu, Runze Li, Jie Mei, Radu Soricut, Qingqing Huang, Andy Ly, Nan Du, Yuxin Wu, Tom Duerig, Paul Natsev, and Zoubin Ghahramani for their help and support.