Robot learning has been applied to a wide range of challenging real-world tasks, including dexterous manipulation, legged locomotion, and grasping. It is less common to see robot learning applied to dynamic, high-acceleration tasks requiring tight-loop human-robot interaction, such as table tennis. Two complementary properties of the table tennis task make it interesting for robot learning research. First, the task requires both speed and precision, which puts significant demands on a learning algorithm. At the same time, the problem is highly structured (with a fixed, predictable environment) and naturally multi-agent (the robot can play with humans or another robot), making it a desirable testbed for investigating questions about human-robot interaction and reinforcement learning. These properties have led several research groups to develop table tennis research platforms [1, 2, 3, 4].

The Robotics team at Google has built such a platform to study problems that arise from robot learning in a multi-player, dynamic, and interactive setting. In the rest of this post we introduce two projects, Iterative-Sim2Real (to be presented at CoRL 2022) and GoalsEye (IROS 2022), which illustrate the problems we have been investigating so far. Iterative-Sim2Real enables a robot to hold rallies of over 300 hits with a human player, while GoalsEye enables learning goal-conditioned policies that match the precision of amateur humans.

| Iterative-Sim2Real policies playing cooperatively with humans (top) and a GoalsEye policy returning balls to different locations (bottom). |

Iterative-Sim2Real: Leveraging a Simulator to Play Cooperatively with Humans

In this project, the goal for the robot is cooperative in nature: to carry out a rally with a human for as long as possible. Since it would be tedious and time-consuming to train directly against a human player in the real world, we adopt a simulation-based (i.e., sim-to-real) approach. However, because it is difficult to simulate human behavior accurately, applying sim-to-real learning to tasks that require tight, closed-loop interaction with a human participant is difficult.

In Iterative-Sim2Real (i-S2R), we present a method for learning human behavior models for human-robot interaction tasks, and instantiate it on our robotic table tennis platform. We have built a system that can achieve rallies of up to 340 hits with an amateur human player (shown below).

| A 340-hit rally lasting over 4 minutes. |

Learning Human Behavior Models: a Chicken and Egg Problem

The central problem in learning accurate human behavior models for robotics is the following: if we do not have a good-enough robot policy to begin with, then we cannot collect high-quality data on how a person might interact with the robot. But without a human behavior model, we cannot obtain robot policies in the first place. An alternative would be to train a robot policy directly in the real world, but this is often slow, cost-prohibitive, and poses safety-related challenges, which are further exacerbated when people are involved. i-S2R, visualized below, is a solution to this chicken and egg problem. It uses a simple model of human behavior as an approximate starting point and alternates between training in simulation and deploying in the real world. In each iteration, both the human behavior model and the policy are refined.
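The alternation between simulated training and real-world deployment can be sketched as a loop. The following is a toy illustration only, not the i-S2R implementation: it assumes the human behavior model is a Gaussian over a single incoming-ball speed and that the "policy" is a single number, and `train_in_sim`, `deploy_in_real`, and `fit_human_model` are hypothetical stand-ins for simulated training, real-world play, and model fitting.

```python
import random
import statistics
from dataclasses import dataclass


@dataclass
class HumanModel:
    """Toy human behavior model (assumption): ball speed ~ Normal(mean, std)."""
    mean: float
    std: float

    def sample(self, rng: random.Random) -> float:
        return rng.gauss(self.mean, self.std)


def train_in_sim(policy: float, model: HumanModel, rng: random.Random,
                 steps: int = 200, lr: float = 0.1) -> float:
    """Stand-in for simulated training: pull the policy toward sampled balls."""
    for _ in range(steps):
        policy += lr * (model.sample(rng) - policy)
    return policy


def deploy_in_real(policy: float, rng: random.Random, n: int = 100) -> list:
    """Stand-in for real-world play: returns observed human ball speeds.

    The hidden "true" human below is hypothetical. In this toy the human
    ignores the policy; with a real player the data would depend on it.
    """
    true_mean, true_std = 6.0, 0.5
    return [rng.gauss(true_mean, true_std) for _ in range(n)]


def fit_human_model(observations: list) -> HumanModel:
    """Refit the human behavior model to all real-world data gathered so far."""
    return HumanModel(statistics.mean(observations),
                      statistics.stdev(observations))


def iterative_sim2real(iterations: int = 3, seed: int = 0) -> list:
    rng = random.Random(seed)
    model = HumanModel(mean=3.0, std=1.0)  # crude starting guess
    policy, data, history = 0.0, [], []
    for _ in range(iterations):
        policy = train_in_sim(policy, model, rng)   # train in simulation
        data.extend(deploy_in_real(policy, rng))    # deploy and collect data
        model = fit_human_model(data)               # refine the human model
        history.append((policy, model.mean))
    return history
```

In this sketch the first policy is trained against the crude initial guess, and later iterations train against a model refit to real-world observations, which is the sense in which both the model and the policy are refined each round.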


| i-S2R Methodology. |

Results

To evaluate i-S2R, we repeated the training process five times with five different human opponents and compared it with a baseline approach of ordinary sim-to-real plus fine-tuning (S2R+FT). When aggregated across all players, the i-S2R rally length is higher than S2R+FT by about 9% (below on the left). The histogram of rally lengths for i-S2R and S2R+FT (below on the right) shows that a large fraction of the rallies for S2R+FT are short (i.e., fewer than 5 hits), while i-S2R achieves longer rallies more frequently.
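As a rough sketch of how such rally-length statistics could be computed, the helper below returns the mean, median, quartiles, and the fraction of short rallies for a list of rally lengths. The function name, the cutoff parameter, and the choice of the "inclusive" quartile convention are assumptions for illustration; plotting tools differ in how they compute box bounds.

```python
import statistics
from typing import Dict, Sequence


def summarize_rallies(lengths: Sequence[int],
                      short_cutoff: int = 5) -> Dict[str, float]:
    """Summarize rally lengths (hits per rally) for one method."""
    # Quartiles with the "inclusive" convention (an assumption).
    q1, _, q3 = statistics.quantiles(lengths, n=4, method="inclusive")
    short = sum(1 for n in lengths if n < short_cutoff)
    return {
        "mean": statistics.mean(lengths),
        "median": statistics.median(lengths),
        "q1": q1,
        "q3": q3,
        "short_fraction": short / len(lengths),
    }
```

Calling `summarize_rallies` once per method on recorded rally lengths would give the quantities shown in a boxplot, plus the share of rallies below the cutoff that a histogram makes visible.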


| Summary of i-S2R results. Boxplot details: The white circle is the mean, the horizontal line is the median, box bounds are the 25th and 75th percentiles. |

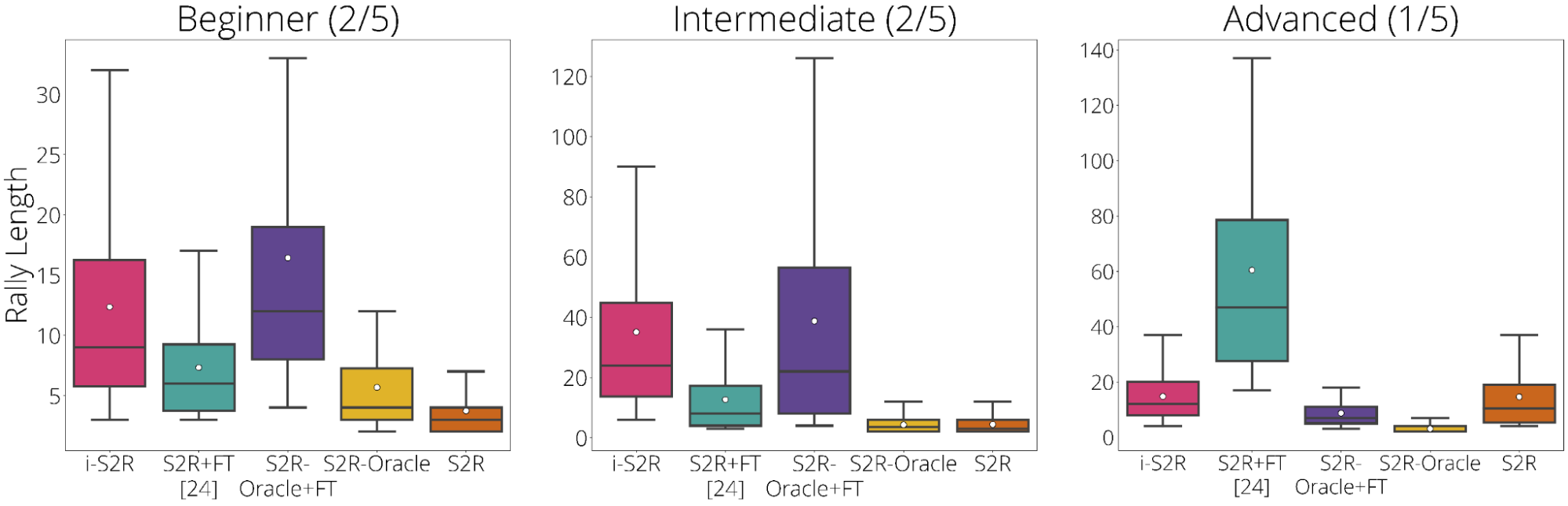

We also break down the results by player type: beginner (40% of players), intermediate (40% of players), and advanced (20% of players). We see that i-S2R significantly outperforms S2R+FT for both beginner and intermediate players (80% of players).


| i-S2R results by player type. |

More details on i-S2R can be found in our preprint, on our website, and in the following summary video.

GoalsEye: Learning to Return Balls Precisely on a Physical Robot

While we focused on sim-to-real learning in i-S2R, it is sometimes desirable to learn using only real-world data, in which case closing the sim-to-real gap is unnecessary. Imitation learning (IL) provides a simple and stable approach to learning in the real world, but it requires access to demonstrations and cannot exceed the performance of the teacher. Collecting expert human demonstrations of precise goal-targeting in high-speed settings is challenging and sometimes impossible (due to limited precision in human movements). While reinforcement learning (RL) is well-suited to such high-speed, high-precision tasks, it faces a difficult exploration problem (especially at the start), and can be very sample inefficient. In GoalsEye, we demonstrate an approach that combines recent behavior cloning techniques [5, 6] to learn a precise goal-targeting policy, starting from a small, weakly-structured, non-targeting dataset.

Here we consider a different table tennis task with an emphasis on precision. We want the robot to return the ball to an arbitrary goal location on the table, e.g., “hit the back left corner” or “land the ball just over the net on the right side” (see left video below). Further, we wanted to find a method that can be applied directly on our real-world table tennis environment with no simulation involved. We found that the synthesis of two existing imitation learning techniques, Learning from Play (LFP) and Goal-Conditioned Supervised Learning (GCSL), scales to this setting. It is safe and sample efficient enough to train a policy on a physical robot that is as accurate as amateur humans at the task of returning balls to specific goals on the table.

| GoalsEye policy aiming at a 20cm diameter goal (left). Human player aiming at the same goal (right). |

The essential ingredients of success are:

- A minimal, but non-goal-directed “bootstrap” dataset of the robot hitting the ball to overcome an initial difficult exploration problem.

- Hindsight relabeled goal-conditioned behavioral cloning (GCBC) to train a goal-directed policy to reach any goal in the dataset.

- Iterative self-supervised goal reaching. The agent improves continuously by setting random goals and attempting to reach them using the current policy. All attempts are relabeled and added into a continuously expanding training set. This self-practice, in which the robot expands the training data by setting and attempting to reach goals, is repeated iteratively.
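The three ingredients above can be sketched in a few lines. This is a toy illustration under stated assumptions, not the GoalsEye implementation: observations, actions, and landing points are one-dimensional, `toy_robot` is a hypothetical stand-in for the physical robot, and `policy` uses a nearest-neighbor lookup in place of a trained goal-conditioned network.

```python
import random
from typing import List, NamedTuple


class Episode(NamedTuple):
    obs: float      # incoming-ball feature (toy, 1-D)
    action: float   # paddle command (toy, 1-D)
    landing: float  # where the ball actually landed


class Example(NamedTuple):
    obs: float
    goal: float
    action: float


def toy_robot(obs: float, action: float, rng: random.Random) -> float:
    """Hypothetical stand-in for the real robot: returns the landing point."""
    return obs + action + rng.gauss(0.0, 0.01)


def relabel(ep: Episode) -> Example:
    """Hindsight relabeling: treat the achieved landing point as the goal."""
    return Example(ep.obs, ep.landing, ep.action)


def policy(dataset: List[Example], obs: float, goal: float) -> float:
    """Nearest-neighbor stand-in for a trained goal-conditioned policy."""
    best = min(dataset,
               key=lambda ex: (ex.obs - obs) ** 2 + (ex.goal - goal) ** 2)
    return best.action


def bootstrap(n: int, rng: random.Random) -> List[Episode]:
    """Non-goal-directed bootstrap data: the robot just hits the ball."""
    episodes = []
    for _ in range(n):
        obs, action = rng.random(), rng.uniform(-0.5, 0.5)
        episodes.append(Episode(obs, action, toy_robot(obs, action, rng)))
    return episodes


def self_practice(dataset: List[Example], attempts: int,
                  rng: random.Random) -> List[Example]:
    """Set random goals, attempt them, relabel, and grow the training set."""
    for _ in range(attempts):
        obs, goal = rng.random(), rng.random()
        action = policy(dataset, obs, goal)
        ep = Episode(obs, action, toy_robot(obs, action, rng))
        dataset.append(relabel(ep))  # every attempt becomes training data
    return dataset
```

Because every attempt is relabeled with the landing point it actually achieved, even a miss yields a valid training example, which is why the training set can keep expanding without any goal-directed demonstrations.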


| GoalsEye methodology. |

Demonstrations and Self-Improvement Through Practice Are Key

The synthesis of techniques is crucial. The policy’s objective is to return a variety of incoming balls to any location on the opponent’s side of the table. A policy trained on the initial 2,480 demonstrations lands the ball within 30 cm of the goal only 9% of the time. However, after a policy has self-practiced for ~13,500 attempts, goal-reaching accuracy rises to 43% (below on the right). This improvement is clearly visible in the videos below. Yet if a policy only self-practices, training fails completely in this setting. Interestingly, increasing the number of demonstrations improves the efficiency of subsequent self-practice, albeit with diminishing returns. This indicates that demonstration data and self-practice could be substituted for one another, depending on the relative time and cost to gather demonstration data compared with self-practice.
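For concreteness, a goal-reaching accuracy of this kind can be computed as the fraction of attempts whose landing point falls within a distance threshold of the goal. The sketch below assumes 2-D table coordinates in meters and treats the 30 cm boundary as inclusive; the function name and both conventions are assumptions, not taken from the GoalsEye code.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]  # (x, y) on the table, in meters (assumption)


def goal_reaching_accuracy(landings: Sequence[Point],
                           goals: Sequence[Point],
                           threshold_m: float = 0.30) -> float:
    """Fraction of attempts that land within threshold_m of their goal."""
    if len(landings) != len(goals):
        raise ValueError("landings and goals must have the same length")
    if not landings:
        raise ValueError("need at least one attempt")
    hits = sum(1 for landing, goal in zip(landings, goals)
               if math.dist(landing, goal) <= threshold_m)
    return hits / len(landings)
```

For example, with three attempts that miss their goals by 0.1 m, 1.0 m, and roughly 0.28 m, two of the three fall inside the 0.30 m threshold, so the function returns 2/3.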


| Self-practice substantially improves accuracy. Left: simulated training. Right: real robot training. The demonstration datasets contain ~2,500 episodes, both in simulation and the real world. |

| Visualizing the benefits of self-practice. Left: policy trained on initial 2,480 demonstrations. Right: policy after an additional 13,500 self-practice attempts. |

More details on GoalsEye can be found in the preprint and on our website.

Conclusion and Future Work

We have presented two complementary projects using our robotic table tennis research platform. i-S2R learns RL policies that are able to interact with humans, while GoalsEye demonstrates that learning from real-world unstructured data combined with self-supervised practice is effective for learning goal-conditioned policies in a precise, dynamic setting.

One interesting research direction to pursue on the table tennis platform would be to build a robot “coach” that could adapt its play style according to the skill level of the human player to keep things challenging and exciting.

Acknowledgements

We thank our co-authors, Saminda Abeyruwan, Alex Bewley, Krzysztof Choromanski, David B. D’Ambrosio, Tianli Ding, Deepali Jain, Corey Lynch, Pannag R. Sanketi, Pierre Sermanet and Anish Shankar. We are also grateful for the support of many members of the Robotics Team who are listed in the acknowledgement sections of the papers.