As the pace of AI and the transformation it enables across industries continues to accelerate, Microsoft is committed to building and enhancing our global cloud infrastructure to meet the needs of customers and developers with faster, more performant, and more efficient compute and AI solutions. Azure AI infrastructure comprises technology from industry leaders as well as Microsoft’s own innovations, including Azure Maia 100, Microsoft’s first in-house AI accelerator, announced in November. In this blog, we will dive deeper into the technology and journey of developing Azure Maia 100, the co-design of hardware and software from the ground up, built to run cloud-based AI workloads and optimized for Azure AI infrastructure.

Azure Maia 100, pushing the boundaries of semiconductor innovation

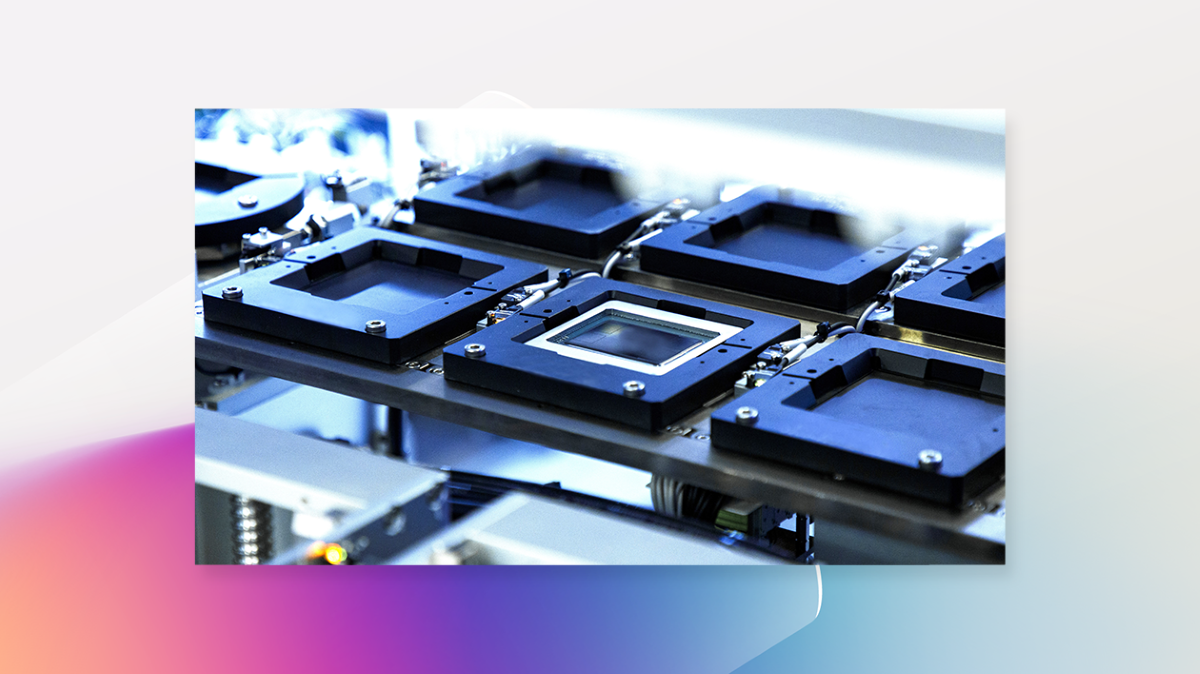

Maia 100 was designed to run cloud-based AI workloads, and the design of the chip was informed by Microsoft’s experience in running complex and large-scale AI workloads such as Microsoft Copilot. Maia 100 is one of the largest processors made on a 5nm node, using advanced packaging technology from TSMC.

Through collaboration with Azure customers and leaders in the semiconductor ecosystem, such as foundry and EDA partners, we will continue to apply real-world workload requirements to our silicon design, optimizing the entire stack from silicon to service, and delivering the best technology to our customers to empower them to achieve more.

End-to-end systems optimization, designed for scalability and sustainability

When developing the architecture for the Azure Maia AI accelerator series, Microsoft reimagined the end-to-end stack so that our systems could handle frontier models more efficiently and in less time. AI workloads demand infrastructure that is dramatically different from other cloud compute workloads, requiring increased power, cooling, and networking capability. Maia 100’s custom rack-level power distribution and management integrates with Azure infrastructure to achieve dynamic power optimization. Maia 100 servers are designed with a fully custom, Ethernet-based network protocol with an aggregate bandwidth of 4.8 terabits per accelerator to enable better scaling and end-to-end workload performance.
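To put the networking figure in more familiar units, the short sketch below converts it to gigabytes per second. It assumes the 4.8 terabits is a per-second rate and uses decimal units (1 terabit = 10^12 bits). The function names and the 60 GB payload are hypothetical, chosen only for illustration, and the transfer time is an idealized lower bound that ignores protocol overhead.

```python
BITS_PER_BYTE = 8


def terabits_to_gigabytes_per_second(terabits_per_second):
    """Convert a link rate in Tbit/s to GB/s using decimal (SI) units."""
    bits_per_second = terabits_per_second * 1e12
    return bits_per_second / BITS_PER_BYTE / 1e9


def ideal_transfer_seconds(payload_gigabytes, terabits_per_second):
    """Best-case time to move a payload, ignoring all protocol overhead."""
    return payload_gigabytes / terabits_to_gigabytes_per_second(terabits_per_second)


if __name__ == "__main__":
    rate = terabits_to_gigabytes_per_second(4.8)  # figure quoted in the text
    print(f"aggregate bandwidth: about {rate:.0f} GB/s per accelerator")
    # Hypothetical 60 GB payload, for illustration only.
    print(f"ideal time for 60 GB: {ideal_transfer_seconds(60, 4.8):.2f} s")
```

Under these assumptions, 4.8 terabits per second works out to roughly 600 gigabytes per second per accelerator.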

When we developed Maia 100, we also built a dedicated “sidekick” to match the thermal profile of the chip and added rack-level, closed-loop liquid cooling to Maia 100 accelerators and their host CPUs to achieve higher efficiency. This architecture allows us to bring Maia 100 systems into our existing datacenter infrastructure, and to fit more servers into these facilities, all within our existing footprint. The Maia 100 sidekicks are also built and manufactured to meet our zero waste commitment.

Co-optimizing hardware and software from the ground up with the open-source ecosystem

From the start, transparency and collaborative advancement have been core tenets in our design philosophy as we build and develop Microsoft’s cloud infrastructure for compute and AI. Collaboration enables faster iterative development across the industry, and on the Maia 100 platform we’ve cultivated an open community mindset from algorithmic data types to software to hardware.

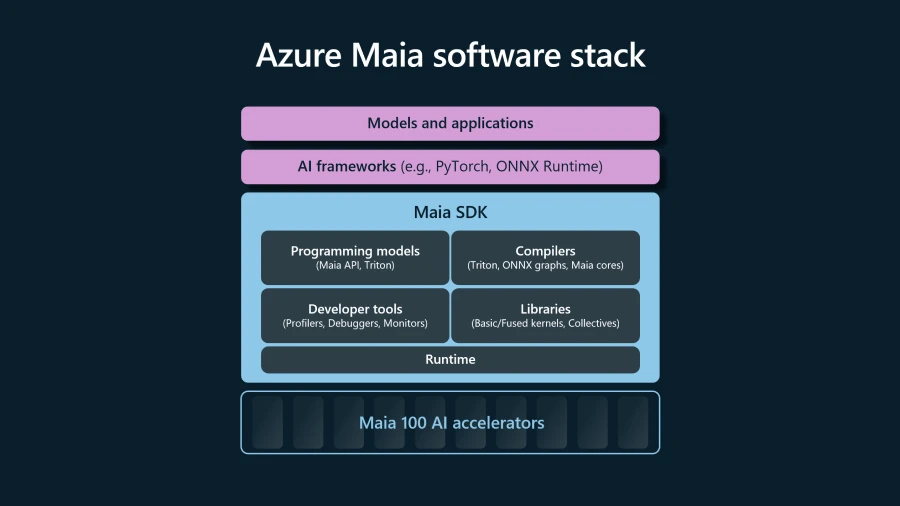

To make it easy to develop AI models on Azure AI infrastructure, Microsoft is creating the software for Maia 100 that integrates with popular open-source frameworks like PyTorch and ONNX Runtime. The software stack provides rich and comprehensive libraries, compilers, and tools to equip data scientists and developers to successfully run their models on Maia 100.

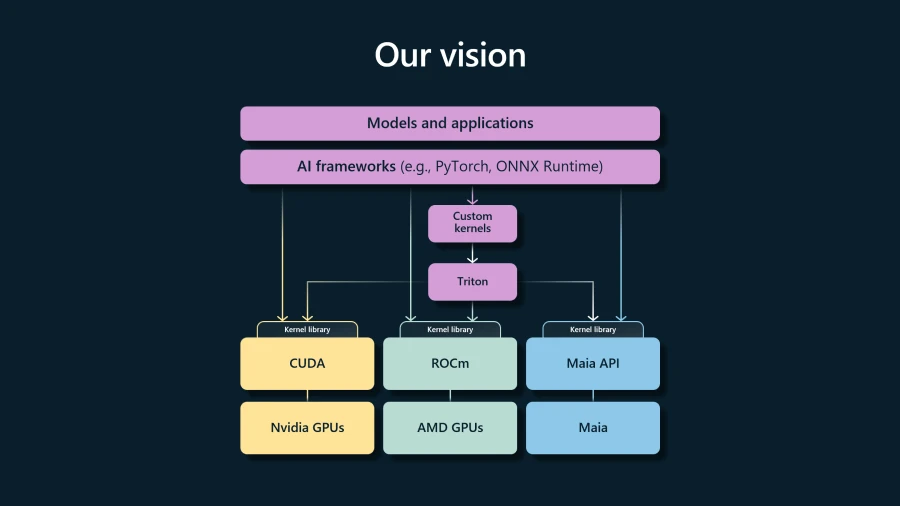

To optimize workload performance, AI hardware typically requires development of custom kernels that are silicon-specific. We envision seamless interoperability among AI accelerators in Azure, so we have integrated Triton from OpenAI. Triton is an open-source programming language that simplifies kernel authoring by abstracting the underlying hardware. This will empower developers with complete portability and flexibility without sacrificing efficiency and the ability to target AI workloads.

Maia 100 is also the first implementation of the Microscaling (MX) data format, an industry-standardized data format that leads to faster model training and inferencing times. Microsoft has partnered with AMD, ARM, Intel, Meta, NVIDIA, and Qualcomm to release the v1.0 MX specification through the Open Compute Project community so that the entire AI ecosystem can benefit from these algorithmic improvements.
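The core idea behind a microscaling format can be sketched in a few lines: a small block of values shares a single power-of-two scale, and each value is stored as a narrow element. The sketch below is a simplified illustration, not the MX specification’s exact encoding and not how Maia 100 implements it. The block size of 32 and the shared power-of-two scale follow our reading of the v1.0 specification, while the plain signed 8-bit integer elements, the rounding rule, and the function names are assumptions made for illustration.

```python
import math

BLOCK_SIZE = 32  # assumed block size: elements that share one scale
INT8_MAX = 127   # largest magnitude kept in a signed 8-bit element


def quantize_block(values):
    """Return (shared_exponent, int8_elements) for one block of floats."""
    if len(values) != BLOCK_SIZE:
        raise ValueError(f"expected {BLOCK_SIZE} values, got {len(values)}")
    max_abs = max(abs(v) for v in values)
    if max_abs == 0.0:
        return 0, [0] * BLOCK_SIZE
    # Smallest power-of-two scale that keeps max_abs / scale within int8 range.
    shared_exponent = math.ceil(math.log2(max_abs / INT8_MAX))
    scale = 2.0 ** shared_exponent
    # Round to the nearest integer and clamp as a guard against float error.
    elements = [max(-INT8_MAX, min(INT8_MAX, round(v / scale))) for v in values]
    return shared_exponent, elements


def dequantize_block(shared_exponent, elements):
    """Reconstruct approximate floats from a shared exponent and elements."""
    scale = 2.0 ** shared_exponent
    return [e * scale for e in elements]


if __name__ == "__main__":
    block = [math.sin(i) * 3.0 for i in range(BLOCK_SIZE)]
    exponent, quantized = quantize_block(block)
    restored = dequantize_block(exponent, quantized)
    worst = max(abs(a - b) for a, b in zip(block, restored))
    print(f"shared exponent: {exponent}, worst-case error: {worst:.5f}")
```

In this simplified layout, a block costs 32 × 8 bits for the elements plus 8 bits for the shared scale, or 264 bits, compared with 1,024 bits for the same 32 values in 32-bit floating point. Smaller storage and data movement of this kind is one way a narrow shared-scale format can contribute to faster training and inference.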

Azure Maia 100 is a unique innovation combining state-of-the-art silicon packaging techniques, ultra-high-bandwidth networking design, modern cooling and power management, and algorithmic co-design of hardware with software. We look forward to continuing to advance our goal of making AI real by introducing more silicon, systems, and software innovations into our datacenters globally.