AI image generators, which create fantastical sights at the intersection of dreams and reality, bubble up on every corner of the web. Their entertainment value is demonstrated by an ever-expanding treasure trove of whimsical and random images serving as indirect portals to the brains of human designers. A simple text prompt yields a nearly instantaneous image, satisfying our primitive brains, which are hardwired for instant gratification.

Although seemingly nascent, the field of AI-generated art can be traced back as far as the 1960s, with early attempts using symbolic rule-based approaches to make technical images. While the models that untangle and parse words have grown increasingly sophisticated, the explosion of generative art has sparked debate around copyright, disinformation, and biases, all mired in hype and controversy. Yilun Du, a PhD student in the Department of Electrical Engineering and Computer Science and affiliate of MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL), recently developed a new method that makes models like DALL-E 2 more creative and gives them better scene understanding. Here, Du describes how these models work, whether this technical infrastructure can be applied to other domains, and how we draw the line between AI and human creativity.

Q: AI-generated images use something called “stable diffusion” models to turn words into astounding images in just a few moments. But for every image used, there’s usually a human behind it. So what’s the line between AI and human creativity? How do these models really work?

A: Imagine all of the images you could get on Google Search and their associated patterns. This is the diet these models are fed on. They’re trained on all of these images and their captions to generate images similar to the billions of images they have seen on the internet.

Let’s say a model has seen a lot of dog photos. It’s trained so that when it gets a similar text input prompt like “dog,” it’s able to generate a photo that looks very similar to the many dog pictures it has already seen. Now, more methodologically, how this all works dates back to a very old class of models called “energy-based models,” originating in the ’70s or ’80s.

In energy-based models, an energy landscape over images is constructed, which is used to simulate physical dissipation to generate images. When you drop a dot of ink into water and it dissipates, for example, at the end you just get this uniform texture. But if you try to reverse this process of dissipation, you gradually get the original ink dot in the water again. Or let’s say you have this very intricate block tower, and if you hit it with a ball, it collapses into a pile of blocks. This pile of blocks is then very disordered, and there’s not really much structure to it. To resuscitate the tower, you can try to reverse this collapsing process to recover your original tower.

The way these generative models generate images is very similar. Initially, you have this really nice image, which dissipates into random noise. You basically learn how to simulate the reverse of this process, going from noise back to your original image, where you try to iteratively refine the image to make it more and more realistic.
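The “start from noise and iteratively refine” idea can be illustrated with a minimal sketch. It is a toy under stated assumptions, not how any production image model is implemented: the “image” is a single number, the energy landscape is a hand-written quadratic bowl rather than a trained neural network, and the update rule is Langevin dynamics, a standard way to sample from an energy-based model. The names `energy_gradient` and `refine_from_noise` are hypothetical.

```python
import math
import random


def energy_gradient(x, mean=3.0, std=0.5):
    """Gradient of the toy energy E(x) = (x - mean)^2 / (2 * std^2).

    Assumption: a real model would learn this landscape from data;
    here it is written by hand so the example stays self-contained.
    """
    return (x - mean) / (std ** 2)


def refine_from_noise(n_steps=500, step_size=0.01, seed=0):
    """Start from pure noise and repeatedly nudge the sample toward low energy."""
    rng = random.Random(seed)
    x = rng.gauss(0.0, 10.0)  # very noisy starting point
    for _ in range(n_steps):
        noise = rng.gauss(0.0, 1.0)
        # Langevin update: step against the energy gradient, plus a little noise.
        x = x - 0.5 * step_size * energy_gradient(x) + math.sqrt(step_size) * noise
    return x


# Many independent runs should cluster around the low-energy region (near 3.0).
samples = [refine_from_noise(seed=s) for s in range(200)]
sample_mean = sum(samples) / len(samples)
```

Each run begins far from the low-energy region and ends close to it, which is the one-dimensional analogue of noise gradually turning into a plausible image.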

In terms of the line between AI and human creativity, you can say that these models are really trained on the creativity of people. The internet has all types of paintings and images that people have already created in the past. These models are trained to recapitulate and generate the images that have been on the internet. As a result, these models are more like crystallizations of what people have spent creativity on for hundreds of years.

At the same time, because these models are trained on what humans have designed, they can generate pieces of art very similar to what humans have done in the past. They can find patterns in art that people have made, but it’s much harder for these models to actually generate creative photos on their own.

If you try to enter a prompt like “abstract art” or “unique art” or the like, the model doesn’t really understand the creativity aspect of human art. The models are, rather, recapitulating what people have done in the past, so to speak, as opposed to generating fundamentally new and creative art.

Since these models are trained on vast swaths of images from the internet, a lot of these images are likely copyrighted. You don’t exactly know what the model is retrieving when it’s generating new images, so there’s a big question of how you can even determine if the model is using copyrighted images. If the model depends, in some sense, on some copyrighted images, are those new images then copyrighted? That’s another question to address.

Q: Do you believe images generated by diffusion models encode some sort of understanding about natural or physical worlds, either dynamically or geometrically? Are there efforts toward “teaching” image generators the basics of the universe that babies learn so early on?

A: Do they understand, in code, some grasp of natural and physical worlds? I think definitely. If you ask a model to generate a stable configuration of blocks, it definitely generates a block configuration that is stable. If you tell it to generate an unstable configuration of blocks, the result does look very unstable. Or if you say “a tree next to a lake,” it’s roughly able to generate that.

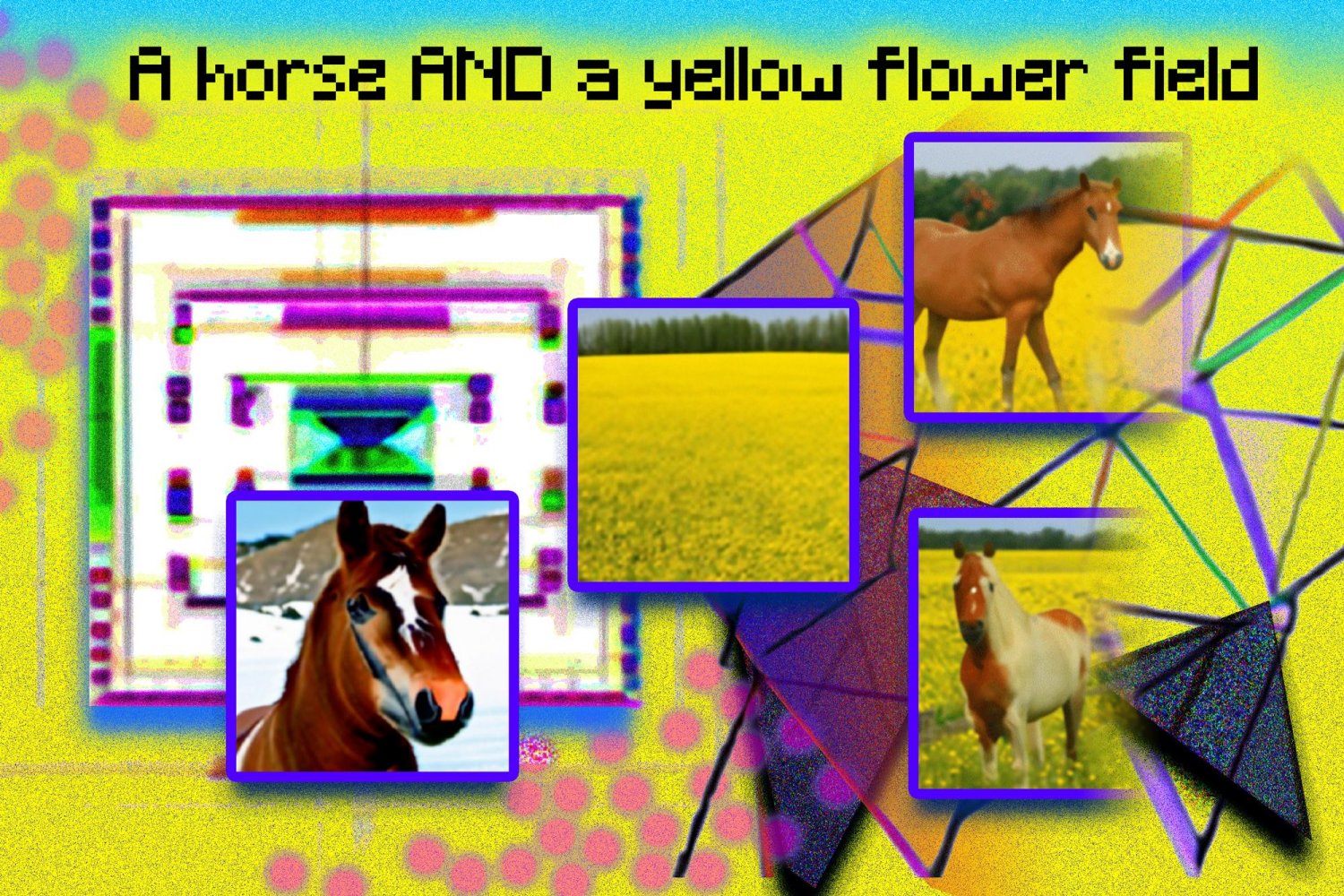

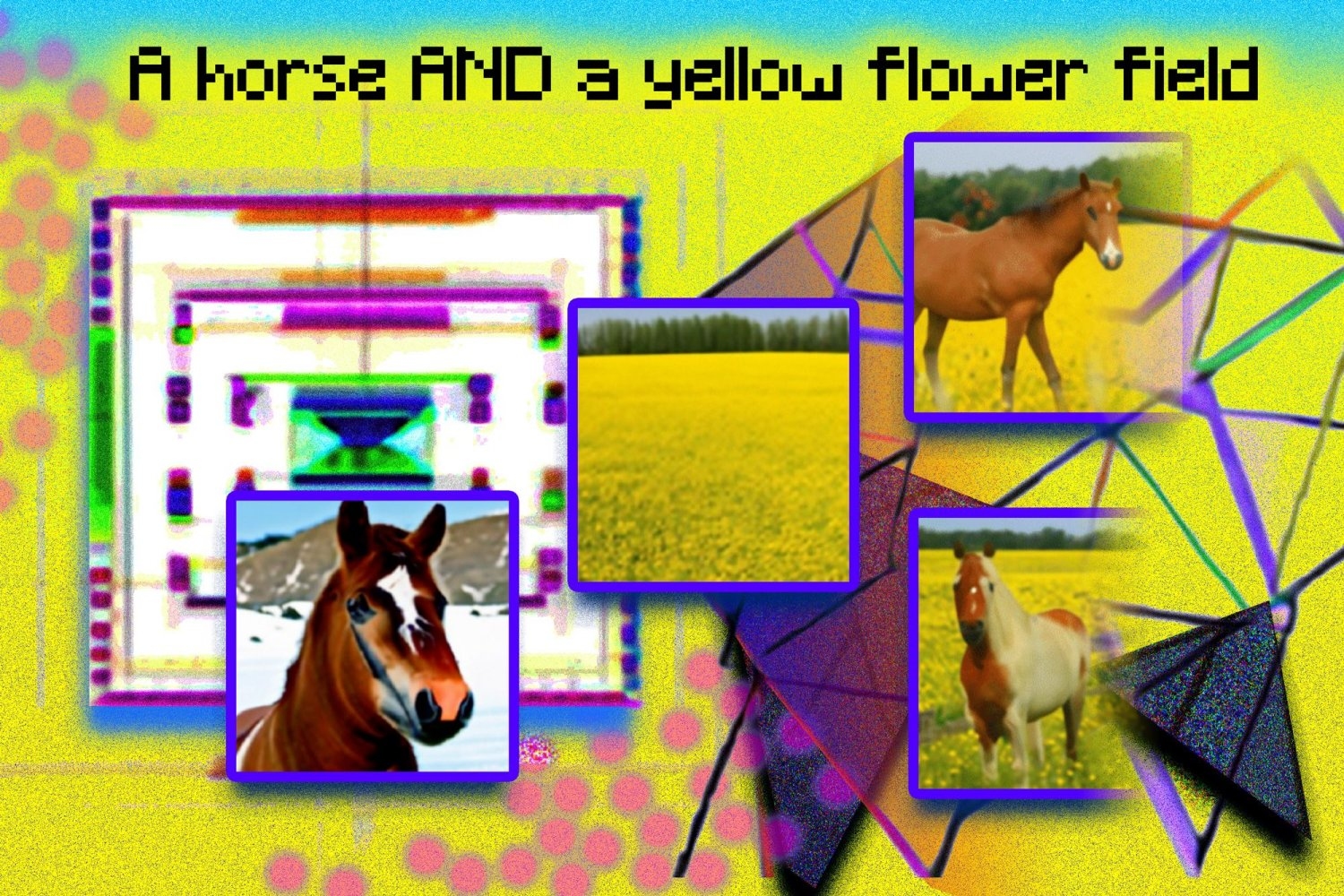

In a sense, it seems like these models have captured a large aspect of common sense. But the issue that keeps us, still, very far away from truly understanding the natural and physical world is that when you try to generate infrequent combinations of words that you or I can very easily imagine in our minds, these models cannot.

For example, if you say, “put a fork on top of a plate,” that happens all the time. If you ask the model to generate this, it easily can. If you say, “put a plate on top of a fork,” again, it’s very easy for us to imagine what this would look like. But if you put this into any of these large models, you will never get a plate on top of a fork. You instead get a fork on top of a plate, since the models are learning to recapitulate all the images they’ve been trained on. They can’t really generalize that well to combinations of words they haven’t seen.

A fairly well-known example is an astronaut riding a horse, which the model can do with ease. But if you say a horse riding an astronaut, it still generates a person riding a horse. It seems like these models are capturing a lot of correlations in the datasets they’re trained on, but they’re not actually capturing the underlying causal mechanisms of the world.

Another example that’s commonly used is a very complicated text description, like one object to the right of another, a third object in the front, and a fourth one flying. The model really is only able to satisfy maybe one or two of the objects. This could be partially because of the training data, as it’s rare to have very complicated captions. But it could also suggest that these models aren’t very structured. You can imagine that if you get very complicated natural language prompts, there’s no manner in which the model can accurately represent all the component details.

Q: You recently came up with a new method that uses multiple models to create more complex images with better understanding for generative art. Are there potential applications of this framework outside of image or text domains?

A: We were really inspired by one of the limitations of these models. When you give these models very complicated scene descriptions, they aren’t actually able to correctly generate images that match them.

One thought is that, since it’s a single model with a fixed computational graph, meaning you can only use a fixed amount of computation to generate an image, if you get an extremely complicated prompt, there’s no way you can use more computational power to generate that image.

If I gave a human a description of a scene that was, say, 100 lines long versus a scene that’s one line long, a human artist can spend much longer on the former. These models don’t really have the sensibility to do this. We propose, then, that given very complicated prompts, you can actually compose many different independent models together and have each individual model represent a portion of the scene you want to describe.
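One way to picture composing independent models is the following minimal sketch. It rests on an assumption the interview does not spell out: that models are combined by adding their energy gradients, a common way to make a sample satisfy several energy-based models at once. The two “concept” functions are hypothetical hand-written stand-ins for trained models, each constraining only one coordinate of a two-dimensional “scene.”

```python
import math
import random


def grad_concept_a(point):
    """Hypothetical model A: low energy when the first coordinate is near 2."""
    x, _ = point
    return ((x - 2.0) / 0.25, 0.0)


def grad_concept_b(point):
    """Hypothetical model B: low energy when the second coordinate is near -1."""
    _, y = point
    return (0.0, (y + 1.0) / 0.25)


def sample_composed(grad_fns, n_steps=500, step_size=0.01, seed=0):
    """Langevin sampling on the sum of several models' energy gradients."""
    rng = random.Random(seed)
    point = (rng.gauss(0.0, 5.0), rng.gauss(0.0, 5.0))  # noisy start
    for _ in range(n_steps):
        grads = [fn(point) for fn in grad_fns]
        # Composition step (assumed): add up the gradients from every model.
        total = (sum(g[0] for g in grads), sum(g[1] for g in grads))
        point = tuple(
            p - 0.5 * step_size * g + math.sqrt(step_size) * rng.gauss(0.0, 1.0)
            for p, g in zip(point, total)
        )
    return point


# Samples from the composed models should satisfy both constraints at once.
points = [sample_composed([grad_concept_a, grad_concept_b], seed=s) for s in range(200)]
mean_x = sum(p[0] for p in points) / len(points)
mean_y = sum(p[1] for p in points) / len(points)
```

Because each model contributes only its own part of the description, a longer prompt can be handled in this sketch by adding more gradient functions to the list, so the computation grows with the prompt.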

We find that this enables our model to generate more complicated scenes, or those that more accurately generate different aspects of the scene together. In addition, this approach can be generally applied across a variety of different domains. While image generation is likely the most successful application at present, generative models have actually been seeing all types of applications in a variety of domains. You can use them to generate diverse robot behaviors, synthesize 3D shapes, enable better scene understanding, or design new materials. You could potentially compose multiple desired factors to generate the exact material you need for a particular application.

One thing we’ve been very interested in is robotics. In the same way that you can generate different images, you can also generate different robot trajectories (the path and schedule), and by composing different models together, you are able to generate trajectories with different combinations of skills. If I have natural language specifications of jumping versus avoiding an obstacle, you could also compose these models together, and then generate robot trajectories that can both jump and avoid an obstacle.

In a similar manner, if we want to design proteins, we can specify different functions or aspects with language-like descriptions, such as the type or functionality of the protein, analogous to how we use language to specify the content of images. We could then compose these together to generate new proteins that can potentially satisfy all of these given functions.

We’ve also explored using diffusion models for 3D shape generation, where you can use this approach to generate and design 3D assets. Normally, 3D asset design is a very complicated and laborious process. By composing different models together, it becomes much easier to generate shapes from requests such as, “I want a 3D shape with four legs, with this style and height,” potentially automating portions of 3D asset design.