This is the fourth blog in our series on LLMOps for enterprise leaders. Read the first, second, and third articles to learn more about LLMOps on Azure AI.

In our LLMOps blog series, we've explored various dimensions of Large Language Models (LLMs) and their responsible use in AI operations. Taking the discussion further, we now introduce the LLMOps maturity model, a vital compass for enterprise leaders. This model is not just a roadmap from foundational LLM usage to mastery in deployment and operational management. It is a strategic guide that shows why understanding and implementing the model is essential for navigating the ever-evolving landscape of AI. Take, for instance, Siemens' use of Microsoft Azure AI Studio and prompt flow to streamline LLM workflows. These workflows support Teamcenter, Siemens' industry-leading product lifecycle management (PLM) solution, and connect people who find problems with those who can fix them. This real-world application exemplifies how the LLMOps maturity model facilitates the transition from theoretical AI potential to practical, impactful deployment in a complex industry setting.

Exploring application maturity and operational maturity in Azure

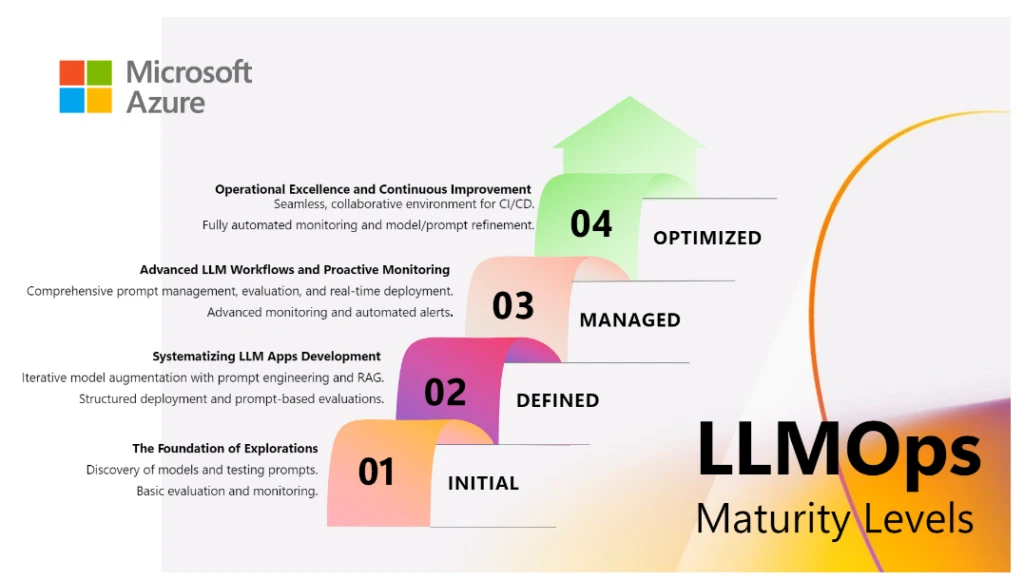

The LLMOps maturity model presents a multifaceted framework that captures two critical aspects of working with LLMs: the sophistication of application development and the maturity of operational processes.

Application maturity: This dimension centers on the advancement of LLM techniques within an application. In the initial stages, the emphasis is on exploring broad LLM capabilities. Teams then often progress toward more intricate techniques, such as fine-tuning and Retrieval Augmented Generation (RAG), to meet specific needs.

Operational maturity: Regardless of the complexity of the LLM techniques employed, operational maturity is essential for scaling applications. This includes systematic deployment, robust monitoring, and maintenance strategies. The focus here is on ensuring that LLM applications are reliable, scalable, and maintainable, whatever their level of sophistication.

This maturity model is designed to reflect the dynamic and ever-evolving landscape of LLM technology, which requires a balance between flexibility and a methodical approach. This balance is crucial for navigating the continuous advancements and exploratory nature of the field. The model outlines several levels, each with its own rationale and strategy for progression, and gives organizations a clear roadmap for enhancing their LLM capabilities.

LLMOps maturity model

Level One (Initial): The foundation of exploration

At this foundational level, organizations embark on a journey of discovery and basic understanding. The focus is predominantly on exploring the capabilities of pre-built LLMs, such as those offered through Microsoft Azure OpenAI Service APIs or through the inference APIs of Models as a Service (MaaS). This phase typically involves basic coding skills for interacting with these APIs, gaining insight into their functionality, and experimenting with simple prompts. This level is characterized by manual processes and isolated experiments, and it does not yet prioritize comprehensive evaluations, monitoring, or advanced deployment strategies. Instead, the primary objective is to understand the potential and limitations of LLMs through hands-on experimentation. That understanding is crucial for seeing how these models can be applied to real-world scenarios.

At companies like Contoso¹, developers are encouraged to experiment with a variety of models, including GPT-4 from Azure OpenAI Service and Llama 2 from Meta AI. Accessing these models through the Azure AI model catalog allows them to determine which models are most effective for their specific datasets. This stage is pivotal in laying the groundwork for more advanced applications and operational strategies in the LLMOps journey.
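At this level the "basic coding" usually amounts to a small script that sends the same prompts to a few models and collects the answers for manual comparison. The sketch below shows that shape under stated assumptions. It builds chat-style `system`/`user` messages and loops over a model-by-prompt grid. `call_model` is a hypothetical hook: in practice it would wrap an SDK call to your deployed endpoint. Here it is replaced by an offline stub, and the model names are placeholders.

```python
from dataclasses import dataclass
from typing import Callable

# Chat-style payload: a system instruction followed by the user's prompt.
def build_messages(system: str, user: str) -> list[dict[str, str]]:
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user},
    ]

@dataclass
class Trial:
    model: str
    prompt: str
    output: str

# Hypothetical hook: call_model(model_name, messages) -> response text.
ModelCaller = Callable[[str, list[dict[str, str]]], str]

def run_grid(models: list[str], prompts: list[str], call_model: ModelCaller,
             system: str = "You are a helpful assistant.") -> list[Trial]:
    """Send every prompt to every model and keep raw outputs for side-by-side review."""
    trials = []
    for model in models:
        for prompt in prompts:
            output = call_model(model, build_messages(system, prompt))
            trials.append(Trial(model, prompt, output))
    return trials

def fake_call_model(model: str, messages: list[dict[str, str]]) -> str:
    # Offline stub so the sketch runs without credentials or network access.
    return f"[{model}] echo: {messages[-1]['content']}"

if __name__ == "__main__":
    # Placeholder model names; substitute the deployments you actually use.
    for t in run_grid(["gpt-4", "llama-2-7b-chat"], ["Summarize our return policy."], fake_call_model):
        print(t.model, "|", t.output)
```

The results are reviewed by hand, and nothing is scored automatically. That matches the manual, isolated character of this level.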

Level Two (Defined): Systematizing LLM app development

As organizations become more proficient with LLMs, they start adopting a systematic methodology in their operations. This level introduces structured development practices that focus on prompt design and the effective use of different types of prompts, such as those found in the meta prompt templates in Azure AI Studio. At this level, developers start to understand how different prompts affect LLM outputs and why responsible AI matters in generated content.
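One way to make prompt design systematic is to treat the meta prompt (system message) as a parameterized template. Filling it in this way makes each variant explicit and reproducible. The sketch below uses Python's `string.Template` for that purpose. The placeholder names and guardrail wording are hypothetical illustrations; they are not the fields of Azure AI Studio's own templates.

```python
from string import Template

# Hypothetical meta prompt template; placeholders are illustrative only.
META_PROMPT = Template(
    "You are $assistant_role.\n"
    "Respond in a $tone tone.\n"
    "Only answer questions about $topic_scope; if a request is out of scope, say so.\n"
    "Do not generate harmful, hateful, or sexual content.\n"
    "If you do not know the answer, say you do not know instead of guessing."
)

def render_meta_prompt(**fields: str) -> str:
    # substitute() raises KeyError if any placeholder is left unfilled,
    # which catches incomplete prompt variants before they reach a model.
    return META_PROMPT.substitute(**fields)

def prompt_variants(base: dict[str, str], tones: list[str]) -> dict[str, str]:
    """Render one variant per tone so their effect on outputs can be compared."""
    return {tone: render_meta_prompt(**{**base, "tone": tone}) for tone in tones}

if __name__ == "__main__":
    base = {"assistant_role": "a support agent for Contoso", "topic_scope": "Contoso products"}
    for tone, text in prompt_variants(base, ["formal", "friendly"]).items():
        print(f"--- {tone} ---\n{text}")
```

Because the responsible-AI instructions sit in the fixed part of the template, every variant carries them, and only the intended fields change between experiments.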

An important tool at this level is Azure AI prompt flow. It streamlines the entire development cycle of LLM-powered AI applications, simplifying prototyping, experimentation, iteration, and deployment. At this point, developers start focusing on evaluating and monitoring their LLM flows responsibly. Prompt flow offers a comprehensive evaluation experience that lets developers assess applications on various metrics, including accuracy and responsible AI metrics such as groundedness. In addition, LLMs are combined with RAG techniques that pull information from organizational data. This enables tailored LLM solutions that keep data relevant and optimize costs.

For instance, AI developers at Contoso now use Azure AI Search to create indexes in vector databases. Content retrieved from these indexes is incorporated into prompts, so that RAG with prompt flow produces more contextual, grounded, and relevant responses. This stage represents a shift from basic exploration to more focused experimentation, aimed at understanding the practical use of LLMs in solving specific challenges.
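The RAG loop described above has three steps: embed the documents, retrieve the ones most similar to the question, and place them in the prompt as grounding. The sketch below shows those steps in an in-memory form. To keep it self-contained, it substitutes a bag-of-words term-frequency vector for a real embedding model and a Python list for a vector index such as Azure AI Search. Both are stand-ins, and `InMemoryIndex` is a hypothetical name.

```python
import math
import re
from collections import Counter

def embed(text: str) -> Counter:
    # Stand-in for an embedding model: a bag-of-words term-frequency vector.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

class InMemoryIndex:
    """Toy vector index; a production system would use a managed vector store."""

    def __init__(self, docs: list[str]):
        self.docs = docs
        self.vectors = [embed(d) for d in docs]

    def search(self, query: str, k: int = 2) -> list[str]:
        q = embed(query)
        ranked = sorted(range(len(self.docs)),
                        key=lambda i: cosine(q, self.vectors[i]), reverse=True)
        return [self.docs[i] for i in ranked[:k]]

def build_grounded_prompt(question: str, index: InMemoryIndex, k: int = 2) -> str:
    # Retrieved passages become the only sources the model is told to use.
    context = "\n".join(f"- {doc}" for doc in index.search(question, k))
    return ("Answer using only the sources below. If they do not contain the answer, say so.\n"
            f"Sources:\n{context}\n\nQuestion: {question}")

if __name__ == "__main__":
    index = InMemoryIndex([
        "Returns are accepted within 30 days of purchase.",
        "Our office is in Seattle.",
        "Shipping takes 5 business days.",
    ])
    print(build_grounded_prompt("How many days do I have for returns?", index, k=1))
```

The prompt-assembly step stays the same when the toy pieces are replaced with a real embedding model and index. That is why the retrieval logic is kept behind a small `search` method.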

Level Three (Managed): Advanced LLM workflows and proactive monitoring

At this level, the focus shifts to sophisticated prompt engineering. Developers create more complex prompts and integrate them effectively into applications. This requires a deeper understanding of how different prompts influence LLM behavior and outputs, and it leads to more tailored and effective AI solutions.

At this level, developers harness prompt flow's enhanced features, such as plugins and function calling, to build sophisticated flows involving multiple LLMs. They can also manage versions of prompts, code, configurations, and environments in code repositories, with the ability to track changes and roll back to earlier versions. Prompt flow's iterative evaluation capabilities become essential for refining LLM flows: developers conduct batch runs and apply evaluation metrics such as relevance, groundedness, and similarity. This lets them construct and compare metaprompt variations and determine which ones yield higher-quality outputs that align with their business objectives and responsible AI guidelines.
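A batch run reduces to a simple loop: send every metaprompt variant through the same dataset, score each output against a reference, and compare the mean scores. The sketch below follows that pattern. One caveat is important: prompt flow's relevance, groundedness, and similarity evaluators are typically LLM-judged, and here they are replaced by a token-overlap F1 score. That crude lexical proxy is chosen only so the example runs offline. The variant functions and the dataset fields (`question`, `ground_truth`) are assumptions.

```python
import re
from collections import Counter
from statistics import mean
from typing import Callable

def token_f1(prediction: str, reference: str) -> float:
    """Lexical overlap F1: a crude stand-in for an LLM-judged similarity metric."""
    pred = re.findall(r"\w+", prediction.lower())
    ref = re.findall(r"\w+", reference.lower())
    common = sum((Counter(pred) & Counter(ref)).values())
    if common == 0:
        return 0.0
    precision = common / len(pred)
    recall = common / len(ref)
    return 2 * precision * recall / (precision + recall)

def batch_evaluate(variants: dict[str, Callable[[str], str]],
                   dataset: list[dict[str, str]]) -> dict[str, float]:
    """Run every variant over the same dataset and return its mean score."""
    return {
        name: mean(token_f1(generate(row["question"]), row["ground_truth"]) for row in dataset)
        for name, generate in variants.items()
    }

def best_variant(scores: dict[str, float]) -> str:
    return max(scores, key=scores.get)

if __name__ == "__main__":
    data = [
        {"question": "q1", "ground_truth": "returns within 30 days"},
        {"question": "q2", "ground_truth": "ships in 5 days"},
    ]
    # Hypothetical variants: each maps a question to the answer that variant produced.
    variant_a = {"q1": "returns within 30 days", "q2": "ships in 5 days"}.get
    variant_b = {"q1": "no idea", "q2": "ships soon"}.get
    scores = batch_evaluate({"A": variant_a, "B": variant_b}, data)
    print(scores, "best:", best_variant(scores))
```

Holding the dataset fixed across variants is what makes the mean scores comparable. Swapping in a stronger metric changes only `token_f1`.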

In addition, this level introduces a more systematic approach to flow deployment. Organizations start implementing automated deployment pipelines that incorporate practices such as continuous integration/continuous deployment (CI/CD). This automation improves the efficiency and reliability of deploying LLM applications and marks a move toward more mature operational practices.
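A common way to connect evaluation to CI/CD is a quality gate. In this setup, a pipeline step reads the evaluation results and fails the job if any metric falls below a minimum, which blocks deployment. The sketch below is one such script. The threshold values, the assumed 1-to-5 metric scale, and the flat JSON results file (for example `{"groundedness": 4.3, ...}`) are all hypothetical choices; they do not reflect prompt flow's actual output format.

```python
import json
import sys

# Hypothetical minimum scores (assumed 1-to-5 scale) a candidate flow must reach.
THRESHOLDS = {"groundedness": 4.0, "relevance": 4.0, "similarity": 3.5}

def gate_failures(metrics: dict[str, float],
                  thresholds: dict[str, float] = THRESHOLDS) -> list[str]:
    """Describe every threshold that was missed, including metrics that are absent."""
    failures = []
    for name, minimum in thresholds.items():
        value = metrics.get(name)
        if value is None:
            failures.append(f"{name}: missing from evaluation results")
        elif value < minimum:
            failures.append(f"{name}: {value:.2f} < {minimum:.2f}")
    return failures

def main(path: str) -> int:
    with open(path, encoding="utf-8") as f:
        metrics = json.load(f)
    failures = gate_failures(metrics)
    for failure in failures:
        print("FAILED", failure, file=sys.stderr)
    # A nonzero exit code fails the CI job and so blocks the deployment stage.
    return 1 if failures else 0

if __name__ == "__main__" and len(sys.argv) == 2:
    sys.exit(main(sys.argv[1]))
```

A missing metric counts as a failure on purpose. Otherwise, an evaluation step that silently stopped emitting a metric would let a regression through.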

Monitoring and maintenance also evolve at this level. Developers actively track a range of metrics to ensure robust and responsible operations. These include quality metrics such as groundedness and similarity, operational metrics such as latency, error rate, and token consumption, and content safety measures.
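The operational metrics named above can be computed directly from per-request logs. The sketch below summarizes latency percentiles, error rate, and token consumption. The `RequestLog` record shape is a hypothetical assumption, and so is the rule that a status of 400 or above counts as an error. Percentiles use the nearest-rank method. Quality and content-safety metrics are not covered here.

```python
import math
from dataclasses import dataclass

@dataclass
class RequestLog:
    # Hypothetical per-request record; real logs will have their own schema.
    latency_ms: float
    status: int  # HTTP status code returned to the caller
    prompt_tokens: int
    completion_tokens: int

def percentile(values: list[float], p: float) -> float:
    """Nearest-rank percentile for p in (0, 100]."""
    ordered = sorted(values)
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]

def summarize(logs: list[RequestLog]) -> dict[str, float]:
    latencies = [log.latency_ms for log in logs]
    errors = sum(1 for log in logs if log.status >= 400)
    return {
        "p50_latency_ms": percentile(latencies, 50),
        "p95_latency_ms": percentile(latencies, 95),
        "error_rate": errors / len(logs),
        "total_tokens": sum(log.prompt_tokens + log.completion_tokens for log in logs),
    }

if __name__ == "__main__":
    logs = [RequestLog(100.0 * i, 200, 10, 20) for i in range(1, 11)]
    logs[3].status = 429  # e.g. a rate-limited request
    print(summarize(logs))
```

Tracking p95 alongside p50 shows tail latency that an average would hide. Summing prompt and completion tokens gives the figure that usage-based pricing is generally calculated from.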

At this stage, Contoso's developers concentrate on creating prompt variations in Azure AI prompt flow and refining them for greater accuracy and relevance. They use advanced metrics such as Question and Answering (QnA) Groundedness and QnA Relevance during batch runs to continually assess the quality of their LLM flows. After assessing these flows, they use the prompt flow SDK and CLI to package and automate deployment, integrating with their CI/CD processes. Contoso also expands its use of Azure AI Search, applying more sophisticated RAG techniques to build more complex and efficient indexes in its vector databases. The result is LLM applications that are not only faster to respond and better informed by context, but also more cost-effective, reducing operational expenses while improving performance.

Level Four (Optimized): Operational excellence and continuous improvement

At the pinnacle of the LLMOps maturity model, organizations reach a stage where operational excellence and continuous improvement are paramount. This phase features highly sophisticated deployment processes, supported by relentless monitoring and iterative enhancement. Advanced monitoring solutions offer deep insight into LLM applications and drive continuous improvement of both models and processes.

At this advanced stage, Contoso's developers engage in complex prompt engineering and model optimization. Using Azure AI's comprehensive toolkit, they build reliable and highly efficient LLM applications. They fine-tune models such as GPT-4, Llama 2, and Falcon for specific requirements and set up intricate RAG patterns that improve query understanding and retrieval, making LLM outputs more logical and relevant. They continually run large-scale evaluations with sophisticated metrics for quality, cost, and latency, so that LLM applications are assessed thoroughly. Developers can even use an LLM-powered simulator to generate synthetic data, such as conversational datasets, to evaluate and improve accuracy and groundedness. Because these evaluations are conducted at multiple stages, they embed a culture of continuous improvement.

For monitoring and maintenance, Contoso adopts comprehensive strategies that incorporate predictive analytics, detailed query and response logging, and tracing. These strategies are aimed at improving prompts, RAG implementations, and fine-tuning. Contoso applies A/B testing to updates and uses automated alerts to identify potential drift, bias, and quality issues, keeping its LLM applications aligned with current industry standards and ethical norms.
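When an update is A/B tested, the main question is whether the observed difference between versions is larger than chance would explain. The sketch below answers that with a standard two-sided, two-proportion z-test using pooled variance, implemented with the standard library only. The choice of success signal (for example, the share of responses rated helpful) and the 0.05 significance level are assumptions, not details from Contoso's setup. Drift and bias alerting are not covered.

```python
import math

def normal_cdf(z: float) -> float:
    # Standard normal CDF expressed through the error function.
    return 0.5 * (1 + math.erf(z / math.sqrt(2)))

def two_proportion_z_test(successes_a: int, total_a: int,
                          successes_b: int, total_b: int) -> tuple[float, float]:
    """Two-sided z-test for a difference between two success rates (pooled variance)."""
    p_a = successes_a / total_a
    p_b = successes_b / total_b
    pooled = (successes_a + successes_b) / (total_a + total_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    if se == 0:
        return 0.0, 1.0  # both arms all-success or all-failure: no evidence of a difference
    z = (p_b - p_a) / se
    p_value = 2 * (1 - normal_cdf(abs(z)))
    return z, p_value

def should_promote(successes_a: int, total_a: int, successes_b: int, total_b: int,
                   alpha: float = 0.05) -> bool:
    """Promote variant B only if it is better than A and the difference is significant."""
    z, p_value = two_proportion_z_test(successes_a, total_a, successes_b, total_b)
    return z > 0 and p_value < alpha

if __name__ == "__main__":
    # Hypothetical counts: A (current) rated helpful 400/1000, B (candidate) 460/1000.
    print(two_proportion_z_test(400, 1000, 460, 1000))
    print("promote B:", should_promote(400, 1000, 460, 1000))
```

Requiring both a positive direction and significance prevents an update from being promoted because of random noise in a small sample.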

The deployment process at this stage is streamlined and efficient. Contoso manages the entire lifecycle of its LLMOps applications, including versioning and auto-approval processes based on predefined criteria. It consistently applies advanced CI/CD practices with robust rollback capabilities, ensuring seamless updates to its LLM applications.
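Auto-approval against predefined criteria, combined with rollback, can be modeled as a small version history. A candidate is promoted only if every metric meets its minimum, and rolling back returns to the previously approved version. The sketch below is a hypothetical in-memory model of that logic; `FlowVersion`, `Deployment`, and the criteria are illustrative names. It does not represent an Azure deployment API, and a real pipeline would persist this state and act on actual endpoints.

```python
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class FlowVersion:
    version: str
    metrics: dict[str, float]

@dataclass
class Deployment:
    """Hypothetical in-memory record of approved versions; the last one is live."""
    criteria: dict[str, float]  # metric name -> minimum score
    history: list[FlowVersion] = field(default_factory=list)

    @property
    def live(self) -> Optional[FlowVersion]:
        return self.history[-1] if self.history else None

    def meets_criteria(self, candidate: FlowVersion) -> bool:
        # A metric missing from the candidate counts as failing its criterion.
        return all(candidate.metrics.get(name, float("-inf")) >= minimum
                   for name, minimum in self.criteria.items())

    def submit(self, candidate: FlowVersion) -> bool:
        """Auto-approve and promote the candidate only if every criterion is met."""
        if not self.meets_criteria(candidate):
            return False
        self.history.append(candidate)
        return True

    def rollback(self) -> Optional[FlowVersion]:
        """Retire the live version and return to the previous approved one."""
        if len(self.history) > 1:
            self.history.pop()
        return self.live

if __name__ == "__main__":
    d = Deployment(criteria={"groundedness": 4.0})
    print(d.submit(FlowVersion("v1", {"groundedness": 4.2})))  # approved
    print(d.submit(FlowVersion("v2", {"groundedness": 3.1})))  # rejected
    print(d.submit(FlowVersion("v3", {"groundedness": 4.6})))  # approved
    print(d.rollback().version)  # back to v1
```

Only approved versions enter the history, so every rollback target has already passed the same criteria.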

At this phase, Contoso stands as a model of LLMOps maturity, showing not only operational excellence but also a steadfast commitment to continuous innovation and improvement in the LLM domain.

Identify where you are in the journey

Each level of the LLMOps maturity model represents a strategic step toward production-level LLM applications. The progression from basic understanding to sophisticated integration and optimization reflects the dynamic nature of the field. The model acknowledges the need for continuous learning and adaptation, so that organizations can harness the transformative power of LLMs effectively and sustainably.

The LLMOps maturity model offers organizations a structured pathway through the complexities of implementing and scaling LLM applications. By understanding the distinction between application sophistication and operational maturity, organizations can make better-informed decisions about how to progress through the levels of the model. Azure AI Studio, which brings together prompt flow, the model catalog, and the Azure AI Search integration, fits into this framework and underscores the importance of both cutting-edge technology and robust operational strategies in achieving success with LLMs.

Learn more

Discover Azure AI Studio

Build, evaluate, and deploy your AI solutions all within one space

¹ Contoso is a fictional but representative global organization building generative AI applications.