Sponsored Article

The increasing use of AI in industry is accelerating more advanced approaches, including machine learning (ML), deep learning and even large language models. These advancements offer a glimpse of the massive amounts of data expected to be used at the edge. Although the current focus has been on how to accelerate neural network operations, Micron is focused on making memory and storage that is optimised for AI at the edge.

What is synthetic data?

IDC predicts that, by 2025, 175 zettabytes (1 zettabyte = 1 billion terabytes) of new data will be generated worldwide. These quantities are hard to fathom, yet advancements in AI will continue to push the envelope for data-starved systems.

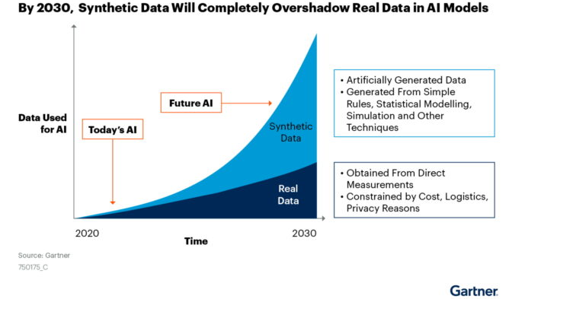

In fact, ever-larger AI models have been held back by the amount of real physical data that is obtained from direct measurements or physical images. It is easy to identify an orange if you have a sample of 10,000 readily available images of oranges. But if you need to compare specific scenes, for example a random crowd vs. an organised march, or a baked cookie with anomalies vs. a perfect cookie, accurate results can be difficult to confirm unless you have all the variant samples needed to build your baseline model.

The industry is increasingly using synthetic data. Synthetic data is generated artificially from simulation models that, for example, produce statistically realistic variations of the same image. This approach is especially relevant in industrial vision systems, where baselines for physical images are unique and where not enough “widgets” can be found on the web to provide a valid model representation.
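To make the idea concrete, here is a toy Python sketch of generating labelled synthetic samples from a single reference image. It is not Micron's method or any particular vendor's pipeline: the 16x16 "widget" image, the `make_base_widget` and `synthesize` helpers, and the chosen perturbations (a random lighting gain, Gaussian pixel noise and an injected dark 2x2 "crack" as the defect) are all hypothetical stand-ins for a real simulation model, which in practice might render full 3D scenes instead.

```python
import random
from typing import List, Tuple

# Grayscale image as rows of pixel intensities in [0.0, 1.0].
Image = List[List[float]]


def make_base_widget(size: int = 16) -> Image:
    """Hypothetical 'perfect' widget: a bright square on a dark background."""
    img = [[0.1] * size for _ in range(size)]
    for r in range(size // 4, 3 * size // 4):
        for c in range(size // 4, 3 * size // 4):
            img[r][c] = 0.8
    return img


def synthesize(base: Image, n: int, defect_rate: float,
               seed: int = 0) -> List[Tuple[Image, int]]:
    """Generate n labelled variants of `base` (label 1 = defect, 0 = good)."""
    rng = random.Random(seed)  # seeded so the dataset is reproducible
    size = len(base)
    lo, hi = size // 4, 3 * size // 4  # bounds of the widget body
    samples: List[Tuple[Image, int]] = []
    for _ in range(n):
        gain = rng.uniform(0.9, 1.1)  # simulated lighting variation
        # Apply gain plus Gaussian noise, clamped to the valid pixel range.
        img = [[min(1.0, max(0.0, p * gain + rng.gauss(0.0, 0.02)))
                for p in row] for row in base]
        label = 0
        if rng.random() < defect_rate:
            # Inject a dark 2x2 "crack" fully inside the widget body.
            r = rng.randrange(lo, hi - 1)
            c = rng.randrange(lo, hi - 1)
            for dr in (0, 1):
                for dc in (0, 1):
                    img[r + dr][c + dc] = 0.0
            label = 1
        samples.append((img, label))
    return samples


if __name__ == "__main__":
    data = synthesize(make_base_widget(), n=1000, defect_rate=0.3)
    defects = sum(label for _, label in data)
    print(f"{len(data)} samples, {defects} with synthetic defects")
```

Because defects are injected on demand, `defect_rate` controls how many rare anomaly samples the dataset contains, which is exactly the kind of sample that is hard to collect from physical images.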

Of course, the challenge is where these new forms of data will reside. Any new datasets that are created must be stored either in the cloud or, for more unique representations, closer to where the data needs to be analysed: at the edge.

Model complexity and the memory wall

Finding the optimal balance between algorithmic efficiency and AI model performance is a complex task, as it depends on factors such as data characteristics and volume, resource availability, power consumption, workload requirements and more.

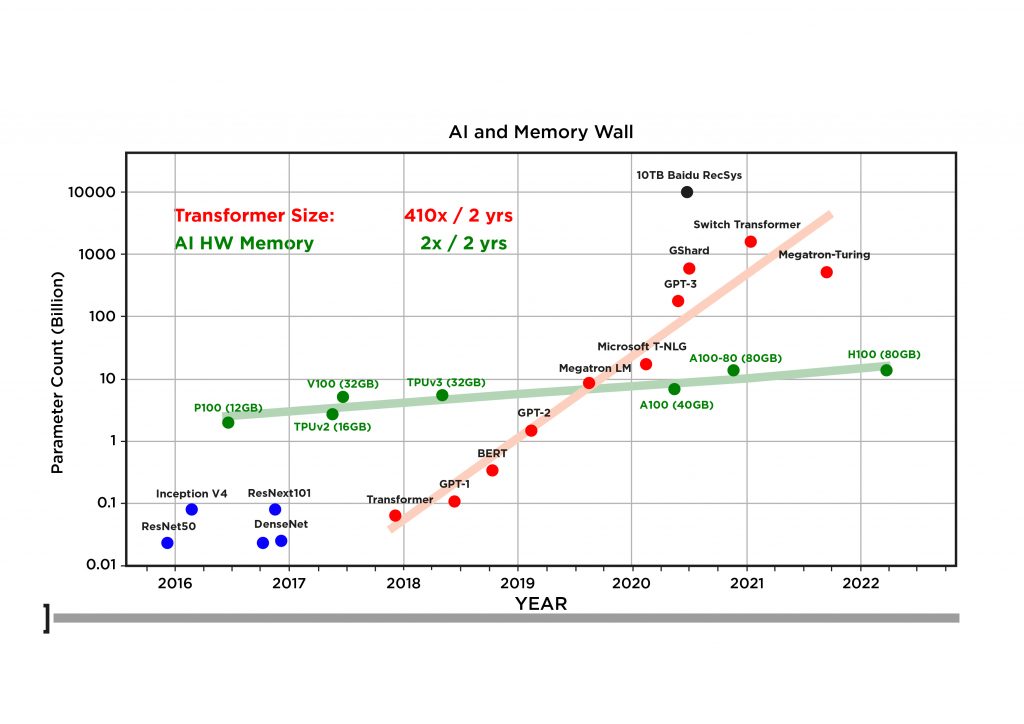

AI models are complex algorithms that can be characterised by their number of parameters: in general, the greater the number of parameters, the more accurate the results. The industry started with a common baseline model such as ResNet50, as it was easy to implement and became the baseline for network performance. But that model was focused on limited datasets and limited applications. As the industry has moved to transformer models, parameter counts have grown much faster than memory bandwidth. The result is an obvious strain: regardless of how much data the model can handle, we are limited by the memory and storage bandwidth available for the model and its parameters.
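A back-of-the-envelope calculation shows why parameter growth runs into memory bandwidth. The sketch below assumes the model's weights do not fit in on-chip memory, so every weight is read from DRAM once per forward pass; it ignores activations, caches and batching, so it estimates weight traffic only. The ResNet50 figure (about 25.6 million parameters) is the commonly cited size; the 7-billion-parameter model, INT8 (1-byte) weights and the frame and token rates are illustrative assumptions, and `weight_bandwidth_gbps` is a hypothetical helper.

```python
def weight_bandwidth_gbps(params: float, bytes_per_param: float,
                          passes_per_s: float) -> float:
    """Approximate DRAM bandwidth (GB/s) needed to stream every weight once
    per forward pass. Assumes no on-chip weight reuse across passes."""
    return params * bytes_per_param * passes_per_s / 1e9


if __name__ == "__main__":
    # ~25.6M-parameter ResNet50, INT8 weights, 30 frames per second
    print(f"ResNet50 @ 30 fps: {weight_bandwidth_gbps(25.6e6, 1, 30):.3f} GB/s")
    # Illustrative 7B-parameter language model, INT8 weights, 20 tokens/s
    print(f"7B LLM @ 20 tok/s: {weight_bandwidth_gbps(7e9, 1, 20):.1f} GB/s")
```

Roughly 0.77 GB/s versus 140 GB/s: under these assumptions the larger model needs about 180 times more bandwidth, which is the memory wall in miniature.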

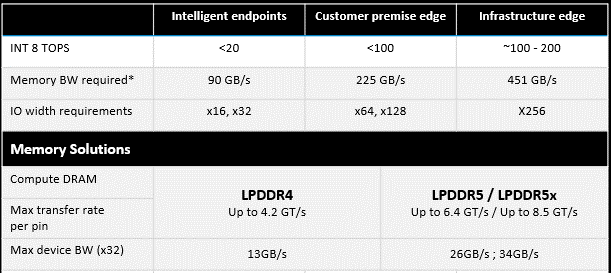

For a quick comparison, we can look at an embedded AI system's performance in tera operations per second (TOPS). Here we see that AI edge devices below 100 TOPS may need around 225 GB/s of memory bandwidth, and those above 100 TOPS may require 451 GB/s (Table 1).
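One standard way to reason about whether TOPS or GB/s is the limiting factor is the roofline model, used here as an illustration rather than as the method behind Table 1. Attainable throughput is the lower of the compute peak and the memory bandwidth multiplied by the workload's arithmetic intensity (operations per byte moved). The 100 TOPS / 225 GB/s pairing below simply reuses the Table 1 figures as inputs; the intensity values and the helper names are hypothetical.

```python
def ridge_point(peak_tops: float, bandwidth_gbps: float) -> float:
    """Arithmetic intensity (ops/byte) above which a workload becomes compute-bound."""
    return (peak_tops * 1e12) / (bandwidth_gbps * 1e9)


def attainable_tops(peak_tops: float, bandwidth_gbps: float,
                    ops_per_byte: float) -> float:
    """Roofline model: throughput is capped by compute or by memory traffic."""
    memory_bound_tops = bandwidth_gbps * 1e9 * ops_per_byte / 1e12
    return min(peak_tops, memory_bound_tops)


if __name__ == "__main__":
    peak, bw = 100.0, 225.0  # TOPS and GB/s, as in Table 1
    print(f"ridge point: {ridge_point(peak, bw):.0f} ops/byte")
    for intensity in (50, 200, 1000):  # hypothetical workloads
        print(intensity, "ops/byte ->", attainable_tops(peak, bw, intensity), "TOPS")
```

Below the ridge point of roughly 444 ops/byte, adding TOPS does not help; only more memory bandwidth raises throughput.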

So, one way to optimise the system is to consider higher-performing memory that also offers the lowest power consumption.

Memory is keeping pace with AI-accelerated solutions by evolving with new standards. For example, LPDDR4/4X (low-power DDR4 DRAM) and LPDDR5/5X (low-power DDR5 DRAM) solutions offer significant performance improvements over prior technologies.

LPDDR4 can run at up to 4.2 GT/s per pin (gigatransfers per second per pin) and support up to a x64 bus width. LPDDR5 offers roughly a 50% increase in per-pin data rate over LPDDR4, and LPDDR5X roughly doubles it, to as much as 8.5 GT/s per pin. In addition, LPDDR5 offers 20% better power efficiency than LPDDR4X (source: Micron). These are significant advancements that can help meet the needs of widening AI edge use cases.
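Per-pin data rates translate into peak bandwidth by multiplying by the number of data pins and dividing by 8 bits per byte. The sketch below applies that arithmetic to the figures above for a x64 interface; these are theoretical peaks, and sustained bandwidth in a real system will be lower. The `interfaces_needed` helper, which asks how many such x64 interfaces would reach the Table 1 bandwidth targets, is an illustrative assumption that ignores efficiency losses.

```python
import math


def peak_bandwidth_gbps(gt_per_s_per_pin: float, bus_width_bits: int) -> float:
    """Theoretical peak (GB/s) = transfers/s per pin x data pins / 8 bits per byte."""
    return gt_per_s_per_pin * bus_width_bits / 8


def interfaces_needed(target_gbps: float, per_interface_gbps: float) -> int:
    """Smallest number of identical interfaces whose combined peak meets the target."""
    return math.ceil(target_gbps / per_interface_gbps)


if __name__ == "__main__":
    lpddr4 = peak_bandwidth_gbps(4.2, 64)    # about 33.6 GB/s
    lpddr5x = peak_bandwidth_gbps(8.5, 64)   # 68.0 GB/s
    for target in (225, 451):                # Table 1 targets, GB/s
        print(f"{target} GB/s: {interfaces_needed(target, lpddr4)} x LPDDR4 "
              f"or {interfaces_needed(target, lpddr5x)} x LPDDR5X (x64 each)")
```

Doubling the per-pin rate roughly halves the number of interfaces, and with it the pin count, needed for a given bandwidth target.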

What are the storage considerations?

It is not enough to assume that compute resources are limited only by the raw TOPS of the processing unit or by the bandwidth of the memory architecture. As ML models become more sophisticated, the number of model parameters is expanding exponentially as well.

As machine learning models and datasets expand to achieve better model efficiency, higher-performing embedded storage will be needed as well. Typical managed NAND solutions such as e.MMC 5.1, at 3.2 Gb/s, are ideal not only for code bring-up but also for remote data storage. In addition, solutions such as UFS 3.1 can run about seven times faster, at up to 23.2 Gb/s, to allow for more complex models.
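To put these interface rates in perspective, the sketch below estimates how long it would take to read a model from storage at the raw link rate. The 2 GB model size and the `load_time_s` helper are hypothetical, and real transfers will be slower because of protocol and file-system overhead; only the relative difference between the two interfaces carries over.

```python
def load_time_s(model_bytes: float, link_gbps: float) -> float:
    """Seconds to read `model_bytes` at a raw interface rate in gigabits/s."""
    return model_bytes * 8 / (link_gbps * 1e9)


if __name__ == "__main__":
    model_bytes = 2e9  # hypothetical 2 GB model file
    for name, gbps in (("e.MMC 5.1", 3.2), ("UFS 3.1", 23.2)):
        print(f"{name}: {load_time_s(model_bytes, gbps):.2f} s")
```

That is 5 s versus roughly 0.7 s, the same ratio of about 7x as the interface rates themselves.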

New architectures are also pushing capabilities to the edge that were typically relegated to cloud or IT infrastructure. For example, edge solutions implement a secure layer that provides an air gap between restricted operational data and the IT/cloud domain. AI at the edge also supports intelligent automation such as categorising, tagging and retrieving stored data.

Storage advancements such as NVMe SSDs built on 3D TLC NAND offer high performance for a range of edge workloads. For example, Micron's 7450 NVMe SSD uses 176-layer NAND technology that is well suited to most edge and data centre workloads. With 2ms quality of service (QoS) latency, it is well suited to the performance requirements of SQL server platforms. It also offers FIPS 140-3 Level 2 and TAA compliance for US federal government procurement requirements.

The growing ecosystem of AI edge processors

Allied Market Research estimates the AI edge processor market will grow to US$9.6 billion by 2030.⁴ Interestingly, a new cohort of AI processor start-ups is developing ASICs and proprietary ASSPs geared for more space- and power-constrained edge applications. These new chipsets also need to balance the trade-off between performance and power when it comes to memory and storage solutions.

In addition, AI chipset vendors have developed Enterprise and Data Center Standard Form Factor (EDSFF) accelerator cards that can be installed in a 1U solution and co-located with storage servers, adaptable to accelerate a range of workloads, from AI/ML inference to video processing, using the same module.

How do you find the right memory and storage partner?

AI is no longer hype but a reality that is being implemented across all verticals. In one study, 89% of industry already has, or will have within the next two years, a strategy around AI at the edge.⁵

But implementing AI is not a trivial task, and the right technologies and components will make all the difference. Micron's portfolio of the latest memory and storage technologies leads the way for industrial customers with our IQ value proposition. If you are designing an AI edge system, let Micron help get your product to market faster than ever. Contact your local Micron representative or distributor of Micron products (www.micron.com).

Comment on this article below or via X: @IoTNow_