A web page must be in Google’s index before it can appear in organic search results. A page can rank without Google crawling it but never without being indexed.

Thus monitoring a site’s indexation is essential.

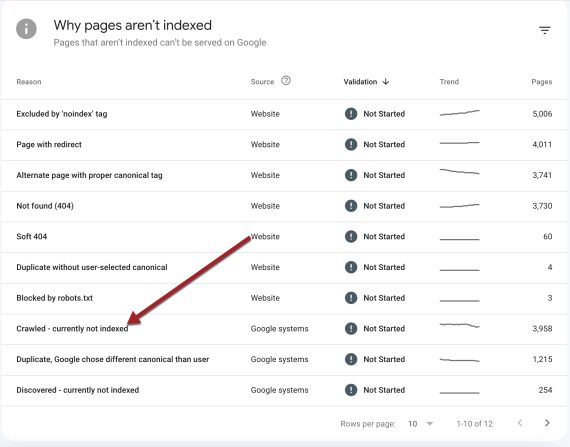

Google’s Search Console helpfully provides a site’s index status at Indexing > Pages. The section contains a “Why pages aren’t indexed” list that includes an entry for “Crawled – currently not indexed.”

It can be confusing. Why would Google crawl a page but not index it? Is the page inferior in some way?

No. Failure to index is not necessarily a sign of poor quality.

Indexing 101

Google doesn’t always immediately apply algorithmic scores to pages it crawls, especially with newer sites. It often gathers data first, then indexes.

Thus it’s not a quality issue as much as a timing issue.

A low-ranking page is a better indicator of poor quality, since Google presumably has enough data on that page for its algorithm to score it.

Certainly Google can remove a page from its index. That’s the other reason to monitor Search Console’s “Crawled – currently not indexed” list. A site with no new pages but a rising number in that list has a problem.

For example, widespread deindexing occurred shortly after a core update in 2020. Google’s Gary Illyes confirmed that the pages were removed because of “low-quality and spammy content.”

In my experience, failure to index is common for sites with a half-million or more URLs, for two reasons.

- Too big. The site has too many pages for Google to index. Google doesn’t have indexation maximums, but it does have crawl limitations. Thus a monster site could have both superior quality and spotty indexing.

- Poor visibility. The site has many pages multiple clicks away from the home page or with few internal links. I’ve seen sites where half the pages have just one or two internal links. This signals to Google that those pages are unimportant.
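To check a site for the visibility problem described above, one option is to compute each page’s click depth from the home page and its count of inbound internal links. The following is a minimal sketch, assuming you already have a crawl export of internal links as a dictionary of source URL to target URLs. The function names and the `max_depth` and `min_inbound` thresholds are hypothetical choices for illustration, not values Google publishes.

```python
from collections import deque


def link_stats(links, home):
    """Return (click depth per page, inbound internal link count per page).

    `links` maps each URL to the list of internal URLs it links to.
    """
    # Breadth-first search from the home page gives the minimum click depth.
    depth = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depth:
                depth[target] = depth[page] + 1
                queue.append(target)

    # Count distinct linking pages per target, ignoring self-links.
    inbound = {}
    for source, targets in links.items():
        for target in set(targets):
            if target != source:
                inbound[target] = inbound.get(target, 0) + 1
    return depth, inbound


def buried_pages(links, home, max_depth=3, min_inbound=3):
    """List (url, depth, inbound) for pages that look buried.

    The thresholds are illustrative assumptions; tune them per site.
    A depth of None means the page is unreachable from the home page.
    """
    depth, inbound = link_stats(links, home)
    pages = set(links) | {t for targets in links.values() for t in targets}
    flagged = []
    for page in sorted(pages):
        if page == home:
            continue
        d = depth.get(page)
        n = inbound.get(page, 0)
        if d is None or d > max_depth or n < min_inbound:
            flagged.append((page, d, n))
    return flagged


if __name__ == "__main__":
    example = {
        "/": ["/a", "/b"],
        "/a": ["/b", "/c"],
        "/b": ["/a"],
        "/c": ["/d"],
        "/d": [],
    }
    for row in buried_pages(example, "/", max_depth=2, min_inbound=2):
        print(row)
```

Pages flagged this way are candidates for more internal links from prominent pages; the script only surfaces them and says nothing about how Google actually weighs them.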

But deindexing needs immediate attention if it’s getting worse or includes 25% or more of a site’s pages. The former typically results from core or helpful content algorithm updates. The latter is likely owing to poor site structure that buries pages, prompting Google to devalue them.
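The two warning signs above can be checked with simple arithmetic on counts copied from Search Console. This is a rough sketch, assuming you record the “Crawled – currently not indexed” count at regular intervals and know the site’s total page count. The function name and input format are hypothetical, and “getting worse” is interpreted here as a count that rises at every recorded interval.

```python
def deindexing_alert(not_indexed_counts, total_pages, share_threshold=0.25):
    """Flag the two warning signs: a rising count or a share at or above 25%.

    `not_indexed_counts` is a chronological list of "Crawled - currently
    not indexed" counts; `total_pages` is the site's page count, assumed
    stable (no new pages) over the period.
    """
    latest = not_indexed_counts[-1]
    share = latest / total_pages
    # "Rising" here means every reading is higher than the one before it.
    rising = len(not_indexed_counts) >= 2 and all(
        later > earlier
        for earlier, later in zip(not_indexed_counts, not_indexed_counts[1:])
    )
    return {
        "share": share,
        "rising": rising,
        "urgent": rising or share >= share_threshold,
    }


if __name__ == "__main__":
    print(deindexing_alert([100, 120, 150], total_pages=1000))
```

A stricter or looser definition of “rising” (for example, comparing only the first and last readings) may suit sites whose counts fluctuate week to week.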