Frame interpolation is the process of synthesizing in-between images from a given set of images. The technique is often used for temporal up-sampling to increase the refresh rate of videos or to create slow-motion effects. Nowadays, with digital cameras and smartphones, we often take several photos within a few seconds to capture the best picture. Interpolating between these “near-duplicate” photos can lead to engaging videos that reveal scene motion, often delivering an even more pleasing sense of the moment than the original photos.

Frame interpolation between consecutive video frames, which often have small motion, has been studied extensively. Unlike videos, however, the temporal spacing between near-duplicate photos can be several seconds, with commensurately large in-between motion, which is a major failing point of existing frame interpolation methods. Recent methods attempt to handle large motion by training on datasets with extreme motion, albeit with limited effectiveness on smaller motions.

In “FILM: Frame Interpolation for Large Motion”, published at ECCV 2022, we present a method to create high-quality slow-motion videos from near-duplicate photos. FILM is a new neural network architecture that achieves state-of-the-art results in large motion, while also handling smaller motions well.

Figure: FILM interpolating between two near-duplicate photos to create a slow-motion video.

FILM Model Overview

The FILM model takes two images as input and outputs a middle image. At inference time, we recursively invoke the model to output in-between images. FILM has three components: (1) a feature extractor that summarizes each input image with deep multi-scale (pyramid) features; (2) a bi-directional motion estimator that computes pixel-wise motion (i.e., flows) at each pyramid level; and (3) a fusion module that outputs the final interpolated image. We train FILM on regular video frame triplets, with the middle frame serving as the ground truth for supervision.
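
The recursive inference step can be illustrated with a short Python sketch. `midpoint_model` is a hypothetical stand-in for a trained two-frame model. The `cross_fade` placeholder used below only averages pixels and does not estimate motion, so it demonstrates the recursion, not FILM itself. A recursion depth of `k` yields `2**k - 1` in-between frames.

```python
import numpy as np


def interpolate_recursively(frame_a, frame_b, midpoint_model, depth):
    """Return [frame_a, ..., frame_b] with 2**depth - 1 in-between frames.

    `midpoint_model(a, b)` is a hypothetical stand-in for a trained
    two-frame model that predicts the frame halfway between a and b.
    """
    if depth == 0:
        return [frame_a, frame_b]
    middle = midpoint_model(frame_a, frame_b)
    left = interpolate_recursively(frame_a, middle, midpoint_model, depth - 1)
    right = interpolate_recursively(middle, frame_b, midpoint_model, depth - 1)
    # `left` ends with `middle` and `right` starts with it: drop the duplicate.
    return left + right[1:]


def cross_fade(a, b):
    # Placeholder "model": a plain average, NOT a motion-aware interpolator.
    return 0.5 * (a + b)


frames = interpolate_recursively(np.zeros((4, 4, 3)), np.ones((4, 4, 3)), cross_fade, depth=3)
print(len(frames))  # 9
print([float(f.mean()) for f in frames])
# [0.0, 0.125, 0.25, 0.375, 0.5, 0.625, 0.75, 0.875, 1.0]
```

Because each call only ever sees two frames, the same two-input network can multiply the frame count by any power of two.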

Figure: A standard feature pyramid extraction on two input images. Features are processed at each level by a series of convolutions, then downsampled to half the spatial resolution and passed as input to the deeper level.

Scale-Agnostic Feature Extraction

Large motion is typically handled with hierarchical motion estimation using multi-resolution feature pyramids (shown above). However, this approach struggles with small, fast-moving objects because they can disappear at the deepest pyramid levels. In addition, far fewer pixels are available to derive supervision at the deepest level.

To overcome these limitations, we adopt a feature extractor that shares weights across scales to create a “scale-agnostic” feature pyramid. This feature extractor (1) allows the use of a shared motion estimator across pyramid levels (next section) by equating large motion at shallow levels with small motion at deeper levels, and (2) creates a compact network with fewer weights.
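
A quick calculation shows why sharing weights across scales equates large and small motion. This sketch assumes each pyramid level halves the resolution, as in the standard pyramid described above. It uses the seven levels FILM uses in practice and a 100-pixel motion, the largest motion mentioned in the results.

```python
def displacement_per_level(motion_px, num_levels):
    """Magnitude of a displacement after each 2x downsampling step.

    Level 0 is the full-resolution image; level l is downsampled by 2**l.
    """
    return [motion_px / 2**level for level in range(num_levels)]


print(displacement_per_level(100.0, 7))
# [100.0, 50.0, 25.0, 12.5, 6.25, 3.125, 1.5625]
```

A 100-pixel motion at full resolution shrinks to about 1.6 pixels at the deepest level. That is the kind of small displacement a motion estimator trained on ordinary video already handles. The same halving explains the failure mode above: an object only a few pixels wide shrinks below a single pixel after a few levels.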

Specifically, given two input images, we first create an image pyramid by successively downsampling each image. Next, we use a shared U-Net convolutional encoder to extract a smaller feature pyramid from each image pyramid level (columns in the figure below). As the third and final step, we construct a scale-agnostic feature pyramid by horizontally concatenating features from different convolution layers that have the same spatial dimensions. Note that from the third level onwards, the feature stack is constructed with the same set of shared convolution weights (shown in the same color). This ensures that all features are similar, which allows us to continue to share weights in the subsequent motion estimator. The figure below depicts this process using four pyramid levels, but in practice we use seven.
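
The three steps can be sketched in NumPy. This is an illustrative toy, not FILM's implementation. `shared_encoder` is a hypothetical stand-in for the shared U-Net encoder: it uses 1x1 "convolutions" (matrix products) and average pooling, and the weight shapes are arbitrary. What the sketch does reproduce is the indexing. Pyramid level `l` concatenates encoder depth `d` applied to image level `l - d`, so every level from the third onwards is built from the same set of weights.

```python
import numpy as np


def downsample2x(x):
    """Average-pool an (H, W, C) array by 2 (H and W must be even)."""
    h, w, c = x.shape
    return x.reshape(h // 2, 2, w // 2, 2, c).mean(axis=(1, 3))


def image_pyramid(image, num_levels):
    """Step 1: successively downsample the input image."""
    levels = [image]
    for _ in range(num_levels - 1):
        levels.append(downsample2x(levels[-1]))
    return levels


def shared_encoder(image, weights):
    """Step 2 (hypothetical stand-in for the shared U-Net encoder).

    Returns one feature map per encoder depth d at 1/2**d of the input
    resolution. The same `weights` are reused for every pyramid level.
    """
    feats, x = [], image
    for depth, w in enumerate(weights):
        if depth > 0:
            x = downsample2x(x)
        x = np.tanh(x @ w)  # 1x1 "convolution" followed by a nonlinearity
        feats.append(x)
    return feats


def scale_agnostic_pyramid(image, num_levels, weights):
    """Step 3: concatenate encoder outputs that share a spatial size."""
    encoded = [shared_encoder(img, weights) for img in image_pyramid(image, num_levels)]
    pyramid = []
    for level in range(num_levels):
        # Encoder depth d applied to image level (level - d) has this level's resolution.
        maps = [encoded[level - d][d] for d in range(len(weights)) if level - d >= 0]
        pyramid.append(np.concatenate(maps, axis=-1))
    return pyramid


rng = np.random.default_rng(0)
weights = [rng.normal(size=(3, 8)), rng.normal(size=(8, 16)), rng.normal(size=(16, 32))]
pyr = scale_agnostic_pyramid(rng.random((64, 64, 3)), num_levels=4, weights=weights)
print([p.shape for p in pyr])
# [(64, 64, 8), (32, 32, 24), (16, 16, 56), (8, 8, 56)]
```

From the third level onwards, every level concatenates the same three encoder depths, so the channel count stops changing (56 here). That uniformity is what lets a single motion estimator consume every level.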

Bi-directional Motion Estimation

After feature extraction, FILM performs pyramid-based residual flow estimation to compute the flows from the yet-to-be-predicted middle image to the two inputs. The flow estimation is done once for each input, starting from the deepest level, using a stack of convolutions. We estimate the flow at a given level by adding a residual correction to the upsampled estimate from the next deeper level. This approach takes the following as its input: (1) the features from the first input at that level, and (2) the features of the second input after they are warped with the upsampled estimate. The same convolution weights are shared across all levels, except for the two finest levels.
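
The coarse-to-fine loop can be sketched as follows, under simplifying assumptions. `predict_residual` is a hypothetical callable standing in for the convolution stack. The sketch uses one callable at every level, whereas FILM uses separate weights for the two finest levels. Warping uses nearest-neighbour sampling for brevity. In FILM the routine would run once per input to obtain the two flows. The key detail is that upsampling a flow by 2x must also double its values, because flows are measured in pixels.

```python
import numpy as np


def upsample_flow(flow):
    """Nearest-neighbour 2x upsampling of an (H, W, 2) flow field.

    Flow vectors are in pixels, so their values are also multiplied by 2.
    """
    return 2.0 * flow.repeat(2, axis=0).repeat(2, axis=1)


def warp_nearest(features, flow):
    """Backward-warp (H, W, C) features: out[y, x] = features[y + fy, x + fx].

    Nearest-neighbour sampling with border clamping keeps the sketch short;
    a trainable model would use differentiable bilinear sampling instead.
    """
    h, w = features.shape[:2]
    ys, xs = np.mgrid[0:h, 0:w]
    src_x = np.clip(np.rint(xs + flow[..., 0]), 0, w - 1).astype(int)
    src_y = np.clip(np.rint(ys + flow[..., 1]), 0, h - 1).astype(int)
    return features[src_y, src_x]


def estimate_flow(feats_a, feats_b, predict_residual):
    """Coarse-to-fine residual flow estimation (level 0 = finest).

    feats_a / feats_b: lists of (H_l, W_l, C) feature maps per level.
    predict_residual(feat_a, warped_b) -> (H_l, W_l, 2) is hypothetical.
    """
    deepest = len(feats_a) - 1
    flow = np.zeros(feats_a[deepest].shape[:2] + (2,))
    for level in range(deepest, -1, -1):
        if level < deepest:
            flow = upsample_flow(flow)  # estimate from the next deeper level
        warped_b = warp_nearest(feats_b[level], flow)
        flow = flow + predict_residual(feats_a[level], warped_b)  # residual correction
    return flow


def one_pixel_right(feat_a, warped_b):
    # Toy residual predictor: always proposes a +1 pixel correction in x.
    h, w = feat_a.shape[:2]
    return np.stack([np.ones((h, w)), np.zeros((h, w))], axis=-1)


feats = [np.zeros((16, 16, 4)), np.zeros((8, 8, 4)), np.zeros((4, 4, 4))]
flow = estimate_flow(feats, feats, one_pixel_right)
print(flow.shape, flow[0, 0])  # (16, 16, 2) [7. 0.]
```

With a constant 1-pixel correction at each of three levels, the full-resolution flow is 4 + 2 + 1 = 7 pixels. This illustrates how corrections made at deeper levels are amplified into large displacements.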

Shared weights allow small motions at deeper levels to be interpreted in the same way as large motions at shallow levels, boosting the number of pixels available for large-motion supervision. Additionally, shared weights not only enable the training of powerful models that may reach a higher peak signal-to-noise ratio (PSNR), but are also needed for models to fit into GPU memory for practical applications.
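
For reference, PSNR compares a prediction with the ground truth through the mean squared error (MSE): PSNR = 10 · log10(MAX² / MSE), where MAX is the largest possible pixel value. Higher is better. Below is a minimal NumPy version, assuming images scaled to [0, 1].

```python
import numpy as np


def psnr(prediction, target, max_value=1.0):
    """Peak signal-to-noise ratio in dB for images scaled to [0, max_value]."""
    diff = np.asarray(prediction, dtype=np.float64) - np.asarray(target, dtype=np.float64)
    mse = np.mean(diff ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_value ** 2 / mse))


# A uniform error of 0.1 gives MSE = 0.01, i.e. 10 * log10(100) = 20 dB.
print(round(psnr(np.full((8, 8), 0.1), np.zeros((8, 8))), 2))  # 20.0
```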

Figure: The impact of weight sharing on image quality. Left: no sharing; right: sharing. For this ablation we used a smaller version of our model (called FILM-med in the paper), because the full model without weight sharing would diverge once the regularization benefit of weight sharing was lost.

Fusion and Frame Generation

Once the bi-directional flows are estimated, we warp the two feature pyramids into alignment. We obtain a concatenated feature pyramid by stacking, at each pyramid level, the two aligned feature maps, the bi-directional flows and the input images. Finally, a U-Net decoder synthesizes the interpolated output image from the aligned and stacked feature pyramid.
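
The alignment-and-stacking step at a single pyramid level can be sketched as below. The sketch makes several assumptions. Flows point from the middle frame to each input and are given in pixels. Warping is backward bilinear sampling with border clamping. `fuse_level` is a hypothetical helper that only builds the decoder input; the U-Net decoder itself is not shown.

```python
import numpy as np


def warp_bilinear(features, flow):
    """Backward-warp (H, W, C) features with an (H, W, 2) flow in pixels.

    out[y, x] samples features at (x + flow_x, y + flow_y) with bilinear
    interpolation; sample positions outside the image are clamped.
    """
    h, w = features.shape[:2]
    ys, xs = np.mgrid[0:h, 0:w].astype(np.float64)
    sx = np.clip(xs + flow[..., 0], 0, w - 1)
    sy = np.clip(ys + flow[..., 1], 0, h - 1)
    x0, y0 = np.floor(sx).astype(int), np.floor(sy).astype(int)
    x1, y1 = np.minimum(x0 + 1, w - 1), np.minimum(y0 + 1, h - 1)
    wx, wy = (sx - x0)[..., None], (sy - y0)[..., None]
    top = (1 - wx) * features[y0, x0] + wx * features[y0, x1]
    bottom = (1 - wx) * features[y1, x0] + wx * features[y1, x1]
    return (1 - wy) * top + wy * bottom


def fuse_level(feat_a, feat_b, flow_to_a, flow_to_b, image_a, image_b):
    """Stack aligned features, both flows and the input images (channel axis)."""
    aligned_a = warp_bilinear(feat_a, flow_to_a)  # pull input A onto the middle frame's grid
    aligned_b = warp_bilinear(feat_b, flow_to_b)  # pull input B onto the middle frame's grid
    return np.concatenate(
        [aligned_a, aligned_b, flow_to_a, flow_to_b, image_a, image_b], axis=-1)


rng = np.random.default_rng(0)
feat_a, feat_b = rng.random((8, 8, 4)), rng.random((8, 8, 4))
flow_a, flow_b = rng.normal(size=(8, 8, 2)), rng.normal(size=(8, 8, 2))
img_a, img_b = rng.random((8, 8, 3)), rng.random((8, 8, 3))
stacked = fuse_level(feat_a, feat_b, flow_a, flow_b, img_a, img_b)
print(stacked.shape)  # (8, 8, 18): 4 + 4 feature, 2 + 2 flow, 3 + 3 image channels
```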

Loss Functions

During training, we supervise FILM by combining three losses. First, we use the absolute L1 difference between the predicted and ground-truth frames to capture the motion between input images. However, this produces blurry images when used alone. Second, we use a perceptual loss to improve image fidelity. This minimizes the L1 difference between ImageNet pre-trained VGG-19 features extracted from the predicted and ground-truth frames. Third, we use a Style loss to minimize the L2 difference between the Gram matrices of the ImageNet pre-trained VGG-19 features of the two frames. The Style loss enables the network to produce sharp images and realistic inpaintings of large pre-occluded regions. Finally, the losses are combined with empirically selected weights such that each loss contributes equally to the total loss.
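
The three losses can be sketched in NumPy on precomputed feature maps. `pred_feats` and `target_feats` are assumed to hold activations from a few VGG-19 layers; extracting them is not shown. The Gram normalization and the mean-squared form of the L2 difference are common choices rather than details confirmed by this post. The loss weights are placeholders because the empirically selected values are not given here.

```python
import numpy as np


def l1_loss(pred, target):
    return float(np.mean(np.abs(pred - target)))


def gram_matrix(features):
    """(H, W, C) feature map -> (C, C) channel correlations, normalized by H*W."""
    h, w, c = features.shape
    flat = features.reshape(h * w, c)
    return flat.T @ flat / (h * w)


def perceptual_loss(pred_feats, target_feats):
    # L1 distance between feature maps, averaged over the chosen layers.
    return float(np.mean([l1_loss(p, t) for p, t in zip(pred_feats, target_feats)]))


def style_loss(pred_feats, target_feats):
    # Mean squared difference between Gram matrices, averaged over layers.
    return float(np.mean([np.mean((gram_matrix(p) - gram_matrix(t)) ** 2)
                          for p, t in zip(pred_feats, target_feats)]))


def total_loss(pred, target, pred_feats, target_feats, weights=(1.0, 1.0, 1.0)):
    # Placeholder weights: the post only says they balance the three terms.
    w_l1, w_vgg, w_style = weights
    return (w_l1 * l1_loss(pred, target)
            + w_vgg * perceptual_loss(pred_feats, target_feats)
            + w_style * style_loss(pred_feats, target_feats))


x = np.random.default_rng(1).random((4, 4, 5))
shuffled = x.reshape(16, 5)[::-1].reshape(4, 4, 5)  # same features, different positions
print(np.allclose(gram_matrix(x), gram_matrix(shuffled)))  # True
```

The Gram matrix sums feature correlations over all pixel positions, so it ignores where features occur and compares texture statistics instead. This helps explain why the Style loss encourages plausible detail in regions that cannot be copied from either input.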

As shown below, the combined loss greatly improves sharpness and image fidelity compared with training FILM with L1 loss and VGG losses. The combined loss maintains the sharpness of the tree leaves.

Figure: FILM’s combined loss functions. L1 loss (left), L1 plus VGG loss (middle), and Style loss (right), showing significant sharpness improvements (green box).

Image and Video Results

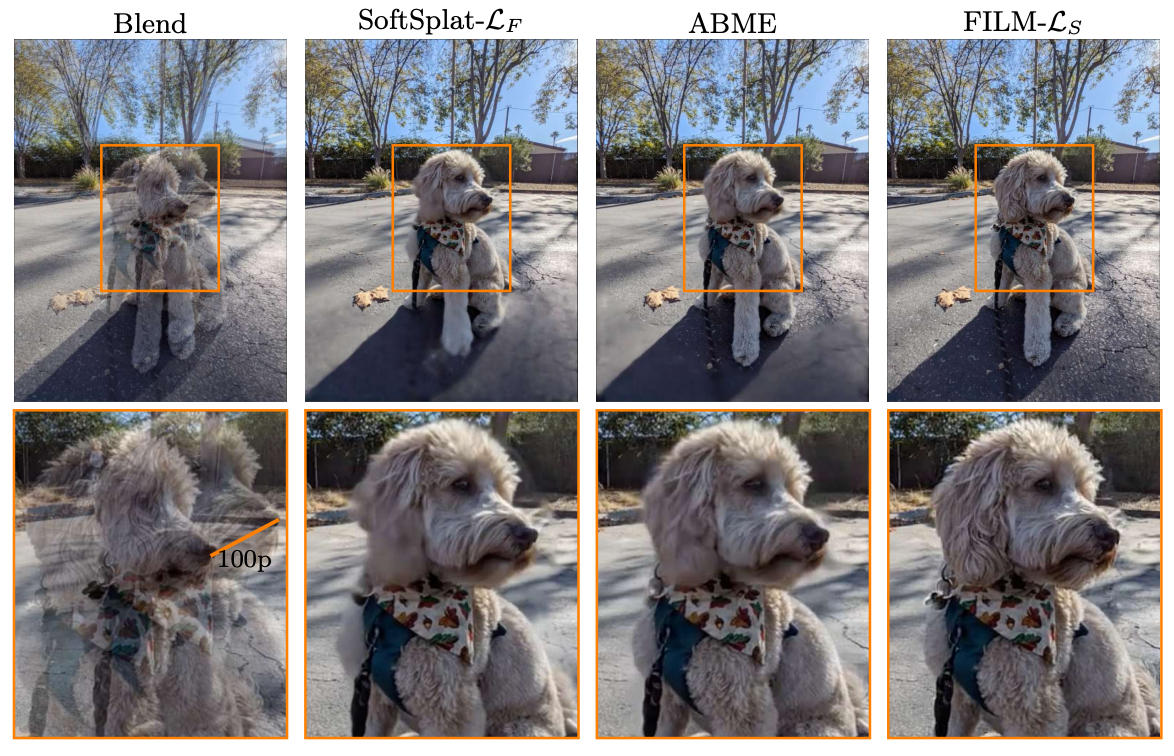

We evaluate FILM on an internal near-duplicate photos dataset that exhibits large scene motion. Additionally, we compare FILM to recent frame interpolation methods: SoftSplat and ABME. FILM performs favorably when interpolating across large motion. Even in the presence of motion as large as 100 pixels, FILM generates sharp images consistent with the inputs.

Figure: Frame interpolation with SoftSplat (left), ABME (middle) and FILM (right), showing favorable image quality and temporal consistency.

Conclusion

We introduce FILM, a large-motion frame interpolation neural network. At its core, FILM adopts a scale-agnostic feature pyramid that shares weights across scales. This allows us to build a “scale-agnostic” bi-directional motion estimator that learns from frames with normal motion and generalizes well to frames with large motion. To handle wide disocclusions caused by large scene motion, we supervise FILM by matching the Gram matrix of ImageNet pre-trained VGG-19 features, which results in realistic inpainting and crisp images. FILM performs favorably on large motion while also handling small and medium motions well, and it generates temporally smooth, high-quality videos.

Try It Out Yourself

You can try out FILM on your photos using the source code, which is now publicly available.

Acknowledgements

We would like to thank Eric Tabellion, Deqing Sun, Caroline Pantofaru and Brian Curless for their contributions. We thank Marc Comino Trinidad for his contributions on the scale-agnostic feature extractor; Orly Liba and Charles Herrmann for feedback on the text; Jamie Aspinall for the imagery in the paper; and Dominik Kaeser, Yael Pritch, Michael Nechyba, William T. Freeman, David Salesin, Catherine Wah, and Ira Kemelmacher-Shlizerman for support. Thanks to Tom Small for creating the animated diagram in this post.