Introduction

Anomaly detection is widely used across many industries and plays a significant role in the enterprise sector. This blog focuses on its application in manufacturing, where it yields considerable business benefits. We will explore a case study centered on monitoring the health of a simulated process subsystem. The blog will delve into dimensionality reduction methods like Principal Component Analysis (PCA) and examine the real-world impact of implementing such systems in a production environment. By analyzing a real-life example, we will demonstrate how this approach can be scaled up to extract useful insights from extensive sensor data, using Databricks as a tool.

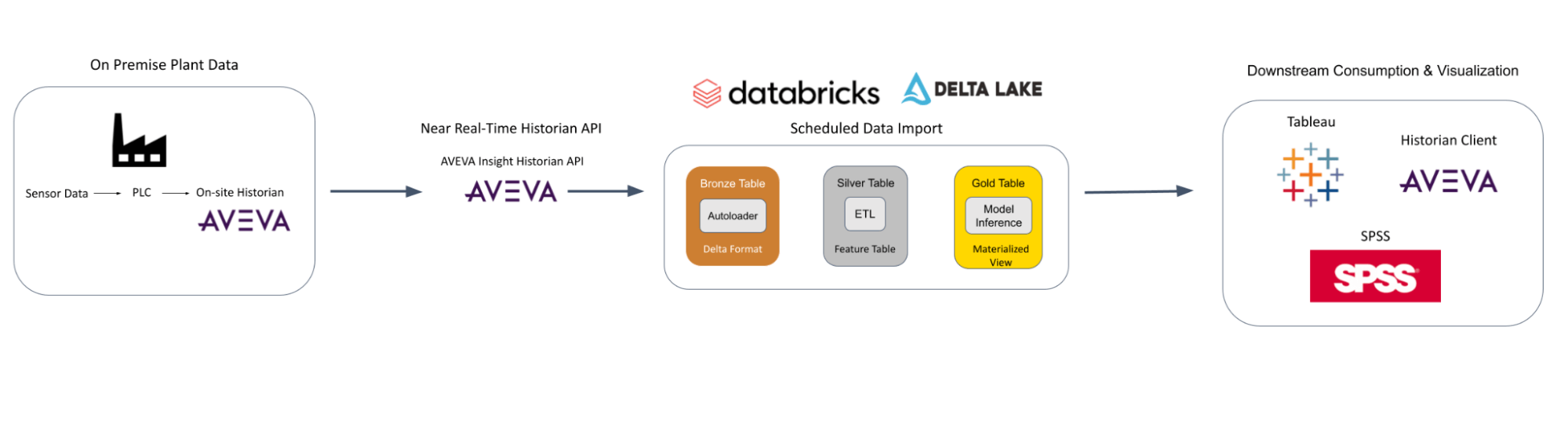

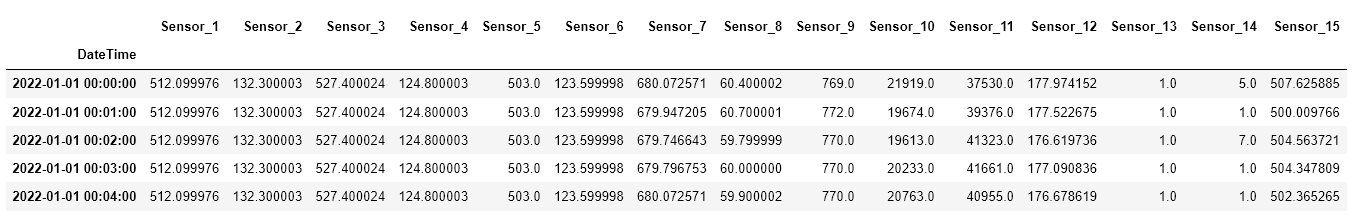

LP Building Solutions (LP) is a wood-based product manufacturing company with an over 50-year track record of shaping the building industry. With operations in North and South America, LP manufactures building product solutions with moisture, fire, and termite resistance. At LP, petabytes of historical process data have been collected for years along with environmental, health, and safety (EHS) data. Large amounts of this historical data have been stored and maintained in a variety of systems such as on-premises SQL servers, data historian databases, statistical process control software, and enterprise asset management solutions. Every millisecond, sensor data is collected throughout the production processes for all of their mills, from handling raw materials to packaging finished products. By building active analytical solutions across a variety of data, the data team can inform decision-makers throughout the company on operational processes, conduct predictive maintenance, and gain insights to make informed data-driven decisions.

One of the largest data-driven use cases at LP was monitoring process anomalies with time-series data from thousands of sensors. With Apache Spark on Databricks, large amounts of data can be ingested and prepared at scale to assist mill decision-makers in improving quality and process metrics. To prepare this data for mill data analytics, data science, and advanced predictive analytics, companies like LP need to process sensor information faster and more reliably than on-premises data warehousing solutions alone can.

Architecture

ML Modeling a Simulated Process

As an example, let’s consider a scenario where small anomalies upstream in a process for a specialty product expand into larger anomalies in multiple systems downstream. Let’s further assume that these larger anomalies in the downstream systems affect product quality and cause a key performance attribute to fall below acceptable limits. Using prior knowledge about the process from mill-level experts, along with changes in the ambient environment and previous runs of this product, it is possible to predict the nature of the anomaly, where it occurred, and how it can affect downstream production.

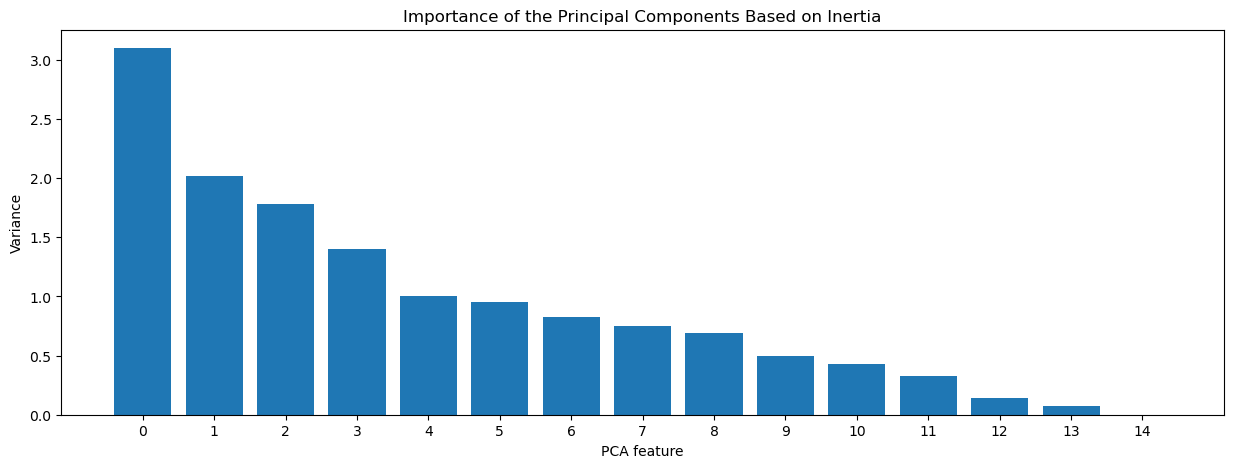

First, applying a dimensionality reduction technique to the time-series sensor data would allow for identification of equipment relationships that may have been missed by operators. The dimensionality reduction also serves as a guide for validating relationships between pieces of equipment that may be intuitive to operators who are exposed to this equipment every day. The primary goal here is to reduce the overhead of many correlated time series by condensing them into relatively independent and relevant time-based relationships. Ideally, this should start with process data that covers as much diversity in acceptable product SKUs and operational windows as possible. These factors will allow for a workable tolerance.

    from sklearn.preprocessing import StandardScaler
    from sklearn.decomposition import PCA
    from sklearn.pipeline import make_pipeline
    import matplotlib.pyplot as plt

    # model_features holds the sensor readings; drop rows with missing values
    names = model_features.columns
    x = model_features[names].dropna()

    # Standardize each sensor, then fit PCA on the scaled data
    scaler = StandardScaler()
    pca = PCA()
    pipeline = make_pipeline(scaler, pca)
    pipeline.fit(x)

Plotting

    # Bar chart of the variance explained by each principal component
    features = range(pca.n_components_)
    _ = plt.figure(figsize=(15, 5))
    _ = plt.bar(features, pca.explained_variance_)
    _ = plt.xlabel('PCA feature')
    _ = plt.ylabel('Variance')
    _ = plt.xticks(features)
    _ = plt.title("Importance of the Principal Components based on inertia")
    plt.show()

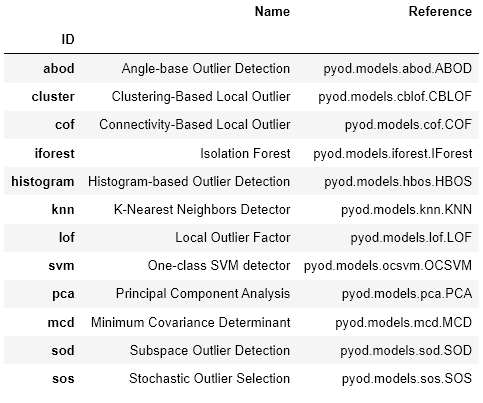
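One common way to read a variance plot like the one above is to keep the smallest number of components whose cumulative share of the variance passes a chosen threshold. The sketch below is a minimal illustration of that idea, not the selection method used at LP: the helper name `components_for_threshold`, the 95% default, and the sample variances are all hypothetical. In practice the input would be something like `pca.explained_variance_` from the fitted pipeline.

```python
from itertools import accumulate


def components_for_threshold(explained_variance, threshold=0.95):
    """Return the smallest number of leading components whose cumulative
    share of the total variance reaches `threshold` (a fraction in (0, 1])."""
    if not 0 < threshold <= 1:
        raise ValueError("threshold must be in (0, 1]")
    total = sum(explained_variance)
    if total <= 0:
        raise ValueError("explained variance must sum to a positive value")
    # Walk the running total until the requested share is covered.
    for count, running in enumerate(accumulate(explained_variance), start=1):
        if running / total >= threshold:
            return count
    return len(explained_variance)


# Hypothetical variances, sorted largest first as PCA reports them.
example_variance = [4.0, 2.0, 1.0, 0.5, 0.25, 0.25]
n_keep = components_for_threshold(example_variance)
```

For the hypothetical variances above, the call returns 5 at the default 95% threshold. A lower threshold keeps fewer components and discards more of the fine-grained behavior.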

Next, these time-based relationships can be fed into an anomaly detection model to identify abnormal behaviors. By detecting anomalies in these relationships, changes in relationship patterns can be attributed to process breakdown, downtime, or general wear and tear of manufacturing equipment. This combined approach uses dimensionality reduction and anomaly detection together to identify system- and process-level failures. Instead of relying on anomaly detection for each sensor individually, it can be even more powerful to use a combined approach to identify holistic sub-system failures. There are many pre-built packages that can be combined to identify relationships and then identify anomalies within those relationships. One example of a pre-built package that can handle this is pycaret.

    from pycaret.anomaly import *

    # Set up the experiment with PCA applied to the input features
    db_ad = setup(data = databricks_df, pca = True, use_gpu = True)

    # List the available anomaly detection models
    models()

    # Train a clustering-based detector and label each row
    model = create_model('cluster')
    model_result = assign_model(model)

    # Share of rows flagged as anomalous versus normal
    print(model_result.Anomaly.value_counts(normalize=True))
    model_result

Models should be run at regular intervals to identify potentially serious process disruptions before the product is completed or the disruption leads to more serious downstream interruptions. If possible, all anomalies should be investigated by a quality manager, site reliability engineer, maintenance manager, or environmental manager, depending on the nature and location of the anomaly.
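To make the case for the combined approach more concrete, the following minimal sketch contrasts a per-sensor check with a check on the relationship between two sensors. It is an illustration under stated assumptions, not LP's model and not what pycaret does internally: it swaps in a closed-form, two-variable PCA (for two standardized, positively correlated variables, the minor principal axis is (1, -1)/sqrt(2) with variance 1 - r), and the function names, the limit of 3, and the sensor values are all hypothetical.

```python
import math
from statistics import mean, stdev


def fit_pair(a, b):
    """Learn the baseline relationship between two sensors from normal data."""
    mean_a, mean_b = mean(a), mean(b)
    std_a, std_b = stdev(a), stdev(b)
    # Sample Pearson correlation of the two baseline series.
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b)) / (len(a) - 1)
    r = cov / (std_a * std_b)
    return {"mean_a": mean_a, "mean_b": mean_b,
            "std_a": std_a, "std_b": std_b, "r": r}


def score(model, a_value, b_value, limit=3.0):
    """Compare per-sensor checks with a check on the sensor relationship."""
    z_a = (a_value - model["mean_a"]) / model["std_a"]
    z_b = (b_value - model["mean_b"]) / model["std_b"]
    # Project onto the minor principal axis (1, -1)/sqrt(2), whose baseline
    # variance is 1 - r; the floor avoids dividing by zero when r is 1.
    minor = (z_a - z_b) / math.sqrt(2)
    minor_z = minor / math.sqrt(max(1 - model["r"], 1e-12))
    return {
        "per_sensor_flag": abs(z_a) > limit or abs(z_b) > limit,
        "relationship_flag": abs(minor_z) > limit,
    }


# Hypothetical baseline: sensor b tracks roughly twice sensor a.
baseline_a = [float(i) for i in range(20)]
baseline_b = [2.0 * i + (0.1 if i % 2 == 0 else -0.1) for i in range(20)]
model = fit_pair(baseline_a, baseline_b)

normal_reading = score(model, 10.0, 20.1)   # follows the usual relationship
broken_reading = score(model, 10.0, 10.0)   # each value in range, pair is not
```

With this hypothetical baseline, the reading (10.0, 10.0) sits well inside each sensor's individual range, so the per-sensor check stays quiet, yet the relationship check flags it. The reading (10.0, 20.1) is flagged by neither check.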

While AI and data availability are key to delivering modern manufacturing capability, insights and process simulations mean nothing if the plant floor operators cannot act upon them. Moving from sensor data collection to data-driven insights, trends, and alerts often requires the skill set of cleaning, munging, modeling, and visualizing in real- or near real-time timescales. This would allow plant decision-makers to respond to unexpected process upsets in the moment, before product quality is affected.

CI/CD and MLOps for Manufacturing Data Science

Eventually, any anomaly detection model trained on this data will become less accurate over time. To address this, a data drift monitoring system can run continuously as a check that separates intentional system changes from unintentional ones. Additionally, intentional disruptions that change the process response will occur that the model has not seen before. These disruptions can include replaced pieces of equipment, new product SKUs, major equipment repair, or changes in raw material. With these two points in mind, data drift monitors should be implemented to distinguish intentional disruptions from unintentional disruptions by checking in with plant-level experts on the process. Upon verification, the results can be incorporated into the previous dataset for retraining of the model.
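The drift check and expert review described above can be sketched as follows. Everything here is an assumption made for illustration: the function names, the simple mean-shift test, and the limit of three standard errors are hypothetical, and a production monitor would likely track more than a single sensor's mean.

```python
import math
from statistics import mean, stdev


def mean_shift_detected(reference, recent, limit=3.0):
    """Flag drift when the recent mean sits more than `limit` standard
    errors from the reference mean (spread estimated from the reference)."""
    standard_error = stdev(reference) / math.sqrt(len(recent))
    if standard_error == 0:
        return mean(recent) != mean(reference)
    shift = abs(mean(recent) - mean(reference)) / standard_error
    return shift > limit


def review_drift(reference, recent, expert_says_intentional):
    """Decide what to do with a recent window of data.

    `expert_says_intentional` stands in for the plant-level expert's answer
    (for example, a new SKU or a replaced piece of equipment).
    """
    if not mean_shift_detected(reference, recent):
        return "no_drift", reference
    if expert_says_intentional:
        # Verified intentional change: fold the new data into the training set.
        return "retrain", reference + recent
    # Unverified change: treat it as a possible process problem instead.
    return "investigate", reference


# Hypothetical readings for one sensor.
reference_window = [10.0, 10.2, 9.8, 10.1, 9.9, 10.0, 10.3, 9.7]
stable_window = [10.1, 9.9, 10.0, 10.2]
shifted_window = [12.0, 12.1, 11.9, 12.2]

stable_action, _ = review_drift(reference_window, stable_window, False)
retrain_action, retrain_data = review_drift(reference_window, shifted_window, True)
investigate_action, _ = review_drift(reference_window, shifted_window, False)
```

Keeping the expert's verdict as an explicit input means an unexplained shift is never silently absorbed into the training data, which is the distinction between intentional and unintentional disruptions that the monitor is meant to preserve.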

Model development and management benefit greatly from robust cloud compute and deployment resources. MLOps, as a practice, offers an organized approach to managing data pipelines, addressing data shifts, and facilitating model development through DevOps best practices. Currently, at LP, the Databricks platform is used for MLOps capabilities for both real-time and near real-time anomaly predictions, along with Azure Cloud-native capabilities and other internal tooling. This integrated approach has allowed the data science team to streamline the model development process, which has led to more efficient production timelines. It also allows the team to focus on more strategic tasks, ensuring the ongoing relevance and effectiveness of their models.

Summary

The Databricks platform has enabled us to utilize petabytes of time-series data in a manageable way. We used Databricks to do the following:

- Streamline the data ingestion process from various sources and efficiently store the data using Delta Lake.

- Quickly transform and manipulate data for use in ML in an efficient and distributed manner.

- Monitor ML models and automated data pipelines for CI/CD MLOps deployment.

These have helped our organization make efficient data-driven decisions that increase success and productivity for LP and our customers.

To learn more about MLOps, please refer to the Big Book of MLOps, and for a deeper dive into the technical intricacies of anomaly detection using Databricks, please read the linked blog.