When building data-driven applications, it’s been standard practice for years to move analytics away from the source database into either a replica, a data warehouse or something similar. The main reason for this is that analytical queries, such as aggregations and joins, tend to require a lot more resources. When they run, the impact on database performance can reverberate back to front-end users and degrade their experience.

Analytical queries tend to take longer to run and use more resources because, firstly, they perform calculations on large data sets and, secondly, they often join several data sets together. Furthermore, a data model that works for fast storage and retrieval of single rows probably won’t be the most performant for large analytical queries.

To alleviate the pressure on the main database, data teams often replicate data to an external database for running analytical queries. Personally, with MongoDB, I find moving data to a SQL-based platform extremely useful for analytics. Most data practitioners understand how to write SQL queries, whereas MongoDB’s query language isn’t as intuitive and takes time to learn. On top of this, MongoDB isn’t a relational database, so joining data isn’t trivial or that performant. It can therefore be beneficial to perform any analytical queries that require joins across multiple and/or large datasets elsewhere.

To this end, Rockset has partnered with MongoDB to release a MongoDB-Rockset connector. This means that data stored in MongoDB can now be directly indexed in Rockset through a built-in connector. In this post I’m going to explore the use cases for using a platform like Rockset for your aggregations and joins on MongoDB data and walk through setting up the integration so you can get up and running yourself.

Recommendations API for an Online Event Ticketing System

To explore the benefits of replicating a MongoDB database into an analytics platform like Rockset, I’ll be using a simulated event ticketing website. MongoDB is used to store weblogs, ticket sales and user data. Online ticketing systems can often have a very high throughput of data in short time frames, especially when sought-after tickets are released and thousands of people are all trying to purchase tickets at the same time.

It’s therefore expected that a scalable, high-throughput database like MongoDB would be used as the backend to such a system. However, if we’re also trying to surface real-time analytics on this data, this could cause performance issues, especially when dealing with a spike in activity. To overcome this, I’ll use Rockset to replicate the data in real time to allow computational freedom on a separate platform. This way, MongoDB is free to deal with the large amount of incoming data, whilst Rockset handles the complex queries for applications such as making recommendations to users, dynamic pricing of tickets, or detecting anomalous transactions.

I’ll run through connecting MongoDB to Rockset and then show how you can build dynamic, real-time recommendations for users that can be accessed via the Rockset REST API.

Connecting MongoDB to Rockset

The MongoDB connector is currently available for use with a MongoDB Atlas cluster. In this article I’ll be using a MongoDB Atlas free tier deployment, so make sure you have access to an Atlas cluster if you are going to follow along.

To get started, open the Rockset console. The MongoDB connector can be found within the Catalog. Select it, then click the Create Collection button followed by Create Integration.

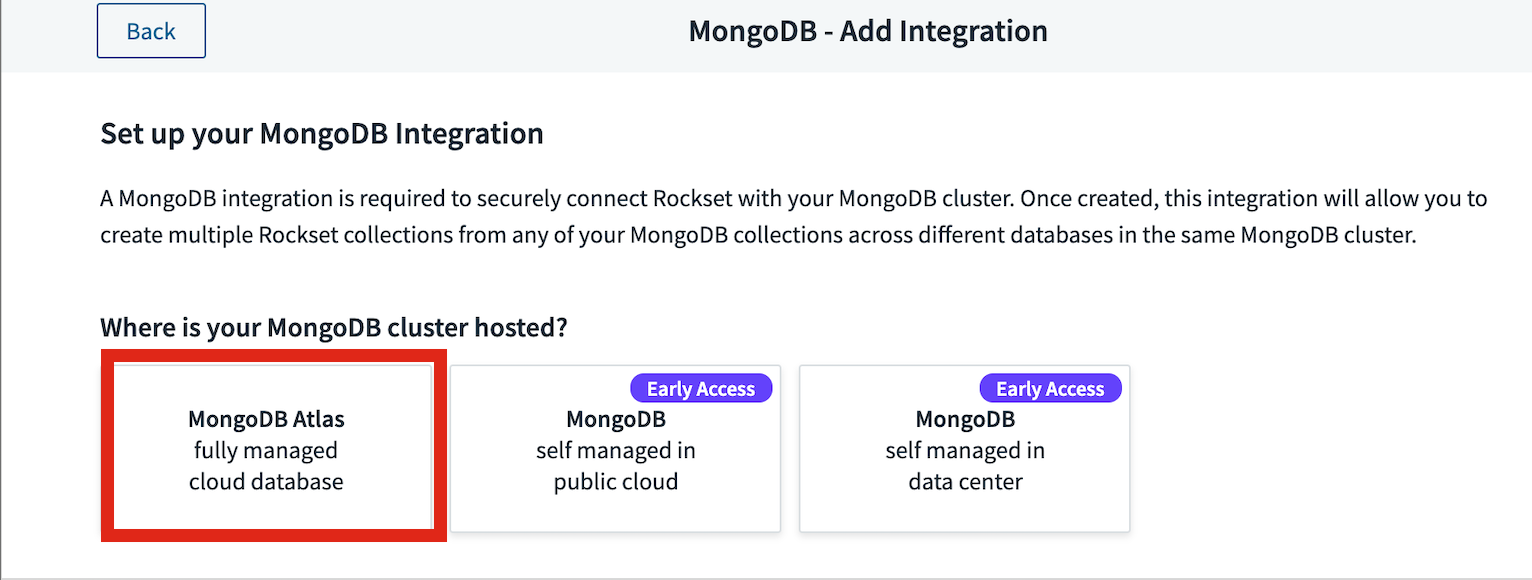

As mentioned earlier, I’ll be using the fully managed MongoDB Atlas integration highlighted in Fig 1.

Fig 1. Adding a MongoDB integration

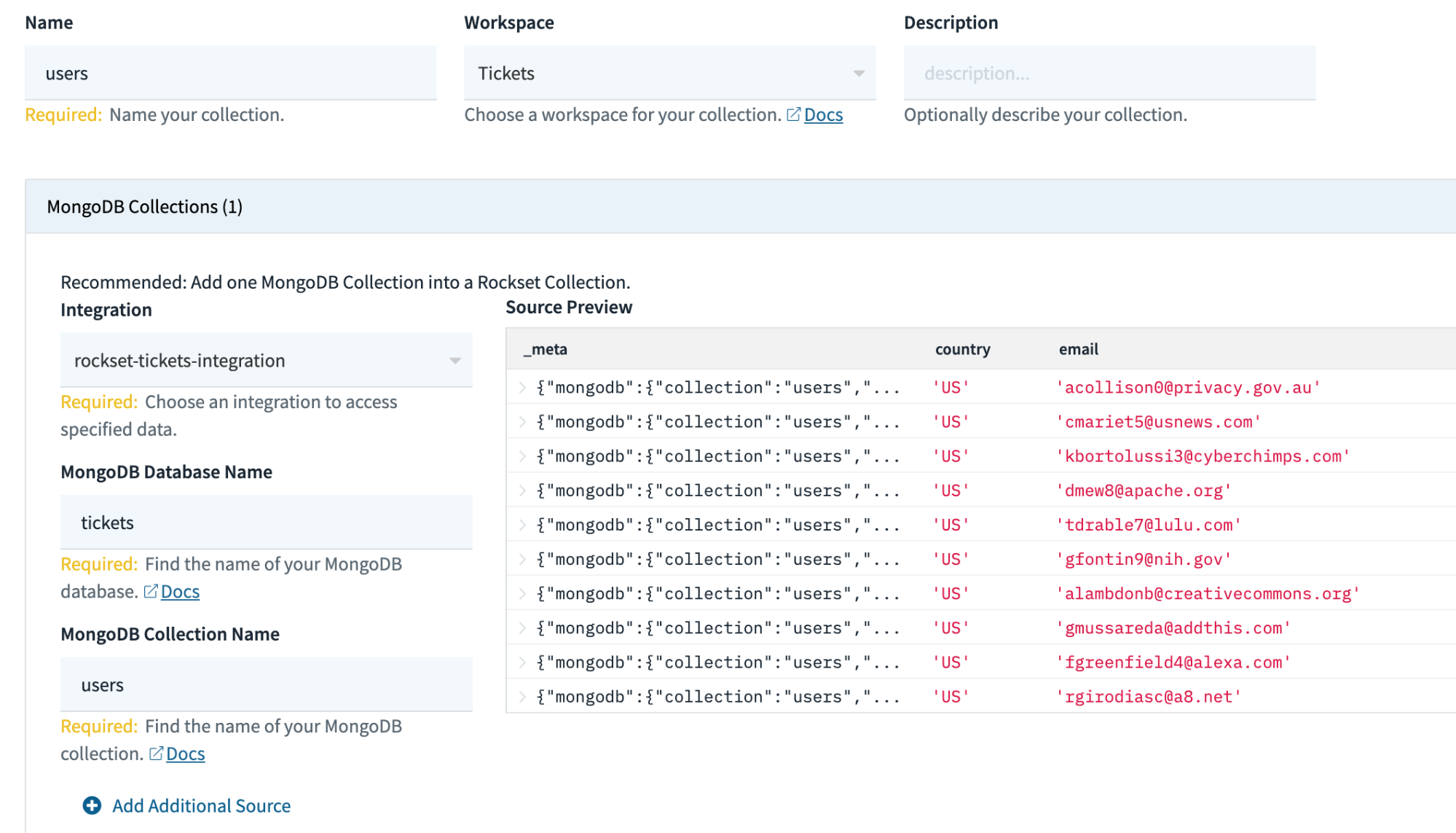

Just follow the instructions to get your Atlas instance integrated with Rockset and you’ll then be able to use this integration to create Rockset collections. You may find you need to tweak a few permissions in Atlas for Rockset to be able to see the data, but if everything is working, you’ll see a preview of your data whilst creating the collection, as shown in Fig 2.

Fig 2. Creating a MongoDB collection

Using this same integration I’ll be creating 3 collections in total: users, tickets and logs. These collections in MongoDB are used to store user data including favorite genres, ticket purchases and weblogs respectively.

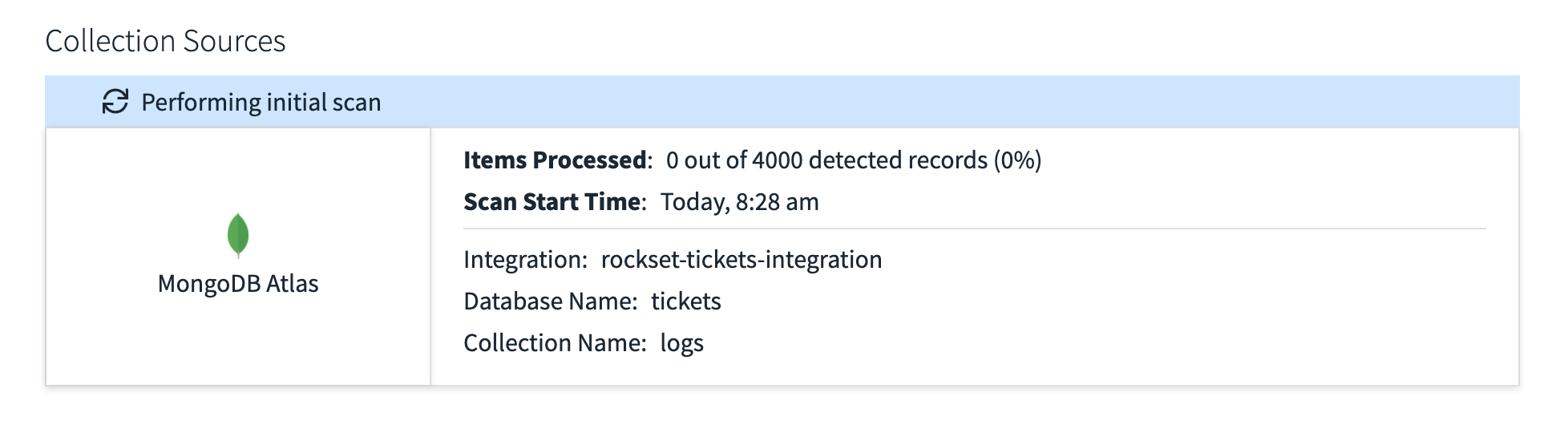

After creating the collection, Rockset will then fetch all the data from Mongo for that collection and give you a live update of how many records it has processed. Fig 3 shows the initial scan of my logs table reporting that it has found 4000 records but 0 have been processed.

Fig 3. Performing initial scan of MongoDB collection

Within just a minute all 4000 records had been processed and brought into Rockset. As new data is added or updates are made, Rockset will replicate them in the collection too. To test this out I simulated a few scenarios.

Testing the Sync

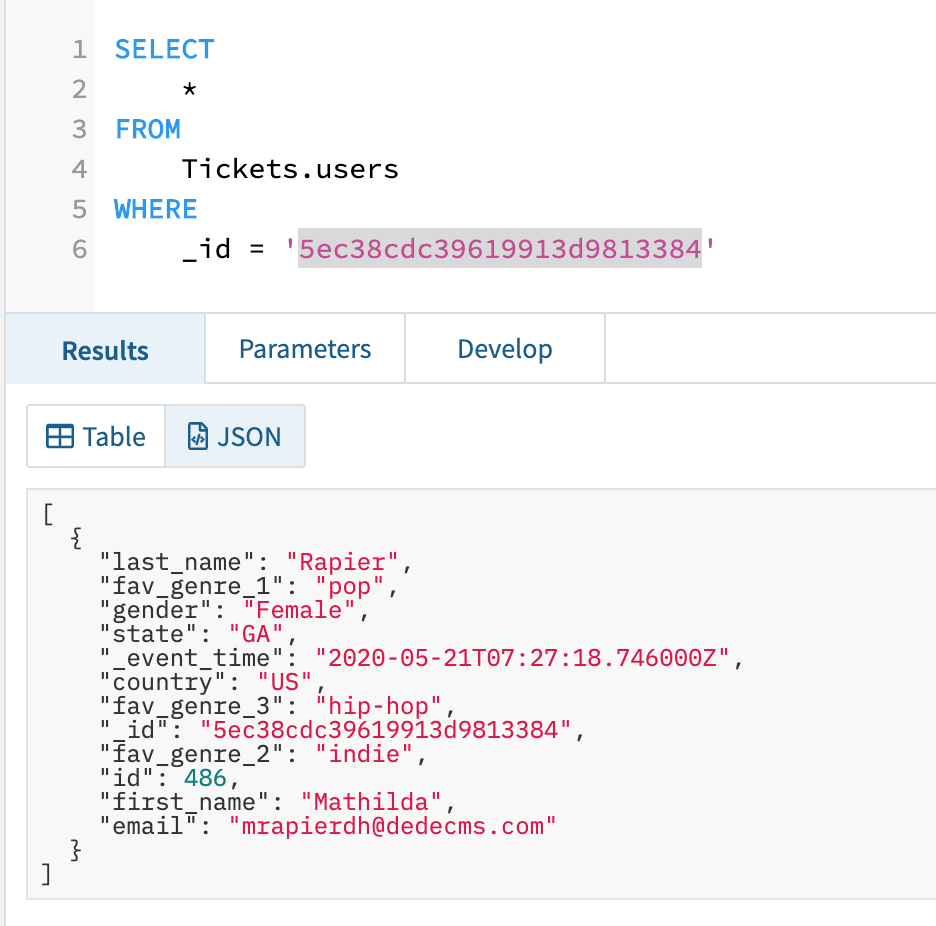

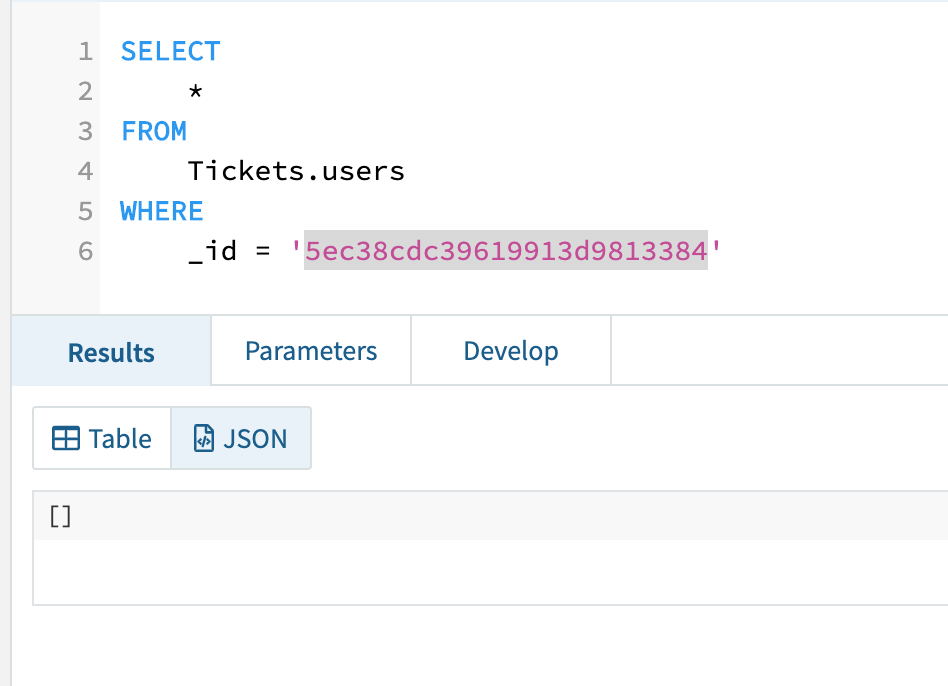

To test the syncing capability between Mongo and Rockset I simulated some updates and deletes on my data to check they were synced correctly. You can see the initial version of the record in Rockset in Fig 4.

Fig 4. Example user record before an update

Now let’s say that this user changes one of their favorite genres: fav_genre_1 is now ‘pop’ instead of ‘r&b’. First I’ll perform the update in Mongo like so.

db.users.update({"_id": ObjectId("5ec38cdc39619913d9813384")}, { $set: {"fav_genre_1": "pop"} } )

Then I run my query in Rockset again and check whether it has reflected the change. As you can see in Fig 5, the update was synced correctly to Rockset.

Fig 5. Updated record in Rockset

I then removed the record from Mongo and, as shown in Fig 6, the record no longer exists in Rockset.

Fig 6. Deleted record in Rockset

Now that we’re confident Rockset is correctly syncing our data, we can start to leverage Rockset to perform analytical queries on the data.

Composing Our Recommendations Query

We can now query our data within Rockset. We’ll start in the console and look at some examples before moving on to using the API.

We can now use standard SQL to query our MongoDB data and this brings one notable benefit: the ability to easily join datasets together. If we wanted to show the number of tickets purchased by users, displaying their first and last name and number of tickets, in Mongo we’d have to write a fairly lengthy and complicated query, especially for those unfamiliar with Mongo query syntax. In Rockset we can just write a straightforward SQL query.

SELECT users.id, users.first_name as "First Name", users.last_name as "Last Name", count(tickets.ticket_id) as "Number of Tickets Purchased"

FROM Tickets.users

LEFT JOIN Tickets.tickets ON tickets.user_id = users.id

GROUP BY users.id, users.first_name, users.last_name

ORDER BY 4 DESC;
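For comparison, here is a rough sketch of what the same join could look like as a MongoDB aggregation pipeline, written as plain Python data. It assumes the collections are named users and tickets and are linked by users.id and tickets.user_id, as in the SQL above; the function name and the output field names (user_tickets, tickets_purchased) are my own and purely illustrative. It also counts every joined ticket document, which only matches count(tickets.ticket_id) if every ticket has a ticket_id.

```python
def tickets_per_user_pipeline():
    """Build an aggregation pipeline roughly equivalent to the SQL join."""
    return [
        # Left outer join: attach each user's tickets as an array field.
        {"$lookup": {
            "from": "tickets",
            "localField": "id",
            "foreignField": "user_id",
            "as": "user_tickets",  # hypothetical field name
        }},
        # Keep the name fields and count the joined tickets.
        {"$project": {
            "_id": 0,
            "id": 1,
            "first_name": 1,
            "last_name": 1,
            "tickets_purchased": {"$size": "$user_tickets"},
        }},
        # Most active purchasers first, like ORDER BY 4 DESC.
        {"$sort": {"tickets_purchased": -1}},
    ]
```

With a driver such as PyMongo, a pipeline like this would typically be passed to the aggregate method of the users collection. Even in this small case, the three-stage pipeline is noticeably more to take in than the SQL.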

With this in mind, let’s write some queries to provide recommendations to users and show how they could be integrated into a website or other front end.

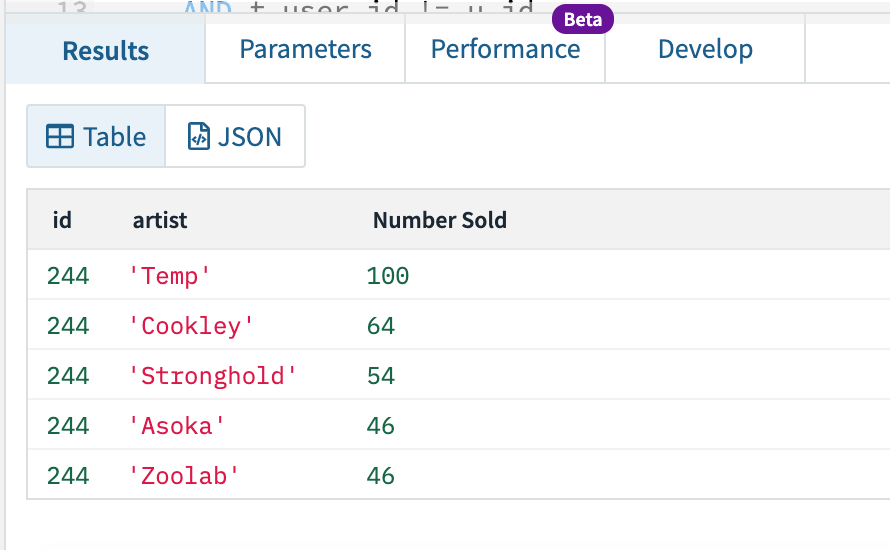

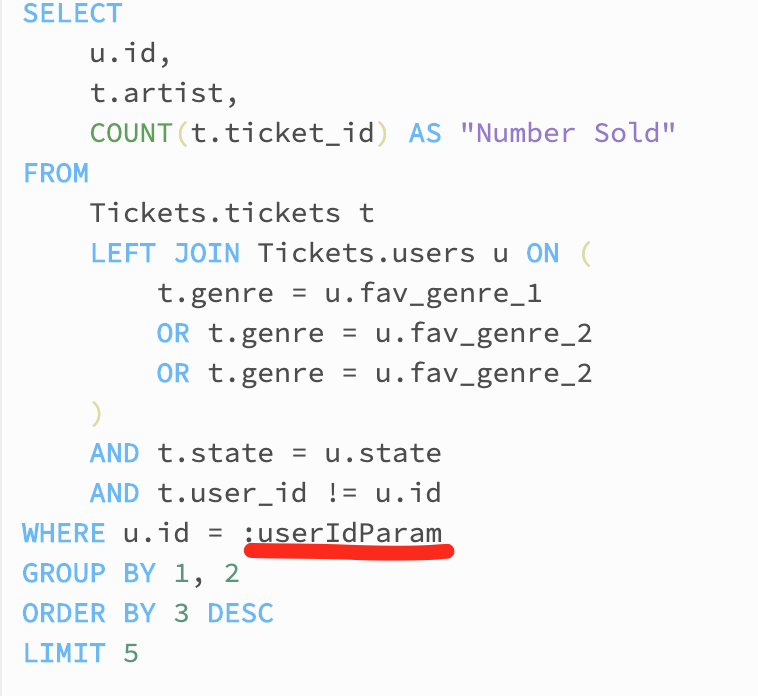

First we can develop and test our query in the Rockset console. We’re going to look for the top 5 tickets that have been purchased for a user’s favorite genres within their state. We’ll use user ID 244 for this example.

SELECT

u.id,

t.artist,

count(t.ticket_id)

FROM

Tickets.tickets t

LEFT JOIN Tickets.users u on (

t.genre = u.fav_genre_1

OR t.genre = u.fav_genre_2

)

AND t.state = u.state

AND t.user_id != u.id

WHERE u.id = 244

GROUP BY 1, 2

ORDER BY 3 DESC

LIMIT 5

This should return the top 5 tickets being recommended for this user.

Fig 7. Recommendation query results

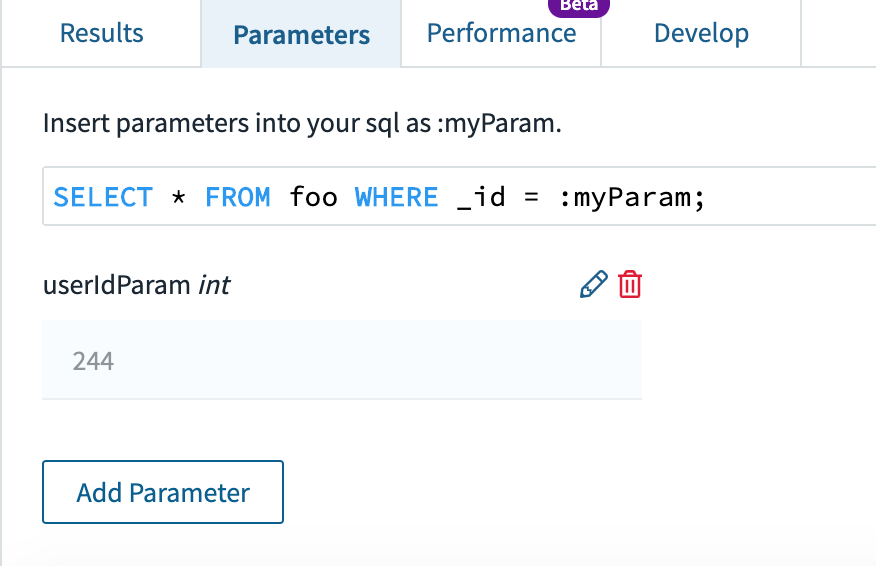

Now obviously we want this query to be dynamic so that we can run it for any user and return the results to the front end to be displayed to the user. To do this we can create a Query Lambda in Rockset. Think of a Query Lambda like a stored procedure or a function. Instead of writing the SQL every time, we just call the Lambda and tell it which user to run for, and it submits the query and returns the results.

The first thing we need to do is prep our statement so that it’s parameterised before turning it into a Query Lambda. To do this, select the Parameters tab above where the results are shown in the console. You can then add a parameter; in this case I added an int parameter called userIdParam, as shown in Fig 8.

Fig 8. Adding a user ID parameter

With a slight tweak to our where clause, shown in Fig 9, we can then utilise this parameter to make our query dynamic.

Fig 9. Parameterised where clause

With our statement parameterised, we can now click the Create Query Lambda button above the SQL editor. Give it a name and description and save it. This is now a function we can call to run the SQL for a given user. In the next section I’ll run through using this Lambda via the REST API, which would then allow a front-end interface to display the results to users.
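Since Fig 9 is a screenshot, here is a small self-contained sketch of the same idea that you can run locally. It is not Rockset: it uses Python’s built-in sqlite3 module as a stand-in, with a named placeholder called userIdParam in the where clause, and a handful of made-up rows. The table layout, the function names and the sample data are all assumptions for illustration only.

```python
import sqlite3

# The recommendation query with the hard-coded 244 swapped for a named parameter.
RECOMMENDATION_SQL = """
SELECT u.id, t.artist, COUNT(t.ticket_id) AS tickets_sold
FROM tickets t
LEFT JOIN users u
  ON (t.genre = u.fav_genre_1 OR t.genre = u.fav_genre_2)
 AND t.state = u.state
 AND t.user_id != u.id
WHERE u.id = :userIdParam
GROUP BY u.id, t.artist
ORDER BY tickets_sold DESC
LIMIT 5
"""


def demo_connection():
    """Create a tiny in-memory dataset (made-up rows, for illustration only)."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE users (id INTEGER, state TEXT, fav_genre_1 TEXT, fav_genre_2 TEXT);
        CREATE TABLE tickets (ticket_id INTEGER, user_id INTEGER, artist TEXT,
                              genre TEXT, state TEXT);
        INSERT INTO users VALUES (244, 'NY', 'pop', 'rock'), (7, 'NY', 'jazz', 'pop');
        INSERT INTO tickets VALUES
            (1, 7, 'Artist A', 'pop', 'NY'),
            (2, 7, 'Artist A', 'pop', 'NY'),
            (3, 7, 'Artist B', 'rock', 'NY'),
            (4, 7, 'Artist C', 'pop', 'CA'),
            (5, 244, 'Artist D', 'pop', 'NY');
    """)
    return conn


def recommend(conn, user_id):
    """Run the recommendation query for one user via the named parameter."""
    return conn.execute(RECOMMENDATION_SQL, {"userIdParam": user_id}).fetchall()


print(recommend(demo_connection(), 244))
# prints [(244, 'Artist A', 2), (244, 'Artist B', 1)]
```

The point is that the SQL text never changes between users; only the value bound to userIdParam does, which is what makes the statement suitable for wrapping in something like a Query Lambda.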

Recommendations via REST API

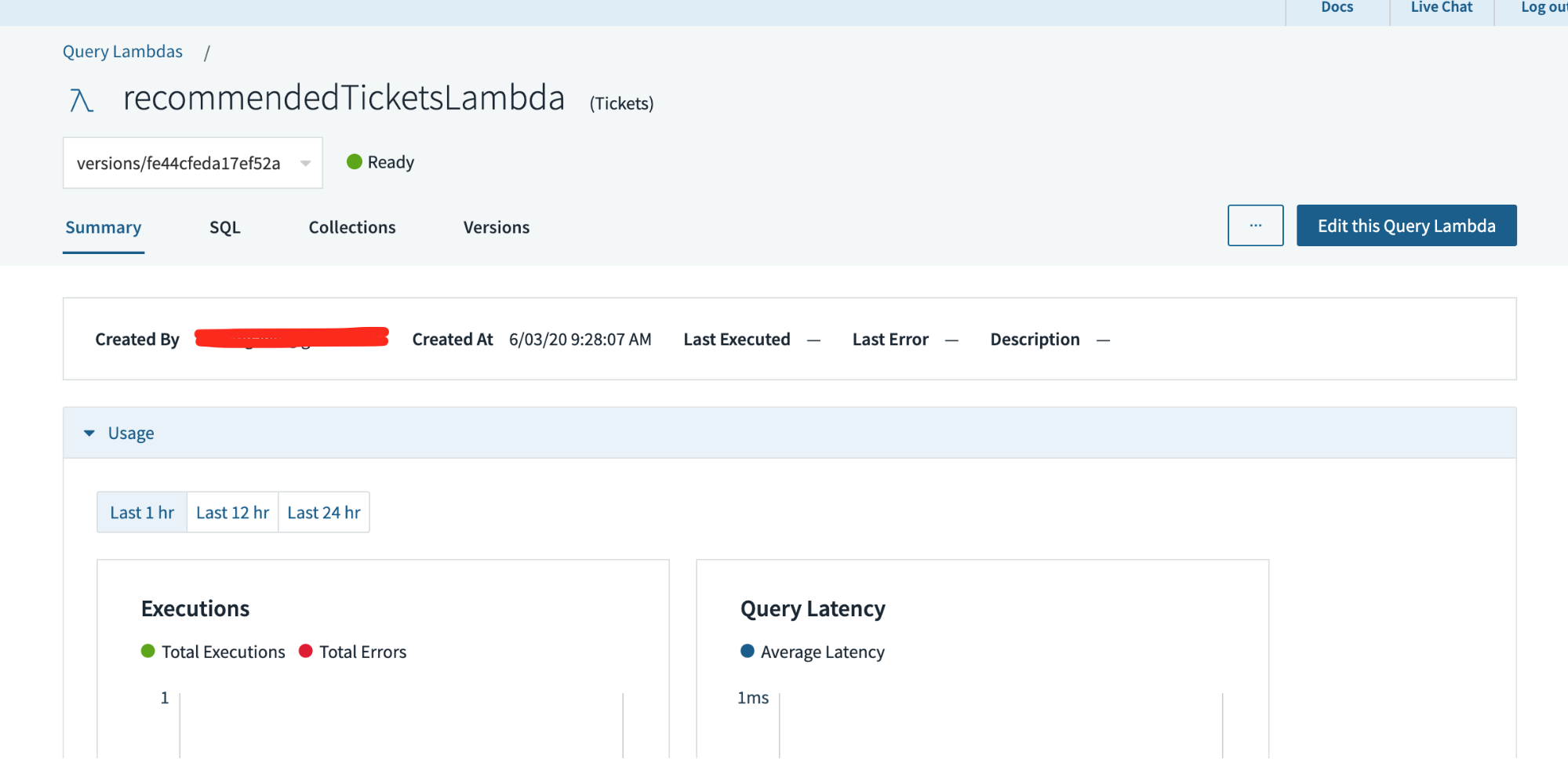

To see the Lambda you’ve just created, select Query Lambdas in the left-hand navigation and then select the Lambda you’ve just created. You’ll be presented with the screen shown in Fig 10.

Fig 10. Query Lambda overview

This page shows us details about how often the Lambda has been run and its average latency. We can also edit the Lambda, look at the SQL and see the version history.

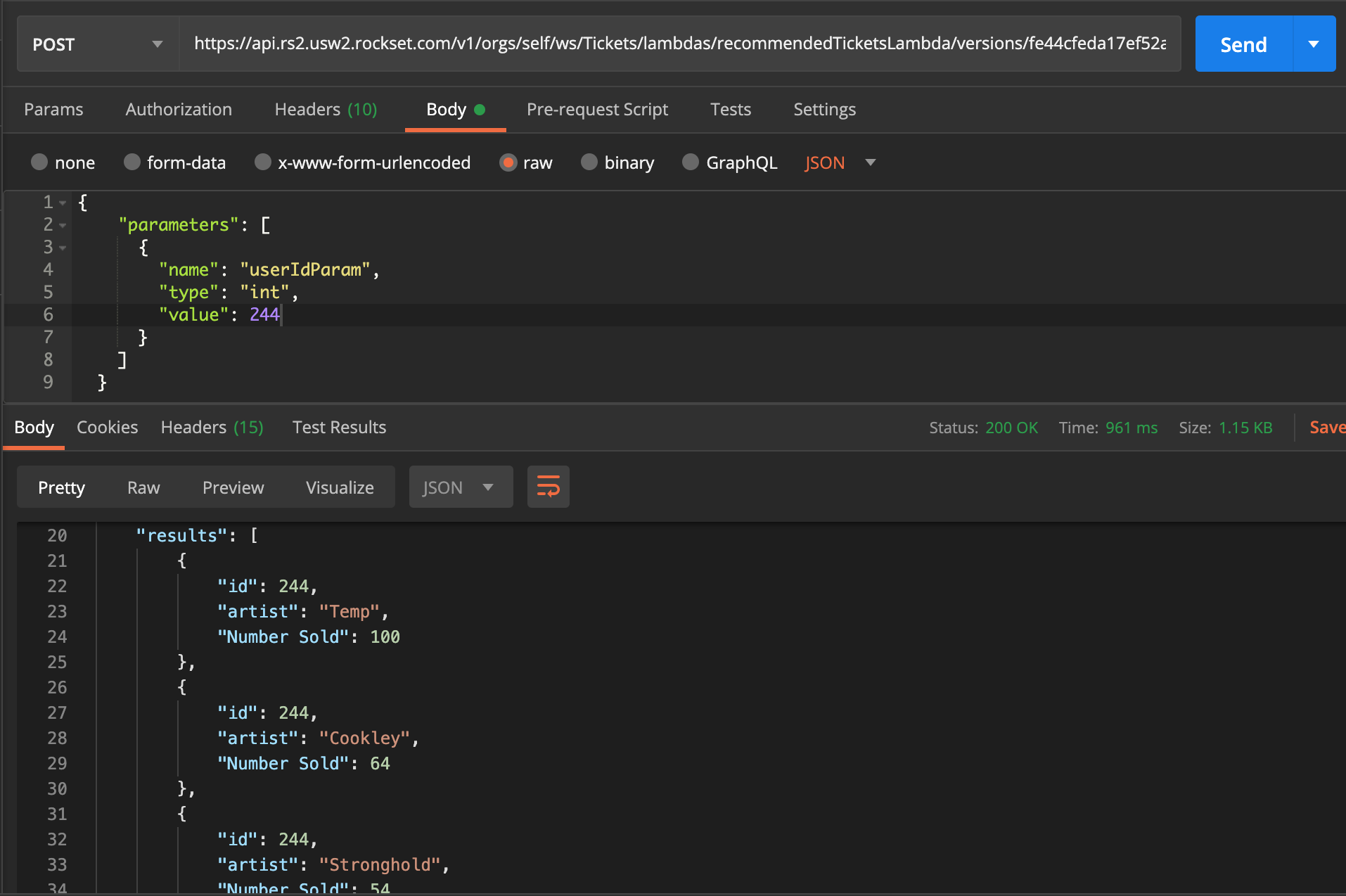

Scrolling down the page we’re also given examples of code that we could use to execute the Lambda. I’m going to take the Curl example and copy it into Postman so we can try it out. Note, you may need to configure the REST API first and get yourself a key set up (in the console, go to ‘API Keys’ in the left navigation).

Fig 11. Query Lambda Curl example in Postman

As you can see in Fig 11, I’ve imported the API call into Postman and can simply change the value of the userIdParam within the body, in this case to id 244, and get the results back. As you can see from the results, user 244’s highest recommended artist is ‘Temp’ with 100 tickets purchased recently in their state. This could then be displayed to the user when searching for tickets, or on a homepage that offers recommended tickets.
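If you’d rather call the Lambda from code than from Postman, a minimal Python sketch using only the standard library might look like the following. Treat the details as assumptions: the endpoint URL is deliberately left as an argument (copy the real one from the Curl example in your console), and the “ApiKey” authorization scheme and the shape of the parameters body are my best understanding of the request shown in Fig 11 rather than something confirmed in this article. The function names are hypothetical.

```python
import json
import urllib.request


def build_lambda_request(lambda_url, api_key, user_id):
    """Build (but do not send) a POST request that executes the Query Lambda.

    lambda_url: the endpoint copied from the console's Curl example.
    api_key:    a key created under 'API Keys' in the console.
    """
    # Assumed body shape: a list of named, typed parameters.
    payload = {
        "parameters": [
            {"name": "userIdParam", "type": "int", "value": str(user_id)}
        ]
    }
    return urllib.request.Request(
        lambda_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"ApiKey {api_key}",  # assumed auth scheme
            "Content-Type": "application/json",
        },
        method="POST",
    )


def fetch_recommendations(request):
    """Send the request and return the decoded JSON response."""
    with urllib.request.urlopen(request) as response:
        return json.load(response)
```

A front end could then call fetch_recommendations(build_lambda_request(url, key, 244)) and render whatever rows come back. Keeping the request building separate from the sending makes it easy to inspect the payload before hitting the API.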

Conclusion

The beauty of this is that all the work is done by Rockset, freeing up our Mongo instance to deal with large spikes in ticket purchases and user activity. As users continue to purchase tickets, the data is copied over to Rockset in real time and the recommendations for users will therefore be updated in real time too. This means timely and accurate recommendations that will improve overall user experience.

The implementation of the Query Lambda means that the recommendations are available for use immediately and any changes to the underlying functionality of making recommendations can be rolled out to all consumers of the data very quickly, as they’re all using the underlying function.

These two features provide great improvements over accessing MongoDB directly and give developers more analytical power without affecting core business functionality.


Lewis Gavin has been a data engineer for five years and has also been blogging about skills within the Data community for four years on a personal blog and Medium. During his computer science degree, he worked for the Airbus Helicopter team in Munich enhancing simulator software for military helicopters. He then went on to work for Capgemini where he helped the UK government move into the world of Big Data. He’s currently using this experience to help transform the data landscape at easyfundraising.org.uk, an online charity cashback site, where he is helping to shape their data warehousing and reporting capability from the ground up.

Photo by Tuur Tisseghem from Pexels