OPENAI

The researchers point out that the problem is hard to study because superhuman machines don’t exist. So they used stand-ins. Instead of looking at how humans could supervise superhuman machines, they looked at how GPT-2, a model that OpenAI released five years ago, could supervise GPT-4, OpenAI’s latest and most powerful model. “If you can do that, it might be evidence that you can use similar techniques to have humans supervise superhuman models,” says Collin Burns, another researcher on the superalignment team.

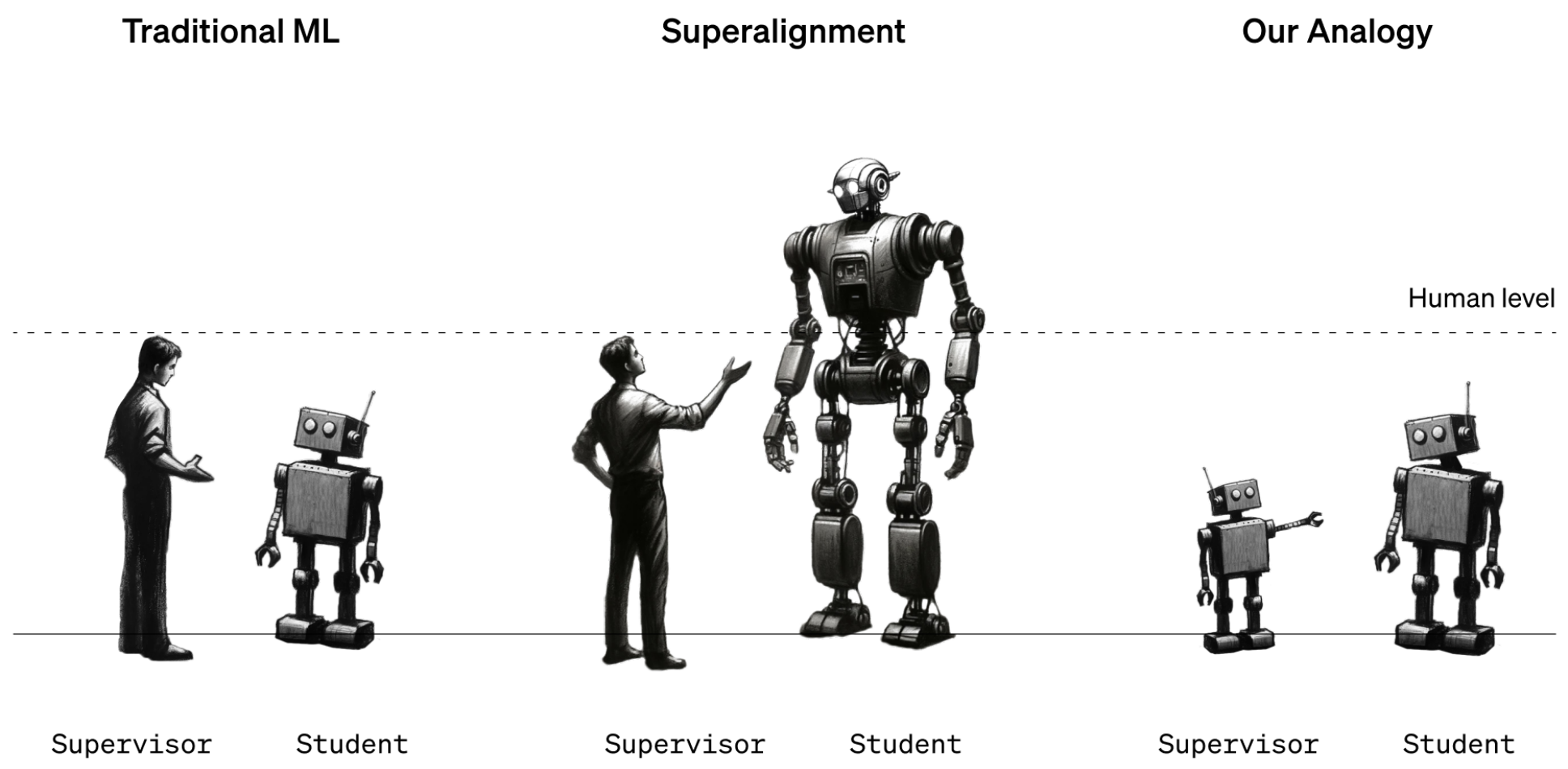

The team took GPT-2 and trained it to perform a handful of different tasks, including a set of chess puzzles and 22 common natural-language-processing tests that assess inference, sentiment analysis, and so on. They used GPT-2’s responses to those tests and puzzles to train GPT-4 to perform the same tasks. It’s as if a 12th grader were taught how to do a task by a third grader. The trick was to do it without GPT-4 taking too big a hit in performance.

The results were mixed. The team measured the gap in performance between GPT-4 trained on GPT-2’s best guesses and GPT-4 trained on correct answers. They found that GPT-4 trained by GPT-2 performed 20% to 70% better than GPT-2 on the language tasks but did less well on the chess puzzles.
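The comparison in the paragraph above can be boiled down to a single ratio. The sketch below assumes the “performance gap recovered” framing used in OpenAI’s accompanying research paper: the share of the gap between the weak teacher and the strong model’s ceiling (the strong model trained on correct answers) that the weakly supervised strong model manages to close. The function name and the accuracy figures are hypothetical illustrations, not numbers from the study.

```python
def performance_gap_recovered(weak: float, weak_to_strong: float, strong_ceiling: float) -> float:
    """Fraction of the weak-to-ceiling gap closed by the weakly supervised strong model.

    Hypothetical helper for illustration.
    weak:           accuracy of the weak teacher (e.g. GPT-2) on a task
    weak_to_strong: accuracy of the strong model (e.g. GPT-4) trained on the teacher's labels
    strong_ceiling: accuracy of the strong model trained on correct labels
    """
    gap = strong_ceiling - weak
    if gap <= 0:
        # The ratio only makes sense when the strong model's ceiling beats the teacher.
        raise ValueError("strong_ceiling must exceed weak")
    return (weak_to_strong - weak) / gap


# Illustrative accuracies only; these are not reported results.
pgr = performance_gap_recovered(weak=0.60, weak_to_strong=0.72, strong_ceiling=0.90)
print(f"{pgr:.2f}")  # fraction of the gap recovered
```

A value of 0 means the student did no better than its teacher, and a value of 1 means it matched what it achieves when trained on correct answers; anything in between means it beat its teacher but fell short of its own ceiling.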

The fact that GPT-4 outdid its teacher at all is impressive, says team member Pavel Izmailov: “This is a really surprising and positive result.” But it fell far short of what it could do by itself, he says. They conclude that the approach is promising but needs more work.

“It’s an interesting idea,” says Thilo Hagendorff, an AI researcher at the University of Stuttgart in Germany who works on alignment. But he thinks that GPT-2 might be too dumb to be a good teacher. “GPT-2 tends to give nonsensical responses to any task that is slightly complex or requires reasoning,” he says. Hagendorff would like to know what would happen if GPT-3 were used instead.

He also notes that this approach does not address Sutskever’s hypothetical scenario in which a superintelligence hides its true behavior and pretends to be aligned when it isn’t. “Future superhuman models will likely possess emergent abilities which are unknown to researchers,” says Hagendorff. “How can alignment work in these cases?”

But it’s easy to point out shortcomings, he says. He is pleased to see OpenAI moving from speculation to experiment: “I applaud OpenAI for their effort.”

OpenAI now wants to recruit others to its cause. Alongside this research update, the company announced a new $10 million pot of money that it plans to use to fund people working on superalignment. It will offer grants of up to $2 million to university labs, nonprofits, and individual researchers, and one-year fellowships of $150,000 to graduate students. “We’re really excited about this,” says Aschenbrenner. “We really think there’s a lot that new researchers can contribute.”