Following the announcements we made last week about Retrieval Augmented Generation (RAG), we’re excited to announce major updates to Model Serving. Databricks Model Serving now offers a unified interface, making it easier to experiment with, customize, and productionize foundation models across all clouds and providers. This means you can build high-quality GenAI apps using the best model for your use case while securely leveraging your organization’s unique data.

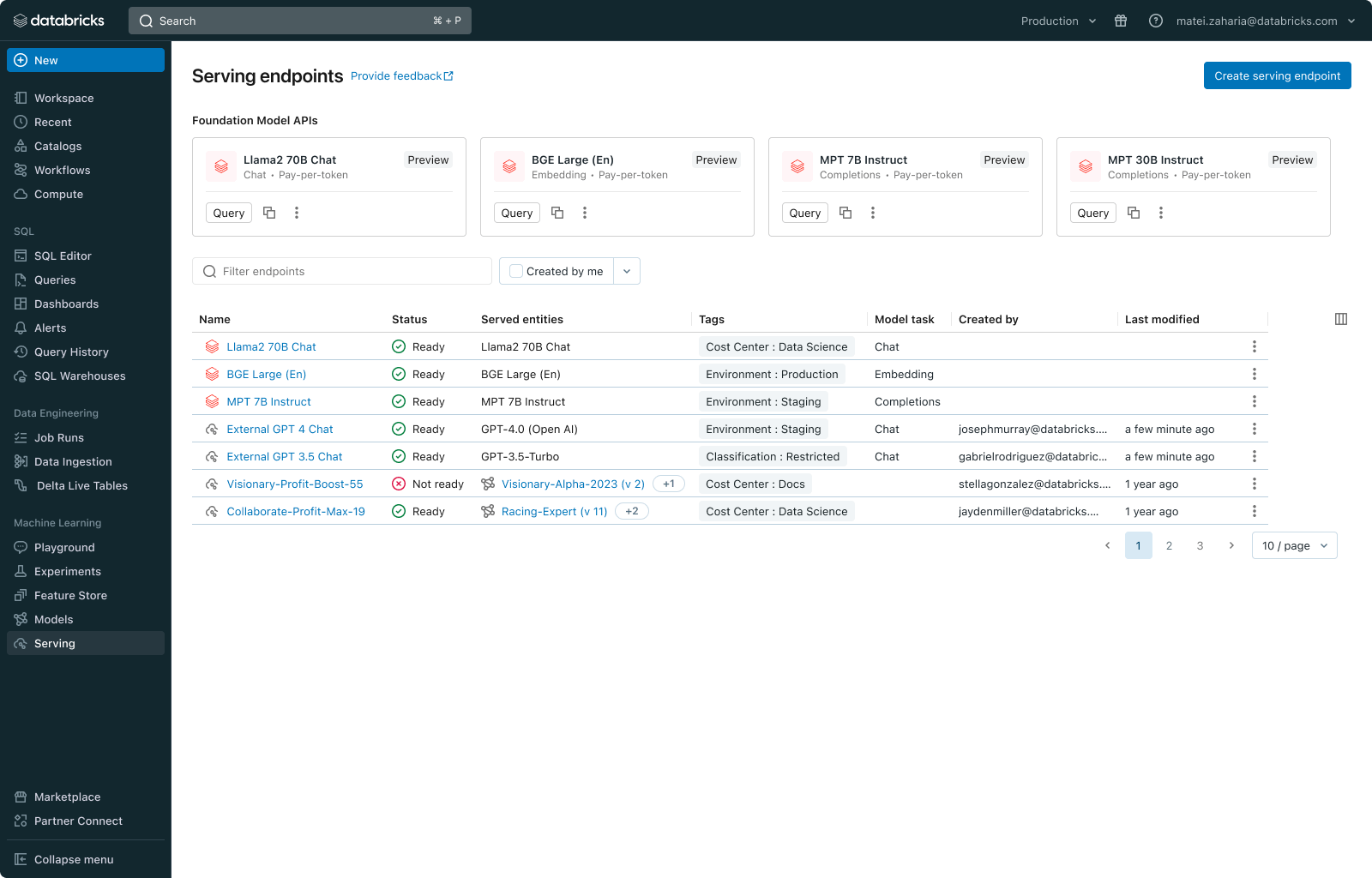

The new unified interface lets you manage all models in one place and query them with a single API, regardless of whether they’re hosted on Databricks or externally. Additionally, we’re releasing Foundation Model APIs that provide instant access to popular Large Language Models (LLMs), such as Llama2 and MPT models, directly from within Databricks. These APIs come with on-demand pricing options, such as pay-per-token or provisioned throughput, reducing cost and increasing flexibility.
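To make the trade-off between the two pricing options concrete, here is a back-of-the-envelope sketch. The prices are invented placeholders, not Databricks rates, and provisioned capacity is modeled as a flat hourly rate, which is a simplifying assumption. The point is only that pay-per-token cost grows with volume while reserved capacity costs the same whether or not it is used, so there is a break-even volume.

```python
# Back-of-the-envelope comparison of two pricing models.
# All prices are HYPOTHETICAL placeholders, not actual Databricks rates.


def pay_per_token_cost(tokens: int, price_per_million_tokens: float) -> float:
    """Cost when every token is billed individually."""
    return tokens / 1_000_000 * price_per_million_tokens


def provisioned_cost(hours: float, hourly_rate: float) -> float:
    """Cost when capacity is reserved and billed per hour (assumed flat rate), regardless of usage."""
    return hours * hourly_rate


def break_even_tokens_per_hour(price_per_million_tokens: float, hourly_rate: float) -> float:
    """Token volume per hour at which both options cost the same."""
    return hourly_rate / price_per_million_tokens * 1_000_000


if __name__ == "__main__":
    PRICE_PER_M = 2.0  # hypothetical $ per 1M tokens
    HOURLY = 10.0      # hypothetical $ per hour of provisioned capacity
    print(break_even_tokens_per_hour(PRICE_PER_M, HOURLY))
    print(pay_per_token_cost(1_000_000, PRICE_PER_M))
    print(provisioned_cost(1, HOURLY))
```

With these made-up numbers, traffic below 5M tokens per hour is cheaper on pay-per-token and traffic above it is cheaper on reserved capacity, assuming the provisioned endpoint can actually sustain that throughput.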

Start building GenAI apps today! Visit the Databricks AI Playground to quickly try generative AI models directly from your workspace.

Challenges with Productionizing Foundation Models

Software has revolutionized every industry, and we believe AI will soon transform existing software to be more intelligent. The implications are vast and varied, affecting everything from customer support to healthcare and education. While many of our customers have already begun integrating AI into their products, moving to full-scale production still poses several challenges:

- Experimenting Across Models: Every use case requires experimentation to identify the best model among multiple open and proprietary options. Enterprises need to experiment across models quickly, which means managing credentials, rate limits, permissions, and query syntaxes from different model providers.

- Lacking Enterprise Context: Foundation models have broad general knowledge but lack an organization’s internal knowledge and domain expertise. Used as is, they don’t fully meet unique business requirements.

- Operationalizing Models: Requests and model responses need to be consistently monitored for quality, debugging, and safety purposes. Differing interfaces across models make them difficult to govern and integrate.

Databricks Model Serving: Unified Serving for any Foundation Model

Databricks Model Serving is already used in production by hundreds of enterprises for a wide range of use cases, including Large Language Model and Vision applications. With the latest update, we’re making it significantly simpler to query, govern, and monitor any Foundation Model.

“With Databricks Model Serving, we’re able to integrate generative AI into our processes to improve customer experience and increase operational efficiency. Model Serving allows us to deploy LLM models while retaining full control over our data and model.”

– Ben Dias, Director of Data Science and Analytics at easyJet

Access any Foundation Model

Databricks Model Serving supports any Foundation Model, whether a fully custom model, a Databricks-managed model, or a third-party Foundation Model. This flexibility lets you choose the right model for the right job and keeps you ahead of future advances in the range of available models. To realize this vision, today we’re introducing two new capabilities:

- Foundation Model APIs: Foundation Model APIs provide instant access to popular foundation models on Databricks. These APIs completely remove the hassle of hosting and deploying foundation models while ensuring your data stays secure within Databricks’ security perimeter. You can get started with Foundation Model APIs on a pay-per-token basis, which significantly reduces operational costs. Alternatively, for workloads requiring fine-tuned models or performance guarantees, you can switch to Provisioned Throughput (previously known as Optimized LLM Serving). The APIs currently support several models, including chat (llama-2-70b-chat), completion (mpt-30B-instruct & mpt-7B-instruct), and embedding models (bge-large-en-v1.5). We will be expanding the model options over time.

- External Models: External Models (formerly AI Gateway) let you add endpoints for models hosted outside of Databricks, such as Azure OpenAI GPT models, Anthropic Claude models, or AWS Bedrock models. Once added, these models can be managed from within Databricks.
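The three task types listed above (chat, completion, embedding) take differently shaped requests. The sketch below assumes the OpenAI-style bodies used by OpenAI-compatible endpoints: `messages` for chat, `prompt` for completions, and `input` for embeddings, with responses carrying `choices` or `data`. The helper functions are hypothetical and only build and parse plain dictionaries, so they run without a workspace; check the exact schema against the current Databricks documentation.

```python
from typing import Any, Dict, List

# Hypothetical helpers: build OpenAI-style request bodies for each task type.
# Field names assume the OpenAI-compatible format; verify against the docs.


def chat_request(user_message: str, max_tokens: int = 64) -> Dict[str, Any]:
    return {"messages": [{"role": "user", "content": user_message}], "max_tokens": max_tokens}


def completion_request(prompt: str, max_tokens: int = 64) -> Dict[str, Any]:
    return {"prompt": prompt, "max_tokens": max_tokens}


def embedding_request(texts: List[str]) -> Dict[str, Any]:
    return {"input": texts}


# Matching parsers for OpenAI-style responses.
def parse_chat(response: Dict[str, Any]) -> str:
    return response["choices"][0]["message"]["content"]


def parse_completion(response: Dict[str, Any]) -> str:
    return response["choices"][0]["text"]


def parse_embeddings(response: Dict[str, Any]) -> List[List[float]]:
    # Sort by "index" so the vectors line up with the order of the inputs.
    items = sorted(response["data"], key=lambda item: item["index"])
    return [item["embedding"] for item in items]
```

With an MLflow deployments client (`mlflow.deployments.get_deploy_client("databricks")`), each body would be passed as the `inputs` argument of `predict`, for example `client.predict(endpoint=..., inputs=embedding_request(["some text"]))`, where the endpoint name is whatever your workspace exposes.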

Additionally, we have added a list of curated foundation models to the Databricks Marketplace, an open marketplace for data and AI assets. These models can be fine-tuned and deployed on Model Serving.

“Databricks’ Foundation Model APIs allow us to query state-of-the-art open models with the push of a button, letting us focus on our customers rather than on wrangling compute. We’ve been using multiple models on the platform and have been impressed with the stability and reliability we’ve seen so far, as well as the support we’ve received any time we’ve had an issue.” – Sidd Seethepalli, CTO & Founder, Vellum

“Databricks’ Foundation Model APIs product was extremely easy to set up and use right out of the box, making our RAG workflows a breeze. We’ve been excited by the performance, throughput, and pricing we’ve seen with this product, and love how much time it’s been able to save us!” – Ben Hills, CEO, HeyIris.AI

Query Models through a Unified Interface

Databricks Model Serving now offers a unified OpenAI-compatible API and SDK for easy querying of Foundation Models. You can also query models directly from SQL via AI Functions, simplifying AI integration into your analytics workflows. A standard interface makes experimentation and comparison easy. For example, you might start with a proprietary model and then switch to a fine-tuned open model for lower latency and cost, as demonstrated with Databricks’ AI-generated documentation.
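The benefit of a single request format can be sketched offline: because every endpoint accepts the same chat payload, comparing models amounts to sending one payload to several endpoint names. In this minimal sketch, `compare_endpoints`, `StubClient`, and the endpoint names are hypothetical; `client` is anything with a `predict(endpoint=..., inputs=...)` method, and a stub stands in for a real client so the code runs without a workspace.

```python
import time
from typing import Any, Dict, List, Protocol


class PredictClient(Protocol):
    """Anything exposing predict(endpoint=..., inputs=...), e.g. an MLflow deployments client."""

    def predict(self, endpoint: str, inputs: Dict[str, Any]) -> Dict[str, Any]: ...


def compare_endpoints(client: PredictClient, endpoints: List[str],
                      inputs: Dict[str, Any]) -> Dict[str, Dict[str, Any]]:
    """Send the same chat request to each endpoint; record the answer and wall-clock latency."""
    results = {}
    for name in endpoints:
        start = time.perf_counter()
        response = client.predict(endpoint=name, inputs=inputs)
        results[name] = {
            "answer": response["choices"][0]["message"]["content"],
            "latency_s": time.perf_counter() - start,
        }
    return results


class StubClient:
    """Offline stand-in that echoes the endpoint name instead of calling a model."""

    def predict(self, endpoint: str, inputs: Dict[str, Any]) -> Dict[str, Any]:
        return {"choices": [{"message": {"role": "assistant", "content": f"reply from {endpoint}"}}]}


if __name__ == "__main__":
    request = {"messages": [{"role": "user", "content": "Hello!"}], "max_tokens": 64}
    # Hypothetical endpoint names: an external proprietary model and a fine-tuned open model.
    for name, row in compare_endpoints(StubClient(), ["my-external-gpt", "my-finetuned-llama"], request).items():
        print(name, row["answer"])
```

Against a real workspace you would pass `mlflow.deployments.get_deploy_client("databricks")` instead of the stub; the comparison loop itself does not change, which is the point of a unified interface.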

```python
import mlflow.deployments

# Connect to the Databricks Model Serving deployment target.
client = mlflow.deployments.get_deploy_client("databricks")

inputs = {
    "messages": [
        {"role": "user", "content": "Hello!"},
        {"role": "assistant", "content": "Hello! How can I assist you today?"},
        {
            "role": "user",
            "content": (
                "List 3 reasons why you should train an AI model on "
                "domain specific data sets? No explanations required."
            ),
        },
    ],
    "max_tokens": 64,
    "temperature": 0,
}

# Query the Llama 2 70B chat endpoint.
response = client.predict(endpoint="databricks-llama-2-70b-chat", inputs=inputs)

print(response["choices"][0]["message"]["content"])
# "\n1. Improved accuracy\n2. Higher generalization\n3. Elevated relevance"
```

```sql
SELECT ai_query(
  'databricks-llama-2-70b-chat',
  'Describe Databricks SQL in 30 words.'
) AS chat
```

Govern and Monitor All Models

The new Databricks Model Serving UI and architecture allow all model endpoints, including externally hosted ones, to be managed in one place. This includes the ability to manage permissions, track usage limits, and monitor the quality of all types of models. For instance, admins can set up external models and grant access to teams and applications, allowing them to query models through a standard interface without exposing credentials. This approach democratizes access to powerful SaaS and open LLMs within an organization while providing the necessary guardrails.
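As an illustration of querying without exposing credentials, an external model endpoint can reference a stored secret rather than embedding an API key. The sketch below only builds the configuration dictionary. Its shape (`served_entities`, `external_model`, `provider`, `task`, `openai_config`, and the `{{secrets/<scope>/<key>}}` reference syntax) is our assumption based on the Databricks external models documentation and may change between versions; the function, model, scope, and key names are hypothetical.

```python
from typing import Any, Dict


def external_chat_endpoint_config(model_name: str, secret_scope: str,
                                  secret_key: str) -> Dict[str, Any]:
    """Build a hypothetical external-model config that points at a secret, not a raw API key."""
    # String concatenation avoids escaping the literal double braces in an f-string.
    secret_ref = "{{secrets/" + secret_scope + "/" + secret_key + "}}"
    return {
        "served_entities": [{
            "external_model": {
                "name": model_name,
                "provider": "openai",
                "task": "llm/v1/chat",
                "openai_config": {"openai_api_key": secret_ref},
            }
        }]
    }


if __name__ == "__main__":
    config = external_chat_endpoint_config("gpt-4", "team-llm-secrets", "openai_api_key")
    print(config["served_entities"][0]["external_model"]["openai_config"])
```

An admin could pass such a dictionary as the `config` argument of `create_endpoint(name=..., config=...)` on an MLflow deployments client and then grant teams query permission on the endpoint; callers use the endpoint name and never see the key itself.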

“Databricks Model Serving is accelerating our AI-driven projects by making it easy to securely access and manage multiple SaaS and open models, including those hosted on or outside Databricks. Its centralized approach simplifies security and cost management, allowing our data teams to focus more on innovation and less on administrative overhead.” – Greg Rokita, AVP, Technology at Edmunds.com

Securely Customize Models with Your Own Data

Built on a Data Intelligence Platform, Databricks Model Serving makes it easy to extend the power of foundation models using techniques such as retrieval augmented generation (RAG), parameter-efficient fine-tuning (PEFT), or standard fine-tuning. You can fine-tune foundation models with proprietary data and deploy them effortlessly on Model Serving. The newly released Databricks Vector Search integrates seamlessly with Model Serving, allowing you to generate up-to-date and contextually relevant responses.
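The retrieval step of RAG can be sketched in a few lines. This toy version ranks a handful of pre-embedded documents by cosine similarity and places the best match into a chat request; it is a stand-in for what a vector index such as Databricks Vector Search does, not that product’s API. The documents and the 3-dimensional vectors are invented for illustration; in practice the vectors would come from an embedding model such as bge-large-en-v1.5.

```python
import math
from typing import Any, Dict, List, Tuple


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(query_vec: List[float], docs: List[Tuple[str, List[float]]], k: int = 1) -> List[str]:
    """Return the text of the k documents most similar to the query vector."""
    ranked = sorted(docs, key=lambda doc: cosine(query_vec, doc[1]), reverse=True)
    return [text for text, _ in ranked[:k]]


def rag_request(question: str, context: List[str], max_tokens: int = 128) -> Dict[str, Any]:
    """Build a chat request whose system message carries the retrieved context."""
    system = "Answer using only this context:\n" + "\n".join(context)
    return {
        "messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": question},
        ],
        "max_tokens": max_tokens,
    }


if __name__ == "__main__":
    # Invented documents with made-up 3-d "embeddings".
    docs = [
        ("Refunds are processed within 5 business days.", [0.9, 0.1, 0.0]),
        ("Our office is closed on public holidays.", [0.0, 0.2, 0.9]),
    ]
    query_vec = [0.8, 0.2, 0.1]  # pretend embedding of the question below
    top = retrieve(query_vec, docs, k=1)
    print(rag_request("How long do refunds take?", top)["messages"][0]["content"])
```

Keeping retrieval separate from request building means the same chat payload can be sent to any chat endpoint, so swapping the underlying model does not change the retrieval code.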

“Using Databricks Model Serving, we quickly deployed a fine-tuned GenAI model for Stardog Voicebox, a question answering and data modeling tool that democratizes enterprise analytics and reduces cost for knowledge graphs. The ease of use, flexible deployment options, and LLM optimization provided by Databricks Model Serving have accelerated our deployment process, freeing our team to innovate rather than manage infrastructure.” – Evren Sirin, CTO and Co-founder at Stardog

Get Started Now with Databricks AI Playground

Visit the AI Playground now and start interacting with powerful foundation models immediately. With AI Playground, you can prompt models, compare them, and adjust settings such as the system prompt and inference parameters, all without needing programming skills.